195 Repositories

Python v-diffusion-jax Libraries

Source code for paper "Deep Diffusion Models for Robust Channel Estimation", TBA.

diffusion-channels Source code for paper "Deep Diffusion Models for Robust Channel Estimation". Generic flow: Use 'matlab/main.mat' to generate traini

Einshape: DSL-based reshaping library for JAX and other frameworks.

Einshape: DSL-based reshaping library for JAX and other frameworks. The jnp.einsum op provides a DSL-based unified interface to matmul and tensordot o

NDE: Climate Modeling with Neural Diffusion Equation, ICDM'21

Climate Modeling with Neural Diffusion Equation Introduction This is the repository of our accepted ICDM 2021 paper "Climate Modeling with Neural Diff

Conservative Q Learning for Offline Reinforcement Reinforcement Learning in JAX

CQL-JAX This repository implements Conservative Q Learning for Offline Reinforcement Reinforcement Learning in JAX (FLAX). Implementation is built on

A JAX-based research framework for writing differentiable numerical simulators with arbitrary discretizations

jaxdf - JAX-based Discretization Framework Overview | Example | Installation | Documentation ⚠️ This library is still in development. Breaking changes

v objective diffusion inference code for JAX.

v-diffusion-jax v objective diffusion inference code for JAX, by Katherine Crowson (@RiversHaveWings) and Chainbreakers AI (@jd_pressman). The models

Flaxformer: transformer architectures in JAX/Flax

Flaxformer: transformer architectures in JAX/Flax Flaxformer is a transformer library for primarily NLP and multimodal research at Google. It is used

McGill Physics Hackathon 2021: Reaction-Diffusion Models for the Generation of Biological Patterns

DiffuseAnimals: Reaction-Diffusion Models for the Generation of Biological Patterns Introduction Reaction-diffusion equations can be utilized in order

Geometric Algebra package for JAX

JAXGA - JAX Geometric Algebra GitHub | Docs JAXGA is a Geometric Algebra package on top of JAX. It can handle high dimensional algebras by storing onl

Map single-cell transcriptomes to copy number evolutionary trees.

Map single-cell transcriptomes to copy number evolutionary trees. Check out the tutorial for more information. Installation $ pip install scatrex SCA

Bayes-Newton—A Gaussian process library in JAX, with a unifying view of approximate Bayesian inference as variants of Newton's algorithm.

Bayes-Newton Bayes-Newton is a library for approximate inference in Gaussian processes (GPs) in JAX (with objax), built and actively maintained by Wil

A denoising diffusion probabilistic model synthesises galaxies that are qualitatively and physically indistinguishable from the real thing.

Realistic galaxy simulation via score-based generative models Official code for 'Realistic galaxy simulation via score-based generative models'. We us

Machine Learning with JAX Tutorials

The purpose of this repo is to make it easy to get started with JAX. It contains my "Machine Learning with JAX" series of tutorials (YouTube videos and Jupyter Notebooks) as well as the content I found useful while learning JAX.

A suite of benchmarks for CPU and GPU performance of the most popular high-performance libraries for Python :rocket:

A suite of benchmarks for CPU and GPU performance of the most popular high-performance libraries for Python :rocket:

A minimalist implementation of score-based diffusion model

sdeflow-light This is a minimalist codebase for training score-based diffusion models (supporting MNIST and CIFAR-10) used in the following paper "A V

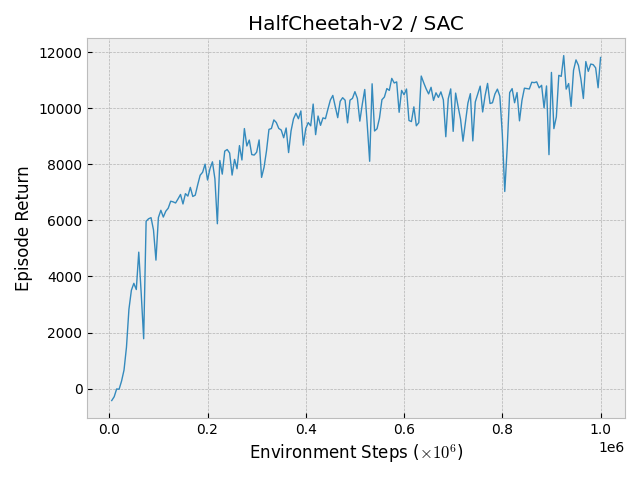

JAXDL: JAX (Flax) Deep Learning Library

JAXDL: JAX (Flax) Deep Learning Library Simple and clean JAX/Flax deep learning algorithm implementations: Soft-Actor-Critic (arXiv:1812.05905) Transf

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrai

An example showing how to use jax to train resnet50 on multi-node multi-GPU

jax-multi-gpu-resnet50-example This repo shows how to use jax for multi-node multi-GPU training. The example is adapted from the resnet50 example in d

Use Jax functions in Pytorch with DLPack

Use Jax functions in Pytorch with DLPack

Deep learning library for solving differential equations and more

DeepXDE Voting on whether we should have a Slack channel for discussion. DeepXDE is a library for scientific machine learning. Use DeepXDE if you need

Diffusion Normalizing Flow (DiffFlow) Neurips2021

Diffusion Normalizing Flow (DiffFlow) Reproduce setup environment The repo heavily depends on jam, a personal toolbox developed by Qsh.zh. The API may

QSIprep: Preprocessing and analysis of q-space images

QSIprep: Preprocessing and analysis of q-space images Full documentation at https://qsiprep.readthedocs.io About qsiprep configures pipelines for proc

Code for Multinomial Diffusion

Code for Multinomial Diffusion Abstract Generative flows and diffusion models have been predominantly trained on ordinal data, for example natural ima

A JAX implementation of Broaden Your Views for Self-Supervised Video Learning, or BraVe for short.

BraVe This is a JAX implementation of Broaden Your Views for Self-Supervised Video Learning, or BraVe for short. The model provided in this package wa

Reimplementation of the paper "Attention, Learn to Solve Routing Problems!" in jax/flax.

JAX + Attention Learn To Solve Routing Problems Reinplementation of the paper Attention, Learn to Solve Routing Problems! using Jax and Flax. Fully su

functorch is a prototype of JAX-like composable function transforms for PyTorch.

functorch is a prototype of JAX-like composable function transforms for PyTorch.

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Just playing with getting CLIP Guided Diffusion running locally, rather than having to use colab.

CLIP-Guided-Diffusion Just playing with getting CLIP Guided Diffusion running locally, rather than having to use colab. Original colab notebooks by Ka

A machine learning library for spiking neural networks. Supports training with both torch and jax pipelines, and deployment to neuromorphic hardware.

Rockpool Rockpool is a Python package for developing signal processing applications with spiking neural networks. Rockpool allows you to build network

ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models (ICCV 2021 Oral)

ILVR + ADM This is the implementation of ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models (ICCV 2021 Oral). This repository is h

Codebase for Diffusion Models Beat GANS on Image Synthesis.

Codebase for Diffusion Models Beat GANS on Image Synthesis.

A Pytree Module system for Deep Learning in JAX

Treex A Pytree-based Module system for Deep Learning in JAX Intuitive: Modules are simple Python objects that respect Object-Oriented semantics and sh

Unoffical implementation about Image Super-Resolution via Iterative Refinement by Pytorch

Image Super-Resolution via Iterative Refinement Paper | Project Brief This is a unoffical implementation about Image Super-Resolution via Iterative Re

Generate vibrant and detailed images using only text.

CLIP Guided Diffusion From RiversHaveWings. Generate vibrant and detailed images using only text. See captions and more generations in the Gallery See

Scenic: A Jax Library for Computer Vision and Beyond

Scenic Scenic is a codebase with a focus on research around attention-based models for computer vision. Scenic has been successfully used to develop c

Callable PyTrees and filtered JIT/grad transformations = neural networks in JAX.

Equinox Callable PyTrees and filtered JIT/grad transformations = neural networks in JAX Equinox brings more power to your model building in JAX. Repr

NeuralCompression is a Python repository dedicated to research of neural networks that compress data

NeuralCompression is a Python repository dedicated to research of neural networks that compress data. The repository includes tools such as JAX-based entropy coders, image compression models, video compression models, and metrics for image and video evaluation.

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Implementation of experiments in the paper Clockwork Variational Autoencoders (project website) using JAX and Flax

Clockwork VAEs in JAX/Flax Implementation of experiments in the paper Clockwork Variational Autoencoders (project website) using JAX and Flax, ported

RoBERTa Marathi Language model trained from scratch during huggingface 🤗 x flax community week

RoBERTa base model for Marathi Language (मराठी भाषा) Pretrained model on Marathi language using a masked language modeling (MLM) objective. RoBERTa wa

PIX is an image processing library in JAX, for JAX.

PIX PIX is an image processing library in JAX, for JAX. Overview JAX is a library resulting from the union of Autograd and XLA for high-performance ma

Hardware accelerated, batchable and differentiable optimizers in JAX.

JAXopt Installation | Examples | References Hardware accelerated (GPU/TPU), batchable and differentiable optimizers in JAX. Installation JAXopt can be

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators. It's also a suite of learning algorithms to train agents to operate in these environments (PPO, SAC, evolutionary strategy, and direct trajectory optimization are implemented).

Pytorch implementation of "Grad-TTS: A Diffusion Probabilistic Model for Text-to-Speech"

GradTTS Unofficial Pytorch implementation of "Grad-TTS: A Diffusion Probabilistic Model for Text-to-Speech" (arxiv) About this repo This is an unoffic

NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling @ INTERSPEECH 2021 Accepted

NU-Wave — Official PyTorch Implementation NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling Junhyeok Lee, Seungu Han @ MINDsLab Inc

Denoising Diffusion Probabilistic Models

Denoising Diffusion Probabilistic Models This repo contains code for DDPM training. Based on Denoising Diffusion Probabilistic Models, Improved Denois

Shared code for training sentence embeddings with Flax / JAX

flax-sentence-embeddings This repository will be used to share code for the Flax / JAX community event to train sentence embeddings on 1B+ training pa

Implementation and replication of ProGen, Language Modeling for Protein Generation, in Jax

ProGen - (wip) Implementation and replication of ProGen, Language Modeling for Protein Generation, in Pytorch and Jax (the weights will be made easily

Implementation of FitVid video prediction model in JAX/Flax.

FitVid Video Prediction Model Implementation of FitVid video prediction model in JAX/Flax. If you find this code useful, please cite it in your paper:

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators. It's also a suite of learning algorithms to train agents to operate in these environments (PPO, SAC, evolutionary strategy, and direct trajectory optimization are implemented).

Code for our TKDE paper "Understanding WeChat User Preferences and “Wow” Diffusion"

wechat-wow-analysis Understanding WeChat User Preferences and “Wow” Diffusion. Fanjin Zhang, Jie Tang, Xueyi Liu, Zhenyu Hou, Yuxiao Dong, Jing Zhang,

Continuous Diffusion Graph Neural Network

We present Graph Neural Diffusion (GRAND) that approaches deep learning on graphs as a continuous diffusion process and treats Graph Neural Networks (GNNs) as discretisations of an underlying PDE.

Python code for "Machine learning: a probabilistic perspective" (2nd edition)

Python code for "Machine learning: a probabilistic perspective" (2nd edition)

ML Optimizers from scratch using JAX

Toy implementations of some popular ML optimizers using Python/JAX

Aggragrating Nested Transformer Official Jax Implementation

NesT is a simple method, which aggragrates nested local transformers on image blocks. The idea makes vision transformers attain better accuracy, data efficiency, and convergence on the ImageNet benchmark. NesT can be scaled to small datasets to match convnet accuracy.

A denoising diffusion probabilistic model (DDPM) tailored for conditional generation of protein distograms

Denoising Diffusion Probabilistic Model for Proteins Implementation of Denoising Diffusion Probabilistic Model in Pytorch. It is a new approach to gen

JAX code for the paper "Control-Oriented Model-Based Reinforcement Learning with Implicit Differentiation"

Optimal Model Design for Reinforcement Learning This repository contains JAX code for the paper Control-Oriented Model-Based Reinforcement Learning wi

Official PyTorch implementation for FastDPM, a fast sampling algorithm for diffusion probabilistic models

Official PyTorch implementation for "On Fast Sampling of Diffusion Probabilistic Models". FastDPM generation on CIFAR-10, CelebA, and LSUN datasets. S

JAX + dataclasses

jax_dataclasses jax_dataclasses provides a wrapper around dataclasses.dataclass for use in JAX, which enables automatic support for: Pytree registrati

Symbolic Parallel Adaptive Importance Sampling for Probabilistic Program Analysis in JAX

SYMPAIS: Symbolic Parallel Adaptive Importance Sampling for Probabilistic Program Analysis Overview | Installation | Documentation | Examples | Notebo

Pytorch Implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension)

DiffSinger - PyTorch Implementation PyTorch implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension). Status

jaxfg - Factor graph-based nonlinear optimization library for JAX.

Factor graphs + nonlinear optimization in JAX

NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling

NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling For Official repo of NU-Wave: A Diffusion Probabilistic Model for Neural Audio Up

Local Attention - Flax module for Jax

Local Attention - Flax Autoregressive Local Attention - Flax module for Jax Install $ pip install local-attention-flax Usage from jax import random fr

A GPT, made only of MLPs, in Jax

MLP GPT - Jax (wip) A GPT, made only of MLPs, in Jax. The specific MLP to be used are gMLPs with the Spatial Gating Units. Working Pytorch implementat

JMP is a Mixed Precision library for JAX.

Mixed precision training [0] is a technique that mixes the use of full and half precision floating point numbers during training to reduce the memory bandwidth requirements and improve the computational efficiency of a given model.

Contains code for the paper "Vision Transformers are Robust Learners".

Vision Transformers are Robust Learners This repository contains the code for the paper Vision Transformers are Robust Learners by Sayak Paul* and Pin

Standalone pre-training recipe with JAX+Flax

Sabertooth Sabertooth is standalone pre-training recipe based on JAX+Flax, with data pipelines implemented in Rust. It runs on CPU, GPU, and/or TPU, b

Pretrained models for Jax/Flax: StyleGAN2, GPT2, VGG, ResNet.

Pretrained models for Jax/Flax: StyleGAN2, GPT2, VGG, ResNet.

This is the codebase for Diffusion Models Beat GANS on Image Synthesis.

This is the codebase for Diffusion Models Beat GANS on Image Synthesis.

Bayesian optimization in JAX

Bayesian optimization in JAX

Learning Energy-Based Models by Diffusion Recovery Likelihood

Learning Energy-Based Models by Diffusion Recovery Likelihood Ruiqi Gao, Yang Song, Ben Poole, Ying Nian Wu, Diederik P. Kingma Paper: https://arxiv.o

Plug-n-Play Reinforcement Learning in Python with OpenAI Gym and JAX

coax is built on top of JAX, but it doesn't have an explicit dependence on the jax python package. The reason is that your version of jaxlib will depend on your CUDA version.

Newt - a Gaussian process library in JAX.

Newt __ \/_ (' \`\ _\, \ \\/ /`\/\ \\ \ \\

Functional tensors for probabilistic programming

Funsor Funsor is a tensor-like library for functions and distributions. See Functional tensors for probabilistic programming for a system description.

Objax Apache-2Objax (🥉19 · ⭐ 580) - Objax is a machine learning framework that provides an Object.. Apache-2 jax

Objax Tutorials | Install | Documentation | Philosophy This is not an officially supported Google product. Objax is an open source machine learning fr

Model parallel transformers in Jax and Haiku

Mesh Transformer Jax A haiku library using the new(ly documented) xmap operator in Jax for model parallelism of transformers. See enwik8_example.py fo

Code for "Infinitely Deep Bayesian Neural Networks with Stochastic Differential Equations"

Infinitely Deep Bayesian Neural Networks with SDEs This library contains JAX and Pytorch implementations of neural ODEs and Bayesian layers for stocha

Very deep VAEs in JAX/Flax

Very Deep VAEs in JAX/Flax Implementation of the experiments in the paper Very Deep VAEs Generalize Autoregressive Models and Can Outperform Them on I

Turning SymPy expressions into JAX functions

sympy2jax Turn SymPy expressions into parametrized, differentiable, vectorizable, JAX functions. All SymPy floats become trainable input parameters. S

Python script for Linear, Non-Linear Convection, Burger’s & Poisson Equation in 1D & 2D, 1D Diffusion Equation using Standard Wall Function, 2D Heat Conduction Convection equation with Dirichlet & Neumann BC, full Navier-Stokes Equation coupled with Poisson equation for Cavity and Channel flow in 2D using Finite Difference Method & Finite Volume Method.

Navier-Stokes-numerical-solution-using-Python- Python script for Linear, Non-Linear Convection, Burger’s & Poisson Equation in 1D & 2D, 1D D

3D Vision functions with end-to-end support for deep learning developers, written in Ivy.

Ivy vision focuses predominantly on 3D vision, with functions for camera geometry, image projections, co-ordinate frame transformations, forward warping, inverse warping, optical flow, depth triangulation, voxel grids, point clouds, signed distance functions, and others. Check out the docs for more info!

Release for Improved Denoising Diffusion Probabilistic Models

improved-diffusion This is the codebase for Improved Denoising Diffusion Probabilistic Models. Usage This section of the README walks through how to t

Ivy is a templated deep learning framework which maximizes the portability of deep learning codebases.

Ivy is a templated deep learning framework which maximizes the portability of deep learning codebases. Ivy wraps the functional APIs of existing frameworks. Framework-agnostic functions, libraries and layers can then be written using Ivy, with simultaneous support for all frameworks. Ivy currently supports Jax, TensorFlow, PyTorch, MXNet and Numpy. Check out the docs for more info!

Extending JAX with custom C++ and CUDA code

Extending JAX with custom C++ and CUDA code This repository is meant as a tutorial demonstrating the infrastructure required to provide custom ops in

Code for "Diffusion is All You Need for Learning on Surfaces"

Source code for "Diffusion is All You Need for Learning on Surfaces", by Nicholas Sharp Souhaib Attaiki Keenan Crane Maks Ovsjanikov NOTE: the linked

Elegy is a framework-agnostic Trainer interface for the Jax ecosystem.

Elegy Elegy is a framework-agnostic Trainer interface for the Jax ecosystem. Main Features Easy-to-use: Elegy provides a Keras-like high-level API tha

JAX-based neural network library

Haiku: Sonnet for JAX Overview | Why Haiku? | Quickstart | Installation | Examples | User manual | Documentation | Citing Haiku What is Haiku? Haiku i

Fast and Easy Infinite Neural Networks in Python

Neural Tangents ICLR 2020 Video | Paper | Quickstart | Install guide | Reference docs | Release notes Overview Neural Tangents is a high-level neural

Deep learning operations reinvented (for pytorch, tensorflow, jax and others)

This video in better quality. einops Flexible and powerful tensor operations for readable and reliable code. Supports numpy, pytorch, tensorflow, and

Flax is a neural network ecosystem for JAX that is designed for flexibility.

Flax: A neural network library and ecosystem for JAX designed for flexibility Overview | Quick install | What does Flax look like? | Documentation See

🔮 A refreshing functional take on deep learning, compatible with your favorite libraries

Thinc: A refreshing functional take on deep learning, compatible with your favorite libraries From the makers of spaCy, Prodigy and FastAPI Thinc is a

Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more

JAX: Autograd and XLA Quickstart | Transformations | Install guide | Neural net libraries | Change logs | Reference docs | Code search News: JAX tops

FedJAX is a library for developing custom Federated Learning (FL) algorithms in JAX.

FedJAX: Federated learning with JAX What is FedJAX? FedJAX is a library for developing custom Federated Learning (FL) algorithms in JAX. FedJAX priori

Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more

JAX: Autograd and XLA Quickstart | Transformations | Install guide | Neural net libraries | Change logs | Reference docs | Code search News: JAX tops