1526 Repositories

Python vision-transformer-tf Libraries

Keras Realtime Multi-Person Pose Estimation - Keras version of Realtime Multi-Person Pose Estimation project

This repository has become incompatible with the latest and recommended version of Tensorflow 2.0 Instead of refactoring this code painfully, I create

Transformer - A TensorFlow Implementation of the Transformer: Attention Is All You Need

[UPDATED] A TensorFlow Implementation of Attention Is All You Need When I opened this repository in 2017, there was no official code yet. I tried to i

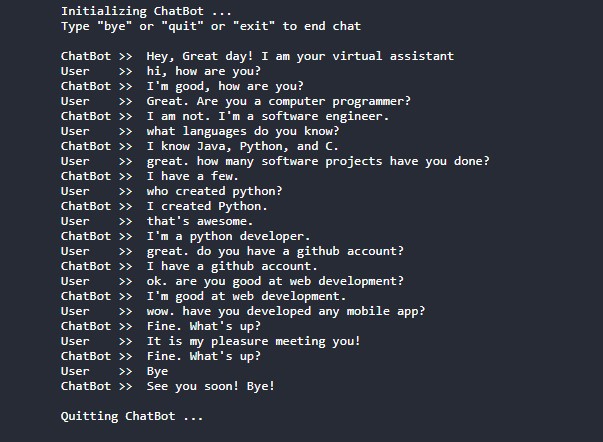

Conversational-AI-ChatBot - Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users!

Conversational AI ChatBot Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users! In this project? Thi

Omdena-abuja-anpd - Automatic Number Plate Detection for the security of lives and properties using Computer Vision.

Omdena-abuja-anpd - Automatic Number Plate Detection for the security of lives and properties using Computer Vision.

SPT_LSA_ViT - Implementation for Visual Transformer for Small-size Datasets

Vision Transformer for Small-Size Datasets Seung Hoon Lee and Seunghyun Lee and Byung Cheol Song | Paper Inha University Abstract Recently, the Vision

Image-popularity-score - A novel deep regression method for image scoring.

Image-popularity-score - A novel deep regression method for image scoring.

“Robust Lightweight Facial Expression Recognition Network with Label Distribution Training”, AAAI 2021.

EfficientFace Zengqun Zhao, Qingshan Liu, Feng Zhou. "Robust Lightweight Facial Expression Recognition Network with Label Distribution Training". AAAI

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyTorch Implementation of SSTNs for hyperspectral image classifications from the IEEE T-GRS paper "Spectral-Spatial Transformer Network for Hyperspectral Image Classification: A FAS Framework."

PyTorch Implementation of SSTN for Hyperspectral Image Classification Paper links: SSTN published on IEEE T-GRS. Also, you can directly find the imple

A PyTorch-based Semi-Supervised Learning (SSL) Codebase for Pixel-wise (Pixel) Vision Tasks

PixelSSL is a PyTorch-based semi-supervised learning (SSL) codebase for pixel-wise (Pixel) vision tasks. The purpose of this project is to promote the

Semi-Supervised Semantic Segmentation with Cross-Consistency Training (CCT)

Semi-Supervised Semantic Segmentation with Cross-Consistency Training (CCT) Paper, Project Page This repo contains the official implementation of CVPR

Adversarial Learning for Semi-supervised Semantic Segmentation, BMVC 2018

Adversarial Learning for Semi-supervised Semantic Segmentation This repo is the pytorch implementation of the following paper: Adversarial Learning fo

Weakly- and Semi-Supervised Panoptic Segmentation (ECCV18)

Weakly- and Semi-Supervised Panoptic Segmentation by Qizhu Li*, Anurag Arnab*, Philip H.S. Torr This repository demonstrates the weakly supervised gro

Weakly-supervised object detection.

Wetectron Wetectron is a software system that implements state-of-the-art weakly-supervised object detection algorithms. Project CVPR'20, ECCV'20 | Pa

The official pytorch implementation of ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias

ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias Introduction | Updates | Usage | Results&Pretrained Models | Statement | Intr

Official implementation of CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21

CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21 For more information, check out the paper on [arXiv]. Training with different

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation Where we are ? 12.27 目前和原论文仍有1%左右得差距,但已经力压很多SOTA了 ckpt__448_epoch_25.pth mIoU

Variable Transformer Calculator

✠ VASCO - VAriable tranSformer CalculatOr Software que calcula informações de transformadores feita para a matéria de "Conversão Eletromecânica de Ene

🔀 Visual Room Rearrangement

AI2-THOR Rearrangement Challenge Welcome to the 2021 AI2-THOR Rearrangement Challenge hosted at the CVPR'21 Embodied-AI Workshop. The goal of this cha

Align and Prompt: Video-and-Language Pre-training with Entity Prompts

ALPRO Align and Prompt: Video-and-Language Pre-training with Entity Prompts [Paper] Dongxu Li, Junnan Li, Hongdong Li, Juan Carlos Niebles, Steven C.H

Official release of MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis of Pancreatic Cancer axriv: http://arxiv.org/abs/2112.13513

MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis This is the official page of the MSHT with its experimental script and records. We de

For storing the complete exploration of Visual Question Answering for our B.Tech Project

Multi-Image vqa @authors: Akhilesh, Janhavi, Harsh Paper summary, Ideas tried and their corresponding results: on wiki Other discussions: on discussio

Geometry-Aware Learning of Maps for Camera Localization (CVPR2018)

Geometry-Aware Learning of Maps for Camera Localization This is the PyTorch implementation of our CVPR 2018 paper "Geometry-Aware Learning of Maps for

Codebase for ECCV18 "The Sound of Pixels"

Sound-of-Pixels Codebase for ECCV18 "The Sound of Pixels". *This repository is under construction, but the core parts are already there. Environment T

Unofficial TensorFlow implementation of the Keyword Spotting Transformer model

Keyword Spotting Transformer This is the unofficial TensorFlow implementation of the Keyword Spotting Transformer model. This model is used to train o

Official repository of the paper Learning to Regress 3D Face Shape and Expression from an Image without 3D Supervision

Official repository of the paper Learning to Regress 3D Face Shape and Expression from an Image without 3D Supervision

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

PyTorch Implementation for Deep Metric Learning Pipelines

Easily Extendable Basic Deep Metric Learning Pipeline Karsten Roth ([email protected]), Biagio Brattoli ([email protected]) When using thi

The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch.

News December 27: v1.1.0 New loss functions: CentroidTripletLoss and VICRegLoss Mean reciprocal rank + per-class accuracies See the release notes Than

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

A curated list of the latest breakthroughs in AI (in 2021) by release date with a clear video explanation, link to a more in-depth article, and code.

2021: A Year Full of Amazing AI papers- A Review 📌 A curated list of the latest breakthroughs in AI by release date with a clear video explanation, l

Implementation of Memory-Efficient Neural Networks with Multi-Level Generation, ICCV 2021

Memory-Efficient Multi-Level In-Situ Generation (MLG) By Jiaqi Gu, Hanqing Zhu, Chenghao Feng, Mingjie Liu, Zixuan Jiang, Ray T. Chen and David Z. Pan

Machine Learning Course Project, IMDB movie review sentiment analysis by lstm, cnn, and transformer

IMDB Sentiment Analysis This is the final project of Machine Learning Courses in Huazhong University of Science and Technology, School of Artificial I

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size.

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size. The hub data layout enables rapid transformations and streaming of data while training models at scale. Hub is used by Google, Waymo, Red Cross, Oxford University, and Omdena.

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

A website which allows you to play with the GPT-2 transformer

transformers A website which allows you to play with the GPT-2 model Built with ❤️ by raphtlw Table of contents Model Setup About Contributors Model T

Pytorch based library to rank predicted bounding boxes using text/image user's prompts.

pytorch_clip_bbox: Implementation of the CLIP guided bbox ranking for Object Detection. Pytorch based library to rank predicted bounding boxes using t

Tensorflow implementation for "Improved Transformer for High-Resolution GANs" (NeurIPS 2021).

HiT-GAN Official TensorFlow Implementation HiT-GAN presents a Transformer-based generator that is trained based on Generative Adversarial Networks (GA

High accurate tool for automatic faces detection with landmarks

faces_detanator High accurate tool for automatic faces detection with landmarks. The library is based on public detectors with high accuracy (TinaFace

Seeks to remove text from an image in a convincing way.

Text-Removal This is a Computer Vision project that seeks to successfully remove text from an image by covering the text areas in a convincing way. He

BisQue is a web-based platform designed to provide researchers with organizational and quantitative analysis tools for 5D image data. Users can extend BisQue by implementing containerized ML workflows.

Overview BisQue is a web-based platform specifically designed to provide researchers with organizational and quantitative analysis tools for up to 5D

Group Activity Recognition with Clustered Spatial Temporal Transformer

GroupFormer Group Activity Recognition with Clustered Spatial-TemporalTransformer Backbone Style Action Acc Activity Acc Config Download Inv3+flow+pos

Source code of SIGIR2021 Paper 'One Chatbot Per Person: Creating Personalized Chatbots based on Implicit Profiles'

DHAP Source code of SIGIR2021 Long Paper: One Chatbot Per Person: Creating Personalized Chatbots based on Implicit User Profiles . Preinstallation Fir

This is a repository to learn and get more computer vision skills, make robotics projects integrating the computer vision as a perception tool and create a lot of awesome advanced controllers for the robots of the future.

This is a repository to learn and get more computer vision skills, make robotics projects integrating the computer vision as a perception tool and create a lot of awesome advanced controllers for the robots of the future.

Codes for the paper Contrast and Mix: Temporal Contrastive Video Domain Adaptation with Background Mixing

Contrast and Mix (CoMix) The repository contains the codes for the paper Contrast and Mix: Temporal Contrastive Video Domain Adaptation with Backgroun

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

Final project code: Implementing BicycleGAN, for CIS680 FA21 at University of Pennsylvania

680 Final Project: BicycleGAN Haoran Tang Instructions 1. Training To train the network, please run train.py. Change hyper-parameters and folder paths

Final project code: Implementing MAE with downscaled encoders and datasets, for ESE546 FA21 at University of Pennsylvania

546 Final Project: Masked Autoencoder Haoran Tang, Qirui Wu 1. Training To train the network, please run mae_pretraining.py. Please modify folder path

Official Implementation of VAT

Semantic correspondence Few-shot segmentation Cost Aggregation Is All You Need for Few-Shot Segmentation For more information, check out project [Proj

Turn any live video stream or locally stored video into a dataset of interesting samples for ML training, or any other type of analysis.

Sieve Video Data Collection Example Find samples that are interesting within hours of raw video, for free and completely automatically using Sieve API

Pytorch implementation for "Open Compound Domain Adaptation" (CVPR 2020 ORAL)

Open Compound Domain Adaptation [Project] [Paper] [Demo] [Blog] Overview Open Compound Domain Adaptation (OCDA) is the author's re-implementation of t

Curriculum Domain Adaptation for Semantic Segmentation of Urban Scenes, ICCV 2017

AdaptationSeg This is the Python reference implementation of AdaptionSeg proposed in "Curriculum Domain Adaptation for Semantic Segmentation of Urban

This repository contains a CBIR system that uses swin transformer to extract image's feature.

Swin-transformer based CBIR This repository contains a CBIR(content-based image retrieval) system. Here we use Swin-transformer to extract query image

Keras implementation of PersonLab for Multi-Person Pose Estimation and Instance Segmentation.

PersonLab This is a Keras implementation of PersonLab for Multi-Person Pose Estimation and Instance Segmentation. The model predicts heatmaps and vari

StyleSwin: Transformer-based GAN for High-resolution Image Generation

StyleSwin This repo is the official implementation of "StyleSwin: Transformer-based GAN for High-resolution Image Generation". By Bowen Zhang, Shuyang

Official Pytorch implementation of the paper: "Locally Shifted Attention With Early Global Integration"

Locally-Shifted-Attention-With-Early-Global-Integration Pretrained models You can download all the models from here. Training Imagenet python -m torch

Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021).

STAR-pytorch Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021). CVF (pdf) STAR-DC

This project aims to explore the deployment of Swin-Transformer based on TensorRT, including the test results of FP16 and INT8.

Swin Transformer This project aims to explore the deployment of SwinTransformer based on TensorRT, including the test results of FP16 and INT8. Introd

OSLO: Open Source framework for Large-scale transformer Optimization

O S L O Open Source framework for Large-scale transformer Optimization What's New: December 21, 2021 Released OSLO 1.0. What is OSLO about? OSLO is a

TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning

TransZero++ This repository contains the testing code for the paper "TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning" submitted

PyTorch implementation of DeepUME: Learning the Universal Manifold Embedding for Robust Point Cloud Registration (BMVC 2021)

DeepUME: Learning the Universal Manifold Embedding for Robust Point Cloud Registration [video] [paper] [supplementary] [data] [thesis] Introduction De

MISSFormer: An Effective Medical Image Segmentation Transformer

MISSFormer Code for paper "MISSFormer: An Effective Medical Image Segmentation Transformer". Please read our preprint at the following link: paper_add

computer vision, image processing and machine learning on the web browser or node.

Image processing and Machine learning labs computer vision, image processing and machine learning on the web browser or node note Fast Fourier Trans

Using computer vision method to recognize and calcutate the features of the architecture.

building-feature-recognition In this repository, we accomplished building feature recognition using traditional/dl-assisted computer vision method. Th

TensorFlow implementation of "TokenLearner: What Can 8 Learned Tokens Do for Images and Videos?"

TokenLearner: What Can 8 Learned Tokens Do for Images and Videos? Source: Improving Vision Transformer Efficiency and Accuracy by Learning to Tokenize

Simple and ready-to-use tutorials for TensorFlow

TensorFlow World To support maintaining and upgrading this project, please kindly consider Sponsoring the project developer. Any level of support is a

CPPE - 5 (Medical Personal Protective Equipment) is a new challenging object detection dataset

CPPE - 5 CPPE - 5 (Medical Personal Protective Equipment) is a new challenging dataset with the goal to allow the study of subordinate categorization

Implement object segmentation on images using HOG algorithm proposed in CVPR 2005

HOG Algorithm Implementation Description HOG (Histograms of Oriented Gradients) Algorithm is an algorithm aiming to realize object segmentation (edge

A collection of resources on neural rendering.

awesome neural rendering A collection of resources on neural rendering. Contributing If you think I have missed out on something (or) have any suggest

![[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation](https://github.com/Ha0Tang/SelectionGAN/raw/master/imgs/motivation.jpg)

[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation

SelectionGAN for Guided Image-to-Image Translation CVPR Paper | Extended Paper | Guided-I2I-Translation-Papers Citation If you use this code for your

Adversarial Texture Optimization from RGB-D Scans (CVPR 2020).

AdversarialTexture Adversarial Texture Optimization from RGB-D Scans (CVPR 2020). Scanning Data Download Please refer to data directory for details. B

This repository contains the source codes for the paper AtlasNet V2 - Learning Elementary Structures.

AtlasNet V2 - Learning Elementary Structures This work was build upon Thibault Groueix's AtlasNet and 3D-CODED projects. (you might want to have a loo

AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation

AtlasNet [Project Page] [Paper] [Talk] AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation Thibault Groueix, Matthew Fisher, Vladimir

A simple baseline for 3d human pose estimation in PyTorch.

3d_pose_baseline_pytorch A PyTorch implementation of a simple baseline for 3d human pose estimation. You can check the original Tensorflow implementat

A simple baseline for 3d human pose estimation in tensorflow. Presented at ICCV 17.

3d-pose-baseline This is the code for the paper Julieta Martinez, Rayat Hossain, Javier Romero, James J. Little. A simple yet effective baseline for 3

Computer vision - fun segmentation experience using classic and deep tools :)

Computer_Vision_Segmentation_Fun Segmentation of Images and Video. Tools: pytorch Models: Classic model - GrabCut Deep model - Deeplabv3_resnet101 Flo

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Automatically remove the mosaics in images and videos, or add mosaics to them.

Automatically remove the mosaics in images and videos, or add mosaics to them.

Mixed Transformer UNet for Medical Image Segmentation

MT-UNet Update 2021/11/19 Thank you for your interest in our work. We have uploaded the code of our MTUNet to help peers conduct further research on i

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t

Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources.

Illumination_Decomposition Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources. This code implements the

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

[ICCV2021] TransReID: Transformer-based Object Re-Identification [pdf] The official repository for TransReID: Transformer-based Object Re-Identificati

This repository builds a basic vision transformer from scratch so that one beginner can understand the theory of vision transformer.

vision-transformer-from-scratch This repository includes several kinds of vision transformers from scratch so that one beginner can understand the the

Collection of common code that's shared among different research projects in FAIR computer vision team.

fvcore fvcore is a light-weight core library that provides the most common and essential functionality shared in various computer vision frameworks de

A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization

University1652-Baseline [Paper] [Slide] [Explore Drone-view Data] [Explore Satellite-view Data] [Explore Street-view Data] [Video Sample] [中文介绍] This

Official PyTorch implementation of SegFormer

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

FastFormers - highly efficient transformer models for NLU

FastFormers FastFormers provides a set of recipes and methods to achieve highly efficient inference of Transformer models for Natural Language Underst

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

The code for two papers: Feedback Transformer and Expire-Span.

transformer-sequential This repo contains the code for two papers: Feedback Transformer Expire-Span The training code is structured for long sequentia

Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing

Introduction Funnel-Transformer is a new self-attention model that gradually compresses the sequence of hidden states to a shorter one and hence reduc

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz