1356 Repositories

Python vision-transformers Libraries

![[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation](https://github.com/Ha0Tang/SelectionGAN/raw/master/imgs/motivation.jpg)

[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation

SelectionGAN for Guided Image-to-Image Translation CVPR Paper | Extended Paper | Guided-I2I-Translation-Papers Citation If you use this code for your

Adversarial Texture Optimization from RGB-D Scans (CVPR 2020).

AdversarialTexture Adversarial Texture Optimization from RGB-D Scans (CVPR 2020). Scanning Data Download Please refer to data directory for details. B

This repository contains the source codes for the paper AtlasNet V2 - Learning Elementary Structures.

AtlasNet V2 - Learning Elementary Structures This work was build upon Thibault Groueix's AtlasNet and 3D-CODED projects. (you might want to have a loo

AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation

AtlasNet [Project Page] [Paper] [Talk] AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation Thibault Groueix, Matthew Fisher, Vladimir

A simple baseline for 3d human pose estimation in PyTorch.

3d_pose_baseline_pytorch A PyTorch implementation of a simple baseline for 3d human pose estimation. You can check the original Tensorflow implementat

A simple baseline for 3d human pose estimation in tensorflow. Presented at ICCV 17.

3d-pose-baseline This is the code for the paper Julieta Martinez, Rayat Hossain, Javier Romero, James J. Little. A simple yet effective baseline for 3

Computer vision - fun segmentation experience using classic and deep tools :)

Computer_Vision_Segmentation_Fun Segmentation of Images and Video. Tools: pytorch Models: Classic model - GrabCut Deep model - Deeplabv3_resnet101 Flo

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Yuno is context based search engine for anime.

Yuno yuno.mp4 Table of Contents Introduction Power Of Yuno Try Yuno How Yuno was created? References Introduction Yuno is a context based search engin

Automatically remove the mosaics in images and videos, or add mosaics to them.

Automatically remove the mosaics in images and videos, or add mosaics to them.

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t

Code for the ICCV'21 paper "Context-aware Scene Graph Generation with Seq2Seq Transformers"

ICCV'21 Context-aware Scene Graph Generation with Seq2Seq Transformers Authors: Yichao Lu*, Himanshu Rai*, Cheng Chang*, Boris Knyazev†, Guangwei Yu,

Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources.

Illumination_Decomposition Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources. This code implements the

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

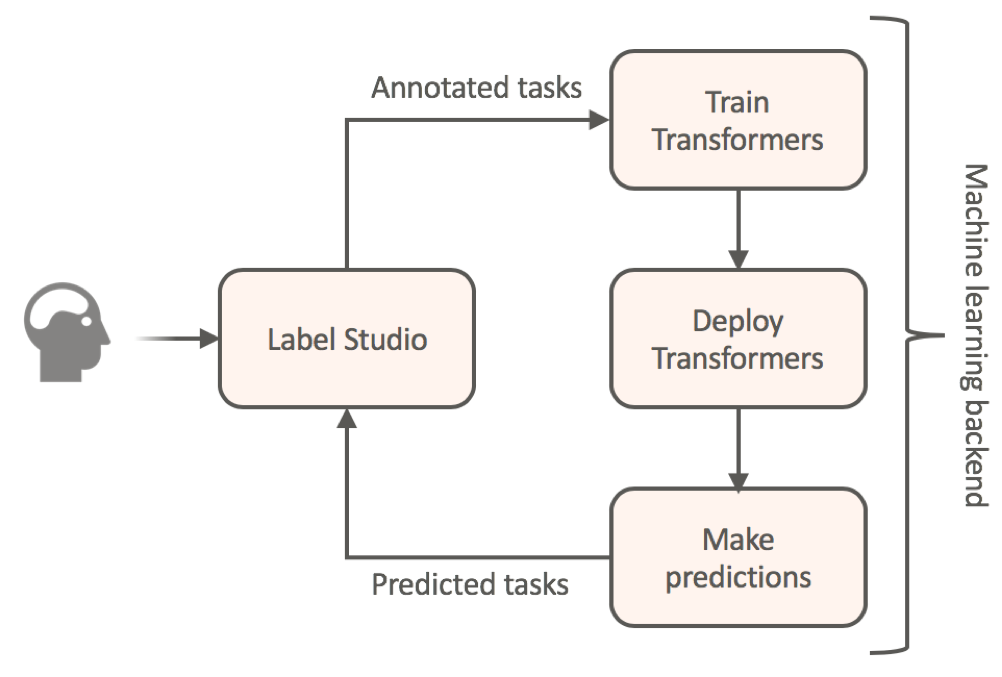

Label data using HuggingFace's transformers and automatically get a prediction service

Label Studio for Hugging Face's Transformers Website • Docs • Twitter • Join Slack Community Transfer learning for NLP models by annotating your textu

This repository builds a basic vision transformer from scratch so that one beginner can understand the theory of vision transformer.

vision-transformer-from-scratch This repository includes several kinds of vision transformers from scratch so that one beginner can understand the the

Collection of common code that's shared among different research projects in FAIR computer vision team.

fvcore fvcore is a light-weight core library that provides the most common and essential functionality shared in various computer vision frameworks de

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

GANformer: Generative Adversarial Transformers

GANformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick Update: We released the new GANformer2 paper! *I wish to thank Ch

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

Code for the paper "VisualBERT: A Simple and Performant Baseline for Vision and Language"

This repository contains code for the following two papers: VisualBERT: A Simple and Performant Baseline for Vision and Language (arxiv) with a short

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Examples of using sparse attention, as in "Generating Long Sequences with Sparse Transformers"

Status: Archive (code is provided as-is, no updates expected) Update August 2020: For an example repository that achieves state-of-the-art modeling pe

Multi Task Vision and Language

12-in-1: Multi-Task Vision and Language Representation Learning Please cite the following if you use this code. Code and pre-trained models for 12-in-

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Pytorch implementation of the paper "Class-Balanced Loss Based on Effective Number of Samples"

Class-balanced-loss-pytorch Pytorch implementation of the paper Class-Balanced Loss Based on Effective Number of Samples presented at CVPR'19. Yin Cui

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning. CVPR 2018

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning Tensorflow code and models for the paper: Large Scale Fine-Grained Categ

Learn the Deep Learning for Computer Vision in three steps: theory from base to SotA, code in PyTorch, and space-repetition with Anki

DeepCourse: Deep Learning for Computer Vision arthurdouillard.com/deepcourse/ This is a course I'm giving to the French engineering school EPITA each

💃 VALSE: A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena

💃 VALSE: A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena.

Text Classification in Turkish Texts with Bert

You can watch the details of the project on my youtube channel Project Interface Project Second Interface Goal= Correctly guessing the classification

Powerful unsupervised domain adaptation method for dense retrieval.

Powerful unsupervised domain adaptation method for dense retrieval

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

keytotext Idea is to build a model which will take keywords as inputs and generate sentences as outputs. Potential use case can include: Marketing Sea

MinkLoc3D-SI: 3D LiDAR place recognition with sparse convolutions,spherical coordinates, and intensity

MinkLoc3D-SI: 3D LiDAR place recognition with sparse convolutions,spherical coordinates, and intensity Introduction The 3D LiDAR place recognition aim

A repository with exploration into using transformers to predict DNA ↔ transcription factor binding

Transcription Factor binding predictions with Attention and Transformers A repository with exploration into using transformers to predict DNA ↔ transc

BOVText: A Large-Scale, Multidimensional Multilingual Dataset for Video Text Spotting

BOVText: A Large-Scale, Bilingual Open World Dataset for Video Text Spotting Updated on December 10, 2021 (Release all dataset(2021 videos)) Updated o

Code basis for the paper "Camera Condition Monitoring and Readjustment by means of Noise and Blur" (2021)

Camera Condition Monitoring and Readjustment by means of Noise and Blur This repository contains the source code of the paper: Wischow, M., Gallego, G

Get 2D point positions (e.g., facial landmarks) projected on 3D mesh

points2d_projection_mesh Input 2D points (e.g. facial landmarks) on an image Camera parameters (extrinsic and intrinsic) of the image Aligned 3D mesh

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

SRA's seminar on Introduction to Computer Vision Fundamentals

Introduction to Computer Vision This repository includes basics to : Python Numpy: A python library Git Computer Vision. The aim of this repository is

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

Python scripts for performing object detection with the 1000 labels of the ImageNet dataset in ONNX.

Python scripts for performing object detection with the 1000 labels of the ImageNet dataset in ONNX. The repository combines a class agnostic object localizer to first detect the objects in the image, and next a ResNet50 model trained on ImageNet is used to label each box.

Computer Vision Script to recognize first person motion, developed as final project for the course "Machine Learning and Deep Learning"

Overview of The Code BaseColab/MLDL_FPAR.pdf: it contains the full explanation of our work Base Colab: it contains the base colab used to perform all

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets What is LASSL • How to Use What is LASSL LASSL은 LAnguage Semi-Super

Pangu-Alpha for Transformers

Pangu-Alpha for Transformers Usage Download MindSpore FP32 weights for GPU from here to data/Pangu-alpha_2.6B.ckpt Activate MindSpore environment and

BOVText: A Large-Scale, Multidimensional Multilingual Dataset for Video Text Spotting

BOVText: A Large-Scale, Bilingual Open World Dataset for Video Text Spotting Updated on December 10, 2021 (Release all dataset(2021 videos)) Updated o

Official code of IterMVS

IterMVS official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo' Introduction IterMVS is a novel lear

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

Re-implememtation of MAE (Masked Autoencoders Are Scalable Vision Learners) using PyTorch.

mae-repo PyTorch re-implememtation of "masked autoencoders are scalable vision learners". In this repo, it heavily borrows codes from codebase https:/

Official Implementation for the paper DeepFace-EMD: Re-ranking Using Patch-wise Earth Mover’s Distance Improves Out-Of-Distribution Face Identification

DeepFace-EMD: Re-ranking Using Patch-wise Earth Mover’s Distance Improves Out-Of-Distribution Face Identification Official Implementation for the pape

This is the official code for the paper "Learning with Nested Scene Modeling and Cooperative Architecture Search for Low-Light Vision"

RUAS This is the official code for the paper "Learning with Nested Scene Modeling and Cooperative Architecture Search for Low-Light Vision" A prelimin

2D Human Pose estimation using transformers. Implementation in Pytorch

PE-former: Pose Estimation Transformer Vision transformer architectures perform very well for image classification tasks. Efforts to solve more challe

Official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo'

IterMVS official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo' Introduction IterMVS is a novel lear

Experiments and examples converting Transformers to ONNX

Experiments and examples converting Transformers to ONNX This repository containes experiments and examples on converting different Transformers to ON

Supplemental learning materials for "Fourier Feature Networks and Neural Volume Rendering"

Fourier Feature Networks and Neural Volume Rendering This repository is a companion to a lecture given at the University of Cambridge Engineering Depa

Class-Balanced Loss Based on Effective Number of Samples. CVPR 2019

Class-Balanced Loss Based on Effective Number of Samples Tensorflow code for the paper: Class-Balanced Loss Based on Effective Number of Samples Yin C

Conceptual 12M is a dataset containing (image-URL, caption) pairs collected for vision-and-language pre-training.

Conceptual 12M We introduce the Conceptual 12M (CC12M), a dataset with ~12 million image-text pairs meant to be used for vision-and-language pre-train

Python library for computer vision labeling tasks. The core functionality is to translate bounding box annotations between different formats-for example, from coco to yolo.

PyLabel pip install pylabel PyLabel is a Python package to help you prepare image datasets for computer vision models including PyTorch and YOLOv5. I

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

A simple rest api that classifies pneumonia infection weather it is Normal, Pneumonia Virus or Pneumonia Bacteria from a chest-x-ray image.

This is a simple rest api that classifies pneumonia infection weather it is Normal, Pneumonia Virus or Pneumonia Bacteria from a chest-x-ray image.

Localized representation learning from Vision and Text (LoVT)

Localized Vision-Text Pre-Training Contrastive learning has proven effective for pre- training image models on unlabeled data and achieved great resul

CALVIN - A benchmark for Language-Conditioned Policy Learning for Long-Horizon Robot Manipulation Tasks

CALVIN CALVIN - A benchmark for Language-Conditioned Policy Learning for Long-Horizon Robot Manipulation Tasks Oier Mees, Lukas Hermann, Erick Rosete,

Hashformers is a framework for hashtag segmentation with transformers.

Hashtag segmentation is the task of automatically inserting the missing spaces between the words in a hashtag. Hashformers applies Transformer models

Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal"

Patch-wise Adversarial Removal Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal

Repository aimed at compiling code, papers, demos etc.. related to my PhD on 3D vision and machine learning for fruit detection and shape estimation at the university of Lincoln

PhD_3DPerception Repository aimed at compiling code, papers, demos etc.. related to my PhD on 3D vision and machine learning for fruit detection and s

MLPs for Vision and Langauge Modeling (Coming Soon)

MLP Architectures for Vision-and-Language Modeling: An Empirical Study MLP Architectures for Vision-and-Language Modeling: An Empirical Study (Code wi

Post-Training Quantization for Vision transformers.

PTQ4ViT Post-Training Quantization Framework for Vision Transformers. We use the twin uniform quantization method to reduce the quantization error on

MapReader: A computer vision pipeline for the semantic exploration of maps at scale

MapReader A computer vision pipeline for the semantic exploration of maps at scale MapReader is an end-to-end computer vision (CV) pipeline designed b

![[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining](https://github.com/microsoft/COCO-LM/raw/main/coco-lm.png)

[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining

COCO-LM This repository contains the scripts for fine-tuning COCO-LM pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: COCO-LM: Correcting an

This is a re-implementation of TransGAN: Two Pure Transformers Can Make One Strong GAN (CVPR 2021) in PyTorch.

TransGAN: Two Transformers Can Make One Strong GAN [YouTube Video] Paper Authors: Yifan Jiang, Shiyu Chang, Zhangyang Wang CVPR 2021 This is re-implem

The implementation code for "DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruction"

DAGAN This is the official implementation code for DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruct

Lip Reading - Cross Audio-Visual Recognition using 3D Convolutional Neural Networks

Lip Reading - Cross Audio-Visual Recognition using 3D Convolutional Neural Networks - Official Project Page This repository contains the code develope

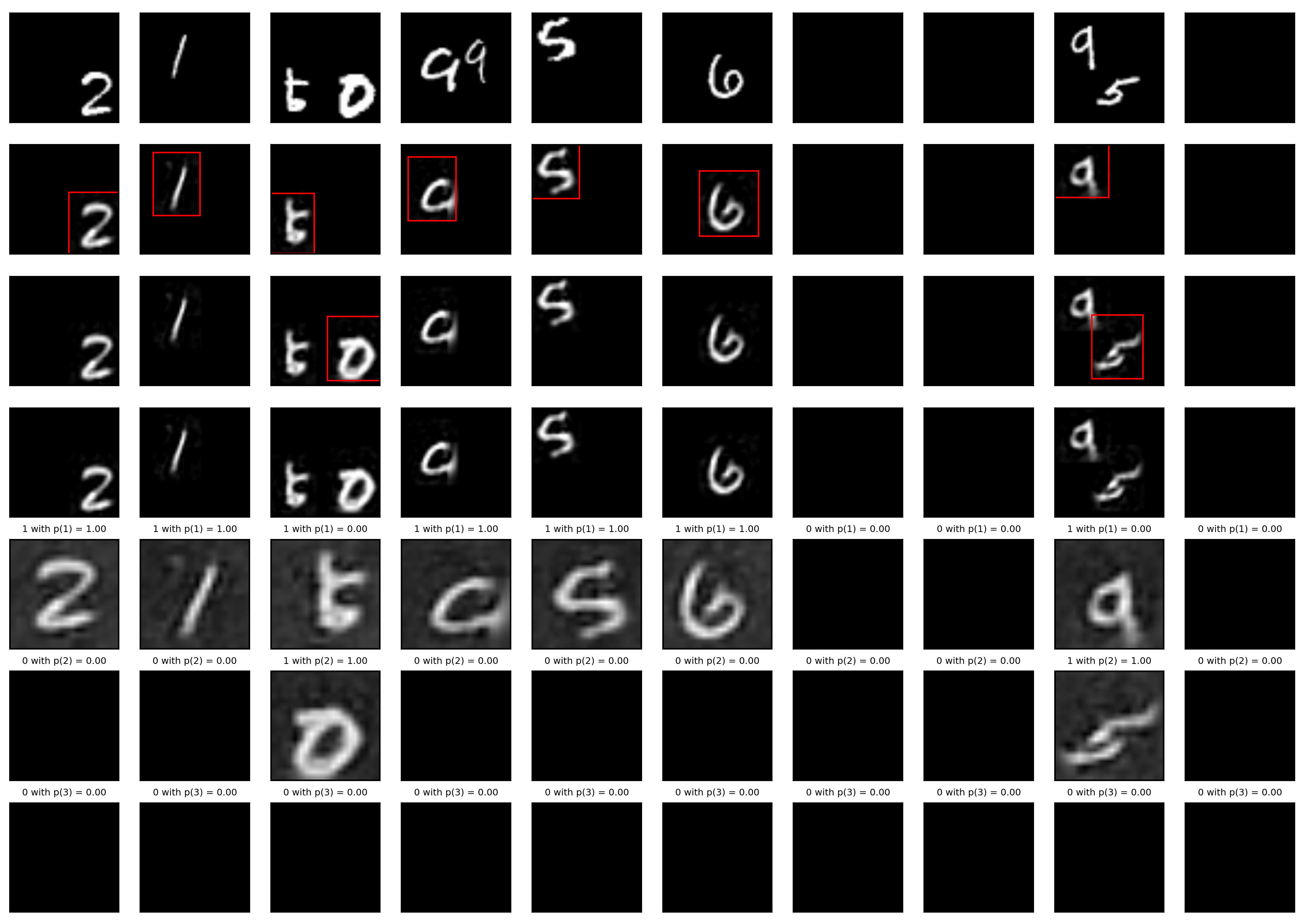

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

![[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation](https://user-images.githubusercontent.com/65257938/145016835-3c4be820-c55d-4eb4-b7f5-b8a012ee0f8c.png)

[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation

Reliable Propagation-Correction Modulation for Video Object Segmentation (AAAI22) Preview version paper of this work is available at: https://arxiv.or

PyTorch implementation of the paper Dynamic Token Normalization Improves Vision Transfromers.

Dynamic Token Normalization Improves Vision Transformers This is the PyTorch implementation of the paper Dynamic Token Normalization Improves Vision T

![[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation](https://user-images.githubusercontent.com/65257938/145016835-3c4be820-c55d-4eb4-b7f5-b8a012ee0f8c.png)

[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation

Reliable Propagation-Correction Modulation for Video Object Segmentation (AAAI22) Preview version paper of this work is available at: https://arxiv.or

Reproduction of Vision Transformer in Tensorflow2. Train from scratch and Finetune.

Vision Transformer(ViT) in Tensorflow2 Tensorflow2 implementation of the Vision Transformer(ViT). This repository is for An image is worth 16x16 words

Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch

N-Grammer - Pytorch Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch Install $ pip install n-grammer-pytorch Usage

Train and use generative text models in a few lines of code.

blather Train and use generative text models in a few lines of code. To see blather in action check out the colab notebook! Installation Use the packa

PyTorch implementation of Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets

Simple PyTorch Implementation of "Grokking" Implementation of Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets Usage Running

Spiking Neural Network for Computer Vision using SpikingJelly framework and Pytorch-Lightning

Spiking Neural Network for Computer Vision using SpikingJelly framework and Pytorch-Lightning

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Code repository for "Reducing Underflow in Mixed Precision Training by Gradient Scaling" presented at IJCAI '20

Reducing Underflow in Mixed Precision Training by Gradient Scaling This project implements the gradient scaling method to improve the performance of m

Official Pytorch implementation for 2021 ICCV paper "Learning Motion Priors for 4D Human Body Capture in 3D Scenes" and trained models / data

Learning Motion Priors for 4D Human Body Capture in 3D Scenes (LEMO) Official Pytorch implementation for 2021 ICCV (oral) paper "Learning Motion Prior

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Mastering Transformers, published by Packt

Mastering Transformers This is the code repository for Mastering Transformers, published by Packt. Build state-of-the-art models from scratch with adv

Panoptic SegFormer: Delving Deeper into Panoptic Segmentation with Transformers

Panoptic SegFormer: Delving Deeper into Panoptic Segmentation with Transformers Results results on COCO val Backbone Method Lr Schd PQ Config Download

Single-Stage Instance Shadow Detection with Bidirectional Relation Learning (CVPR 2021 Oral)

Single-Stage Instance Shadow Detection with Bidirectional Relation Learning (CVPR 2021 Oral) Tianyu Wang*, Xiaowei Hu*, Chi-Wing Fu, and Pheng-Ann Hen

Revisiting Pre-trained Models for Chinese Natural Language Processing (Findings of EMNLP 2020)

This repository contains the resources in our paper "Revisiting Pre-trained Models for Chinese Natural Language Processing", which will be published i

Code for ACL 2019 Paper: "COMET: Commonsense Transformers for Automatic Knowledge Graph Construction"

To run a generation experiment (either conceptnet or atomic), follow these instructions: First Steps First clone, the repo: git clone https://github.c

Code for the ACL 2021 paper "Structural Guidance for Transformer Language Models"

Structural Guidance for Transformer Language Models This repository accompanies the paper, Structural Guidance for Transformer Language Models, publis

PyTorch code for EMNLP 2019 paper "LXMERT: Learning Cross-Modality Encoder Representations from Transformers".

LXMERT: Learning Cross-Modality Encoder Representations from Transformers Our servers break again :(. I have updated the links so that they should wor

Code for ICLR 2020 paper "VL-BERT: Pre-training of Generic Visual-Linguistic Representations".

VL-BERT By Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, Jifeng Dai. This repository is an official implementation of the paper VL-BERT:

Vision-Language Pre-training for Image Captioning and Question Answering

VLP This repo hosts the source code for our AAAI2020 work Vision-Language Pre-training (VLP). We have released the pre-trained model on Conceptual Cap

Research code for ECCV 2020 paper "UNITER: UNiversal Image-TExt Representation Learning"

UNITER: UNiversal Image-TExt Representation Learning This is the official repository of UNITER (ECCV 2020). This repository currently supports finetun