564 Repositories

Python vrp-attention-jax Libraries

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

Neural Caption Generator with Attention

Neural Caption Generator with Attention Tensorflow implementation of "Show

TensorFlow implementation of the paper "Hierarchical Attention Networks for Document Classification"

Hierarchical Attention Networks for Document Classification This is an implementation of the paper Hierarchical Attention Networks for Document Classi

Hierarchical Attentive Recurrent Tracking

Hierarchical Attentive Recurrent Tracking This is an official Tensorflow implementation of single object tracking in videos by using hierarchical atte

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

Official Pytorch Implementation of Relational Self-Attention: What's Missing in Attention for Video Understanding

Relational Self-Attention: What's Missing in Attention for Video Understanding This repository is the official implementation of "Relational Self-Atte

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds PCAM: Product of Cross-Attention Matrices for Rigid Registration of P

A small library for creating and manipulating custom JAX Pytree classes

Treeo A small library for creating and manipulating custom JAX Pytree classes Light-weight: has no dependencies other than jax. Compatible: Treeo Tree

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Jax/Flax implementation of Variational-DiffWave.

jax-variational-diffwave Jax/Flax implementation of Variational-DiffWave. (Zhifeng Kong et al., 2020, Diederik P. Kingma et al., 2021.) DiffWave with

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

(ACL-IJCNLP 2021) Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models.

BERT Convolutions Code for the paper Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models. Contains expe

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

A PyTorch Implementation of PGL-SUM from "Combining Global and Local Attention with Positional Encoding for Video Summarization", Proc. IEEE ISM 2021

PGL-SUM: Combining Global and Local Attention with Positional Encoding for Video Summarization PyTorch Implementation of PGL-SUM From "PGL-SUM: Combin

Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving

GSAN Introduction Code for paper GSAN: Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving, wh

A demo of how to use JAX to create a simple gravity simulation

JAX Gravity This repo contains a demo of how to use JAX to create a simple gravity simulation. It uses JAX's experimental ode package to solve the dif

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

BERT Attention Analysis

BERT Attention Analysis This repository contains code for What Does BERT Look At? An Analysis of BERT's Attention. It includes code for getting attent

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

This is a JAX implementation of Neural Radiance Fields for learning purposes.

learn-nerf This is a JAX implementation of Neural Radiance Fields for learning purposes. I've been curious about NeRF and its follow-up work for a whi

Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust.

Subspace Adversarial Training Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust. However,

Image Captioning using CNN ,LSTM and Attention

Image Captioning using CNN ,LSTM and Attention This is a deeplearning model which tries to summarize an image into a text . Installation Install this

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

JAX bindings to the Flatiron Institute Non-uniform Fast Fourier Transform (FINUFFT) library

JAX bindings to FINUFFT This package provides a JAX interface to (a subset of) the Flatiron Institute Non-uniform Fast Fourier Transform (FINUFFT) lib

Reinforcement learning library in JAX.

Reinforcement learning library in JAX.

Official repository for Natural Image Matting via Guided Contextual Attention

GCA-Matting: Natural Image Matting via Guided Contextual Attention The source codes and models of Natural Image Matting via Guided Contextual Attentio

Prototypical Cross-Attention Networks for Multiple Object Tracking and Segmentation, NeurIPS 2021 Spotlight

PCAN for Multiple Object Tracking and Segmentation This is the offical implementation of paper PCAN for MOTS. We also present a trailer that consists

Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2

CoaDTI Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2 Abstract Environment The test was conducted i

Revisiting Video Saliency: A Large-scale Benchmark and a New Model (CVPR18, PAMI19)

DHF1K =========================================================================== Wenguan Wang, J. Shen, M.-M Cheng and A. Borji, Revisiting Video Sal

Deep Reinforced Attention Regression for Partial Sketch Based Image Retrieval.

DARP-SBIR Intro This repository contains the source code implementation for ICDM submission paper Deep Reinforced Attention Regression for Partial Ske

Codebase for "ProtoAttend: Attention-Based Prototypical Learning."

Codebase for "ProtoAttend: Attention-Based Prototypical Learning." Authors: Sercan O. Arik and Tomas Pfister Paper: Sercan O. Arik and Tomas Pfister,

A Python toolbox to create adversarial examples that fool neural networks in PyTorch, TensorFlow, and JAX

Foolbox Native: Fast adversarial attacks to benchmark the robustness of machine learning models in PyTorch, TensorFlow, and JAX Foolbox is a Python li

A denoising autoencoder + adversarial losses and attention mechanisms for face swapping.

faceswap-GAN Adding Adversarial loss and perceptual loss (VGGface) to deepfakes'(reddit user) auto-encoder architecture. Updates Date Update 2018-08-2

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch. Most of the models in NLP were implemented with less than 100 lines of code.(except comments or blank lines)

GAN JAX - A toy project to generate images from GANs with JAX

GAN JAX - A toy project to generate images from GANs with JAX This project aims to bring the power of JAX, a Python framework developped by Google and

PyTorch implementation of Hierarchical Multi-label Text Classification: An Attention-based Recurrent Network

hierarchical-multi-label-text-classification-pytorch Hierarchical Multi-label Text Classification: An Attention-based Recurrent Network Approach This

You Only Sample (Almost) Once: Linear Cost Self-Attention Via Bernoulli Sampling

You Only Sample (Almost) Once: Linear Cost Self-Attention Via Bernoulli Sampling Transformer-based models are widely used in natural language processi

RAANet: Range-Aware Attention Network for LiDAR-based 3D Object Detection with Auxiliary Density Level Estimation

RAANet: Range-Aware Attention Network for LiDAR-based 3D Object Detection with Auxiliary Density Level Estimation Anonymous submission Abstract 3D obj

The implementation of DeBERTa

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Self-attentive task GAN for space domain awareness data augmentation.

SATGAN TODO: update the article URL once published. Article about this implemention The self-attentive task generative adversarial network (SATGAN) le

A PyTorch implementation of "CoAtNet: Marrying Convolution and Attention for All Data Sizes".

CoAtNet Overview This is a PyTorch implementation of CoAtNet specified in "CoAtNet: Marrying Convolution and Attention for All Data Sizes", arXiv 2021

Sky attention heatmap of submissions to astrometry.net

astroheat Installation Requires Python 3.6+, Tested with Python 3.9.5 Install library dependencies pip install -r requirements.txt The program require

Flaxformer: transformer architectures in JAX/Flax

Flaxformer is a transformer library for primarily NLP and multimodal research at Google.

Local Multi-Head Channel Self-Attention for FER2013

LHC-Net Local Multi-Head Channel Self-Attention This repository is intended to provide a quick implementation of the LHC-Net and to replicate the resu

Summary of related papers on visual attention

This repo is built for paper: Attention Mechanisms in Computer Vision: A Survey paper Vision-Attention-Papers Channel attention Spatial attention Temp

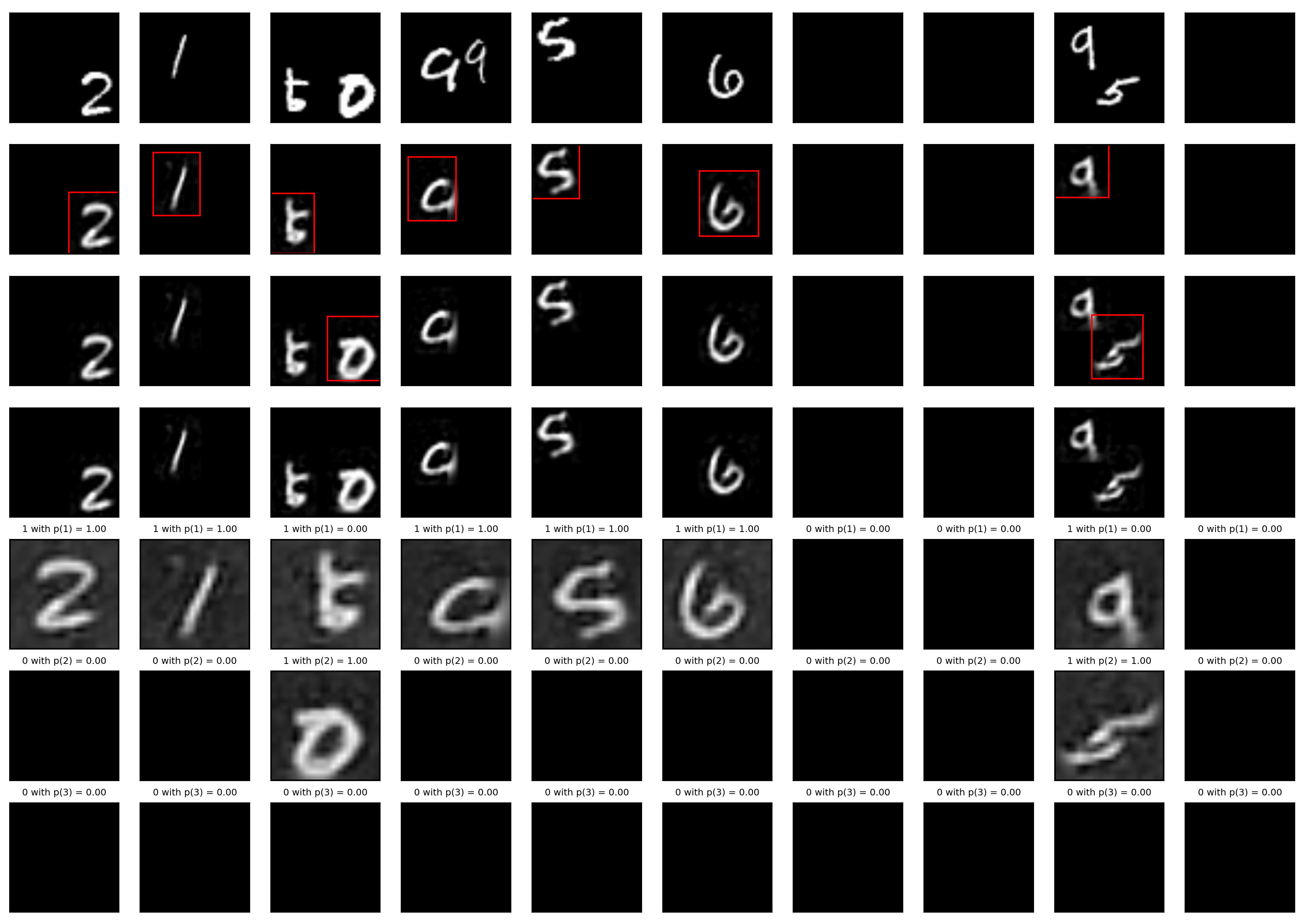

Official code for: A Probabilistic Hard Attention Model For Sequentially Observed Scenes

"A Probabilistic Hard Attention Model For Sequentially Observed Scenes" Authors: Samrudhdhi Rangrej, James Clark Accepted to: BMVC'21 A recurrent atte

Implementation of Uniformer, a simple attention and 3d convolutional net that achieved SOTA in a number of video classification tasks

Uniformer - Pytorch Implementation of Uniformer, a simple attention and 3d convolutional net that achieved SOTA in a number of video classification ta

Code for our paper "Multi-scale Guided Attention for Medical Image Segmentation"

Medical Image Segmentation with Guided Attention This repository contains the code of our paper: "'Multi-scale self-guided attention for medical image

PSANet: Point-wise Spatial Attention Network for Scene Parsing, ECCV2018.

PSANet: Point-wise Spatial Attention Network for Scene Parsing (in construction) by Hengshuang Zhao*, Yi Zhang*, Shu Liu, Jianping Shi, Chen Change Lo

CCNet: Criss-Cross Attention for Semantic Segmentation (TPAMI 2020 & ICCV 2019).

CCNet: Criss-Cross Attention for Semantic Segmentation Paper Links: Our most recent TPAMI version with improvements and extensions (Earlier ICCV versi

Dual Attention Network for Scene Segmentation (CVPR2019)

Dual Attention Network for Scene Segmentation(CVPR2019) Jun Fu, Jing Liu, Haijie Tian, Yong Li, Yongjun Bao, Zhiwei Fang,and Hanqing Lu Introduction W

Use of Attention Gates in a Convolutional Neural Network / Medical Image Classification and Segmentation

Attention Gated Networks (Image Classification & Segmentation) Pytorch implementation of attention gates used in U-Net and VGG-16 models. The framewor

Segmentation-Aware Convolutional Networks Using Local Attention Masks

Segmentation-Aware Convolutional Networks Using Local Attention Masks [Project Page] [Paper] Segmentation-aware convolution filters are invariant to b

Pytorch implementation of U-Net, R2U-Net, Attention U-Net, and Attention R2U-Net.

pytorch Implementation of U-Net, R2U-Net, Attention U-Net, Attention R2U-Net U-Net: Convolutional Networks for Biomedical Image Segmentation https://a

![Models Supported: AlbUNet [18, 34, 50, 101, 152] (1D and 2D versions for Single and Multiclass Segmentation, Feature Extraction with supports for Deep Supervision and Guided Attention)](https://github.com/Sakib1263/AlbUNet-1D-2D-Tensorflow-Keras/raw/main/Documents/Images/AlbuNet.png)

Models Supported: AlbUNet [18, 34, 50, 101, 152] (1D and 2D versions for Single and Multiclass Segmentation, Feature Extraction with supports for Deep Supervision and Guided Attention)

AlbUNet-1D-2D-Tensorflow-Keras This repository contains 1D and 2D Signal Segmentation Model Builder for AlbUNet and several of its variants developed

Einshape: DSL-based reshaping library for JAX and other frameworks.

Einshape: DSL-based reshaping library for JAX and other frameworks. The jnp.einsum op provides a DSL-based unified interface to matmul and tensordot o

Code for the paper "Attention Approximates Sparse Distributed Memory"

Attention Approximates Sparse Distributed Memory - Codebase This is all of the code used to run analyses in the paper "Attention Approximates Sparse D

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers

hierarchical-transformer-1d Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers In Progress!! 2021.

This repo. is an implementation of ACFFNet, which is accepted for in Image and Vision Computing.

Attention-Guided-Contextual-Feature-Fusion-Network-for-Salient-Object-Detection This repo. is an implementation of ACFFNet, which is accepted for in I

Conservative Q Learning for Offline Reinforcement Reinforcement Learning in JAX

CQL-JAX This repository implements Conservative Q Learning for Offline Reinforcement Reinforcement Learning in JAX (FLAX). Implementation is built on

Contextual Attention Localization for Offline Handwritten Text Recognition

CALText This repository contains the source code for CALText model introduced in "CALText: Contextual Attention Localization for Offline Handwritten T

Global-Local Attention for Emotion Recognition

Global-Local Attention for Emotion Recognition Requirements Python 3 Install tensorflow (or tensorflow-gpu) = 2.0.0 Install some other packages pip i

An implementation of Equivariant e2 convolutional kernals into a convolutional self attention network, applied to radio astronomy data.

EquivariantSelfAttention An implementation of Equivariant e2 convolutional kernals into a convolutional self attention network, applied to radio astro

A JAX-based research framework for writing differentiable numerical simulators with arbitrary discretizations

jaxdf - JAX-based Discretization Framework Overview | Example | Installation | Documentation ⚠️ This library is still in development. Breaking changes

v objective diffusion inference code for JAX.

v-diffusion-jax v objective diffusion inference code for JAX, by Katherine Crowson (@RiversHaveWings) and Chainbreakers AI (@jd_pressman). The models

CNN+Attention+Seq2Seq

Attention_OCR CNN+Attention+Seq2Seq The model and its tensor transformation are shown in the figure below It is necessary ch_ train and ch_ test the p

Liquid Warping GAN with Attention: A Unified Framework for Human Image Synthesis

Liquid Warping GAN with Attention: A Unified Framework for Human Image Synthesis, including human motion imitation, appearance transfer, and novel view synthesis. Currently the paper is under review of IEEE TPAMI. It is an extension of our previous ICCV project impersonator, and it has a more powerful ability in generalization and produces higher-resolution results (512 x 512, 1024 x 1024) than the previous ICCV version.

Flaxformer: transformer architectures in JAX/Flax

Flaxformer: transformer architectures in JAX/Flax Flaxformer is a transformer library for primarily NLP and multimodal research at Google. It is used

It's a implement of this paper:Relation extraction via Multi-Level attention CNNs

Relation Classification via Multi-Level Attention CNNs It's a implement of this paper:Relation Classification via Multi-Level Attention CNNs. Training

Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr\"om Method (NeurIPS 2021)

Skyformer This repository is the official implementation of Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr"om Method (NeurIPS 2021).

Measuring if attention is explanation with ROAR

NLP ROAR Interpretability Official code for: Evaluating the Faithfulness of Importance Measures in NLP by Recursively Masking Allegedly Important Toke

A CROSS-MODAL FUSION NETWORK BASED ON SELF-ATTENTION AND RESIDUAL STRUCTURE FOR MULTIMODAL EMOTION RECOGNITION

CFN-SR A CROSS-MODAL FUSION NETWORK BASED ON SELF-ATTENTION AND RESIDUAL STRUCTURE FOR MULTIMODAL EMOTION RECOGNITION The audio-video based multimodal

Official implementation of "Multi-Glimpse Network: A Robust and Efficient Classification Architecture based on Recurrent Downsampled Attention" (BMVC 2021).

Multi-Glimpse Network Multi-Glimpse Network: A Robust and Efficient Classification Architecture based on Recurrent Downsampled Attention arXiv Require

Geometric Algebra package for JAX

JAXGA - JAX Geometric Algebra GitHub | Docs JAXGA is a Geometric Algebra package on top of JAX. It can handle high dimensional algebras by storing onl

Unofficial pytorch implementation of the paper "Dynamic High-Pass Filtering and Multi-Spectral Attention for Image Super-Resolution"

DFSA Unofficial pytorch implementation of the ICCV 2021 paper "Dynamic High-Pass Filtering and Multi-Spectral Attention for Image Super-Resolution" (p

Map single-cell transcriptomes to copy number evolutionary trees.

Map single-cell transcriptomes to copy number evolutionary trees. Check out the tutorial for more information. Installation $ pip install scatrex SCA

Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr\"om Method (NeurIPS 2021)

Skyformer This repository is the official implementation of Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr"om Method (NeurIPS 2021).

The source code for CATSETMAT: Cross Attention for Set Matching in Bipartite Hypergraphs

catsetmat The source code for CATSETMAT: Cross Attention for Set Matching in Bipartite Hypergraphs To be able to run it, add catsetmat to PYTHONPATH H

Structure Information is the Key: Self-Attention RoI Feature Extractor in 3D Object Detection

Structure Information is the Key: Self-Attention RoI Feature Extractor in 3D Object Detection abstract:Unlike 2D object detection where all RoI featur

Code for a seq2seq architecture with Bahdanau attention designed to map stereotactic EEG data from human brains to spectrograms, using the PyTorch Lightning.

stereoEEG2speech We provide code for a seq2seq architecture with Bahdanau attention designed to map stereotactic EEG data from human brains to spectro

This repo includes the CUB-GHA (Gaze-based Human Attention) dataset and code of the paper "Human Attention in Fine-grained Classification".

HA-in-Fine-Grained-Classification This repo includes the CUB-GHA (Gaze-based Human Attention) dataset and code of the paper "Human Attention in Fine-g

Bayes-Newton—A Gaussian process library in JAX, with a unifying view of approximate Bayesian inference as variants of Newton's algorithm.

Bayes-Newton Bayes-Newton is a library for approximate inference in Gaussian processes (GPs) in JAX (with objax), built and actively maintained by Wil

Machine Learning with JAX Tutorials

The purpose of this repo is to make it easy to get started with JAX. It contains my "Machine Learning with JAX" series of tutorials (YouTube videos and Jupyter Notebooks) as well as the content I found useful while learning JAX.

Pytorch Implementation of "Diagonal Attention and Style-based GAN for Content-Style disentanglement in image generation and translation" (ICCV 2021)

DiagonalGAN Official Pytorch Implementation of "Diagonal Attention and Style-based GAN for Content-Style Disentanglement in Image Generation and Trans

Open source single image super-resolution toolbox containing various functionality for training a diverse number of state-of-the-art super-resolution models. Also acts as the companion code for the IEEE signal processing letters paper titled 'Improving Super-Resolution Performance using Meta-Attention Layers’.

Deep-FIR Codebase - Super Resolution Meta Attention Networks About This repository contains the main coding framework accompanying our work on meta-at

An example of Scatterbrain implementation (combining local attention and Performer)

An example of Scatterbrain implementation (combining local attention and Performer)

A suite of benchmarks for CPU and GPU performance of the most popular high-performance libraries for Python :rocket:

A suite of benchmarks for CPU and GPU performance of the most popular high-performance libraries for Python :rocket:

Unofficial pytorch implementation of the paper "Context Reasoning Attention Network for Image Super-Resolution (ICCV 2021)"

CRAN Unofficial pytorch implementation of the paper "Context Reasoning Attention Network for Image Super-Resolution (ICCV 2021)" This code doesn't exa

Official implementation of NeurIPS 2021 paper "Contextual Similarity Aggregation with Self-attention for Visual Re-ranking"

CSA: Contextual Similarity Aggregation with Self-attention for Visual Re-ranking PyTorch training code for CSA (Contextual Similarity Aggregation). We

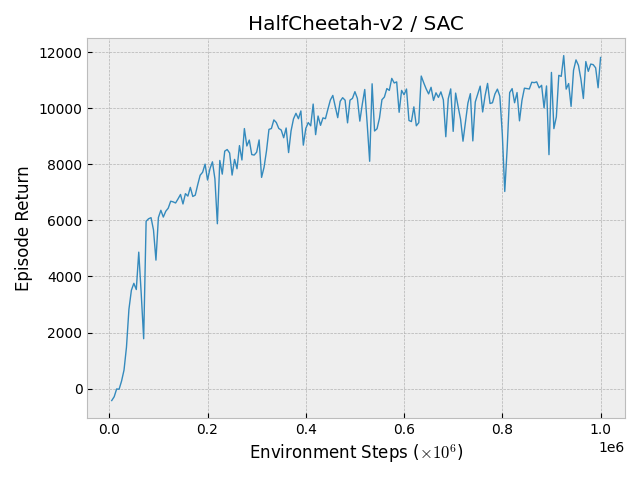

JAXDL: JAX (Flax) Deep Learning Library

JAXDL: JAX (Flax) Deep Learning Library Simple and clean JAX/Flax deep learning algorithm implementations: Soft-Actor-Critic (arXiv:1812.05905) Transf

A Context-aware Visual Attention-based training pipeline for Object Detection from a Webpage screenshot!

CoVA: Context-aware Visual Attention for Webpage Information Extraction Abstract Webpage information extraction (WIE) is an important step to create k

Hierarchical probabilistic 3D U-Net, with attention mechanisms (—𝘈𝘵𝘵𝘦𝘯𝘵𝘪𝘰𝘯 𝘜-𝘕𝘦𝘵, 𝘚𝘌𝘙𝘦𝘴𝘕𝘦𝘵) and a nested decoder structure with deep supervision (—𝘜𝘕𝘦𝘵++).

Hierarchical probabilistic 3D U-Net, with attention mechanisms (—𝘈𝘵𝘵𝘦𝘯𝘵𝘪𝘰𝘯 𝘜-𝘕𝘦𝘵, 𝘚𝘌𝘙𝘦𝘴𝘕𝘦𝘵) and a nested decoder structure with deep supervision (—𝘜𝘕𝘦𝘵++). Built in TensorFlow 2.5. Configured for voxel-level clinically significant prostate cancer detection in multi-channel 3D bpMRI scans.

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrai