1331 Repositories

Python world-models Libraries

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

30 Days Of Machine Learning Using Pytorch Objective of the repository is to learn and build machine learning models using Pytorch. List of Algorithms

a CLI that provides a generic automation layer for assessing the security of ML models

a CLI that provides a generic automation layer for assessing the security of ML models

Repository for XLM-T, a framework for evaluating multilingual language models on Twitter data

This is the XLM-T repository, which includes data, code and pre-trained multilingual language models for Twitter. XLM-T - A Multilingual Language Mode

Tilted Empirical Risk Minimization (ICLR '21)

Tilted Empirical Risk Minimization This repository contains the implementation for the paper Tilted Empirical Risk Minimization ICLR 2021 Empirical ri

Official repository for the ICLR 2021 paper Evaluating the Disentanglement of Deep Generative Models with Manifold Topology

Official repository for the ICLR 2021 paper Evaluating the Disentanglement of Deep Generative Models with Manifold Topology Sharon Zhou, Eric Zelikman

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more than just a paraphrasing model.

Pytorch-Named-Entity-Recognition-with-BERT

BERT NER Use google BERT to do CoNLL-2003 NER ! Train model using Python and Inference using C++ ALBERT-TF2.0 BERT-NER-TENSORFLOW-2.0 BERT-SQuAD Requi

A modular framework for vision & language multimodal research from Facebook AI Research (FAIR)

MMF is a modular framework for vision and language multimodal research from Facebook AI Research. MMF contains reference implementations of state-of-t

Neural building blocks for speaker diarization: speech activity detection, speaker change detection, overlapped speech detection, speaker embedding

⚠️ Checkout develop branch to see what is coming in pyannote.audio 2.0: a much smaller and cleaner codebase Python-first API (the good old pyannote-au

A PyTorch Implementation of End-to-End Models for Speech-to-Text

speech Speech is an open-source package to build end-to-end models for automatic speech recognition. Sequence-to-sequence models with attention, Conne

Interpretable Models for NLP using PyTorch

This repo is deprecated. Please find the updated package here. https://github.com/EdGENetworks/anuvada Anuvada: Interpretable Models for NLP using PyT

An open source framework for seq2seq models in PyTorch.

pytorch-seq2seq Documentation This is a framework for sequence-to-sequence (seq2seq) models implemented in PyTorch. The framework has modularized and

Statsmodels: statistical modeling and econometrics in Python

About statsmodels statsmodels is a Python package that provides a complement to scipy for statistical computations including descriptive statistics an

Seamlessly integrate pydantic models in your Sphinx documentation.

Seamlessly integrate pydantic models in your Sphinx documentation.

SMPLpix: Neural Avatars from 3D Human Models

subject0_validation_poses.mp4 Left: SMPL-X human mesh registered with SMPLify-X, middle: SMPLpix render, right: ground truth video. SMPLpix: Neural Av

RL and distillation in CARLA using a factorized world model

World on Rails Learning to drive from a world on rails Dian Chen, Vladlen Koltun, Philipp Krähenbühl, arXiv techical report (arXiv 2105.00636) This re

Generative Models for Graph-Based Protein Design

Graph-Based Protein Design This repo contains code for Generative Models for Graph-Based Protein Design by John Ingraham, Vikas Garg, Regina Barzilay

[EMNLP 2020] Keep CALM and Explore: Language Models for Action Generation in Text-based Games

Contextual Action Language Model (CALM) and the ClubFloyd Dataset Code and data for paper Keep CALM and Explore: Language Models for Action Generation

Codes for our paper "SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge" (EMNLP 2020)

SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge Introduction SentiLARE is a sentiment-aware pre-trained language

Meta Language-Specific Layers in Multilingual Language Models

Meta Language-Specific Layers in Multilingual Language Models This repo contains the source codes for our paper On Negative Interference in Multilingu

Django queries

Djaq Djaq - pronounced “Jack” - provides an instant remote API to your Django models data with a powerful query language. No server-side code beyond t

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

An Easy-to-use, Modular and Prolongable package of deep-learning based Named Entity Recognition Models.

DeepNER An Easy-to-use, Modular and Prolongable package of deep-learning based Named Entity Recognition Models. This repository contains complex Deep

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

ArviZ is a Python package for exploratory analysis of Bayesian models

ArviZ (pronounced "AR-vees") is a Python package for exploratory analysis of Bayesian models. Includes functions for posterior analysis, data storage, model checking, comparison and diagnostics

Task-based datasets, preprocessing, and evaluation for sequence models.

SeqIO: Task-based datasets, preprocessing, and evaluation for sequence models. SeqIO is a library for processing sequential data to be fed into downst

Create a netflix-like service using Django, React.js, & More.

Create a netflix-like service using Django. Learn advanced Django techniques to achieve amazing results like never before.

TorchFlare is a simple, beginner-friendly, and easy-to-use PyTorch Framework train your models effortlessly.

TorchFlare TorchFlare is a simple, beginner-friendly and an easy-to-use PyTorch Framework train your models without much effort. It provides an almost

Learning Energy-Based Models by Diffusion Recovery Likelihood

Learning Energy-Based Models by Diffusion Recovery Likelihood Ruiqi Gao, Yang Song, Ben Poole, Ying Nian Wu, Diederik P. Kingma Paper: https://arxiv.o

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

MMGeneration is a powerful toolkit for generative models, based on PyTorch and MMCV.

Documentation: https://mmgeneration.readthedocs.io/ Introduction English | 简体中文 MMGeneration is a powerful toolkit for generative models, especially f

Attack classification models with transferability, black-box attack; unrestricted adversarial attacks on imagenet

Attack classification models with transferability, black-box attack; unrestricted adversarial attacks on imagenet, CVPR2021 安全AI挑战者计划第六期:ImageNet无限制对抗攻击 决赛第四名(team name: Advers)

OCTIS: Comparing Topic Models is Simple! A python package to optimize and evaluate topic models (accepted at EACL2021 demo track)

OCTIS : Optimizing and Comparing Topic Models is Simple! OCTIS (Optimizing and Comparing Topic models Is Simple) aims at training, analyzing and compa

A simple way to train and use PyTorch models with multi-GPU, TPU, mixed-precision

🤗 Accelerate was created for PyTorch users who like to write the training loop of PyTorch models but are reluctant to write and maintain the boilerplate code needed to use multi-GPUs/TPU/fp16.

Pytorch implementation of our paper under review — Lottery Jackpots Exist in Pre-trained Models

Lottery Jackpots Exist in Pre-trained Models (Paper Link) Requirements Python = 3.7.4 Pytorch = 1.6.1 Torchvision = 0.4.1 Reproduce the Experiment

This repository contains the code for "Generating Datasets with Pretrained Language Models".

Datasets from Instructions (DINO 🦕 ) This repository contains the code for Generating Datasets with Pretrained Language Models. The paper introduces

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Bayesian Image Reconstruction using Deep Generative Models

Bayesian Image Reconstruction using Deep Generative Models R. Marinescu, D. Moyer, P. Golland For technical inquiries, please create a Github issue. F

Pretrained Pytorch face detection (MTCNN) and recognition (InceptionResnet) models

Face Recognition Using Pytorch Python 3.7 3.6 3.5 Status This is a repository for Inception Resnet (V1) models in pytorch, pretrained on VGGFace2 and

Sandbox for training deep learning networks

Deep learning networks This repo is used to research convolutional networks primarily for computer vision tasks. For this purpose, the repo contains (

Fine-tune pretrained Convolutional Neural Networks with PyTorch

Fine-tune pretrained Convolutional Neural Networks with PyTorch. Features Gives access to the most popular CNN architectures pretrained on ImageNet. A

Quickly comparing your image classification models with the state-of-the-art models (such as DenseNet, ResNet, ...)

Image Classification Project Killer in PyTorch This repo is designed for those who want to start their experiments two days before the deadline and ki

A Pythonic introduction to methods for scaling your data science and machine learning work to larger datasets and larger models, using the tools and APIs you know and love from the PyData stack (such as numpy, pandas, and scikit-learn).

This tutorial's purpose is to introduce Pythonistas to methods for scaling their data science and machine learning work to larger datasets and larger models, using the tools and APIs they know and love from the PyData stack (such as numpy, pandas, and scikit-learn).

Python library containing BART query generation and BERT-based Siamese models for neural retrieval.

Neural Retrieval Embedding-based Zero-shot Retrieval through Query Generation leverages query synthesis over large corpuses of unlabeled text (such as

QA-GNN: Question Answering using Language Models and Knowledge Graphs

QA-GNN: Question Answering using Language Models and Knowledge Graphs This repo provides the source code & data of our paper: QA-GNN: Reasoning with L

Try out deep learning models online on Google Colab

Try out deep learning models online on Google Colab

Mind the Trade-off: Debiasing NLU Models without Degrading the In-distribution Performance

Models for natural language understanding (NLU) tasks often rely on the idiosyncratic biases of the dataset, which make them brittle against test cases outside the training distribution.

Real-world Anomaly Detection in Surveillance Videos- pytorch Re-implementation

Real world Anomaly Detection in Surveillance Videos : Pytorch RE-Implementation This repository is a re-implementation of "Real-world Anomaly Detectio

Newt - a Gaussian process library in JAX.

Newt __ \/_ (' \`\ _\, \ \\/ /`\/\ \\ \ \\

Russian GPT3 models.

Russian GPT-3 models (ruGPT3XL, ruGPT3Large, ruGPT3Medium, ruGPT3Small) trained with 2048 sequence length with sparse and dense attention blocks. We also provide Russian GPT-2 large model (ruGPT2Large) trained with 1024 sequence length.

Introducing neural networks to predict stock prices

IntroNeuralNetworks in Python: A Template Project IntroNeuralNetworks is a project that introduces neural networks and illustrates an example of how o

Platform for building statistical models of cities and regions

UrbanSim UrbanSim is a platform for building statistical models of cities and regions. These models help forecast long-range patterns in real estate d

PyTorch implementation of Algorithm 1 of "On the Anatomy of MCMC-Based Maximum Likelihood Learning of Energy-Based Models"

Code for On the Anatomy of MCMC-Based Maximum Likelihood Learning of Energy-Based Models This repository will reproduce the main results from our pape

《K-Adapter: Infusing Knowledge into Pre-Trained Models with Adapters》(2020)

K-Adapter: Infusing Knowledge into Pre-Trained Models with Adapters This repository is the implementation of the paper "K-Adapter: Infusing Knowledge

Learning recognition/segmentation models without end-to-end training. 40%-60% less GPU memory footprint. Same training time. Better performance.

InfoPro-Pytorch The Information Propagation algorithm for training deep networks with local supervision. (ICLR 2021) Revisiting Locally Supervised Lea

Set of models for classifcation of 3D volumes

Classification models 3D Zoo - Keras and TF.Keras This repository contains 3D variants of popular CNN models for classification like ResNets, DenseNet

Code for pre-training CharacterBERT models (as well as BERT models).

Pre-training CharacterBERT (and BERT) This is a repository for pre-training BERT and CharacterBERT. DISCLAIMER: The code was largely adapted from an o

Official PyTorch implementation of MX-Font (Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Experts)

Introduction Pytorch implementation of Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Expert. | paper Song Park1

A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models

wav2vec-toolkit A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models This repository accompanies the

Source code for the GPT-2 story generation models in the EMNLP 2020 paper "STORIUM: A Dataset and Evaluation Platform for Human-in-the-Loop Story Generation"

Storium GPT-2 Models This is the official repository for the GPT-2 models described in the EMNLP 2020 paper [STORIUM: A Dataset and Evaluation Platfor

Code, Data and Demo for Paper: Controllable Generation from Pre-trained Language Models via Inverse Prompting

InversePrompting Paper: Controllable Generation from Pre-trained Language Models via Inverse Prompting Code: The code is provided in the "chinese_ip"

Official code for the CVPR 2021 paper "How Well Do Self-Supervised Models Transfer?"

How Well Do Self-Supervised Models Transfer? This repository hosts the code for the experiments in the CVPR 2021 paper How Well Do Self-Supervised Mod

(ImageNet pretrained models) The official pytorch implemention of the TPAMI paper "Res2Net: A New Multi-scale Backbone Architecture"

Res2Net The official pytorch implemention of the paper "Res2Net: A New Multi-scale Backbone Architecture" Our paper is accepted by IEEE Transactions o

Top2Vec is an algorithm for topic modeling and semantic search.

Top2Vec is an algorithm for topic modeling and semantic search. It automatically detects topics present in text and generates jointly embedded topic, document and word vectors.

Neural models of common sense. 🤖

Unicorn on Rainbow Neural models of common sense. This repository is for the paper: Unicorn on Rainbow: A Universal Commonsense Reasoning Model on a N

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''.

P-tuning A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''. How to use our code We have released the code

NewsBlur is a personal news reader bringing people together to talk about the world.

NewsBlur NewsBlur is a personal news reader bringing people together to talk about the world.

Viewflow is an Airflow-based framework that allows data scientists to create data models without writing Airflow code.

Viewflow Viewflow is a framework built on the top of Airflow that enables data scientists to create materialized views. It allows data scientists to f

Automated Machine Learning Pipeline with Feature Engineering and Hyper-Parameters Tuning

The mljar-supervised is an Automated Machine Learning Python package that works with tabular data. I

A probabilistic programming library for Bayesian deep learning, generative models, based on Tensorflow

ZhuSuan is a Python probabilistic programming library for Bayesian deep learning, which conjoins the complimentary advantages of Bayesian methods and

A probabilistic programming language in TensorFlow. Deep generative models, variational inference.

Edward is a Python library for probabilistic modeling, inference, and criticism. It is a testbed for fast experimentation and research with probabilis

Describing statistical models in Python using symbolic formulas

Patsy is a Python library for describing statistical models (especially linear models, or models that have a linear component) and building design mat

A Python package for Bayesian forecasting with object-oriented design and probabilistic models under the hood.

Disclaimer This project is stable and being incubated for long-term support. It may contain new experimental code, for which APIs are subject to chang

Python Library for learning (Structure and Parameter) and inference (Statistical and Causal) in Bayesian Networks.

pgmpy pgmpy is a python library for working with Probabilistic Graphical Models. Documentation and list of algorithms supported is at our official sit

Fast, flexible and easy to use probabilistic modelling in Python.

Please consider citing the JMLR-MLOSS Manuscript if you've used pomegranate in your academic work! pomegranate is a package for building probabilistic

Hidden Markov Models in Python, with scikit-learn like API

hmmlearn hmmlearn is a set of algorithms for unsupervised learning and inference of Hidden Markov Models. For supervised learning learning of HMMs and

Scikit-learn compatible estimation of general graphical models

skggm : Gaussian graphical models using the scikit-learn API In the last decade, learning networks that encode conditional independence relationships

Differentiable SDE solvers with GPU support and efficient sensitivity analysis.

PyTorch Implementation of Differentiable SDE Solvers This library provides stochastic differential equation (SDE) solvers with GPU support and efficie

Pretrained EfficientNet, EfficientNet-Lite, MixNet, MobileNetV3 / V2, MNASNet A1 and B1, FBNet, Single-Path NAS

(Generic) EfficientNets for PyTorch A 'generic' implementation of EfficientNet, MixNet, MobileNetV3, etc. that covers most of the compute/parameter ef

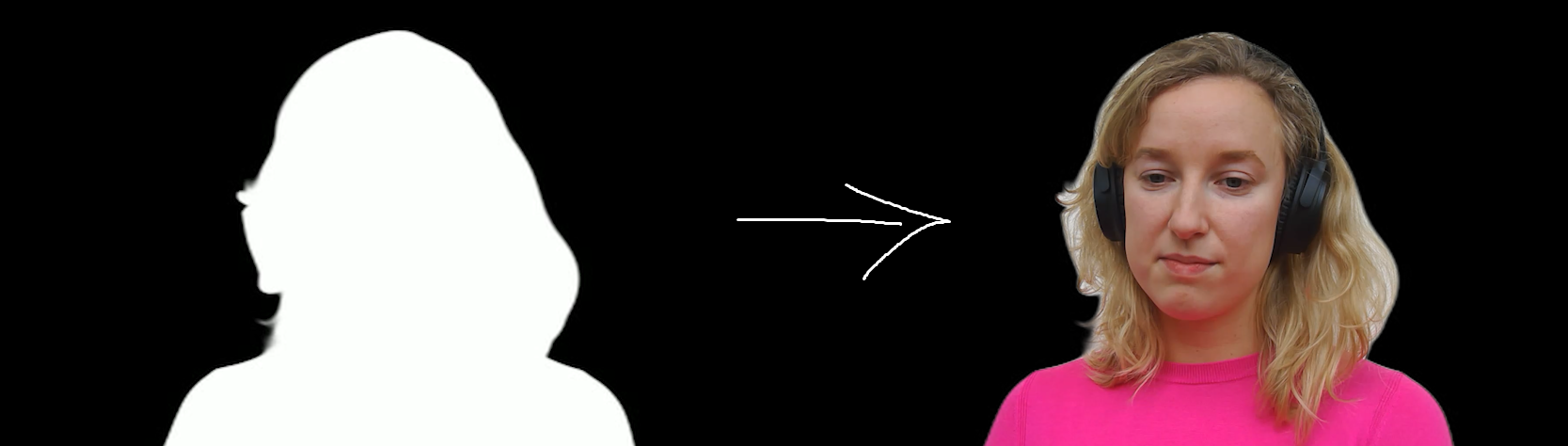

Rembg Video Virtual Green Screen Edition

Rembg Virtual Greenscreen Edition is a tool to create a green screen matte for videos

Decentralized deep learning in PyTorch. Built to train models on thousands of volunteers across the world.

Hivemind: decentralized deep learning in PyTorch Hivemind is a PyTorch library to train large neural networks across the Internet. Its intended usage

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

AtsPy: Automated Time Series Models in Python (by @firmai)

Automated Time Series Models in Python (AtsPy) SSRN Report Easily develop state of the art time series models to forecast univariate data series. Simp

Automatically build ARIMA, SARIMAX, VAR, FB Prophet and XGBoost Models on Time Series data sets with a Single Line of Code. Now updated with Dask to handle millions of rows.

Auto_TS: Auto_TimeSeries Automatically build multiple Time Series models using a Single Line of Code. Now updated with Dask. Auto_timeseries is a comp

A python library for easy manipulation and forecasting of time series.

Time Series Made Easy in Python darts is a python library for easy manipulation and forecasting of time series. It contains a variety of models, from

A statistical library designed to fill the void in Python's time series analysis capabilities, including the equivalent of R's auto.arima function.

pmdarima Pmdarima (originally pyramid-arima, for the anagram of 'py' + 'arima') is a statistical library designed to fill the void in Python's time se

ARCH models in Python

arch Autoregressive Conditional Heteroskedasticity (ARCH) and other tools for financial econometrics, written in Python (with Cython and/or Numba used

Qlib is an AI-oriented quantitative investment platform, which aims to realize the potential, empower the research, and create the value of AI technologies in quantitative investment. With Qlib, you can easily try your ideas to create better Quant investment strategies.

Qlib is an AI-oriented quantitative investment platform, which aims to realize the potential, empower the research, and create the value of AI technol

Source code, datasets and trained models for the paper Learning Advanced Mathematical Computations from Examples (ICLR 2021), by François Charton, Amaury Hayat (ENPC-Rutgers) and Guillaume Lample

Maths from examples - Learning advanced mathematical computations from examples This is the source code and data sets relevant to the paper Learning a

Diverse Image Captioning with Context-Object Split Latent Spaces (NeurIPS 2020)

Diverse Image Captioning with Context-Object Split Latent Spaces This repository is the PyTorch implementation of the paper: Diverse Image Captioning

CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent performances in several models such as Faster R-CNN, YOLOv4, RetinaNet and . There is a maximum AP improvement of 1.9% and an average AP of 0.8% improvement on MS COCO dataset, compared to traditional evaluation-feedback modules. Here we just use as an example to illustrate the code.

CDIoU-CDIoUloss CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent p

⚡ boost inference speed of T5 models by 5x & reduce the model size by 3x using fastT5.

Reduce T5 model size by 3X and increase the inference speed up to 5X. Install Usage Details Functionalities Benchmarks Onnx model Quantized onnx model

Monocular Depth Estimation - Weighted-average prediction from multiple pre-trained depth estimation models

merged_depth runs (1) AdaBins, (2) DiverseDepth, (3) MiDaS, (4) SGDepth, and (5) Monodepth2, and calculates a weighted-average per-pixel absolute dept

Repository providing a wide range of self-supervised pretrained models for computer vision tasks.

Hierarchical Pretraining: Research Repository This is a research repository for reproducing the results from the project "Self-supervised pretraining

This repository lets you train neural networks models for performing end-to-end full-page handwriting recognition using the Apache MXNet deep learning frameworks on the IAM Dataset.

Handwritten Text Recognition (OCR) with MXNet Gluon These notebooks have been created by Jonathan Chung, as part of his internship as Applied Scientis

TensorFlow code for the neural network presented in the paper: "Structural Language Models of Code" (ICML'2020)

SLM: Structural Language Models of Code This is an official implementation of the model described in: "Structural Language Models of Code" [PDF] To ap

中文语音识别系列,读者可以借助它快速训练属于自己的中文语音识别模型,或直接使用预训练模型测试效果。

MASR中文语音识别(pytorch版) 开箱即用 自行训练 使用与训练分离(增量训练) 识别率高 说明:因为每个人电脑机器不同,而且有些安装包安装起来比较麻烦,强烈建议直接用我编译好的docker环境跑 目前docker基础环境为ubuntu-cuda10.1-cudnn7-pytorch1.6.