927 Repositories

Python attention-alignment-visualization Libraries

Hierarchical Uniform Manifold Approximation and Projection

HUMAP Hierarchical Manifold Approximation and Projection (HUMAP) is a technique based on UMAP for hierarchical non-linear dimensionality reduction. HU

Shuffle Attention for MobileNetV3

SA-MobileNetV3 Shuffle Attention for MobileNetV3 Train Run the following command for train model on your own dataset: python train.py --dataset mnist

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network This repo contains the official Pytorch implementaion code and conf

Code + pre-trained models for the paper Keeping Your Eye on the Ball Trajectory Attention in Video Transformers

Motionformer This is an official pytorch implementation of paper Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers. In this rep

FANet - Real-time Semantic Segmentation with Fast Attention

FANet Real-time Semantic Segmentation with Fast Attention Ping Hu, Federico Perazzi, Fabian Caba Heilbron, Oliver Wang, Zhe Lin, Kate Saenko , Stan Sc

Official implement of "CAT: Cross Attention in Vision Transformer".

CAT: Cross Attention in Vision Transformer This is official implement of "CAT: Cross Attention in Vision Transformer". Abstract Since Transformer has

Implementation of Segformer, Attention + MLP neural network for segmentation, in Pytorch

Segformer - Pytorch Implementation of Segformer, Attention + MLP neural network for segmentation, in Pytorch. Install $ pip install segformer-pytorch

Official PyTorch implementation of Less is More: Pay Less Attention in Vision Transformers.

Less is More: Pay Less Attention in Vision Transformers Official PyTorch implementation of Less is More: Pay Less Attention in Vision Transformers. By

The official PyTorch implementation of recent paper - SAINT: Improved Neural Networks for Tabular Data via Row Attention and Contrastive Pre-Training

This repository is the official PyTorch implementation of SAINT. Find the paper on arxiv SAINT: Improved Neural Networks for Tabular Data via Row Atte

Statistics and Visualization of acceptance rate, main keyword of CVPR 2021 accepted papers for the main Computer Vision conference (CVPR)

Statistics and Visualization of acceptance rate, main keyword of CVPR 2021 accepted papers for the main Computer Vision conference (CVPR)

InsightFace: 2D and 3D Face Analysis Project on MXNet and PyTorch

InsightFace: 2D and 3D Face Analysis Project on MXNet and PyTorch

LinkedIn connections analyzer

LinkedIn Connections Analyzer 🔗 https://linkedin-analzyer.herokuapp.com Hey hey 👋 , welcome to my LinkedIn connections analyzer. I recently found ou

Streamlit component for Let's-Plot visualization library

streamlit-letsplot This is a work-in-progress, providing a convenience function to plot charts from the Lets-Plot visualization library. Example usage

Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning

We challenge a common assumption underlying most supervised deep learning: that a model makes a prediction depending only on its parameters and the features of a single input. To this end, we introduce a general-purpose deep learning architecture that takes as input the entire dataset instead of processing one datapoint at a time.

Unofficial PyTorch implementation of Attention Free Transformer (AFT) layers by Apple Inc.

aft-pytorch Unofficial PyTorch implementation of Attention Free Transformer's layers by Zhai, et al. [abs, pdf] from Apple Inc. Installation You can i

PyTorch implementation of Pay Attention to MLPs

gMLP PyTorch implementation of Pay Attention to MLPs. Quickstart Clone this repository. git clone https://github.com/jaketae/g-mlp.git Navigate to th

Learn Blockchains by Building One, A simple Blockchain in Python using Flask as a micro web framework.

Blockchain ✨ Learn Blockchains by Building One Yourself Installation Make sure Python 3.6+ is installed. Install Flask Web Framework. Clone this repos

External Attention Network

Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks paper : https://arxiv.org/abs/2105.02358 EAMLP will come soon Jitto

Code for the paper "How Attentive are Graph Attention Networks?"

How Attentive are Graph Attention Networks? This repository is the official implementation of How Attentive are Graph Attention Networks?. The PyTorch

Attention-driven Robot Manipulation (ARM) which includes Q-attention

Attention-driven Robotic Manipulation (ARM) This codebase is home to: Q-attention: Enabling Efficient Learning for Vision-based Robotic Manipulation I

Implements MLP-Mixer: An all-MLP Architecture for Vision.

MLP-Mixer-CIFAR10 This repository implements MLP-Mixer as proposed in MLP-Mixer: An all-MLP Architecture for Vision. The paper introduces an all MLP (

Code for CVPR2021 "Visualizing Adapted Knowledge in Domain Transfer". Visualization for domain adaptation. #explainable-ai

Visualizing Adapted Knowledge in Domain Transfer @inproceedings{hou2021visualizing, title={Visualizing Adapted Knowledge in Domain Transfer}, auth

Implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT : Cross-Attention Multi-Scale Vision Transformer for Image Classification This is an unofficial PyTorch implementation of CrossViT: Cross-Att

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep

Plot, scatter plots and histograms in the terminal using braille dots

Plot, scatter plots and histograms in the terminal using braille dots, with (almost) no dependancies. Plot with color or make complex figures - similar to a very small sibling to matplotlib. Or use the canvas to plot dots and lines yourself.

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Github project for Attention-guided Temporal Coherent Video Object Matting.

Attention-guided Temporal Coherent Video Object Matting This is the Github project for our paper Attention-guided Temporal Coherent Video Object Matti

Official implementation for (Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching, AAAI-2021)

Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching Official pytorch implementation of "Show, Attend and Distill: Kn

Local Attention - Flax module for Jax

Local Attention - Flax Autoregressive Local Attention - Flax module for Jax Install $ pip install local-attention-flax Usage from jax import random fr

Code for our paper "Mask-Align: Self-Supervised Neural Word Alignment" in ACL 2021

Mask-Align: Self-Supervised Neural Word Alignment This is the implementation of our work Mask-Align: Self-Supervised Neural Word Alignment. @inproceed

Lingtrain Aligner — ML powered library for the accurate texts alignment.

Lingtrain Aligner ML powered library for the accurate texts alignment in different languages. Purpose Main purpose of this alignment tool is to build

Implementation of Bidirectional Recurrent Independent Mechanisms (Learning to Combine Top-Down and Bottom-Up Signals in Recurrent Neural Networks with Attention over Modules)

BRIMs Bidirectional Recurrent Independent Mechanisms Implementation of the paper Learning to Combine Top-Down and Bottom-Up Signals in Recurrent Neura

PyTorch code for our paper "Attention in Attention Network for Image Super-Resolution"

Under construction... Attention in Attention Network for Image Super-Resolution (A2N) This repository is an PyTorch implementation of the paper "Atten

Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Parallel Tacotron2 Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Contains code for the paper "Vision Transformers are Robust Learners".

Vision Transformers are Robust Learners This repository contains the code for the paper Vision Transformers are Robust Learners by Sayak Paul* and Pin

the code of the paper: Recurrent Multi-view Alignment Network for Unsupervised Surface Registration (CVPR 2021)

RMA-Net This repo is the implementation of the paper: Recurrent Multi-view Alignment Network for Unsupervised Surface Registration (CVPR 2021). Paper

Drone-based Joint Density Map Estimation, Localization and Tracking with Space-Time Multi-Scale Attention Network

DroneCrowd Paper Detection, Tracking, and Counting Meets Drones in Crowds: A Benchmark. Introduction This paper proposes a space-time multi-scale atte

External Attention Network

Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks paper : https://arxiv.org/abs/2105.02358 Jittor code will come soon

![[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.](https://github.com/cambridgeltl/sapbert/raw/main/sapbert_front_graphs_v6.png?raw=true)

[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.

SapBERT: Self-alignment pretraining for BERT This repo holds code for the SapBERT model presented in our NAACL 2021 paper: Self-Alignment Pretraining

Exploring whether attention is necessary for vision transformers

Do You Even Need Attention? A Stack of Feed-Forward Layers Does Surprisingly Well on ImageNet Paper/Report TL;DR We replace the attention layer in a v

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

Twins: Revisiting the Design of Spatial Attention in Vision Transformers

Twins: Revisiting the Design of Spatial Attention in Vision Transformers Very recently, a variety of vision transformer architectures for dense predic

Reimplementation of the paper `Human Attention Maps for Text Classification: Do Humans and Neural Networks Focus on the Same Words? (ACL2020)`

Human Attention for Text Classification Re-implementation of the paper Human Attention Maps for Text Classification: Do Humans and Neural Networks Foc

Implementation of "Distribution Alignment: A Unified Framework for Long-tail Visual Recognition"(CVPR 2021)

Implementation of "Distribution Alignment: A Unified Framework for Long-tail Visual Recognition"(CVPR 2021)

notebookJS: seamless JavaScript integration in Python Notebooks

notebookJS enables the execution of custom JavaScript code in Python Notebooks (Jupyter Notebook and Google Colab). This Python library can be useful for implementing and reusing interactive Data Visualizations in the Notebook environment.

A Python package that provides evaluation and visualization tools for the DexYCB dataset

DexYCB Toolkit DexYCB Toolkit is a Python package that provides evaluation and visualization tools for the DexYCB dataset. The dataset and results wer

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Implementation of Convolutional enhanced image Transformer

CeiT : Convolutional enhanced image Transformer This is an unofficial PyTorch implementation of Incorporating Convolution Designs into Visual Transfor

SummVis is an interactive visualization tool for text summarization.

SummVis is an interactive visualization tool for analyzing abstractive summarization model outputs and datasets.

Implementation of Perceiver, General Perception with Iterative Attention in TensorFlow

Perceiver This Python package implements Perceiver: General Perception with Iterative Attention by Andrew Jaegle in TensorFlow. This model builds on t

The official pytorch implementation of our paper "Is Space-Time Attention All You Need for Video Understanding?"

TimeSformer This is an official pytorch implementation of Is Space-Time Attention All You Need for Video Understanding?. In this repository, we provid

Visualization Toolbox for Long Short Term Memory networks (LSTMs)

Visualization Toolbox for Long Short Term Memory networks (LSTMs)

Visualize classified time series data with interactive Sankey plots in Google Earth Engine

sankee Visualize changes in classified time series data with interactive Sankey plots in Google Earth Engine Contents Description Installation Using P

Visualization toolkit for neural networks in PyTorch! Demo --

FlashTorch A Python visualization toolkit, built with PyTorch, for neural networks in PyTorch. Neural networks are often described as "black box". The

:fire: 2D and 3D Face alignment library build using pytorch

Face Recognition Detect facial landmarks from Python using the world's most accurate face alignment network, capable of detecting points in both 2D an

Devkit for 3D -- Some utils for 3D object detection based on Numpy and Pytorch

D3D Devkit for 3D: Some utils for 3D object detection and tracking based on Numpy and Pytorch Please consider siting my work if you find this library

Code for the paper "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021)

MASTER-PyTorch PyTorch reimplementation of "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021). This projec

Data Visualization Guide for Presentations, Reports, and Dashboards

This is a highly practical and example-based guide on visually representing data in reports and dashboards.

Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch

Cross Transformers - Pytorch (wip) Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch Install $ pip install cross-t

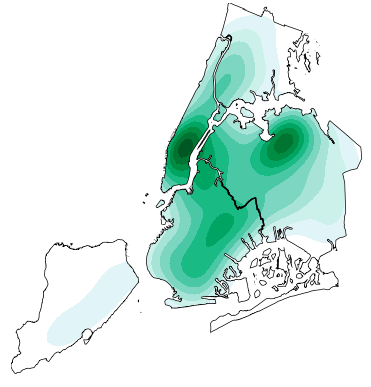

A Jupyter - Leaflet.js bridge

ipyleaflet A Jupyter / Leaflet bridge enabling interactive maps in the Jupyter notebook. Usage Selecting a basemap for a leaflet map: Loading a geojso

High-level geospatial data visualization library for Python.

geoplot: geospatial data visualization geoplot is a high-level Python geospatial plotting library. It's an extension to cartopy and matplotlib which m

Satellite imagery for dummies.

felicette Satellite imagery for dummies. What can you do with this tool? TL;DR: Generate JPEG earth imagery from coordinates/location name with public

OSMnx: Python for street networks. Retrieve, model, analyze, and visualize street networks and other spatial data from OpenStreetMap.

OSMnx OSMnx is a Python package that lets you download geospatial data from OpenStreetMap and model, project, visualize, and analyze real-world street

Net2Vis automatically generates abstract visualizations for convolutional neural networks from Keras code.

Automatic neural network visualizations generated in your browser!

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Apache Superset is a Data Visualization and Data Exploration Platform

Apache Superset is a Data Visualization and Data Exploration Platform

Hands-on machine learning workshop

emb-ntua-workshop This workshop discusses introductory concepts of machine learning and data mining following a hands-on approach using popular tools

Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GanFormer and TransGan paper

TransGanFormer (wip) Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GansFormer and TransGan paper. I

NetworkX is a Python package for the creation, manipulation, and study of the structure, dynamics, and functions of complex networks.

NetworkX is a Python package for the creation, manipulation, and study of the structure, dynamics, and functions of complex networks.

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Code for "3D Human Pose and Shape Regression with Pyramidal Mesh Alignment Feedback Loop"

PyMAF This repository contains the code for the following paper: 3D Human Pose and Shape Regression with Pyramidal Mesh Alignment Feedback Loop Hongwe

Code for the paper "Graph Attention Tracking". (CVPR2021)

SiamGAT 1. Environment setup This code has been tested on Ubuntu 16.04, Python 3.5, Pytorch 1.2.0, CUDA 9.0. Please install related libraries before r

Code for paper "A Critical Assessment of State-of-the-Art in Entity Alignment" (https://arxiv.org/abs/2010.16314)

A Critical Assessment of State-of-the-Art in Entity Alignment This repository contains the source code for the paper A Critical Assessment of State-of

Implementation of the Swin Transformer in PyTorch.

Swin Transformer - PyTorch Implementation of the Swin Transformer architecture. This paper presents a new vision Transformer, called Swin Transformer,

Implementation of STAM (Space Time Attention Model), a pure and simple attention model that reaches SOTA for video classification

STAM - Pytorch Implementation of STAM (Space Time Attention Model), yet another pure and simple SOTA attention model that bests all previous models in

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Lambda Networks - Pytorch Implementation of λ Networks, a new approach to image recognition that reaches SOTA on ImageNet. The new method utilizes λ l

An implementation of Performer, a linear attention-based transformer, in Pytorch

Performer - Pytorch An implementation of Performer, a linear attention-based transformer variant with a Fast Attention Via positive Orthogonal Random

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

Simple, concise geographical visualization in Python

Geographic visualizations for HoloViews. Build Status Coverage Latest dev release Latest release Docs What is it? GeoViews is a Python library that ma

A Jupyter - Leaflet.js bridge

ipyleaflet A Jupyter / Leaflet bridge enabling interactive maps in the Jupyter notebook. Usage Selecting a basemap for a leaflet map: Loading a geojso

WebGL2 powered geospatial visualization layers

deck.gl | Website WebGL2-powered, highly performant large-scale data visualization deck.gl is designed to simplify high-performance, WebGL-based visua

git《Self-Attention Attribution: Interpreting Information Interactions Inside Transformer》(AAAI 2021) GitHub:

Self-Attention Attribution This repository contains the implementation for AAAI-2021 paper Self-Attention Attribution: Interpreting Information Intera

The open source code of SA-UNet: Spatial Attention U-Net for Retinal Vessel Segmentation.

SA-UNet: Spatial Attention U-Net for Retinal Vessel Segmentation(ICPR 2020) Overview This code is for the paper: Spatial Attention U-Net for Retinal V

Implementation of the 😇 Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones

HaloNet - Pytorch Implementation of the Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones. This re

Visual Automata is a Python 3 library built as a wrapper for Caleb Evans' Automata library to add more visualization features.

Visual Automata Copyright 2021 Lewi Lie Uberg Released under the MIT license Visual Automata is a Python 3 library built as a wrapper for Caleb Evans'

Visual Attention based OCR

Attention-OCR Authours: Qi Guo and Yuntian Deng Visual Attention based OCR. The model first runs a sliding CNN on the image (images are resized to hei

A Tensorflow model for text recognition (CNN + seq2seq with visual attention) available as a Python package and compatible with Google Cloud ML Engine.

Attention-based OCR Visual attention-based OCR model for image recognition with additional tools for creating TFRecords datasets and exporting the tra

🖺 OCR using tensorflow with attention

tensorflow-ocr 🖺 OCR using tensorflow with attention, batteries included Installation git clone --recursive http://github.com/pannous/tensorflow-ocr

Single Shot Text Detector with Regional Attention

Single Shot Text Detector with Regional Attention Introduction SSTD is initially described in our ICCV 2017 spotlight paper. A third-party implementat

Implement 'Single Shot Text Detector with Regional Attention, ICCV 2017 Spotlight'

SSTDNet Implement 'Single Shot Text Detector with Regional Attention, ICCV 2017 Spotlight' using pytorch. This code is work for general object detecti

textspotter - An End-to-End TextSpotter with Explicit Alignment and Attention

An End-to-End TextSpotter with Explicit Alignment and Attention This is initially described in our CVPR 2018 paper. Getting Started Installation Clone

Pytorch implementation of PSEnet with Pyramid Attention Network as feature extractor

Scene Text-Spotting based on PSEnet+CRNN Pytorch implementation of an end to end Text-Spotter with a PSEnet text detector and CRNN text recognizer. We

MORAN: A Multi-Object Rectified Attention Network for Scene Text Recognition

MORAN: A Multi-Object Rectified Attention Network for Scene Text Recognition Python 2.7 Python 3.6 MORAN is a network with rectification mechanism for

Adaptive Attention Span for Reinforcement Learning

Adaptive Transformers in RL Official implementation of Adaptive Transformers in RL In this work we replicate several results from Stabilizing Transfor

CVPR 2021: "Generating Diverse Structure for Image Inpainting With Hierarchical VQ-VAE"

Diverse Structure Inpainting ArXiv | Papar | Supplementary Material | BibTex This repository is for the CVPR 2021 paper, "Generating Diverse Structure

![[ICLR 2021] Is Attention Better Than Matrix Decomposition?](https://github.com/Gsunshine/Enjoy-Hamburger/raw/main/assets/Hamburger.jpg)

[ICLR 2021] Is Attention Better Than Matrix Decomposition?

Enjoy-Hamburger 🍔 Official implementation of Hamburger, Is Attention Better Than Matrix Decomposition? (ICLR 2021) Under construction. Introduction T

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

git-cola: The highly caffeinated Git GUI

git-cola: The highly caffeinated Git GUI git-cola is a powerful Git GUI with a slick and intuitive user interface. Copyright (C) 2007-2020, David Agu

Official implementation of Self-supervised Graph Attention Networks (SuperGAT), ICLR 2021.

SuperGAT Official implementation of Self-supervised Graph Attention Networks (SuperGAT). This model is presented at How to Find Your Friendly Neighbor

Object-Centric Learning with Slot Attention

Slot Attention This is a re-implementation of "Object-Centric Learning with Slot Attention" in PyTorch (https://arxiv.org/abs/2006.15055). Requirement