461 Repositories

Python attention-approximates-sdm Libraries

Attention-based Transformation from Latent Features to Point Clouds (AAAI 2022)

Attention-based Transformation from Latent Features to Point Clouds This repository contains a PyTorch implementation of the paper: Attention-based Tr

Bottom-up attention model for image captioning and VQA, based on Faster R-CNN and Visual Genome

bottom-up-attention This code implements a bottom-up attention model, based on multi-gpu training of Faster R-CNN with ResNet-101, using object and at

DeepSpamReview: Detection of Fake Reviews on Online Review Platforms using Deep Learning Architectures. Summer Internship project at CoreView Systems.

Detection of Fake Reviews on Online Review Platforms using Deep Learning Architectures Dataset: https://s3.amazonaws.com/fast-ai-nlp/yelp_review_polar

SAFL: A Self-Attention Scene Text Recognizer with Focal Loss

SAFL: A Self-Attention Scene Text Recognizer with Focal Loss This repository implements the SAFL in pytorch. Installation conda env create -f environm

DAGAN - Dual Attention GANs for Semantic Image Synthesis

Contents Semantic Image Synthesis with DAGAN Installation Dataset Preparation Generating Images Using Pretrained Model Train and Test New Models Evalu

AttentionGAN for Unpaired Image-to-Image Translation & Multi-Domain Image-to-Image Translation

AttentionGAN-v2 for Unpaired Image-to-Image Translation AttentionGAN-v2 Framework The proposed generator learns both foreground and background attenti

A unified framework to jointly model images, text, and human attention traces.

connect-caption-and-trace This repository contains the reference code for our paper Connecting What to Say With Where to Look by Modeling Human Attent

![Implementation of 'X-Linear Attention Networks for Image Captioning' [CVPR 2020]](https://github.com/JDAI-CV/image-captioning/raw/master/images/framework.jpg)

Implementation of 'X-Linear Attention Networks for Image Captioning' [CVPR 2020]

Introduction This repository is for X-Linear Attention Networks for Image Captioning (CVPR 2020). The original paper can be found here. Please cite wi

Code for paper Adaptively Aligned Image Captioning via Adaptive Attention Time

Adaptively Aligned Image Captioning via Adaptive Attention Time This repository includes the implementation for Adaptively Aligned Image Captioning vi

Tensorflow implementation of soft-attention mechanism for video caption generation.

SA-tensorflow Tensorflow implementation of soft-attention mechanism for video caption generation. An example of soft-attention mechanism. The attentio

Show-attend-and-tell - TensorFlow Implementation of "Show, Attend and Tell"

Show, Attend and Tell Update (December 2, 2016) TensorFlow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attent

Image captioning - Tensorflow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Introduction This neural system for image captioning is roughly based on the paper "Show, Attend and Tell: Neural Image Caption Generation with Visual

![[3DV 2021] Channel-Wise Attention-Based Network for Self-Supervised Monocular Depth Estimation](https://github.com/kamiLight/CADepth-master/raw/main/images/Quantitative_result.png)

[3DV 2021] Channel-Wise Attention-Based Network for Self-Supervised Monocular Depth Estimation

Channel-Wise Attention-Based Network for Self-Supervised Monocular Depth Estimation This is the official implementation for the method described in Ch

AlexaUsingPython - Alexa will pay attention to your order, as: Hello Alexa, play music, Hello Alexa

AlexaUsingPython - Alexa will pay attention to your order, as: Hello Alexa, play music, Hello Alexa, what's the time? Alexa will pay attention to your order, get it, and afterward do some activity as indicated by your order.

Awesome Graph Classification - A collection of important graph embedding, classification and representation learning papers with implementations.

A collection of graph classification methods, covering embedding, deep learning, graph kernel and factorization papers

Transformer - A TensorFlow Implementation of the Transformer: Attention Is All You Need

[UPDATED] A TensorFlow Implementation of Attention Is All You Need When I opened this repository in 2017, there was no official code yet. I tried to i

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentation, CVPR 2020 (Oral)

SEAM The implementation of Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentaion. You can also download the repos

A PyTorch implementation of "SelfGNN: Self-supervised Graph Neural Networks without explicit negative sampling"

SelfGNN A PyTorch implementation of "SelfGNN: Self-supervised Graph Neural Networks without explicit negative sampling" paper, which will appear in Th

Source code of the "Graph-Bert: Only Attention is Needed for Learning Graph Representations" paper

Graph-Bert Source code of "Graph-Bert: Only Attention is Needed for Learning Graph Representations". Please check the script.py as the entry point. We

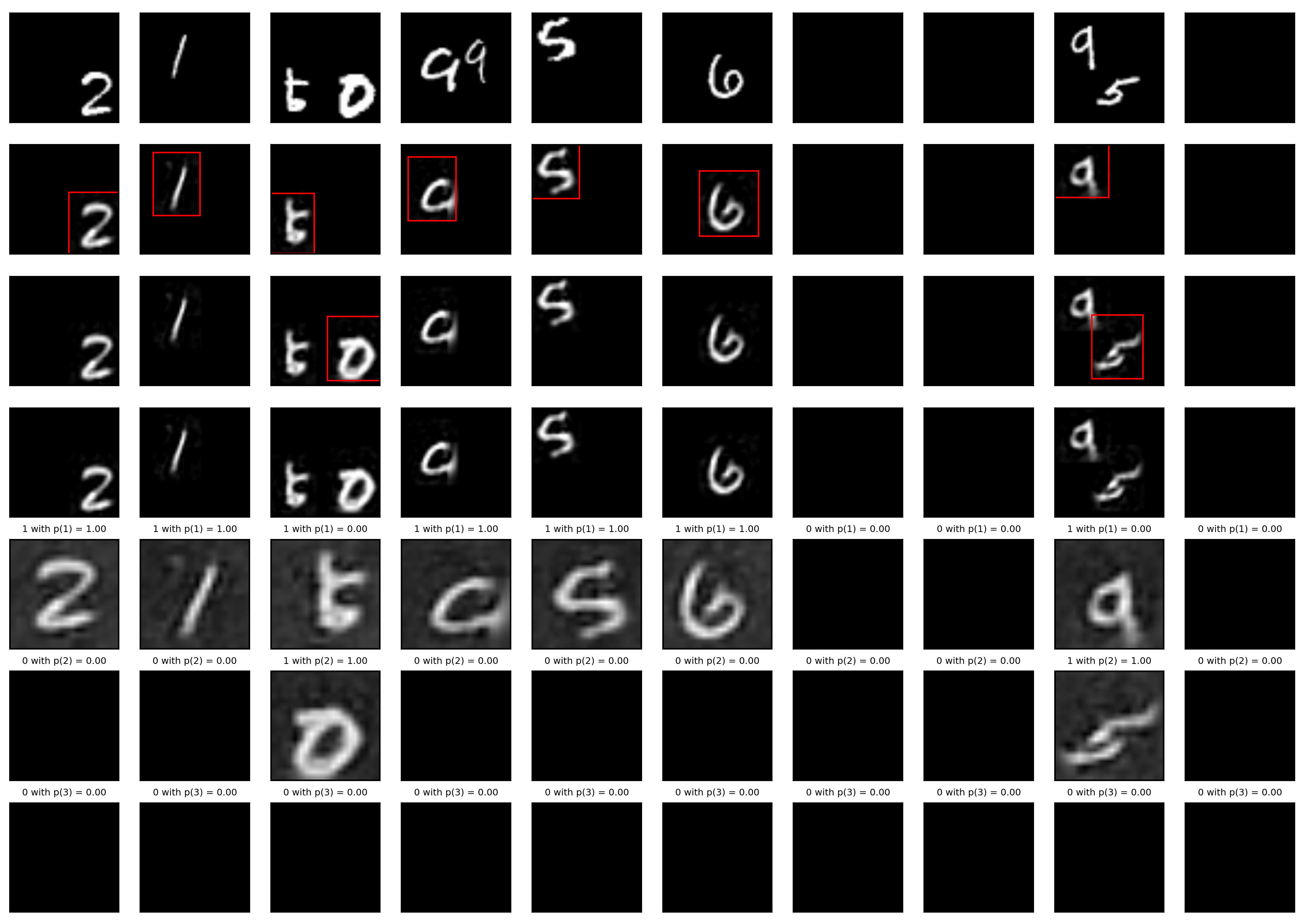

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

The code for SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network.

SAG-DTA The code is the implementation for the paper 'SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network'. Requirements py

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

Attention for PyTorch with Linear Memory Footprint

Attention for PyTorch with Linear Memory Footprint Unofficially implements https://arxiv.org/abs/2112.05682 to get Linear Memory Cost on Attention (+

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

Learning hierarchical attention for weakly-supervised chest X-ray abnormality localization and diagnosis

Hierarchical Attention Mining (HAM) for weakly-supervised abnormality localization This is the official PyTorch implementation for the HAM method. Pap

Multi-Probe Attention for Semantic Indexing

Multi-Probe Attention for Semantic Indexing About This project is developed for the topic of COVID-19 semantic indexing. Directories & files A. The di

A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory"

memory_efficient_attention.pytorch A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory" (Rabe&Staats'21). def effic

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation Introduction WAKD is a PyTorch implementation for our ICPR-2022 pap

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python)

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python) 日本語は以下に続きます (Japanese follows) English: This book is written in Japanese and primaril

Source code of D-HAN: Dynamic News Recommendation with Hierarchical Attention Network

D-HAN The source code of D-HAN This is the source code of D-HAN: Dynamic News Recommendation with Hierarchical Attention Network. However, only the co

Codes for “A Deeply Supervised Attention Metric-Based Network and an Open Aerial Image Dataset for Remote Sensing Change Detection”

DSAMNet The pytorch implementation for "A Deeply-supervised Attention Metric-based Network and an Open Aerial Image Dataset for Remote Sensing Change

CoANet: Connectivity Attention Network for Road Extraction From Satellite Imagery

CoANet: Connectivity Attention Network for Road Extraction From Satellite Imagery This paper (CoANet) has been published in IEEE TIP 2021. This code i

Official Pytorch implementation of the paper: "Locally Shifted Attention With Early Global Integration"

Locally-Shifted-Attention-With-Early-Global-Integration Pretrained models You can download all the models from here. Training Imagenet python -m torch

A-ESRGAN aims to provide better super-resolution images by using multi-scale attention U-net discriminators.

A-ESRGAN: Training Real-World Blind Super-Resolution with Attention-based U-net Discriminators The authors are hidden for the purpose of double blind

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition"

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition" Pre-trained Deep Convo

TensorFlow Implementation of "Show, Attend and Tell"

Show, Attend and Tell Update (December 2, 2016) TensorFlow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attent

![[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation](https://github.com/Ha0Tang/SelectionGAN/raw/master/imgs/motivation.jpg)

[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation

SelectionGAN for Guided Image-to-Image Translation CVPR Paper | Extended Paper | Guided-I2I-Translation-Papers Citation If you use this code for your

Memory Efficient Attention (O(sqrt(n)) for Jax and PyTorch

Memory Efficient Attention This is unofficial implementation of Self-attention Does Not Need O(n^2) Memory for Jax and PyTorch. Implementation is almo

Code for the paper Progressive Pose Attention for Person Image Generation in CVPR19 (Oral).

Pose-Transfer Code for the paper Progressive Pose Attention for Person Image Generation in CVPR19(Oral). The paper is available here. Video generation

The official implementation of the Hybrid Self-Attention NEAT algorithm

PUREPLES - Pure Python Library for ES-HyperNEAT About This is a library of evolutionary algorithms with a focus on neuroevolution, implemented in pure

GANformer: Generative Adversarial Transformers

GANformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick Update: We released the new GANformer2 paper! *I wish to thank Ch

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

Nystromformer: A Nystrom-based Algorithm for Approximating Self-Attention

Nystromformer: A Nystrom-based Algorithm for Approximating Self-Attention April 6, 2021 We extended segment-means to compute landmarks without requiri

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

An implementation of the Pay Attention when Required transformer

Pay Attention when Required (PAR) Transformer-XL An implementation of the Pay Attention when Required transformer from the paper: https://arxiv.org/pd

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

Reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer: Self-Attention with Linear Complexity)

Linear Multihead Attention (Linformer) PyTorch Implementation of reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer:

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Examples of using sparse attention, as in "Generating Long Sequences with Sparse Transformers"

Status: Archive (code is provided as-is, no updates expected) Update August 2020: For an example repository that achieves state-of-the-art modeling pe

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

[ACM MM 2021] Yes, "Attention is All You Need", for Exemplar based Colorization

Transformer for Image Colorization This is an implemention for Yes, "Attention Is All You Need", for Exemplar based Colorization, and the current soft

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2 Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexan

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm. It contains the code to reproduce the results presented in the original paper: https://arxiv.org/abs/2112.03670

TRACER: Extreme Attention Guided Salient Object Tracing Network implementation in PyTorch

TRACER: Extreme Attention Guided Salient Object Tracing Network This paper was accepted at AAAI 2022 SA poster session. Datasets All datasets are avai

A repository with exploration into using transformers to predict DNA ↔ transcription factor binding

Transcription Factor binding predictions with Attention and Transformers A repository with exploration into using transformers to predict DNA ↔ transc

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

Implementation of Heterogeneous Graph Attention Network

HetGAN Implementation of Heterogeneous Graph Attention Network This is the code repository of paper "Prediction of Metro Ridership During the COVID-19

Official implementation of the paper Do pedestrians pay attention? Eye contact detection for autonomous driving

Do pedestrians pay attention? Eye contact detection for autonomous driving Official implementation of the paper Do pedestrians pay attention? Eye cont

A Dynamic Residual Self-Attention Network for Lightweight Single Image Super-Resolution

DRSAN A Dynamic Residual Self-Attention Network for Lightweight Single Image Super-Resolution Karam Park, Jae Woong Soh, and Nam Ik Cho Environments U

Codes for TIM2021 paper "Anchor-Based Spatio-Temporal Attention 3-D Convolutional Networks for Dynamic 3-D Point Cloud Sequences"

Codes for TIM2021 paper "Anchor-Based Spatio-Temporal Attention 3-D Convolutional Networks for Dynamic 3-D Point Cloud Sequences"

Pytorch Implementations of large number classical backbone CNNs, data enhancement, torch loss, attention, visualization and some common algorithms.

Torch-template-for-deep-learning Pytorch implementations of some **classical backbone CNNs, data enhancement, torch loss, attention, visualization and

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

Markov Attention Models

Introduction This repo contains code for reproducing the results in the paper Graphical Models with Attention for Context-Specific Independence and an

Python implementation of ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images, AAAI2022.

ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images Binh M. Le & Simon S. Woo, "ADD:

Bayesian Deep Learning and Deep Reinforcement Learning for Object Shape Error Response and Correction of Manufacturing Systems

Bayesian Deep Learning for Manufacturing 2.0 (dlmfg) Object Shape Error Response (OSER) Digital Lifecycle Management - In Process Quality Improvement

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design"

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design". CoordAttention tensorflow slim

Robust Lane Detection via Expanded Self Attention (WACV 2022)

Robust Lane Detection via Expanded Self Attention (WACV 2022) Minhyeok Lee, Junhyeop Lee, Dogyoon Lee, Woojin Kim, Sangwon Hwang, Sangyoun Lee Overvie

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

Neural Caption Generator with Attention

Neural Caption Generator with Attention Tensorflow implementation of "Show

TensorFlow implementation of the paper "Hierarchical Attention Networks for Document Classification"

Hierarchical Attention Networks for Document Classification This is an implementation of the paper Hierarchical Attention Networks for Document Classi

Hierarchical Attentive Recurrent Tracking

Hierarchical Attentive Recurrent Tracking This is an official Tensorflow implementation of single object tracking in videos by using hierarchical atte

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

Official Pytorch Implementation of Relational Self-Attention: What's Missing in Attention for Video Understanding

Relational Self-Attention: What's Missing in Attention for Video Understanding This repository is the official implementation of "Relational Self-Atte

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds PCAM: Product of Cross-Attention Matrices for Rigid Registration of P

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

(ACL-IJCNLP 2021) Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models.

BERT Convolutions Code for the paper Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models. Contains expe

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

A PyTorch Implementation of PGL-SUM from "Combining Global and Local Attention with Positional Encoding for Video Summarization", Proc. IEEE ISM 2021

PGL-SUM: Combining Global and Local Attention with Positional Encoding for Video Summarization PyTorch Implementation of PGL-SUM From "PGL-SUM: Combin

Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving

GSAN Introduction Code for paper GSAN: Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving, wh

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

A package with multiple bias correction methods for climatic variables, including the QM, DQM, QDM, UQM, and SDM methods

A package with multiple bias correction methods for climatic variables, including the QM, DQM, QDM, UQM, and SDM methods

BERT Attention Analysis

BERT Attention Analysis This repository contains code for What Does BERT Look At? An Analysis of BERT's Attention. It includes code for getting attent

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust.

Subspace Adversarial Training Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust. However,

Image Captioning using CNN ,LSTM and Attention

Image Captioning using CNN ,LSTM and Attention This is a deeplearning model which tries to summarize an image into a text . Installation Install this

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Official repository for Natural Image Matting via Guided Contextual Attention

GCA-Matting: Natural Image Matting via Guided Contextual Attention The source codes and models of Natural Image Matting via Guided Contextual Attentio

Prototypical Cross-Attention Networks for Multiple Object Tracking and Segmentation, NeurIPS 2021 Spotlight

PCAN for Multiple Object Tracking and Segmentation This is the offical implementation of paper PCAN for MOTS. We also present a trailer that consists

Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2

CoaDTI Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2 Abstract Environment The test was conducted i

Revisiting Video Saliency: A Large-scale Benchmark and a New Model (CVPR18, PAMI19)

DHF1K =========================================================================== Wenguan Wang, J. Shen, M.-M Cheng and A. Borji, Revisiting Video Sal

Deep Reinforced Attention Regression for Partial Sketch Based Image Retrieval.

DARP-SBIR Intro This repository contains the source code implementation for ICDM submission paper Deep Reinforced Attention Regression for Partial Ske