640 Repositories

Python dynamic-attention Libraries

Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memories using approximate nearest neighbors, in Pytorch

Memorizing Transformers - Pytorch Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memori

[CVPR 2022 Oral] MixFormer: End-to-End Tracking with Iterative Mixed Attention

MixFormer The official implementation of the CVPR 2022 paper MixFormer: End-to-End Tracking with Iterative Mixed Attention [Models and Raw results] (G

OcclusionFusion: realtime dynamic 3D reconstruction based on single-view RGB-D

OcclusionFusion (CVPR'2022) Project Page | Paper | Video Overview This repository contains the code for the CVPR 2022 paper OcclusionFusion, where we

Official implementation for "Style Transformer for Image Inversion and Editing" (CVPR 2022)

Style Transformer for Image Inversion and Editing (CVPR2022) https://arxiv.org/abs/2203.07932 Existing GAN inversion methods fail to provide latent co

Implementation of Deformable Attention in Pytorch from the paper "Vision Transformer with Deformable Attention"

Deformable Attention Implementation of Deformable Attention from this paper in Pytorch, which appears to be an improvement to what was proposed in DET

PyTorch implementation of U-TAE and PaPs for satellite image time series panoptic segmentation.

Panoptic Segmentation of Satellite Image Time Series with Convolutional Temporal Attention Networks (ICCV 2021) This repository is the official implem

This repo presents you the official code of "VISTA: Boosting 3D Object Detection via Dual Cross-VIew SpaTial Attention"

VISTA VISTA: Boosting 3D Object Detection via Dual Cross-VIew SpaTial Attention Shengheng Deng, Zhihao Liang, Lin Sun and Kui Jia* (*) Corresponding a

Implementation of the Transformer variant proposed in "Transformer Quality in Linear Time"

FLASH - Pytorch Implementation of the Transformer variant proposed in the paper Transformer Quality in Linear Time Install $ pip install FLASH-pytorch

A Text Attention Network for Spatial Deformation Robust Scene Text Image Super-resolution (CVPR2022)

A Text Attention Network for Spatial Deformation Robust Scene Text Image Super-resolution (CVPR2022) https://arxiv.org/abs/2203.09388 Jianqi Ma, Zheto

Code release for "BoxeR: Box-Attention for 2D and 3D Transformers"

BoxeR By Duy-Kien Nguyen, Jihong Ju, Olaf Booij, Martin R. Oswald, Cees Snoek. This repository is an official implementation of the paper BoxeR: Box-A

Curso práctico: NLP de cero a cien 🤗

Curso Práctico: NLP de cero a cien Comprende todos los conceptos y arquitecturas clave del estado del arte del NLP y aplícalos a casos prácticos utili

B-cos Networks: Attention is All we Need for Interpretability

Convolutional Dynamic Alignment Networks for Interpretable Classifications M. Böhle, M. Fritz, B. Schiele. B-cos Networks: Alignment is All we Need fo

Multi-Scale Temporal Frequency Convolutional Network With Axial Attention for Speech Enhancement

MTFAA-Net Unofficial PyTorch implementation of Baidu's MTFAA-Net: "Multi-Scale Temporal Frequency Convolutional Network With Axial Attention for Speec

Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch

CoCa - Pytorch Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch. They were able to elegantly fit in contras

![[ICLR 2022] DAB-DETR: Dynamic Anchor Boxes are Better Queries for DETR](https://github.com/IDEA-opensource/DAB-DETR/raw/main/figure/arch_new2.png)

[ICLR 2022] DAB-DETR: Dynamic Anchor Boxes are Better Queries for DETR

DAB-DETR This is the official pytorch implementation of our ICLR 2022 paper DAB-DETR. Authors: Shilong Liu, Feng Li, Hao Zhang, Xiao Yang, Xianbiao Qi

Boilerplate template formwork for a Python Flask application with Mysql,Build dynamic websites rapidly.

Overview English | 简体中文 How to Build dynamic web rapidly? We choose Formwork-Flask. Formwork is a highly packaged Flask Demo. It's intergrates various

![[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).](https://github.com/Graph-COM/GSAT/raw/main/data/arch.png)

[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).

Graph Stochastic Attention (GSAT) The official implementation of GSAT for our paper: Interpretable and Generalizable Graph Learning via Stochastic Att

Unsupervised phone and word segmentation using dynamic programming on self-supervised VQ features.

Unsupervised Phone and Word Segmentation using Vector-Quantized Neural Networks Overview Unsupervised phone and word segmentation on speech data is pe

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

Codes for "Efficient Long-Range Attention Network for Image Super-resolution"

ELAN Codes for "Efficient Long-Range Attention Network for Image Super-resolution", arxiv link. Dependencies & Installation Please refer to the follow

Official implementation of cosformer-attention in cosFormer: Rethinking Softmax in Attention

cosFormer Official implementation of cosformer-attention in cosFormer: Rethinking Softmax in Attention Update log 2022/2/28 Add core code License This

QuadTree Attention for Vision Transformers (ICLR2022)

This repository contains codes for quadtree attention. This repo contains codes for feature matching, image classficiation, object detection and seman

(CVPR 2022) Pytorch implementation of "Self-supervised transformers for unsupervised object discovery using normalized cut"

(CVPR 2022) TokenCut Pytorch implementation of Tokencut: Self-supervised Transformers for Unsupervised Object Discovery using Normalized Cut Yangtao W

.png)

Contextual Attention Network: Transformer Meets U-Net

Contextual Attention Network: Transformer Meets U-Net Contexual attention network for medical image segmentation with state of the art results on skin

Package towards building Explainable Forecasting and Nowcasting Models with State-of-the-art Deep Neural Networks and Dynamic Factor Model on Time Series data sets with single line of code. Also, provides utilify facility for time-series signal similarities matching, and removing noise from timeseries signals.

DeepXF: Explainable Forecasting and Nowcasting with State-of-the-art Deep Neural Networks and Dynamic Factor Model Also, verify TS signal similarities

SciFive: a text-text transformer model for biomedical literature

SciFive SciFive provided a Text-Text framework for biomedical language and natural language in NLP. Under the T5's framework and desrbibed in the pape

Official implementation for "QS-Attn: Query-Selected Attention for Contrastive Learning in I2I Translation" (CVPR 2022)

QS-Attn: Query-Selected Attention for Contrastive Learning in I2I Translation (CVPR2022) https://arxiv.org/abs/2203.08483 Unpaired image-to-image (I2I

Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capability)

Protein GLM (wip) Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capabil

Implementation of 🦩 Flamingo, state-of-the-art few-shot visual question answering attention net out of Deepmind, in Pytorch

🦩 Flamingo - Pytorch Implementation of Flamingo, state-of-the-art few-shot visual question answering attention net, in Pytorch. It will include the p

Implementation of the GVP-Transformer, which was used in the paper "Learning inverse folding from millions of predicted structures" for de novo protein design alongside Alphafold2

GVP Transformer (wip) Implementation of the GVP-Transformer, which was used in the paper Learning inverse folding from millions of predicted structure

Official Implementation of HRDA: Context-Aware High-Resolution Domain-Adaptive Semantic Segmentation

HRDA: Context-Aware High-Resolution Domain-Adaptive Semantic Segmentation by Lukas Hoyer, Dengxin Dai, and Luc Van Gool [Arxiv] [Paper] Overview Unsup

![[CVPRW 2022] Attentions Help CNNs See Better: Attention-based Hybrid Image Quality Assessment Network](https://github.com/IIGROUP/AHIQ/raw/main/Figures/architecture.png)

[CVPRW 2022] Attentions Help CNNs See Better: Attention-based Hybrid Image Quality Assessment Network

Attention Helps CNN See Better: Hybrid Image Quality Assessment Network [CVPRW 2022] Code for Hybrid Image Quality Assessment Network [paper] [code] T

code for paper"A High-precision Semantic Segmentation Method Combining Adversarial Learning and Attention Mechanism"

PyTorch implementation of UAGAN(U-net Attention Generative Adversarial Networks) This repository contains the source code for the paper "A High-precis

The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation"

SD-AANet The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation" [arxiv] Overview confi

Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch

MeMOT - Pytorch (wip) Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch. This paper is just one in a line of work, but importan

Attention-based CNN-LSTM and XGBoost hybrid model for stock prediction

Attention-based CNN-LSTM and XGBoost hybrid model for stock prediction Requirements The code has been tested running under Python 3.7.4, with the foll

Implementation of the Hybrid Perception Block and Dual-Pruned Self-Attention block from the ITTR paper for Image to Image Translation using Transformers

ITTR - Pytorch Implementation of the Hybrid Perception Block (HPB) and Dual-Pruned Self-Attention (DPSA) block from the ITTR paper for Image to Image

Implementation of a memory efficient multi-head attention as proposed in the paper, "Self-attention Does Not Need O(n²) Memory"

Memory Efficient Attention Pytorch Implementation of a memory efficient multi-head attention as proposed in the paper, Self-attention Does Not Need O(

Using this repository you can send mails to multiple recipients.Was created in support of Ukraine, to turn society`s attention to war.

mails-in-support-of-UA Using this repository you can send mails to multiple recipients.Was created in support of Ukraine, to turn society`s attention

Job-Recommend-Competition - Vectorwise Interpretable Attentions for Multimodal Tabular Data

SiD - Simple Deep Model Vectorwise Interpretable Attentions for Multimodal Tabul

Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration

This repo is for the paper: Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration The DAC environment is based on the Dynam

This repository contains the source code of Auto-Lambda and baselines from the paper, Auto-Lambda: Disentangling Dynamic Task Relationships.

Auto-Lambda This repository contains the source code of Auto-Lambda and baselines from the paper, Auto-Lambda: Disentangling Dynamic Task Relationship

Different steganography methods with examples and my own small image database

literally-the-most-useless-project [Different steganography methods with examples and my own small image database] This project currently contains thr

Pytorch implementation of Depth-conditioned Dynamic Message Propagation forMonocular 3D Object Detection

DDMP-3D Pytorch implementation of Depth-conditioned Dynamic Message Propagation forMonocular 3D Object Detection, a paper on CVPR2021. Instroduction T

End-to-end image captioning with EfficientNet-b3 + LSTM with Attention

Image captioning End-to-end image captioning with EfficientNet-b3 + LSTM with Attention Model is seq2seq model. In the encoder pretrained EfficientNet

HAIS_2GNN: 3D Visual Grounding with Graph and Attention

HAIS_2GNN: 3D Visual Grounding with Graph and Attention This repository is for the HAIS_2GNN research project. Tao Gu, Yue Chen Introduction The motiv

DCSAU-Net: A Deeper and More Compact Split-Attention U-Net for Medical Image Segmentation

DCSAU-Net: A Deeper and More Compact Split-Attention U-Net for Medical Image Segmentation By Qing Xu, Wenting Duan and Na He Requirements pytorch==1.1

Temporal Dynamic Convolutional Neural Network for Text-Independent Speaker Verification and Phonemetic Analysis

TDY-CNN for Text-Independent Speaker Verification Official implementation of Temporal Dynamic Convolutional Neural Network for Text-Independent Speake

To create a deep learning model which can explain the content of an image in the form of speech through caption generation with attention mechanism on Flickr8K dataset.

To create a deep learning model which can explain the content of an image in the form of speech through caption generation with attention mechanism on Flickr8K dataset.

A pure PyTorch implementation of the loss described in "Online Segment to Segment Neural Transduction"

ssnt-loss ℹ️ This is a WIP project. the implementation is still being tested. A pure PyTorch implementation of the loss described in "Online Segment t

An implementation of IMLE-Net: An Interpretable Multi-level Multi-channel Model for ECG Classification

IMLE-Net: An Interpretable Multi-level Multi-channel Model for ECG Classification The repostiory consists of the code, results and data set links for

Pytorch implementation of the paper "Enhancing Content Preservation in Text Style Transfer Using Reverse Attention and Conditional Layer Normalization"

Pytorch implementation of the paper "Enhancing Content Preservation in Text Style Transfer Using Reverse Attention and Conditional Layer Normalization"

Pytorch implementation of various High Dynamic Range (HDR) Imaging algorithms

Deep High Dynamic Range Imaging Benchmark This repository is the pytorch impleme

An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax

Simple Transformer An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax. Note: The only ex

Deep Ensembling with No Overhead for either Training or Testing: The All-Round Blessings of Dynamic Sparsity

[ICLR 2022] Deep Ensembling with No Overhead for either Training or Testing: The All-Round Blessings of Dynamic Sparsity by Shiwei Liu, Tianlong Chen, Zahra Atashgahi, Xiaohan Chen, Ghada Sokar, Elena Mocanu, Mykola Pechenizkiy, Zhangyang Wang, Decebal Constantin Mocanu

clustering moroccan stocks time series data using k-means with dtw (dynamic time warping)

Moroccan Stocks Clustering Context Hey! we don't always have to forecast time series am I right ? We use k-means to cluster about 70 moroccan stock pr

Neural Scene Flow Fields for Space-Time View Synthesis of Dynamic Scenes

Neural Scene Flow Fields PyTorch implementation of paper "Neural Scene Flow Fields for Space-Time View Synthesis of Dynamic Scenes", CVPR 2021 [Projec

A face dataset generator with out-of-focus blur detection and dynamic interval adjustment.

A face dataset generator with out-of-focus blur detection and dynamic interval adjustment.

PyTorch implementation for the paper Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime

Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime Created by Prarthana Bhattacharyya. Disclaimer: This is n

PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks

AttentionHTR PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks. Scene Text

Framework for training options with different attention mechanism and using them to solve downstream tasks.

Using Attention in HRL Framework for training options with different attention mechanism and using them to solve downstream tasks. Requirements GPU re

Official code of Team Yao at Multi-Modal-Fact-Verification-2022

Official code of Team Yao at Multi-Modal-Fact-Verification-2022 A Multi-Modal Fact Verification dataset released as part of the De-Factify workshop in

This repository is for Contrastive Embedding Distribution Refinement and Entropy-Aware Attention Network (CEDR)

CEDR This repository is for Contrastive Embedding Distribution Refinement and Entropy-Aware Attention Network (CEDR) introduced in the following paper

Dynamic vae - Dynamic VAE algorithm is used for anomaly detection of battery data

Dynamic VAE frame Automatic feature extraction can be achieved by probability di

Sinkformers: Transformers with Doubly Stochastic Attention

Code for the paper : "Sinkformers: Transformers with Doubly Stochastic Attention" Paper You will find our paper here. Compat This package has been dev

Human Dynamics from Monocular Video with Dynamic Camera Movements

Human Dynamics from Monocular Video with Dynamic Camera Movements Ri Yu, Hwangpil Park and Jehee Lee Seoul National University ACM Transactions on Gra

This is the repo of the manuscript "Dual-branch Attention-In-Attention Transformer for speech enhancement"

DB-AIAT: A Dual-branch attention-in-attention transformer for single-channel SE

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images [ICCV 2021] © Mahmood Lab - This code is made avail

Static Features Classifier - A static features classifier for Point-Could clusters using an Attention-RNN model

Static Features Classifier This is a static features classifier for Point-Could

Built a deep neural network (DNN) that functions as an end-to-end machine translation pipeline

Built a deep neural network (DNN) that functions as an end-to-end machine translation pipeline. The pipeline accepts english text as input and returns the French translation.

Pytorch implementation of ICASSP 2022 paper Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images Histological Image Segmentation This

AIDynamicTextReader - A simple dynamic text reader based on Artificial intelligence

AI Dynamic Text Reader: This is a simple dynamic text reader based on Artificial

Transformer in Vision

Transformer-in-Vision Recent Transformer-based CV and related works. Welcome to comment/contribute! Keep updated. Resource SCENIC: A JAX Library for C

A curated list of efficient attention modules

awesome-fast-attention A curated list of efficient attention modules

Dynamic Token Normalization Improves Vision Transformers

Dynamic Token Normalization Improves Vision Transformers This is the PyTorch implementation of the paper Dynamic Token Normalization Improves Vision T

GalaXC: Graph Neural Networks with Labelwise Attention for Extreme Classification

GalaXC GalaXC: Graph Neural Networks with Labelwise Attention for Extreme Classification @InProceedings{Saini21, author = {Saini, D. and Jain,

Seq2seq attn - Use the Seq2Seq method to implement machine translation and introduce Attention mechanism to improve the results

Seq2seq_attn Use the Seq2Seq method to implement machine translation and use the

Unofficial PyTorch Implementation of "Augmenting Convolutional networks with attention-based aggregation"

Pytorch Implementation of Augmenting Convolutional networks with attention-based aggregation This is the unofficial PyTorch Implementation of "Augment

Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechanism

Period-alternatives-of-Softmax Experimental Demo for our paper 'Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechani

Official PyTorch Implementation of paper EAN: Event Adaptive Network for Efficient Action Recognition

Official PyTorch Implementation of paper EAN: Event Adaptive Network for Efficient Action Recognition

Official implementation of "Refiner: Refining Self-attention for Vision Transformers".

RefinerViT This repo is the official implementation of "Refiner: Refining Self-attention for Vision Transformers". The repo is build on top of timm an

Dynamic Head: Unifying Object Detection Heads with Attentions

Dynamic Head: Unifying Object Detection Heads with Attentions dyhead_video.mp4 This is the official implementation of CVPR 2021 paper "Dynamic Head: U

RETRO-pytorch - Implementation of RETRO, Deepmind's Retrieval based Attention net, in Pytorch

RETRO - Pytorch (wip) Implementation of RETRO, Deepmind's Retrieval based Attent

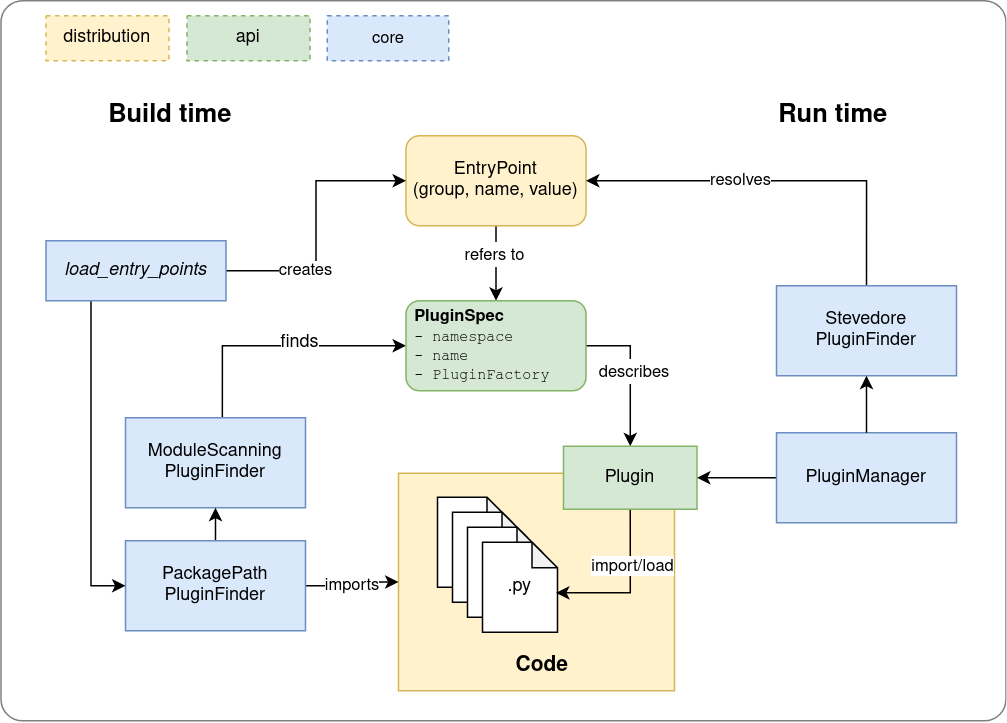

Plux - A dynamic code loading framework for building plugable Python distributions

Plux plux is the dynamic code loading framework used in LocalStack. Overview The

Implementation of Memory-Compressed Attention, from the paper "Generating Wikipedia By Summarizing Long Sequences"

Memory Compressed Attention Implementation of the Self-Attention layer of the proposed Memory-Compressed Attention, in Pytorch. This repository offers

Official PyTorch code for "BAM: Bottleneck Attention Module (BMVC2018)" and "CBAM: Convolutional Block Attention Module (ECCV2018)"

BAM and CBAM Official PyTorch code for "BAM: Bottleneck Attention Module (BMVC2018)" and "CBAM: Convolutional Block Attention Module (ECCV2018)" Updat

An implementation of the efficient attention module.

Efficient Attention An implementation of the efficient attention module. Description Efficient attention is an attention mechanism that substantially

This repository contains an implementation of the Permutohedral Attention Module in Pytorch

Permutohedral_attention_module This repository contains an implementation of the Permutohedral Attention Module

The code for Expectation-Maximization Attention Networks for Semantic Segmentation (ICCV'2019 Oral)

EMANet News The bug in loading the pretrained model is now fixed. I have updated the .pth. To use it, download it again. EMANet-101 gets 80.99 on the

Pytorch implementation of Compressive Transformers, from Deepmind

Compressive Transformer in Pytorch Pytorch implementation of Compressive Transformers, a variant of Transformer-XL with compressed memory for long-ran

Implementation of Axial attention - attending to multi-dimensional data efficiently

Axial Attention Implementation of Axial attention in Pytorch. A simple but powerful technique to attend to multi-dimensional data efficiently. It has

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch Reference Paper URL Author: Yi Tay, Dara Bahri, Donald Metzler

My take on a practical implementation of Linformer for Pytorch.

Linformer Pytorch Implementation A practical implementation of the Linformer paper. This is attention with only linear complexity in n, allowing for v

Implementation of Kronecker Attention in Pytorch

Kronecker Attention Pytorch Implementation of Kronecker Attention in Pytorch. Results look less than stellar, but if someone found some context where

Implementation of Memformer, a Memory-augmented Transformer, in Pytorch

Memformer - Pytorch Implementation of Memformer, a Memory-augmented Transformer, in Pytorch. It includes memory slots, which are updated with attentio

![[NeurIPS 2020] Official Implementation:](https://github.com/giannisdaras/smyrf/raw/master/visuals/quality_degradation.png)

[NeurIPS 2020] Official Implementation: "SMYRF: Efficient Attention using Asymmetric Clustering".

SMYRF: Efficient attention using asymmetric clustering Get started: Abstract We propose a novel type of balanced clustering algorithm to approximate a

An easy-to-learn, dynamic, interpreted, procedural programming language

Gen Programming Language WARNING!! THIS LANGUAGE IS IN DEVELOPMENT. ANYTHING CAN CHANGE AT ANY MOMENT. Gen is a dynamic, interpreted, procedural progr

![[ACM MM 2021] TSA-Net: Tube Self-Attention Network for Action Quality Assessment](https://github.com/Shunli-Wang/TSA-Net/raw/main/fig/TSA-Net.jpg)

[ACM MM 2021] TSA-Net: Tube Self-Attention Network for Action Quality Assessment

Tube Self-Attention Network (TSA-Net) This repository contains the PyTorch implementation for paper TSA-Net: Tube Self-Attention Network for Action Qu