565 Repositories

Python box-attention Libraries

OpenSource Poc && Vulnerable-Target Storage Box.

reapoc OpenSource Poc && Vulnerable-Target Storage Box. We are aming to collect different normalized poc and the vulerable target to verify it. Now re

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

Markov Attention Models

Introduction This repo contains code for reproducing the results in the paper Graphical Models with Attention for Context-Specific Independence and an

The code for "Deep Level Set for Box-supervised Instance Segmentation in Aerial Images".

Deep Levelset for Box-supervised Instance Segmentation in Aerial Images Wentong Li, Yijie Chen, Wenyu Liu, Jianke Zhu* This code is based on MMdetecti

Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal"

Patch-wise Adversarial Removal Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal

Python implementation of ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images, AAAI2022.

ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images Binh M. Le & Simon S. Woo, "ADD:

Bayesian Deep Learning and Deep Reinforcement Learning for Object Shape Error Response and Correction of Manufacturing Systems

Bayesian Deep Learning for Manufacturing 2.0 (dlmfg) Object Shape Error Response (OSER) Digital Lifecycle Management - In Process Quality Improvement

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design"

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design". CoordAttention tensorflow slim

Robust Lane Detection via Expanded Self Attention (WACV 2022)

Robust Lane Detection via Expanded Self Attention (WACV 2022) Minhyeok Lee, Junhyeop Lee, Dogyoon Lee, Woojin Kim, Sangwon Hwang, Sangyoun Lee Overvie

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

Neural Caption Generator with Attention

Neural Caption Generator with Attention Tensorflow implementation of "Show

Reinfore learning tool box, contains trpo, a3c algorithm for continous action space

RL_toolbox all the algorithm is running on pycharm IDE, or the package loss error may exist. implemented algorithm: trpo a3c a3c:for continous action

TensorFlow implementation of the paper "Hierarchical Attention Networks for Document Classification"

Hierarchical Attention Networks for Document Classification This is an implementation of the paper Hierarchical Attention Networks for Document Classi

Hierarchical Attentive Recurrent Tracking

Hierarchical Attentive Recurrent Tracking This is an official Tensorflow implementation of single object tracking in videos by using hierarchical atte

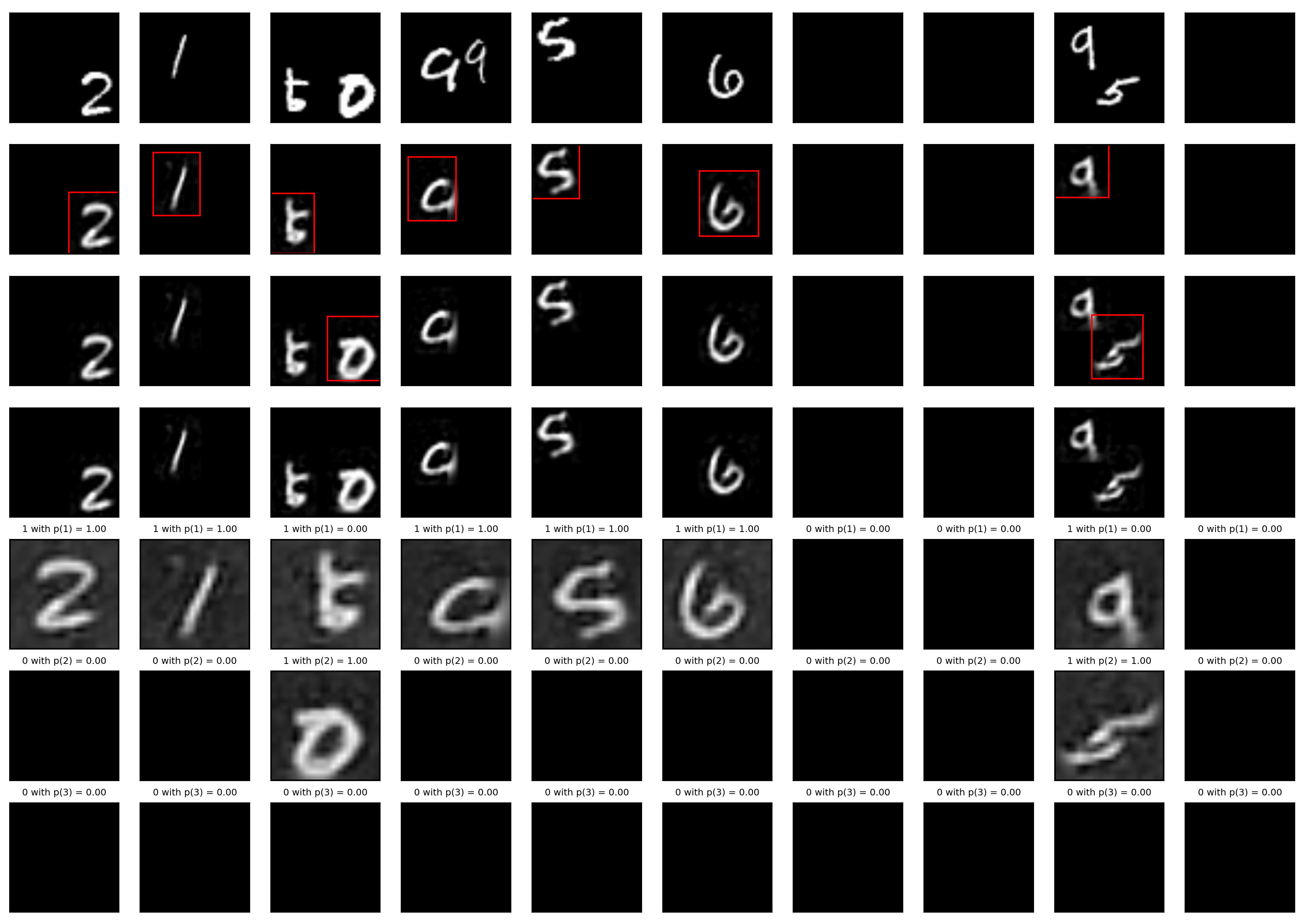

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

Official Pytorch Implementation of Relational Self-Attention: What's Missing in Attention for Video Understanding

Relational Self-Attention: What's Missing in Attention for Video Understanding This repository is the official implementation of "Relational Self-Atte

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds PCAM: Product of Cross-Attention Matrices for Rigid Registration of P

Confidence Propagation Cluster aims to replace NMS-based methods as a better box fusion framework in 2D/3D Object detection

CP-Cluster Confidence Propagation Cluster aims to replace NMS-based methods as a better box fusion framework in 2D/3D Object detection, Instance Segme

Python implementation of Bayesian optimization over permutation spaces.

Bayesian Optimization over Permutation Spaces This repository contains the source code and the resources related to the paper "Bayesian Optimization o

Code for "The Box Size Confidence Bias Harms Your Object Detector"

The Box Size Confidence Bias Harms Your Object Detector - Code Disclaimer: This repository is for research purposes only. It is designed to maintain r

Out-of-the-box support register, sign in, email verification and password recovery workflows for websites based on Django and MongoDB

Using djmongoauth What is it? djmongoauth provides out-of-the-box support for basic user management and additional operations including user registrat

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

Color box that provides various colors‘ rgb decimal code.

colorbox Color box that provides various colors‘ rgb decimal code

(ACL-IJCNLP 2021) Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models.

BERT Convolutions Code for the paper Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models. Contains expe

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

A PyTorch Implementation of PGL-SUM from "Combining Global and Local Attention with Positional Encoding for Video Summarization", Proc. IEEE ISM 2021

PGL-SUM: Combining Global and Local Attention with Positional Encoding for Video Summarization PyTorch Implementation of PGL-SUM From "PGL-SUM: Combin

Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving

GSAN Introduction Code for paper GSAN: Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving, wh

Code for "The Box Size Confidence Bias Harms Your Object Detector"

The Box Size Confidence Bias Harms Your Object Detector - Code Disclaimer: This repository is for research purposes only. It is designed to maintain r

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

BERT Attention Analysis

BERT Attention Analysis This repository contains code for What Does BERT Look At? An Analysis of BERT's Attention. It includes code for getting attent

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Optimizing Value-at-Risk and Conditional Value-at-Risk of Black Box Functions with Lacing Values (LV)

BayesOpt-LV Optimizing Value-at-Risk and Conditional Value-at-Risk of Black Box Functions with Lacing Values (LV) About This repository contains the s

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

Auditing Black-Box Prediction Models for Data Minimization Compliance

Data-Minimization-Auditor An auditing tool for model-instability based data minimization that is introduced in "Auditing Black-Box Prediction Models f

Behavioral "black-box" testing for recommender systems

RecList RecList Free software: MIT license Documentation: https://reclist.readthedocs.io. Overview RecList is an open source library providing behavio

Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust.

Subspace Adversarial Training Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust. However,

Image Captioning using CNN ,LSTM and Attention

Image Captioning using CNN ,LSTM and Attention This is a deeplearning model which tries to summarize an image into a text . Installation Install this

Tools to create pixel-wise object masks, bounding box labels (2D and 3D) and 3D object model (PLY triangle mesh) for object sequences filmed with an RGB-D camera.

Tools to create pixel-wise object masks, bounding box labels (2D and 3D) and 3D object model (PLY triangle mesh) for object sequences filmed with an RGB-D camera. This project prepares training and testing data for various deep learning projects such as 6D object pose estimation projects singleshotpose, as well as object detection and instance segmentation projects.

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

labelpix is a graphical image labeling interface for drawing bounding boxes

Welcome to labelpix 👋 labelpix is a graphical image labeling interface for drawing bounding boxes. 🏠 Homepage Install pip install -r requirements.tx

Official repository for Natural Image Matting via Guided Contextual Attention

GCA-Matting: Natural Image Matting via Guided Contextual Attention The source codes and models of Natural Image Matting via Guided Contextual Attentio

Prototypical Cross-Attention Networks for Multiple Object Tracking and Segmentation, NeurIPS 2021 Spotlight

PCAN for Multiple Object Tracking and Segmentation This is the offical implementation of paper PCAN for MOTS. We also present a trailer that consists

Minimum Bounding Box of Geospatial data

BBOX Problem definition: The spatial data users often are required to obtain the coordinates of the minimum bounding box of vector and raster data in

Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2

CoaDTI Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2 Abstract Environment The test was conducted i

Omnidirectional Scene Text Detection with Sequential-free Box Discretization (IJCAI 2019). Including competition model, online demo, etc.

Box_Discretization_Network This repository is built on the pytorch [maskrcnn_benchmark]. The method is the foundation of our ReCTs-competition method

Revisiting Video Saliency: A Large-scale Benchmark and a New Model (CVPR18, PAMI19)

DHF1K =========================================================================== Wenguan Wang, J. Shen, M.-M Cheng and A. Borji, Revisiting Video Sal

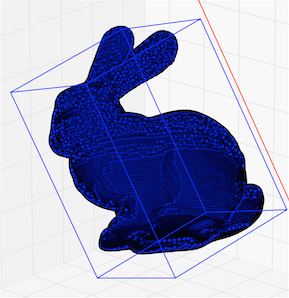

Fast algorithms to compute an approximation of the minimal volume oriented bounding box of a point cloud in 3D.

ApproxMVBB Status Build UnitTests Homepage Fast algorithms to compute an approximation of the minimal volume oriented bounding box of a point cloud in

RecList is an open source library providing behavioral, "black-box" testing for recommender systems.

RecList is an open source library providing behavioral, "black-box" testing for recommender systems.

Deep Reinforced Attention Regression for Partial Sketch Based Image Retrieval.

DARP-SBIR Intro This repository contains the source code implementation for ICDM submission paper Deep Reinforced Attention Regression for Partial Ske

A method that utilized Generative Adversarial Network (GAN) to interpret the black-box deep image classifier models by PyTorch.

A method that utilized Generative Adversarial Network (GAN) to interpret the black-box deep image classifier models by PyTorch.

Codebase for "ProtoAttend: Attention-Based Prototypical Learning."

Codebase for "ProtoAttend: Attention-Based Prototypical Learning." Authors: Sercan O. Arik and Tomas Pfister Paper: Sercan O. Arik and Tomas Pfister,

zeus is a Python implementation of the Ensemble Slice Sampling method.

zeus is a Python implementation of the Ensemble Slice Sampling method. Fast & Robust Bayesian Inference, Efficient Markov Chain Monte Carlo (MCMC), Bl

A denoising autoencoder + adversarial losses and attention mechanisms for face swapping.

faceswap-GAN Adding Adversarial loss and perceptual loss (VGGface) to deepfakes'(reddit user) auto-encoder architecture. Updates Date Update 2018-08-2

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch. Most of the models in NLP were implemented with less than 100 lines of code.(except comments or blank lines)

ByteTrack(Multi-Object Tracking by Associating Every Detection Box)のPythonでのONNX推論サンプル

ByteTrack-ONNX-Sample ByteTrack(Multi-Object Tracking by Associating Every Detection Box)のPythonでのONNX推論サンプルです。 ONNXに変換したモデルも同梱しています。 変換自体を試したい方はByteT

PyTorch implementation of Hierarchical Multi-label Text Classification: An Attention-based Recurrent Network

hierarchical-multi-label-text-classification-pytorch Hierarchical Multi-label Text Classification: An Attention-based Recurrent Network Approach This

You Only Sample (Almost) Once: Linear Cost Self-Attention Via Bernoulli Sampling

You Only Sample (Almost) Once: Linear Cost Self-Attention Via Bernoulli Sampling Transformer-based models are widely used in natural language processi

RAANet: Range-Aware Attention Network for LiDAR-based 3D Object Detection with Auxiliary Density Level Estimation

RAANet: Range-Aware Attention Network for LiDAR-based 3D Object Detection with Auxiliary Density Level Estimation Anonymous submission Abstract 3D obj

The implementation of DeBERTa

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Self-attentive task GAN for space domain awareness data augmentation.

SATGAN TODO: update the article URL once published. Article about this implemention The self-attentive task generative adversarial network (SATGAN) le

A PyTorch implementation of "CoAtNet: Marrying Convolution and Attention for All Data Sizes".

CoAtNet Overview This is a PyTorch implementation of CoAtNet specified in "CoAtNet: Marrying Convolution and Attention for All Data Sizes", arXiv 2021

Sky attention heatmap of submissions to astrometry.net

astroheat Installation Requires Python 3.6+, Tested with Python 3.9.5 Install library dependencies pip install -r requirements.txt The program require

Implementation of [Time in a Box: Advancing Knowledge Graph Completion with Temporal Scopes].

Time2box Implementation of [Time in a Box: Advancing Knowledge Graph Completion with Temporal Scopes].

Local Multi-Head Channel Self-Attention for FER2013

LHC-Net Local Multi-Head Channel Self-Attention This repository is intended to provide a quick implementation of the LHC-Net and to replicate the resu

Summary of related papers on visual attention

This repo is built for paper: Attention Mechanisms in Computer Vision: A Survey paper Vision-Attention-Papers Channel attention Spatial attention Temp

Official code for: A Probabilistic Hard Attention Model For Sequentially Observed Scenes

"A Probabilistic Hard Attention Model For Sequentially Observed Scenes" Authors: Samrudhdhi Rangrej, James Clark Accepted to: BMVC'21 A recurrent atte

moDel Agnostic Language for Exploration and eXplanation

moDel Agnostic Language for Exploration and eXplanation Overview Unverified black box model is the path to the failure. Opaqueness leads to distrust.

A Python module for parallel optimization of expensive black-box functions

blackbox: A Python module for parallel optimization of expensive black-box functions What is this? A minimalistic and easy-to-use Python module that e

Black box hyperparameter optimization made easy.

BBopt BBopt aims to provide the easiest hyperparameter optimization you'll ever do. Think of BBopt like Keras (back when Theano was still a thing) for

Implementation of Uniformer, a simple attention and 3d convolutional net that achieved SOTA in a number of video classification tasks

Uniformer - Pytorch Implementation of Uniformer, a simple attention and 3d convolutional net that achieved SOTA in a number of video classification ta

Code for our paper "Multi-scale Guided Attention for Medical Image Segmentation"

Medical Image Segmentation with Guided Attention This repository contains the code of our paper: "'Multi-scale self-guided attention for medical image

PSANet: Point-wise Spatial Attention Network for Scene Parsing, ECCV2018.

PSANet: Point-wise Spatial Attention Network for Scene Parsing (in construction) by Hengshuang Zhao*, Yi Zhang*, Shu Liu, Jianping Shi, Chen Change Lo

CCNet: Criss-Cross Attention for Semantic Segmentation (TPAMI 2020 & ICCV 2019).

CCNet: Criss-Cross Attention for Semantic Segmentation Paper Links: Our most recent TPAMI version with improvements and extensions (Earlier ICCV versi

Dual Attention Network for Scene Segmentation (CVPR2019)

Dual Attention Network for Scene Segmentation(CVPR2019) Jun Fu, Jing Liu, Haijie Tian, Yong Li, Yongjun Bao, Zhiwei Fang,and Hanqing Lu Introduction W

Use of Attention Gates in a Convolutional Neural Network / Medical Image Classification and Segmentation

Attention Gated Networks (Image Classification & Segmentation) Pytorch implementation of attention gates used in U-Net and VGG-16 models. The framewor

Segmentation-Aware Convolutional Networks Using Local Attention Masks

Segmentation-Aware Convolutional Networks Using Local Attention Masks [Project Page] [Paper] Segmentation-aware convolution filters are invariant to b

Pytorch implementation of U-Net, R2U-Net, Attention U-Net, and Attention R2U-Net.

pytorch Implementation of U-Net, R2U-Net, Attention U-Net, Attention R2U-Net U-Net: Convolutional Networks for Biomedical Image Segmentation https://a

![Models Supported: AlbUNet [18, 34, 50, 101, 152] (1D and 2D versions for Single and Multiclass Segmentation, Feature Extraction with supports for Deep Supervision and Guided Attention)](https://github.com/Sakib1263/AlbUNet-1D-2D-Tensorflow-Keras/raw/main/Documents/Images/AlbuNet.png)

Models Supported: AlbUNet [18, 34, 50, 101, 152] (1D and 2D versions for Single and Multiclass Segmentation, Feature Extraction with supports for Deep Supervision and Guided Attention)

AlbUNet-1D-2D-Tensorflow-Keras This repository contains 1D and 2D Signal Segmentation Model Builder for AlbUNet and several of its variants developed

Code for the paper "Attention Approximates Sparse Distributed Memory"

Attention Approximates Sparse Distributed Memory - Codebase This is all of the code used to run analyses in the paper "Attention Approximates Sparse D

An Indexer that works out-of-the-box when you have less than 100K stored Documents

U100KIndexer An Indexer that works out-of-the-box when you have less than 100K stored Documents. U100K means under 100K. At 100K stored Documents with

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers

hierarchical-transformer-1d Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers In Progress!! 2021.

This repo. is an implementation of ACFFNet, which is accepted for in Image and Vision Computing.

Attention-Guided-Contextual-Feature-Fusion-Network-for-Salient-Object-Detection This repo. is an implementation of ACFFNet, which is accepted for in I

Contextual Attention Localization for Offline Handwritten Text Recognition

CALText This repository contains the source code for CALText model introduced in "CALText: Contextual Attention Localization for Offline Handwritten T

Global-Local Attention for Emotion Recognition

Global-Local Attention for Emotion Recognition Requirements Python 3 Install tensorflow (or tensorflow-gpu) = 2.0.0 Install some other packages pip i

An implementation of Equivariant e2 convolutional kernals into a convolutional self attention network, applied to radio astronomy data.

EquivariantSelfAttention An implementation of Equivariant e2 convolutional kernals into a convolutional self attention network, applied to radio astro

CNN+Attention+Seq2Seq

Attention_OCR CNN+Attention+Seq2Seq The model and its tensor transformation are shown in the figure below It is necessary ch_ train and ch_ test the p

Liquid Warping GAN with Attention: A Unified Framework for Human Image Synthesis

Liquid Warping GAN with Attention: A Unified Framework for Human Image Synthesis, including human motion imitation, appearance transfer, and novel view synthesis. Currently the paper is under review of IEEE TPAMI. It is an extension of our previous ICCV project impersonator, and it has a more powerful ability in generalization and produces higher-resolution results (512 x 512, 1024 x 1024) than the previous ICCV version.

It's a implement of this paper:Relation extraction via Multi-Level attention CNNs

Relation Classification via Multi-Level Attention CNNs It's a implement of this paper:Relation Classification via Multi-Level Attention CNNs. Training

Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr\"om Method (NeurIPS 2021)

Skyformer This repository is the official implementation of Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr"om Method (NeurIPS 2021).

Benchmark library for high-dimensional HPO of black-box models based on Weighted Lasso regression

LassoBench LassoBench is a library for high-dimensional hyperparameter optimization benchmarks based on Weighted Lasso regression. Note: LassoBench is

Measuring if attention is explanation with ROAR

NLP ROAR Interpretability Official code for: Evaluating the Faithfulness of Importance Measures in NLP by Recursively Masking Allegedly Important Toke

A CROSS-MODAL FUSION NETWORK BASED ON SELF-ATTENTION AND RESIDUAL STRUCTURE FOR MULTIMODAL EMOTION RECOGNITION

CFN-SR A CROSS-MODAL FUSION NETWORK BASED ON SELF-ATTENTION AND RESIDUAL STRUCTURE FOR MULTIMODAL EMOTION RECOGNITION The audio-video based multimodal

Official implementation of "Multi-Glimpse Network: A Robust and Efficient Classification Architecture based on Recurrent Downsampled Attention" (BMVC 2021).

Multi-Glimpse Network Multi-Glimpse Network: A Robust and Efficient Classification Architecture based on Recurrent Downsampled Attention arXiv Require