1223 Repositories

Python dialogue-models Libraries

Multi-Task Pre-Training for Plug-and-Play Task-Oriented Dialogue System

Multi-Task Pre-Training for Plug-and-Play Task-Oriented Dialogue System Authors: Yixuan Su, Lei Shu, Elman Mansimov, Arshit Gupta, Deng Cai, Yi-An Lai

Library of deep learning models and datasets designed to make deep learning more accessible and accelerate ML research.

Tensor2Tensor Tensor2Tensor, or T2T for short, is a library of deep learning models and datasets designed to make deep learning more accessible and ac

This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model inference.

PyTorch Infer Utils This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model infer

The Easy-to-use Dialogue Response Selection Toolkit for Researchers

The Easy-to-use Dialogue Response Selection Toolkit for Researchers

💛 Code and Dataset for our EMNLP 2021 paper: "Perspective-taking and Pragmatics for Generating Empathetic Responses Focused on Emotion Causes"

Perspective-taking and Pragmatics for Generating Empathetic Responses Focused on Emotion Causes Official PyTorch implementation and EmoCause evaluatio

Hapi is a Python library for building Conceptual Distributed Model using HBV96 lumped model & Muskingum routing method

Current build status All platforms: Current release info Name Downloads Version Platforms Hapi - Hydrological library for Python Hapi is an open-sourc

The Multi-Mission Maximum Likelihood framework (3ML)

PyPi Conda The Multi-Mission Maximum Likelihood framework (3ML) A framework for multi-wavelength/multi-messenger analysis for astronomy/astrophysics.

Tangram makes it easy for programmers to train, deploy, and monitor machine learning models.

Tangram Website | Discord Tangram makes it easy for programmers to train, deploy, and monitor machine learning models. Run tangram train to train a mo

A practical and feature-rich paraphrasing framework to augment human intents in text form to build robust NLU models for conversational engines. Created by Prithiviraj Damodaran. Open to pull requests and other forms of collaboration.

Parrot Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more t

PyPI package for scaffolding out code for decision tree models that can learn to find relationships between the attributes of an object.

Decision Tree Writer This package allows you to train a binary classification decision tree on a list of labeled dictionaries or class instances, and

Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE)

OG-SPACE Introduction Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE) is a computational framewo

Analysis of rationale selection in neural rationale models

Neural Rationale Interpretability Analysis We analyze the neural rationale models proposed by Lei et al. (2016) and Bastings et al. (2019), as impleme

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees"

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees" Installa

The Easy-to-use Dialogue Response Selection Toolkit for Researchers

Easy-to-use toolkit for retrieval-based Chatbot Recent Activity Our released RRS corpus can be found here. Our released BERT-FP post-training checkpoi

Benchmarking the robustness of Spatial-Temporal Models

Benchmarking the robustness of Spatial-Temporal Models This repositery contains the code for the paper Benchmarking the Robustness of Spatial-Temporal

Codebase of deep learning models for inferring stability of mRNA molecules

Kaggle OpenVaccine Models Codebase of deep learning models for inferring stability of mRNA molecules, corresponding to the Kaggle Open Vaccine Challen

The self-supervised goal reaching benchmark introduced in Discovering and Achieving Goals via World Models

Lexa-Benchmark Codebase for the self-supervised goal reaching benchmark introduced in 'Discovering and Achieving Goals via World Models'. Setup Create

Code & Data for the Paper "Time Masking for Temporal Language Models", WSDM 2022

Time Masking for Temporal Language Models This repository provides a reference implementation of the paper: Time Masking for Temporal Language Models

Neural text generators like the GPT models promise a general-purpose means of manipulating texts.

Boolean Prompting for Neural Text Generators Neural text generators like the GPT models promise a general-purpose means of manipulating texts. These m

The Easy-to-use Dialogue Response Selection Toolkit for Researchers

Easy-to-use toolkit for retrieval-based Chatbot Our released data can be found at this link. Make sure the following steps are adopted to use our code

Bayesian regularization for functional graphical models.

BayesFGM Paper: Jiajing Niu, Andrew Brown. Bayesian regularization for functional graphical models. Requirements R version 3.6.3 and up Python 3.6 and

New approach to benchmark VQA models

VQA Benchmarking This repository contains the web application & the python interface to evaluate VQA models. Documentation Please see the documentatio

Deep learning models for classification of 15 common weeds in the southern U.S. cotton production systems.

CottonWeeds Deep learning models for classification of 15 common weeds in the southern U.S. cotton production systems. requirements pytorch torchsumma

Repository sharing code and the model for the paper "Rescoring Sequence-to-Sequence Models for Text Line Recognition with CTC-Prefixes"

Rescoring Sequence-to-Sequence Models for Text Line Recognition with CTC-Prefixes Setup virtualenv -p python3 venv source venv/bin/activate pip instal

HyperCube: Implicit Field Representations of Voxelized 3D Models

HyperCube: Implicit Field Representations of Voxelized 3D Models Authors: Magdalena Proszewska, Marcin Mazur, Tomasz Trzcinski, Przemysław Spurek [Pap

The Few-Shot Bot: Prompt-Based Learning for Dialogue Systems

Few-Shot Bot: Prompt-Based Learning for Dialogue Systems This repository includes the dataset, experiments results, and code for the paper: Few-Shot B

This is the repository for paper NEEDLE: Towards Non-invertible Backdoor Attack to Deep Learning Models.

This is the repository for paper NEEDLE: Towards Non-invertible Backdoor Attack to Deep Learning Models.

Toolkit for building machine learning models that generalize to unseen domains and are robust to privacy and other attacks.

Toolkit for Building Robust ML models that generalize to unseen domains (RobustDG) Divyat Mahajan, Shruti Tople, Amit Sharma Privacy & Causal Learning

Pre-training BERT masked language models with custom vocabulary

Pre-training BERT Masked Language Models (MLM) This repository contains the method to pre-train a BERT model using custom vocabulary. It was used to p

Implementations of Machine Learning models, Regularizers, Optimizers and different Cost functions.

Linear Models Implementations of LinearRegression, LassoRegression and RidgeRegression with appropriate Regularizers and Optimizers. Linear Regression

cqMore is a CadQuery plugin based on CadQuery 2.1.

cqMore (under construction) cqMore is a CadQuery plugin based on CadQuery 2.1. Installation Please use conda to install CadQuery and its dependencies

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

Text2Art is an AI art generator powered with VQGAN + CLIP and CLIPDrawer models

Text2Art is an AI art generator powered with VQGAN + CLIP and CLIPDrawer models. You can easily generate all kind of art from drawing, painting, sketch, or even a specific artist style just using a text input. You can also specify the dimensions of the image. The process can take 3-20 mins and the results will be emailed to you.

DeepCAD: A Deep Generative Network for Computer-Aided Design Models

DeepCAD This repository provides source code for our paper: DeepCAD: A Deep Generative Network for Computer-Aided Design Models Rundi Wu, Chang Xiao,

Training vision models with full-batch gradient descent and regularization

Stochastic Training is Not Necessary for Generalization -- Training competitive vision models without stochasticity This repository implements trainin

Blender addon to generate better building models from satellite imagery.

Blender addon to generate better building models from satellite imagery.

Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

RNN-for-Joint-NLU Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

TorchXRayVision: A library of chest X-ray datasets and models.

torchxrayvision A library for chest X-ray datasets and models. Including pre-trained models. ( 🎬 promo video about the project) Motivation: While the

A pytorch-based deep learning framework for multi-modal 2D/3D medical image segmentation

A 3D multi-modal medical image segmentation library in PyTorch We strongly believe in open and reproducible deep learning research. Our goal is to imp

Explainer for black box models that predict molecule properties

Explaining why that molecule exmol is a package to explain black-box predictions of molecules. The package uses model agnostic explanations to help us

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks, which modifies the input text with a textual template and directly uses PLMs to conduct pre-trained tasks. This library provides a standard, flexible and extensible framework to deploy the prompt-learning pipeline. OpenPrompt supports loading PLMs directly from huggingface transformers. In the future, we will also support PLMs implemented by other libraries.

pcnaDeep integrates cutting-edge detection techniques with tracking and cell cycle resolving models.

pcnaDeep: a deep-learning based single-cell cycle profiler with PCNA signal Welcome! pcnaDeep integrates cutting-edge detection techniques with tracki

A Chinese to English Neural Model Translation Project

ZH-EN NMT Chinese to English Neural Machine Translation This project is inspired by Stanford's CS224N NMT Project Dataset used in this project: News C

Pytorch implementation of paper "Efficient Nearest Neighbor Language Models" (EMNLP 2021)

Pytorch implementation of paper "Efficient Nearest Neighbor Language Models" (EMNLP 2021)

Model factory is a ML training platform to help engineers to build ML models at scale

Model Factory Machine learning today is powering many businesses today, e.g., search engine, e-commerce, news or feed recommendation. Training high qu

Pre-trained BERT Models for Ancient and Medieval Greek, and associated code for LaTeCH 2021 paper titled - "A Pilot Study for BERT Language Modelling and Morphological Analysis for Ancient and Medieval Greek"

Ancient Greek BERT The first and only available Ancient Greek sub-word BERT model! State-of-the-art post fine-tuning on Part-of-Speech Tagging and Mor

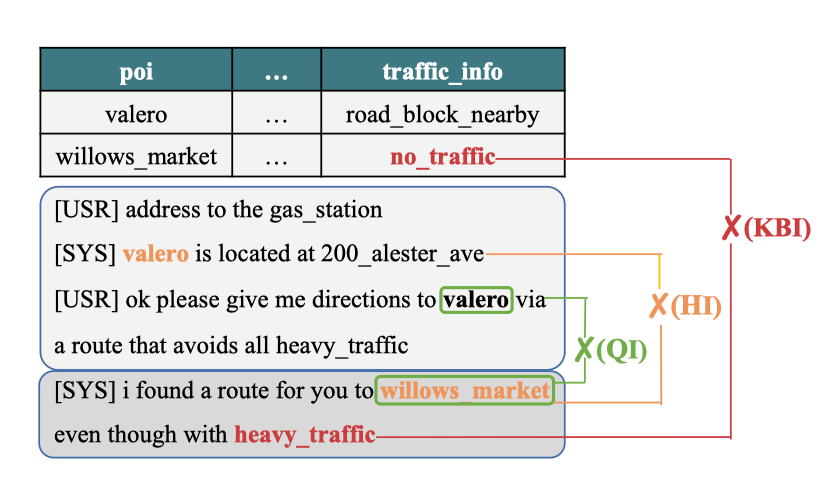

PyTorch code for EMNLP 2021 paper: Don't be Contradicted with Anything! CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System

PyTorch code for EMNLP 2021 paper: Don't be Contradicted with Anything! CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System

A PyTorch implementation of EfficientNet and EfficientNetV2 (coming soon!)

EfficientNet PyTorch Quickstart Install with pip install efficientnet_pytorch and load a pretrained EfficientNet with: from efficientnet_pytorch impor

PyTorch code accompanying our paper on Maximum Entropy Generators for Energy-Based Models

Maximum Entropy Generators for Energy-Based Models All experiments have tensorboard visualizations for samples / density / train curves etc. To run th

![A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]](https://user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png)

A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

A scalable template for PyTorch projects, with examples in Image Segmentation, Object classification, GANs and Reinforcement Learning.

PyTorch Project Template is being sponsored by the following tool; please help to support us by taking a look and signing up to a free trial PyTorch P

Pytorch implementations of various Deep NLP models in cs-224n(Stanford Univ)

DeepNLP-models-Pytorch Pytorch implementations of various Deep NLP models in cs-224n(Stanford Univ: NLP with Deep Learning) This is not for Pytorch be

A collection of various deep learning architectures, models, and tips

Deep Learning Models A collection of various deep learning architectures, models, and tips for TensorFlow and PyTorch in Jupyter Notebooks. Traditiona

Visualizer for neural network, deep learning, and machine learning models

Netron is a viewer for neural network, deep learning and machine learning models. Netron supports ONNX, TensorFlow Lite, Keras, Caffe, Darknet, ncnn,

Making decision trees competitive with neural networks on CIFAR10, CIFAR100, TinyImagenet200, Imagenet

Neural-Backed Decision Trees · Site · Paper · Blog · Video Alvin Wan, *Lisa Dunlap, *Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah

Quickly and easily create / train a custom DeepDream model

Dream-Creator This project aims to simplify the process of creating a custom DeepDream model by using pretrained GoogleNet models and custom image dat

PyTorch code for EMNLP 2021 paper: Don't be Contradicted with Anything! CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System

Don’t be Contradicted with Anything!CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System This repository contains the PyTorch im

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

A Tools that help Data Scientists and ML engineers train and deploy ML models.

Domino Research This repo contains projects under active development by the Domino R&D team. We build tools that help Data Scientists and ML engineers

fastgradio is a python library to quickly build and share gradio interfaces of your trained fastai models.

fastgradio is a python library to quickly build and share gradio interfaces of your trained fastai models.

GluonMM is a library of transformer models for computer vision and multi-modality research

GluonMM is a library of transformer models for computer vision and multi-modality research. It contains reference implementations of widely adopted baseline models and also research work from Amazon Research.

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming. Outperforming `GPT-3` on SuperGLUE Few-Shot text classification.

TruthfulQA: Measuring How Models Imitate Human Falsehoods

TruthfulQA: Measuring How Models Imitate Human Falsehoods

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

PyTorch implementation of convolutional neural networks-based text-to-speech synthesis models

Deepvoice3_pytorch PyTorch implementation of convolutional networks-based text-to-speech synthesis models: arXiv:1710.07654: Deep Voice 3: Scaling Tex

StarGAN - Official PyTorch Implementation (CVPR 2018)

StarGAN - Official PyTorch Implementation ***** New: StarGAN v2 is available at https://github.com/clovaai/stargan-v2 ***** This repository provides t

The PASS dataset: pretrained models and how to get the data - PASS: Pictures without humAns for Self-Supervised Pretraining

The PASS dataset: pretrained models and how to get the data - PASS: Pictures without humAns for Self-Supervised Pretraining

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

Discretized Integrated Gradients for Explaining Language Models (EMNLP 2021)

Discretized Integrated Gradients for Explaining Language Models (EMNLP 2021) Overview of paths used in DIG and IG. w is the word being attributed. The

Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models.

Tevatron Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models. The toolkit has a modularized

In this repository we have tested 3 VQA models on the ImageCLEF-2019 dataset.

Med-VQA In this repository we have tested 3 VQA models on the ImageCLEF-2019 dataset. Two of these are made on top of Facebook AI Reasearch's Multi-Mo

Implementation of Squeezenet in pytorch, pretrained models on Cifar 10 data to come

Pytorch Squeeznet Pytorch implementation of Squeezenet model as described in https://arxiv.org/abs/1602.07360 on cifar-10 Data. The definition of Sque

Reinforcement learning models in ViZDoom environment

DoomNet DoomNet is a ViZDoom agent trained by reinforcement learning. The agent is a neural network that outputs a probability of actions given only p

Sequence to Sequence Models with PyTorch

Sequence to Sequence models with PyTorch This repository contains implementations of Sequence to Sequence (Seq2Seq) models in PyTorch At present it ha

PICARD - Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models

This is the official implementation of the following paper: Torsten Scholak, Nathan Schucher, Dzmitry Bahdanau. PICARD - Parsing Incrementally for Con

Hierarchical unsupervised and semi-supervised topic models for sparse count data with CorEx

Anchored CorEx: Hierarchical Topic Modeling with Minimal Domain Knowledge Correlation Explanation (CorEx) is a topic model that yields rich topics tha

Neural network models for joint POS tagging and dependency parsing (CoNLL 2017-2018)

Neural Network Models for Joint POS Tagging and Dependency Parsing Implementations of joint models for POS tagging and dependency parsing, as describe

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Official pytorch implementation of "Scaling-up Disentanglement for Image Translation", ICCV 2021.

Official pytorch implementation of "Scaling-up Disentanglement for Image Translation", ICCV 2021.

Code and dataset for the EMNLP 2021 Finding paper "Can NLI Models Verify QA Systems’ Predictions?"

Code and dataset for the EMNLP 2021 Finding paper "Can NLI Models Verify QA Systems’ Predictions?"

A2T: Towards Improving Adversarial Training of NLP Models (EMNLP 2021 Findings)

A2T: Towards Improving Adversarial Training of NLP Models This is the source code for the EMNLP 2021 (Findings) paper "Towards Improving Adversarial T

EMNLP'2021: Can Language Models be Biomedical Knowledge Bases?

BioLAMA BioLAMA is biomedical factual knowledge triples for probing biomedical LMs. The triples are collected and pre-processed from three sources: CT

💛 Code and Dataset for our EMNLP 2021 paper: "Perspective-taking and Pragmatics for Generating Empathetic Responses Focused on Emotion Causes"

Perspective-taking and Pragmatics for Generating Empathetic Responses Focused on Emotion Causes Official PyTorch implementation and EmoCause evaluatio

Code for evaluating Japanese pretrained models provided by NTT Ltd.

japanese-dialog-transformers 日本語の説明文はこちら This repository provides the information necessary to evaluate the Japanese Transformer Encoder-decoder dialo

TorchGeo is a PyTorch domain library, similar to torchvision, that provides datasets, transforms, samplers, and pre-trained models specific to geospatial data.

TorchGeo is a PyTorch domain library, similar to torchvision, that provides datasets, transforms, samplers, and pre-trained models specific to geospatial data.

The repository for the paper: Multilingual Translation via Grafting Pre-trained Language Models

Graformer The repository for the paper: Multilingual Translation via Grafting Pre-trained Language Models Graformer (also named BridgeTransformer in t

A Django application that provides country choices for use with forms, flag icons static files, and a country field for models.

Django Countries A Django application that provides country choices for use with forms, flag icons static files, and a country field for models. Insta

A BitField extension for Django Models

django-bitfield Provides a BitField like class (using a BigIntegerField) for your Django models. (If you're upgrading from a version before 1.2 the AP

This is a vision-based 3d model manipulation and control UI

Manipulation of 3D Models Using Hand Gesture This program allows user to manipulation 3D models (.obj format) with their hands. The project support bo

A collection of SOTA Image Classification Models in PyTorch

A collection of SOTA Image Classification Models in PyTorch

![[EMNLP 2021] LM-Critic: Language Models for Unsupervised Grammatical Error Correction](https://github.com/michiyasunaga/LM-Critic/raw/main/figs/local_optimality.png)

[EMNLP 2021] LM-Critic: Language Models for Unsupervised Grammatical Error Correction

LM-Critic: Language Models for Unsupervised Grammatical Error Correction This repo provides the source code & data of our paper: LM-Critic: Language M

Code & Models for 3DETR - an End-to-end transformer model for 3D object detection

3DETR: An End-to-End Transformer Model for 3D Object Detection PyTorch implementation and models for 3DETR. 3DETR (3D DEtection TRansformer) is a simp

Object Moderation Layer

django-oml Welcome to the documentation for django-oml! OML means Object Moderation Layer, the idea is to have a mixin model that allows you to modera

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Must-read papers on improving efficiency for pre-trained language models.

Must-read papers on improving efficiency for pre-trained language models.

Keras attention models including botnet,CoaT,CoAtNet,CMT,cotnet,halonet,resnest,resnext,resnetd,volo,mlp-mixer,resmlp,gmlp,levit

Keras_cv_attention_models Keras_cv_attention_models Usage Basic Usage Layers Model surgery AotNet ResNetD ResNeXt ResNetQ BotNet VOLO ResNeSt HaloNet

ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models (ICCV 2021 Oral)

ILVR + ADM This is the implementation of ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models (ICCV 2021 Oral). This repository is h