354 Repositories

Python distributed-representations Libraries

HyperCube: Implicit Field Representations of Voxelized 3D Models

HyperCube: Implicit Field Representations of Voxelized 3D Models Authors: Magdalena Proszewska, Marcin Mazur, Tomasz Trzcinski, Przemysław Spurek [Pap

PyTorch implementation for paper StARformer: Transformer with State-Action-Reward Representations.

StARformer This repository contains the PyTorch implementation for our paper titled StARformer: Transformer with State-Action-Reward Representations.

Permute Me Softly: Learning Soft Permutations for Graph Representations

Permute Me Softly: Learning Soft Permutations for Graph Representations

Experiments for distributed optimization algorithms

Network-Distributed Algorithm Experiments -- This repository contains a set of optimization algorithms and objective functions, and all code needed to

Code for the paper Relation Prediction as an Auxiliary Training Objective for Improving Multi-Relational Graph Representations (AKBC 2021).

Relation Prediction as an Auxiliary Training Objective for Knowledge Base Completion This repo provides the code for the paper Relation Prediction as

Unofficial PyTorch implementation of DeepMind's Perceiver IO with PyTorch Lightning scripts for distributed training

Unofficial PyTorch implementation of DeepMind's Perceiver IO with PyTorch Lightning scripts for distributed training

This repository contains the code for the CVPR 2020 paper "Differentiable Volumetric Rendering: Learning Implicit 3D Representations without 3D Supervision"

Differentiable Volumetric Rendering Paper | Supplementary | Spotlight Video | Blog Entry | Presentation | Interactive Slides | Project Page This repos

This repository contains the code for the CVPR 2021 paper "GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields"

GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields Project Page | Paper | Supplementary | Video | Slides | Blog | Talk If

An official reimplementation of the method described in the INTERSPEECH 2021 paper - Speech Resynthesis from Discrete Disentangled Self-Supervised Representations.

Speech Resynthesis from Discrete Disentangled Self-Supervised Representations Implementation of the method described in the Speech Resynthesis from Di

Pritunl is a distributed enterprise vpn server built using the OpenVPN protocol.

Pritunl is a distributed enterprise vpn server built using the OpenVPN protocol.

A lightweight wrapper for PyTorch that provides a simple declarative API for context switching between devices, distributed modes, mixed-precision, and PyTorch extensions.

A lightweight wrapper for PyTorch that provides a simple declarative API for context switching between devices, distributed modes, mixed-precision, and PyTorch extensions.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

A Pytorch implementation of "Splitter: Learning Node Representations that Capture Multiple Social Contexts" (WWW 2019).

Splitter ⠀⠀ A PyTorch implementation of Splitter: Learning Node Representations that Capture Multiple Social Contexts (WWW 2019). Abstract Recent inte

PyTorch implementation of the NIPS-17 paper "Poincaré Embeddings for Learning Hierarchical Representations"

Poincaré Embeddings for Learning Hierarchical Representations PyTorch implementation of Poincaré Embeddings for Learning Hierarchical Representations

Pytorch implementation of Distributed Proximal Policy Optimization: https://arxiv.org/abs/1707.02286

Pytorch-DPPO Pytorch implementation of Distributed Proximal Policy Optimization: https://arxiv.org/abs/1707.02286 Using PPO with clip loss (from https

Supervised Contrastive Learning for Downstream Optimized Sequence Representations

SupCL-Seq 📖 Supervised Contrastive Learning for Downstream Optimized Sequence representations (SupCS-Seq) accepted to be published in EMNLP 2021, ext

[EMNLP 2021] MuVER: Improving First-Stage Entity Retrieval with Multi-View Entity Representations

MuVER This repo contains the code and pre-trained model for our EMNLP 2021 paper: MuVER: Improving First-Stage Entity Retrieval with Multi-View Entity

Source code for the paper "TearingNet: Point Cloud Autoencoder to Learn Topology-Friendly Representations"

TearingNet: Point Cloud Autoencoder to Learn Topology-Friendly Representations Created by Jiahao Pang, Duanshun Li, and Dong Tian from InterDigital In

Python implementation of the IPv8 layer provide authenticated communication with privacy

Python implementation of the IPv8 layer provide authenticated communication with privacy

Tensorflow 2 implementation of the paper: Learning and Evaluating Representations for Deep One-class Classification published at ICLR 2021

Deep Representation One-class Classification (DROC). This is not an officially supported Google product. Tensorflow 2 implementation of the paper: Lea

PyTorch implementation of SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

PyTorch implementation of SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

![[ICCV'21] Official implementation for the paper Social NCE: Contrastive Learning of Socially-aware Motion Representations](https://github.com/vita-epfl/social-nce-crowdnav/raw/main/docs/illustration.png)

[ICCV'21] Official implementation for the paper Social NCE: Contrastive Learning of Socially-aware Motion Representations

CrowdNav with Social-NCE This is an official implementation for the paper Social NCE: Contrastive Learning of Socially-aware Motion Representations by

Pytorch implementation of Distributed Proximal Policy Optimization

Pytorch-DPPO Pytorch implementation of Distributed Proximal Policy Optimization: https://arxiv.org/abs/1707.02286 Using PPO with clip loss (from https

[ICCV'21] NEAT: Neural Attention Fields for End-to-End Autonomous Driving

NEAT: Neural Attention Fields for End-to-End Autonomous Driving Paper | Supplementary | Video | Poster | Blog This repository is for the ICCV 2021 pap

We evaluate our method on different datasets (including ShapeNet, CUB-200-2011, and Pascal3D+) and achieve state-of-the-art results, outperforming all the other supervised and unsupervised methods and 3D representations, all in terms of performance, accuracy, and training time.

An Effective Loss Function for Generating 3D Models from Single 2D Image without Rendering Papers with code | Paper Nikola Zubić Pietro Lio University

Code for Motion Representations for Articulated Animation paper

Motion Representations for Articulated Animation This repository contains the source code for the CVPR'2021 paper Motion Representations for Articulat

"Inductive Entity Representations from Text via Link Prediction" @ The Web Conference 2021

Inductive entity representations from text via link prediction This repository contains the code used for the experiments in the paper "Inductive enti

PyTorch implementation of the NIPS-17 paper "Poincaré Embeddings for Learning Hierarchical Representations"

Poincaré Embeddings for Learning Hierarchical Representations PyTorch implementation of Poincaré Embeddings for Learning Hierarchical Representations

Global Rhythm Style Transfer Without Text Transcriptions

Global Prosody Style Transfer Without Text Transcriptions This repository provides a PyTorch implementation of AutoPST, which enables unsupervised glo

A Pytorch implementation of "Splitter: Learning Node Representations that Capture Multiple Social Contexts" (WWW 2019).

Splitter ⠀⠀ A PyTorch implementation of Splitter: Learning Node Representations that Capture Multiple Social Contexts (WWW 2019). Abstract Recent inte

Global Rhythm Style Transfer Without Text Transcriptions

Global Prosody Style Transfer Without Text Transcriptions This repository provides a PyTorch implementation of AutoPST, which enables unsupervised glo

PyTorch implementation of paper: HPNet: Deep Primitive Segmentation Using Hybrid Representations.

HPNet This repository contains the PyTorch implementation of paper: HPNet: Deep Primitive Segmentation Using Hybrid Representations. Installation The

HyperPose is a library for building high-performance custom pose estimation applications.

HyperPose is a library for building high-performance custom pose estimation applications.

XGBoost-Ray is a distributed backend for XGBoost, built on top of distributed computing framework Ray.

XGBoost-Ray is a distributed backend for XGBoost, built on top of distributed computing framework Ray.

Here is the implementation of our paper S2VC: A Framework for Any-to-Any Voice Conversion with Self-Supervised Pretrained Representations.

S2VC Here is the implementation of our paper S2VC: A Framework for Any-to-Any Voice Conversion with Self-Supervised Pretrained Representations. In thi

Code release for BlockGAN: Learning 3D Object-aware Scene Representations from Unlabelled Images

BlockGAN Code release for BlockGAN: Learning 3D Object-aware Scene Representations from Unlabelled Images BlockGAN: Learning 3D Object-aware Scene Rep

WAGMA-SGD is a decentralized asynchronous SGD for distributed deep learning training based on model averaging.

WAGMA-SGD is a decentralized asynchronous SGD based on wait-avoiding group model averaging. The synchronization is relaxed by making the collectives externally-triggerable, namely, a collective can be initiated without requiring that all the processes enter it. It partially reduces the data within non-overlapping groups of process, improving the parallel scalability.

Bagua is a flexible and performant distributed training algorithm development framework.

Bagua is a flexible and performant distributed training algorithm development framework.

[ACL 20] Probing Linguistic Features of Sentence-level Representations in Neural Relation Extraction

REval Table of Contents Introduction Overview Requirements Installation Probing Usage Citation License 🎓 Introduction REval is a simple framework for

A universal framework for learning timestamp-level representations of time series

TS2Vec This repository contains the official implementation for the paper Learning Timestamp-Level Representations for Time Series with Hierarchical C

Distributed DataLoader For Pytorch Based On Ray

Dpex——用户无感知分布式数据预处理组件 一、前言 随着GPU与CPU的算力差距越来越大以及模型训练时的预处理Pipeline变得越来越复杂,CPU部分的数据预处理已经逐渐成为了模型训练的瓶颈所在,这导致单机的GPU配置的提升并不能带来期望的线性加速。预处理性能瓶颈的本质在于每个GPU能够使用的C

Code for the paper "Implicit Representations of Meaning in Neural Language Models"

Implicit Representations of Meaning in Neural Language Models Preliminaries Create and set up a conda environment as follows: conda create -n state-pr

Code for "Learning Canonical Representations for Scene Graph to Image Generation", Herzig & Bar et al., ECCV2020

Learning Canonical Representations for Scene Graph to Image Generation (ECCV 2020) Roei Herzig*, Amir Bar*, Huijuan Xu, Gal Chechik, Trevor Darrell, A

ACL'2021: Learning Dense Representations of Phrases at Scale

DensePhrases DensePhrases is an extractive phrase search tool based on your natural language inputs. From 5 million Wikipedia articles, it can search

This repository contains all the code and materials distributed in the 2021 Q-Programming Summer of Qode.

Q-Programming Summer of Qode This repository contains all the code and materials distributed in the Q-Programming Summer of Qode. If you want to creat

Code to train models from "Paraphrastic Representations at Scale".

Paraphrastic Representations at Scale Code to train models from "Paraphrastic Representations at Scale". The code is written in Python 3.7 and require

Compositional and Parameter-Efficient Representations for Large Knowledge Graphs

NodePiece - Compositional and Parameter-Efficient Representations for Large Knowledge Graphs NodePiece is a "tokenizer" for reducing entity vocabulary

Pretraining Representations For Data-Efficient Reinforcement Learning

Pretraining Representations For Data-Efficient Reinforcement Learning Max Schwarzer, Nitarshan Rajkumar, Michael Noukhovitch, Ankesh Anand, Laurent Ch

Secure Distributed Training at Scale

Secure Distributed Training at Scale This repository contains the implementation of experiments from the paper "Secure Distributed Training at Scale"

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

Code for CVPR2021 paper 'Where and What? Examining Interpretable Disentangled Representations'.

PS-SC GAN This repository contains the main code for training a PS-SC GAN (a GAN implemented with the Perceptual Simplicity and Spatial Constriction c

Learning trajectory representations using self-supervision and programmatic supervision.

Trajectory Embedding for Behavior Analysis (TREBA) Implementation from the paper: Jennifer J. Sun, Ann Kennedy, Eric Zhan, David J. Anderson, Yisong Y

A parallel framework for population-based multi-agent reinforcement learning.

MALib: A parallel framework for population-based multi-agent reinforcement learning MALib is a parallel framework of population-based learning nested

Code for the ACL2021 paper "Combining Static Word Embedding and Contextual Representations for Bilingual Lexicon Induction"

CSCBLI Code for our ACL Findings 2021 paper, "Combining Static Word Embedding and Contextual Representations for Bilingual Lexicon Induction". Require

![Revisiting Contrastive Methods for Unsupervised Learning of Visual Representations. [2021]](https://github.com/wvangansbeke/Revisiting-Contrastive-SSL/raw/main/images/teaser.jpg)

Revisiting Contrastive Methods for Unsupervised Learning of Visual Representations. [2021]

Revisiting Contrastive Methods for Unsupervised Learning of Visual Representations This repo contains the Pytorch implementation of our paper: Revisit

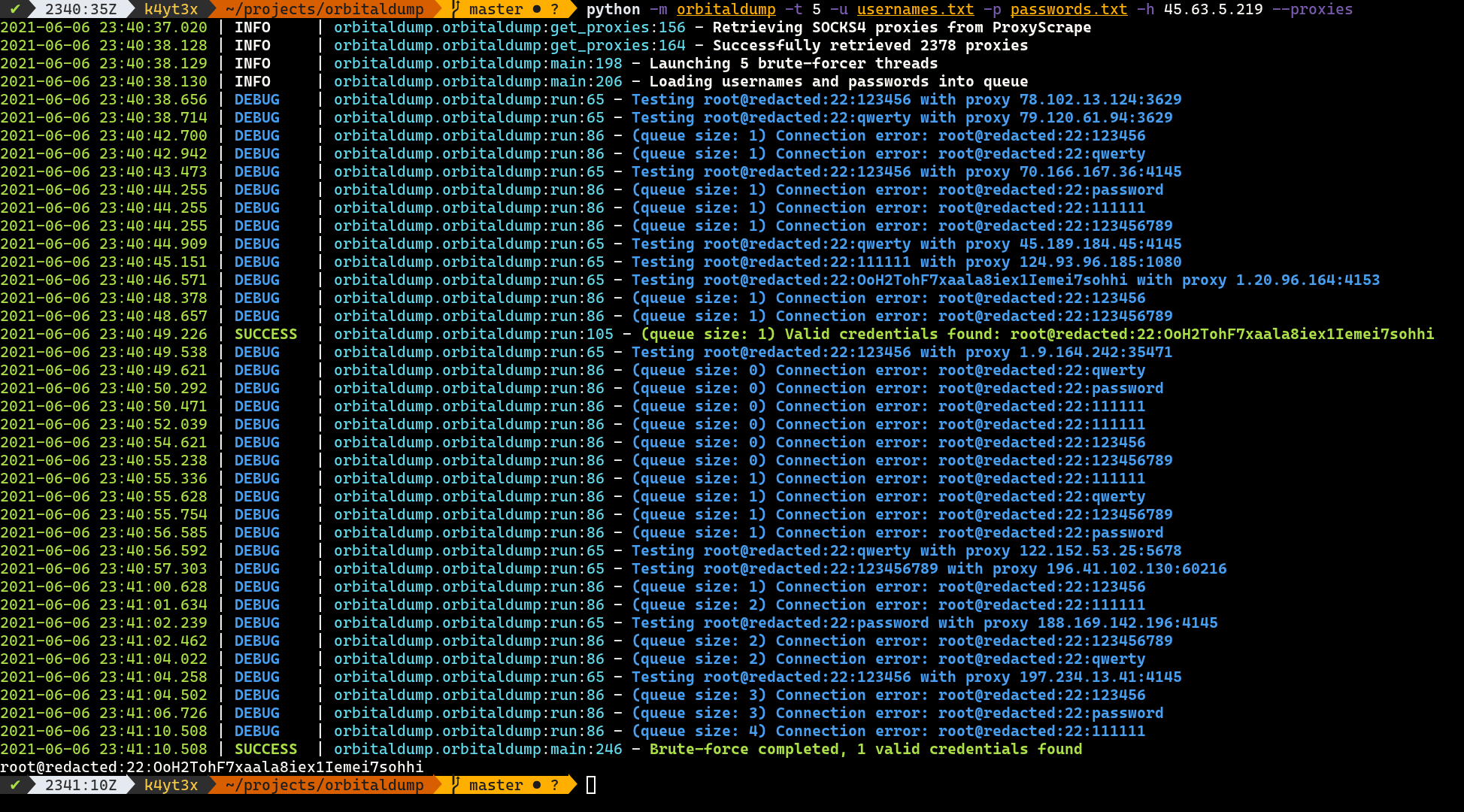

A simple multi-threaded distributed SSH brute-forcing tool written in Python.

OrbitalDump A simple multi-threaded distributed SSH brute-forcing tool written in Python. How it Works When the script is executed without the --proxi

To automate the generation and validation tests of COSE/CBOR Codes and it's base45/2D Code representations

To automate the generation and validation tests of COSE/CBOR Codes and it's base45/2D Code representations, a lot of data has to be collected to ensure the variance of the tests. This respository was established to collect a lot of different test data and related test cases of different member states in a standardized manner. Each member state can generate a folder in this section.

dask-sql is a distributed SQL query engine in python using Dask

dask-sql is a distributed SQL query engine in Python. It allows you to query and transform your data using a mixture of common SQL operations and Python code and also scale up the calculation easily if you need it.

PyTorch implementation of: Michieli U. and Zanuttigh P., "Continual Semantic Segmentation via Repulsion-Attraction of Sparse and Disentangled Latent Representations", CVPR 2021.

Continual Semantic Segmentation via Repulsion-Attraction of Sparse and Disentangled Latent Representations This is the official PyTorch implementation

Conformer: Local Features Coupling Global Representations for Visual Recognition

Conformer: Local Features Coupling Global Representations for Visual Recognition (arxiv) This repository is built upon DeiT and timm Usage First, inst

Code for Learning Manifold Patch-Based Representations of Man-Made Shapes, in ICLR 2021.

LearningPatches | Webpage | Paper | Video Learning Manifold Patch-Based Representations of Man-Made Shapes Dmitriy Smirnov, Mikhail Bessmeltsev, Justi

CURL: Contrastive Unsupervised Representations for Reinforcement Learning

CURL Rainbow Status: Archive (code is provided as-is, no updates expected) This is an implementation of CURL: Contrastive Unsupervised Representations

Deduplication is the task to combine different representations of the same real world entity.

Deduplication is the task to combine different representations of the same real world entity. This package implements deduplication using active learning. Active learning allows for rapid training without having to provide a large, manually labelled dataset.

A general-purpose multi-agent training framework.

MALib A general-purpose multi-agent training framework. Installation step1: build environment conda create -n malib python==3.7 -y conda activate mali

A static analysis library for computing graph representations of Python programs suitable for use with graph neural networks.

python_graphs This package is for computing graph representations of Python programs for machine learning applications. It includes the following modu

Predicting Semantic Map Representations from Images with Pyramid Occupancy Networks

This is the code associated with the paper Predicting Semantic Map Representations from Images with Pyramid Occupancy Networks, published at CVPR 2020.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning

GenSen Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning Sandeep Subramanian, Adam Trischler, Yoshua B

Language-Agnostic SEntence Representations

LASER Language-Agnostic SEntence Representations LASER is a library to calculate and use multilingual sentence embeddings. NEWS 2019/11/08 CCMatrix is

Ray provides a simple, universal API for building distributed applications.

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Distributed Task Queue (development branch)

Version: 5.1.0b1 (singularity) Web: https://docs.celeryproject.org/en/stable/index.html Download: https://pypi.org/project/celery/ Source: https://git

A multiprocessing distributed task queue for Django

A multiprocessing distributed task queue for Django Features Multiprocessing worker pool Asynchronous tasks Scheduled, cron and repeated tasks Signed

Code for the paper "Unsupervised Contrastive Learning of Sound Event Representations", ICASSP 2021.

Unsupervised Contrastive Learning of Sound Event Representations This repository contains the code for the following paper. If you use this code or pa

A Research-oriented Federated Learning Library and Benchmark Platform for Graph Neural Networks. Accepted to ICLR'2021 - DPML and MLSys'21 - GNNSys workshops.

FedGraphNN: A Federated Learning System and Benchmark for Graph Neural Networks A Research-oriented Federated Learning Library and Benchmark Platform

Code for the paper: Learning Adversarially Robust Representations via Worst-Case Mutual Information Maximization (https://arxiv.org/abs/2002.11798)

Representation Robustness Evaluations Our implementation is based on code from MadryLab's robustness package and Devon Hjelm's Deep InfoMax. For all t

Simple, fast, and parallelized symbolic regression in Python/Julia via regularized evolution and simulated annealing

Parallelized symbolic regression built on Julia, and interfaced by Python. Uses regularized evolution, simulated annealing, and gradient-free optimization.

Pytorch implementation of COIN, a framework for compression with implicit neural representations 🌸

COIN 🌟 This repo contains a Pytorch implementation of COIN: COmpression with Implicit Neural representations, including code to reproduce all experim

Proto-RL: Reinforcement Learning with Prototypical Representations

Proto-RL: Reinforcement Learning with Prototypical Representations This is a PyTorch implementation of Proto-RL from Reinforcement Learning with Proto

Code for Iso-Points: Optimizing Neural Implicit Surfaces with Hybrid Representations

Implementation for Iso-Points (CVPR 2021) Official code for paper Iso-Points: Optimizing Neural Implicit Surfaces with Hybrid Representations paper |

Official PyTorch implementation of Synergies Between Affordance and Geometry: 6-DoF Grasp Detection via Implicit Representations

Synergies Between Affordance and Geometry: 6-DoF Grasp Detection via Implicit Representations Zhenyu Jiang, Yifeng Zhu, Maxwell Svetlik, Kuan Fang, Yu

《LXMERT: Learning Cross-Modality Encoder Representations from Transformers》(EMNLP 2020)

The Most Important Thing. Our code is developed based on: LXMERT: Learning Cross-Modality Encoder Representations from Transformers

Learning Dense Representations of Phrases at Scale (Lee et al., 2020)

DensePhrases DensePhrases provides answers to your natural language questions from the entire Wikipedia in real-time. While it efficiently searches th

Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation

SimplePose Code and pre-trained models for our paper, “Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation”, a

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

Introduction This repository holds NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pytorch. Some of the code her

🎛 Distributed machine learning made simple.

🎛 lazycluster Distributed machine learning made simple. Use your preferred distributed ML framework like a lazy engineer. Getting Started • Highlight

Distributed Evolutionary Algorithms in Python

DEAP DEAP is a novel evolutionary computation framework for rapid prototyping and testing of ideas. It seeks to make algorithms explicit and data stru

Distributed scikit-learn meta-estimators in PySpark

sk-dist: Distributed scikit-learn meta-estimators in PySpark What is it? sk-dist is a Python package for machine learning built on top of scikit-learn

Decentralized deep learning in PyTorch. Built to train models on thousands of volunteers across the world.

Hivemind: decentralized deep learning in PyTorch Hivemind is a PyTorch library to train large neural networks across the Internet. Its intended usage

Distributed Computing for AI Made Simple

Project Home Blog Documents Paper Media Coverage Join Fiber users email list [email protected] Fiber Distributed Computing for AI Made Simp

A high performance and generic framework for distributed DNN training

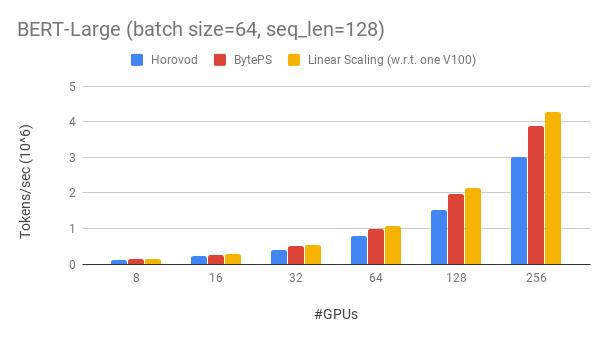

BytePS BytePS is a high performance and general distributed training framework. It supports TensorFlow, Keras, PyTorch, and MXNet, and can run on eith

a distributed deep learning platform

Apache SINGA Distributed deep learning system http://singa.apache.org Quick Start Installation Examples Issues JIRA tickets Code Analysis: Mailing Lis

Distributed Tensorflow, Keras and PyTorch on Apache Spark/Flink & Ray

A unified Data Analytics and AI platform for distributed TensorFlow, Keras and PyTorch on Apache Spark/Flink & Ray What is Analytics Zoo? Analytics Zo

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective. 10x Larger Models 10x Faster Trainin

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc

BigDL: Distributed Deep Learning Framework for Apache Spark

BigDL: Distributed Deep Learning on Apache Spark What is BigDL? BigDL is a distributed deep learning library for Apache Spark; with BigDL, users can w

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Ray provides a simple, universal API for building distributed applications. Ray is packaged with the following libraries for accelerating machine lear

Code for: Gradient-based Hierarchical Clustering using Continuous Representations of Trees in Hyperbolic Space. Nicholas Monath, Manzil Zaheer, Daniel Silva, Andrew McCallum, Amr Ahmed. KDD 2019.

gHHC Code for: Gradient-based Hierarchical Clustering using Continuous Representations of Trees in Hyperbolic Space. Nicholas Monath, Manzil Zaheer, D

Pytorch Lightning Distributed Accelerators using Ray

Distributed PyTorch Lightning Training on Ray This library adds new PyTorch Lightning plugins for distributed training using the Ray distributed compu