2325 Repositories

Python graph-attention-model Libraries

Code repository for the paper "Doubly-Trained Adversarial Data Augmentation for Neural Machine Translation" with instructions to reproduce the results.

Doubly Trained Neural Machine Translation System for Adversarial Attack and Data Augmentation Languages Experimented: Data Overview: Source Target Tra

Official implementation for Multi-Modal Interaction Graph Convolutional Network for Temporal Language Localization in Videos

Multi-modal Interaction Graph Convolutioal Network for Temporal Language Localization in Videos Official implementation for Multi-Modal Interaction Gr

A brand new hub for Scene Graph Generation methods based on MMdetection (2021). The pipeline of from detection, scene graph generation to downstream tasks (e.g., image cpationing) is supported. Pytorch version implementation of HetH (ECCV 2020) and TopicSG (ICCV 2021) is included.

MMSceneGraph Introduction MMSceneneGraph is an open source code hub for scene graph generation as well as supporting downstream tasks based on the sce

Making self-supervised learning work on molecules by using their 3D geometry to pre-train GNNs. Implemented in DGL and Pytorch Geometric.

3D Infomax improves GNNs for Molecular Property Prediction Video | Paper We pre-train GNNs to understand the geometry of molecules given only their 2D

Code for ICCV2021 paper PARE: Part Attention Regressor for 3D Human Body Estimation

PARE: Part Attention Regressor for 3D Human Body Estimation [ICCV 2021] PARE: Part Attention Regressor for 3D Human Body Estimation, Muhammed Kocabas,

![[ICCV 2021 Oral] Deep Evidential Action Recognition](https://github.com/Cogito2012/DEAR/raw/master/assets/gif/known/Basketball_v_Basketball_g05_c03.gif)

[ICCV 2021 Oral] Deep Evidential Action Recognition

DEAR (Deep Evidential Action Recognition) Project | Paper & Supp Wentao Bao, Qi Yu, Yu Kong International Conference on Computer Vision (ICCV Oral), 2

i3DMM: Deep Implicit 3D Morphable Model of Human Heads

i3DMM: Deep Implicit 3D Morphable Model of Human Heads CVPR 2021 (Oral) Arxiv | Poject Page This project is the official implementation our work, i3DM

Revitalizing CNN Attention via Transformers in Self-Supervised Visual Representation Learning

Revitalizing CNN Attention via Transformers in Self-Supervised Visual Representation Learning

PyTorch implementation of DreamerV2 model-based RL algorithm

PyDreamer Reimplementation of DreamerV2 model-based RL algorithm in PyTorch. The official DreamerV2 implementation can be found here. Features ... Run

Code for Understanding Pooling in Graph Neural Networks

Select, Reduce, Connect This repository contains the code used for the experiments of: "Understanding Pooling in Graph Neural Networks" Setup Install

Tooling for converting STAC metadata to ODC data model

Tooling for converting STAC metadata to ODC data model.

Official PyTorch implementation of "AASIST: Audio Anti-Spoofing using Integrated Spectro-Temporal Graph Attention Networks"

AASIST This repository provides the overall framework for training and evaluating audio anti-spoofing systems proposed in 'AASIST: Audio Anti-Spoofing

![[ICRA2021] Reconstructing Interactive 3D Scene by Panoptic Mapping and CAD Model Alignment](https://github.com/hmz-15/Interactive-Scene-Reconstruction/raw/main/motivation.jpg)

[ICRA2021] Reconstructing Interactive 3D Scene by Panoptic Mapping and CAD Model Alignment

Interactive Scene Reconstruction Project Page | Paper This repository contains the implementation of our ICRA2021 paper Reconstructing Interactive 3D

Deep Structured Instance Graph for Distilling Object Detectors (ICCV 2021)

DSIG Deep Structured Instance Graph for Distilling Object Detectors Authors: Yixin Chen, Pengguang Chen, Shu Liu, Liwei Wang, Jiaya Jia. [pdf] [slide]

This is the official pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering" on VQA Task

🌈 ERASOR (RA-L'21 with ICRA Option) Official page of "ERASOR: Egocentric Ratio of Pseudo Occupancy-based Dynamic Object Removal for Static 3D Point C

Pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering".

TRAnsformer Routing Networks (TRAR) This is an official implementation for ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visu

Simple image captioning model - CLIP prefix captioning.

Simple image captioning model - CLIP prefix captioning.

A framework for building (and incrementally growing) graph-based data structures used in hierarchical or DAG-structured clustering and nearest neighbor search

A framework for building (and incrementally growing) graph-based data structures used in hierarchical or DAG-structured clustering and nearest neighbor search

Permute Me Softly: Learning Soft Permutations for Graph Representations

Permute Me Softly: Learning Soft Permutations for Graph Representations

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

Code for KHGT model, AAAI2021

KHGT Code for KHGT accepted by AAAI2021 Please unzip the data files in Datasets/ first. To run KHGT on Yelp data, use python labcode_yelp.py For Movi

Code for the paper Relation Prediction as an Auxiliary Training Objective for Improving Multi-Relational Graph Representations (AKBC 2021).

Relation Prediction as an Auxiliary Training Objective for Knowledge Base Completion This repo provides the code for the paper Relation Prediction as

PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech

PortaSpeech - PyTorch Implementation PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech. Model Size Module Nor

Reimplementation of the paper "Attention, Learn to Solve Routing Problems!" in jax/flax.

JAX + Attention Learn To Solve Routing Problems Reinplementation of the paper Attention, Learn to Solve Routing Problems! using Jax and Flax. Fully su

A graph neural network (GNN) model to predict protein-protein interactions (PPI) with no sample features

A graph neural network (GNN) model to predict protein-protein interactions (PPI) with no sample features

A geometric deep learning pipeline for predicting protein interface contacts.

A geometric deep learning pipeline for predicting protein interface contacts.

Code for ICCV 2021 paper "HuMoR: 3D Human Motion Model for Robust Pose Estimation"

Code for ICCV 2021 paper "HuMoR: 3D Human Motion Model for Robust Pose Estimation"

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

Train 🤗-transformers model with Poutyne.

poutyne-transformers Train 🤗 -transformers models with Poutyne. Installation pip install poutyne-transformers Example import torch from transformers

Graphsignal is a machine learning model monitoring platform.

Graphsignal is a machine learning model monitoring platform. It helps ML engineers, MLOps teams and data scientists to quickly address issues with data and models as well as proactively analyze model performance and availability.

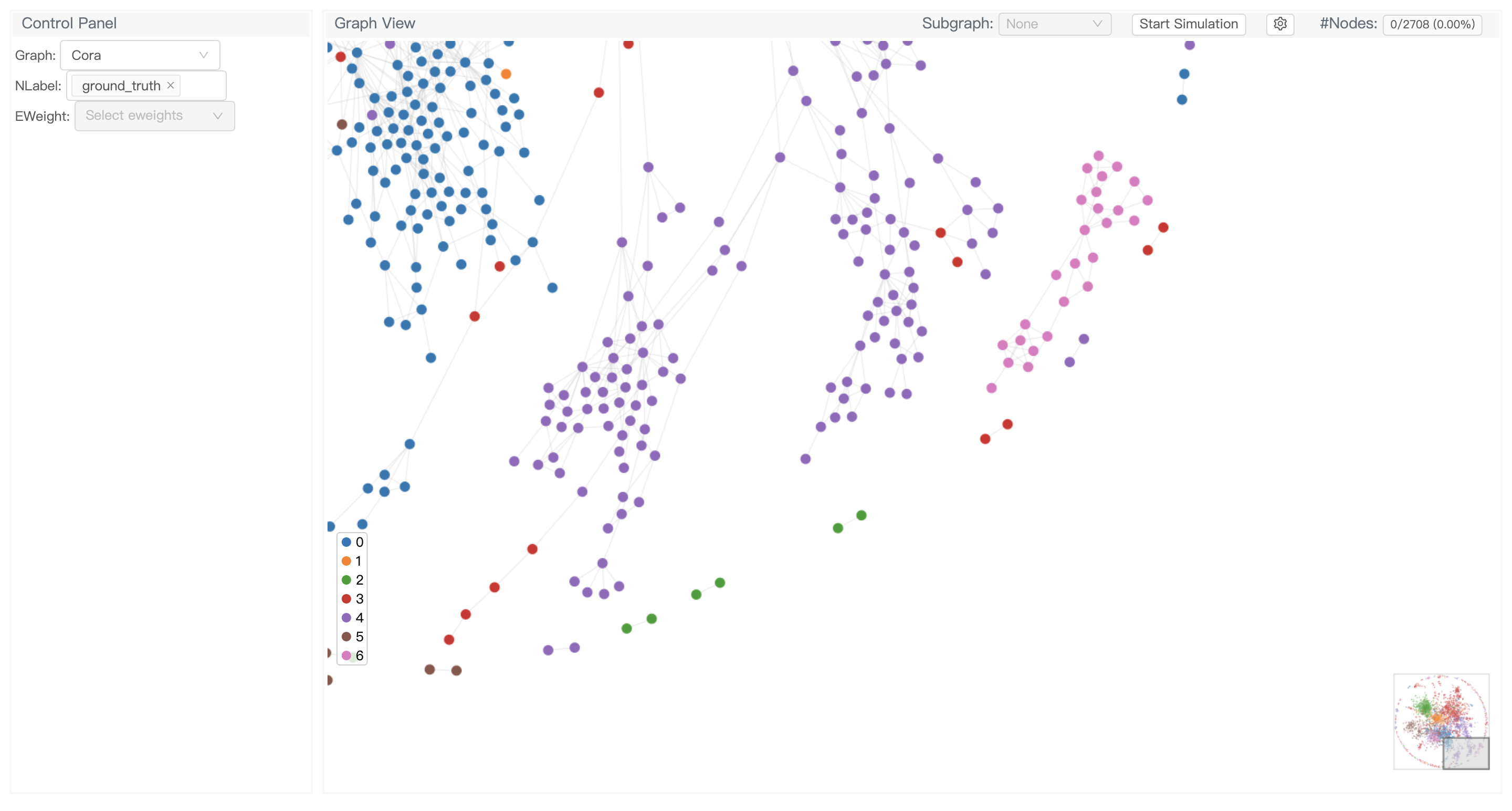

GNNLens2 is an interactive visualization tool for graph neural networks (GNN).

GNNLens2 is an interactive visualization tool for graph neural networks (GNN).

PortaSpeech - PyTorch Implementation

PortaSpeech - PyTorch Implementation PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech. Model Size Module Nor

Code for CodeT5: a new code-aware pre-trained encoder-decoder model.

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation This is the official PyTorch implementation

Inferred Model-based Fuzzer

IMF: Inferred Model-based Fuzzer IMF is a kernel API fuzzer that leverages an automated API model inferrence techinque proposed in our paper at CCS. I

DGCNN - Dynamic Graph CNN for Learning on Point Clouds

DGCNN is the author's re-implementation of Dynamic Graph CNN, which achieves state-of-the-art performance on point-cloud-related high-level tasks including category classification, semantic segmentation and part segmentation.

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

Python script for performing depth completion from sparse depth and rgb images using the msg_chn_wacv20. model in ONNX

ONNX msg_chn_wacv20 depth completion Python script for performing depth completion from sparse depth and rgb images using the msg_chn_wacv20 model in

DziriBERT: a Pre-trained Language Model for the Algerian Dialect

DziriBERT DziriBERT is the first Transformer-based Language Model that has been pre-trained specifically for the Algerian Dialect. It handles Algerian

This repository contains the code for the CVPR 2021 paper "GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields"

GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields Project Page | Paper | Supplementary | Video | Slides | Blog | Talk If

Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

RNN-for-Joint-NLU Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

LSTM and QRNN Language Model Toolkit for PyTorch

LSTM and QRNN Language Model Toolkit This repository contains the code used for two Salesforce Research papers: Regularizing and Optimizing LSTM Langu

PyTorch implementation of the cross-modality generative model that synthesizes dance from music.

Dancing to Music PyTorch implementation of the cross-modality generative model that synthesizes dance from music. Paper Hsin-Ying Lee, Xiaodong Yang,

A PyTorch Library for Accelerating 3D Deep Learning Research

Kaolin: A Pytorch Library for Accelerating 3D Deep Learning Research Overview NVIDIA Kaolin library provides a PyTorch API for working with a variety

![[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax](https://github.com/scaomath/galerkin-transformer/raw/main/data/simple_ft.png)

[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax

[NeurIPS 2021] Galerkin Transformer: linear attention without softmax Summary A non-numerical analyst oriented explanation on Toward Data Science abou

Google and Stanford University released a new pre-trained model called ELECTRA

Google and Stanford University released a new pre-trained model called ELECTRA, which has a much compact model size and relatively competitive performance compared to BERT and its variants. For further accelerating the research of the Chinese pre-trained model, the Joint Laboratory of HIT and iFLYTEK Research (HFL) has released the Chinese ELECTRA models based on the official code of ELECTRA. ELECTRA-small could reach similar or even higher scores on several NLP tasks with only 1/10 parameters compared to BERT and its variants.

A Chinese to English Neural Model Translation Project

ZH-EN NMT Chinese to English Neural Machine Translation This project is inspired by Stanford's CS224N NMT Project Dataset used in this project: News C

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Model for recasing and repunctuating ASR transcripts

Recasing and punctuation model based on Bert Benoit Favre 2021 This system converts a sequence of lowercase tokens without punctuation to a sequence o

A fast model to compute optical flow between two input images.

DCVNet: Dilated Cost Volumes for Fast Optical Flow This repository contains our implementation of the paper: @InProceedings{jiang2021dcvnet, title={

Model factory is a ML training platform to help engineers to build ML models at scale

Model Factory Machine learning today is powering many businesses today, e.g., search engine, e-commerce, news or feed recommendation. Training high qu

This YoloV5 based model is fit to detect people and different types of land vehicles, and displaying their density on a fitted map, according to their coordinates and detected labels.

This YoloV5 based model is fit to detect people and different types of land vehicles, and displaying their density on a fitted map, according to their

DziriBERT: a Pre-trained Language Model for the Algerian Dialect

DziriBERT is the first Transformer-based Language Model that has been pre-trained specifically for the Algerian Dialect.

🔥🔥High-Performance Face Recognition Library on PaddlePaddle & PyTorch🔥🔥

face.evoLVe: High-Performance Face Recognition Library based on PaddlePaddle & PyTorch Evolve to be more comprehensive, effective and efficient for fa

Sign Language is detected in realtime using video sequences. Our approach involves MediaPipe Holistic for keypoints extraction and LSTM Model for prediction.

RealTime Sign Language Detection using Action Recognition Approach Real-Time Sign Language is commonly predicted using models whose architecture consi

![A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]](https://user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png)

A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

Python scripts for performing 3D human pose estimation using the Mobile Human Pose model in ONNX.

Python scripts for performing 3D human pose estimation using the Mobile Human Pose model in ONNX.

C++ Implementation of PyTorch Tutorials for Everyone

C++ Implementation of PyTorch Tutorials for Everyone OS (Compiler)\LibTorch 1.9.0 macOS (clang 10.0, 11.0, 12.0) Linux (gcc 8, 9, 10, 11) Windows (msv

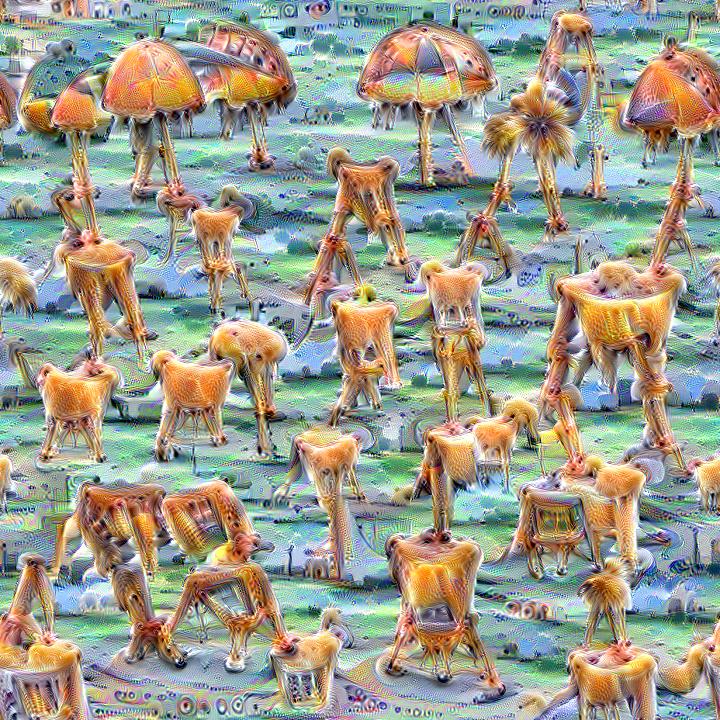

Quickly and easily create / train a custom DeepDream model

Dream-Creator This project aims to simplify the process of creating a custom DeepDream model by using pretrained GoogleNet models and custom image dat

This project deploys a yolo fastest model in the form of tflite on raspberry 3b+. The model is from another repository of mine called -Trash-Classification-Car

Deploy-yolo-fastest-tflite-on-raspberry 觉得有用的话可以顺手点个star嗷 这个项目将垃圾分类小车中的tflite模型移植到了树莓派3b+上面。 该项目主要是为了记录在树莓派部署yolo fastest tflite的流程 (之后有时间会尝试用C++部署来提升

The source code and data of the paper "Instance-wise Graph-based Framework for Multivariate Time Series Forecasting".

IGMTF The source code and data of the paper "Instance-wise Graph-based Framework for Multivariate Time Series Forecasting". Requirements The framework

A simple but complete full-attention transformer with a set of promising experimental features from various papers

x-transformers A concise but fully-featured transformer, complete with a set of promising experimental features from various papers. Install $ pip ins

GLaRA: Graph-based Labeling Rule Augmentation for Weakly Supervised Named Entity Recognition

GLaRA: Graph-based Labeling Rule Augmentation for Weakly Supervised Named Entity Recognition

Code and data to accompany the camera-ready version of "Cross-Attention is All You Need: Adapting Pretrained Transformers for Machine Translation" in EMNLP 2021

Code and data to accompany the camera-ready version of "Cross-Attention is All You Need: Adapting Pretrained Transformers for Machine Translation" in EMNLP 2021

PyTorch implementation of CVPR 2020 paper (Reference-Based Sketch Image Colorization using Augmented-Self Reference and Dense Semantic Correspondence) and pre-trained model on ImageNet dataset

Reference-Based-Sketch-Image-Colorization-ImageNet This is a PyTorch implementation of CVPR 2020 paper (Reference-Based Sketch Image Colorization usin

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Real-Time Multi-Contact Model Predictive Control via ADMM

Here, you can find the code for the paper 'Real-Time Multi-Contact Model Predictive Control via ADMM'. Code is currently being cleared up and optimize

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

A Pytorch implementation of "Splitter: Learning Node Representations that Capture Multiple Social Contexts" (WWW 2019).

Splitter ⠀⠀ A PyTorch implementation of Splitter: Learning Node Representations that Capture Multiple Social Contexts (WWW 2019). Abstract Recent inte

PyTorch original implementation of Cross-lingual Language Model Pretraining.

XLM NEW: Added XLM-R model. PyTorch original implementation of Cross-lingual Language Model Pretraining. Includes: Monolingual language model pretrain

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

Convolutional 2D Knowledge Graph Embeddings resources

ConvE Convolutional 2D Knowledge Graph Embeddings resources. Paper: Convolutional 2D Knowledge Graph Embeddings Used in the paper, but do not use thes

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

PyTorch implementation of Tacotron speech synthesis model.

tacotron_pytorch PyTorch implementation of Tacotron speech synthesis model. Inspired from keithito/tacotron. Currently not as much good speech quality

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Apply Graph Self-Supervised Learning methods to graph-level task(TUDataset, MolculeNet Datset)

Graphlevel-SSL Overview Apply Graph Self-Supervised Learning methods to graph-level task(TUDataset, MolculeNet Dataset). It is unified framework to co

Dynamic Attentive Graph Learning for Image Restoration, ICCV2021 [PyTorch Code]

Dynamic Attentive Graph Learning for Image Restoration This repository is for GATIR introduced in the following paper: Chong Mou, Jian Zhang, Zhuoyuan

High level network definitions with pre-trained weights in TensorFlow

TensorNets High level network definitions with pre-trained weights in TensorFlow (tested with 2.1.0 = TF = 1.4.0). Guiding principles Applicability.

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Intent parsing and slot filling in PyTorch with seq2seq + attention

PyTorch Seq2Seq Intent Parsing Reframing intent parsing as a human - machine translation task. Work in progress successor to torch-seq2seq-intent-pars

DeepLab resnet v2 model in pytorch

pytorch-deeplab-resnet DeepLab resnet v2 model implementation in pytorch. The architecture of deepLab-ResNet has been replicated exactly as it is from

Improving Convolutional Networks via Attention Transfer (ICLR 2017)

Attention Transfer PyTorch code for "Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Tran

CNNs for Sentence Classification in PyTorch

Introduction This is the implementation of Kim's Convolutional Neural Networks for Sentence Classification paper in PyTorch. Kim's implementation of t

PyTorch implementation of "Learning to Discover Cross-Domain Relations with Generative Adversarial Networks"

DiscoGAN in PyTorch PyTorch implementation of Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. * All samples in READM

Task-based end-to-end model learning in stochastic optimization

Task-based End-to-end Model Learning in Stochastic Optimization This repository is by Priya L. Donti, Brandon Amos, and J. Zico Kolter and contains th

Official DGL implementation of "Rethinking High-order Graph Convolutional Networks"

SE Aggregation This is the implementation for Rethinking High-order Graph Convolutional Networks. Here we show the codes for citation networks as an e

Official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT This repository is the official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification. ArXiv If

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

TextAttack 🐙 is a Python framework for adversarial attacks, data augmentation, and model training in NLP

TextAttack 🐙 Generating adversarial examples for NLP models [TextAttack Documentation on ReadTheDocs] About • Setup • Usage • Design About TextAttack

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Django model field that can hold a geoposition, and corresponding widget

django-geoposition A model field that can hold a geoposition (latitude/longitude), and corresponding admin/form widget. Prerequisites Starting with ve

Learnable Multi-level Frequency Decomposition and Hierarchical Attention Mechanism for Generalized Face Presentation Attack Detection

LMFD-PAD Note This is the official repository of the paper: LMFD-PAD: Learnable Multi-level Frequency Decomposition and Hierarchical Attention Mechani

Learning based AI for playing multi-round Koi-Koi hanafuda card games. Have fun.

Koi-Koi AI Learning based AI for playing multi-round Koi-Koi hanafuda card games. Platform Python PyTorch PySimpleGUI (for the interface playing vs AI

Incorporating KenLM language model with HuggingFace implementation of Wav2Vec2CTC Model using beam search decoding

Wav2Vec2CTC With KenLM Using KenLM ARPA language model with beam search to decode audio files and show the most probable transcription. Assuming you'v

Replication attempt for the Protein Folding Model

RGN2-Replica (WIP) To eventually become an unofficial working Pytorch implementation of RGN2, an state of the art model for MSA-less Protein Folding f

Beta Distribution Guided Aspect-aware Graph for Aspect Category Sentiment Analysis with Affective Knowledge. Proceedings of EMNLP 2021

AAGCN-ACSA EMNLP 2021 Introduction This repository was used in our paper: Beta Distribution Guided Aspect-aware Graph for Aspect Category Sentiment An