600 Repositories

Python onnx-transformers Libraries

RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2

RoNER RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2. It is meant to be an easy to use, hi

A curated list and survey of awesome Vision Transformers.

English | 简体中文 A curated list and survey of awesome Vision Transformers. You can use mind mapping software to open the mind mapping source file. You c

BERTopic is a topic modeling technique that leverages 🤗 transformers and c-TF-IDF to create dense clusters allowing for easily interpretable topics whilst keeping important words in the topic descriptions

BERTopic BERTopic is a topic modeling technique that leverages 🤗 transformers and c-TF-IDF to create dense clusters allowing for easily interpretable

Awesome Transformers in Medical Imaging

This repo supplements our Survey on Transformers in Medical Imaging Fahad Shamshad, Salman Khan, Syed Waqas Zamir, Muhammad Haris Khan, Munawar Hayat,

Pytorch Implementation of Residual Vision Transformers(ResViT)

ResViT Official Pytorch Implementation of Residual Vision Transformers(ResViT) which is described in the following paper: Onat Dalmaz and Mahmut Yurt

This code is for our paper "VTGAN: Semi-supervised Retinal Image Synthesis and Disease Prediction using Vision Transformers"

ICCV Workshop 2021 VTGAN This code is for our paper "VTGAN: Semi-supervised Retinal Image Synthesis and Disease Prediction using Vision Transformers"

Unsupervised MRI Reconstruction via Zero-Shot Learned Adversarial Transformers

Official TensorFlow implementation of the unsupervised reconstruction model using zero-Shot Learned Adversarial TransformERs (SLATER). (https://arxiv.

Accelerated Multi-Modal MR Imaging with Transformers

Accelerated Multi-Modal MR Imaging with Transformers Dependencies numpy==1.18.5 scikit_image==0.16.2 torchvision==0.8.1 torch==1.7.0 runstats==1.8.0 p

This repo holds the code of TransFuse: Fusing Transformers and CNNs for Medical Image Segmentation

TransFuse This repo holds the code of TransFuse: Fusing Transformers and CNNs for Medical Image Segmentation Requirements Pytorch=1.6.0, 1.9.0 (=1.

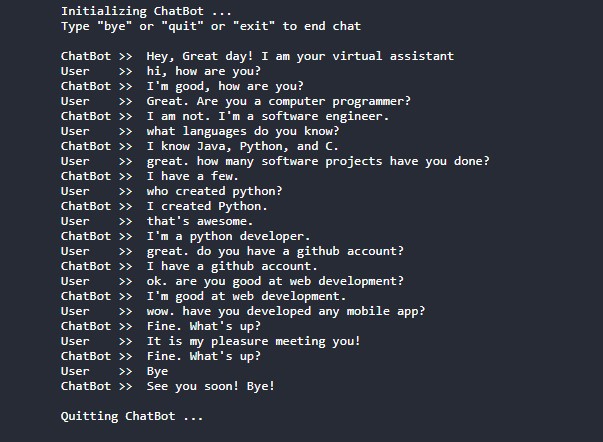

ChatBot-Pytorch - A GPT-2 ChatBot implemented using Pytorch and Huggingface-transformers

ChatBot-Pytorch A GPT-2 ChatBot implemented using Pytorch and Huggingface-transf

Use AutoModelForSeq2SeqLM in Huggingface Transformers to train COMET

Training COMET using seq2seq setting Use AutoModelForSeq2SeqLM in Huggingface Transformers to train COMET. The codes are modified from run_summarizati

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents [Project Page] [Paper] [Video] Wenlong Huang1, Pieter Abbee

Transformer in Vision

Transformer-in-Vision Recent Transformer-based CV and related works. Welcome to comment/contribute! Keep updated. Resource SCENIC: A JAX Library for C

Dynamic Token Normalization Improves Vision Transformers

Dynamic Token Normalization Improves Vision Transformers This is the PyTorch implementation of the paper Dynamic Token Normalization Improves Vision T

Look Closer: Bridging Egocentric and Third-Person Views with Transformers for Robotic Manipulation

Look Closer: Bridging Egocentric and Third-Person Views with Transformers for Robotic Manipulation Official PyTorch implementation for the paper Look

TransMVSNet: Global Context-aware Multi-view Stereo Network with Transformers.

TransMVSNet This repository contains the official implementation of the paper: "TransMVSNet: Global Context-aware Multi-view Stereo Network with Trans

3D-RETR: End-to-End Single and Multi-View3D Reconstruction with Transformers

3D-RETR: End-to-End Single and Multi-View 3D Reconstruction with Transformers (BMVC 2021) Zai Shi*, Zhao Meng*, Yiran Xing, Yunpu Ma, Roger Wattenhofe

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers This is the repo used for human motion prediction with non-autoregress

In this project, we compared Spanish BERT and Multilingual BERT in the Sentiment Analysis task.

Applying BERT Fine Tuning to Sentiment Classification on Amazon Reviews Abstract Sentiment analysis has made great progress in recent years, due to th

This repository contains demos I made with the Transformers library by HuggingFace.

Transformers-Tutorials Hi there! This repository contains demos I made with the Transformers library by 🤗 HuggingFace. Currently, all of them are imp

SynapseML - an open source library to simplify the creation of scalable machine learning pipelines

Synapse Machine Learning SynapseML (previously MMLSpark) is an open source library to simplify the creation of scalable machine learning pipelines. Sy

No-Reference Image Quality Assessment via Transformers, Relative Ranking, and Self-Consistency

This repository contains the implementation for the paper: No-Reference Image Quality Assessment via Transformers, Relative Ranking, and Self-Consiste

HAT: Hierarchical Aggregation Transformers for Person Re-identification

HAT: Hierarchical Aggregation Transformers for Person Re-identification

"Exploring Vision Transformers for Fine-grained Classification" at CVPRW FGVC8

FGVC8 Exploring Vision Transformers for Fine-grained Classification paper presented at the CVPR 2021, The Eight Workshop on Fine-Grained Visual Catego

Official implementation of "Refiner: Refining Self-attention for Vision Transformers".

RefinerViT This repo is the official implementation of "Refiner: Refining Self-attention for Vision Transformers". The repo is build on top of timm an

RETRO-pytorch - Implementation of RETRO, Deepmind's Retrieval based Attention net, in Pytorch

RETRO - Pytorch (wip) Implementation of RETRO, Deepmind's Retrieval based Attent

A Unified Framework and Analysis for Structured Knowledge Grounding

UnifiedSKG 📚 : Unifying and Multi-Tasking Structured Knowledge Grounding with Text-to-Text Language Models Code for paper UnifiedSKG: Unifying and Mu

![[CVPR 2021]](https://github.com/decisionforce/mmTransformer/raw/main/figs/model.png)

[CVPR 2021] "Multimodal Motion Prediction with Stacked Transformers": official code implementation and project page.

mmTransformer Introduction This repo is official implementation for mmTransformer in pytorch. Currently, the core code of mmTransformer is implemented

Official PyTorch Implementation of "AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecasting".

AgentFormer This repo contains the official implementation of our paper: AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecast

"Investigating the Limitations of Transformers with Simple Arithmetic Tasks", 2021

transformers-arithmetic This repository contains the code to reproduce the experiments from the paper: Nogueira, Jiang, Lin "Investigating the Limitat

Pytorch implementation of Compressive Transformers, from Deepmind

Compressive Transformer in Pytorch Pytorch implementation of Compressive Transformers, a variant of Transformer-XL with compressed memory for long-ran

The accompanying code for the paper "GMAT: Global Memory Augmentation for Transformers" (Ankit Gupta and Jonathan Berant).

GMAT: Global Memory Augmentation for Transformers This repository contains the accompanying code for the paper: "GMAT: Global Memory Augmentation for

Implementation of Memformer, a Memory-augmented Transformer, in Pytorch

Memformer - Pytorch Implementation of Memformer, a Memory-augmented Transformer, in Pytorch. It includes memory slots, which are updated with attentio

Official code repository of the paper Linear Transformers Are Secretly Fast Weight Programmers.

Linear Transformers Are Secretly Fast Weight Programmers This repository contains the code accompanying the paper Linear Transformers Are Secretly Fas

YOLOPのPythonでのONNX推論サンプル

YOLOP-ONNX-Video-Inference-Sample YOLOPのPythonでのONNX推論サンプルです。 ONNXモデルは、hustvl/YOLOP/weights を使用しています。 Requirement OpenCV 3.4.2 or later onnxruntime 1.

Unofficial JAX implementations of Deep Learning models

JAX Models Table of Contents About The Project Getting Started Prerequisites Installation Usage Contributing License Contact About The Project The JAX

A Transformer Implementation that is easy to understand and customizable.

Simple Transformer I've written a series of articles on the transformer architecture and language models on Medium. This repository contains an implem

Paddle pit - Rethinking Spatial Dimensions of Vision Transformers

基于Paddle实现PiT ——Rethinking Spatial Dimensions of Vision Transformers,arxiv 官方原版代

Transformers based fully on MLPs

Awesome MLP-based Transformers papers An up-to-date list of Transformers based fully on MLPs without attention! Why this repo? After transformers and

A curated list of the top 10 computer vision papers in 2021 with video demos, articles, code and paper reference.

The Top 10 Computer Vision Papers of 2021 The top 10 computer vision papers in 2021 with video demos, articles, code, and paper reference. While the w

Setup and customize deep learning environment in seconds.

Deepo is a series of Docker images that allows you to quickly set up your deep learning research environment supports almost all commonly used deep le

A Demo server serving Bert through ONNX with GPU written in Rust with 3

Demo BERT ONNX server written in rust This demo showcase the use of onnxruntime-rs on BERT with a GPU on CUDA 11 served by actix-web and tokenized wit

Advbox is a toolbox to generate adversarial examples that fool neural networks in PaddlePaddle、PyTorch、Caffe2、MxNet、Keras、TensorFlow and Advbox can benchmark the robustness of machine learning models.

Advbox is a toolbox to generate adversarial examples that fool neural networks in PaddlePaddle、PyTorch、Caffe2、MxNet、Keras、TensorFlow and Advbox can benchmark the robustness of machine learning models. Advbox give a command line tool to generate adversarial examples with Zero-Coding.

It is a simple library to speed up CLIP inference up to 3x (K80 GPU)

CLIP-ONNX It is a simple library to speed up CLIP inference up to 3x (K80 GPU) Usage Install clip-onnx module and requirements first. Use this trick !

Repository for Project Insight: NLP as a Service

Project Insight NLP as a Service Contents Introduction Features Installation Setup and Documentation Project Details Demonstration Directory Details H

A Streamlit web app that generates Rick and Morty stories using GPT2.

Rick and Morty Story Generator This project uses a pre-trained GPT2 model, which was fine-tuned on Rick and Morty transcripts, to generate new stories

Rhyme with AI

Local development Create a conda virtual environment and activate it: conda env create --file environment.yml conda activate rhyme-with-ai Install the

KoBERT - Korean BERT pre-trained cased (KoBERT)

KoBERT KoBERT Korean BERT pre-trained cased (KoBERT) Why'?' Training Environment Requirements How to install How to use Using with PyTorch Using with

Conversational-AI-ChatBot - Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users!

Conversational AI ChatBot Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users! In this project? Thi

ncnn is a high-performance neural network inference framework optimized for the mobile platform

ncnn ncnn is a high-performance neural network inference computing framework optimized for mobile platforms. ncnn is deeply considerate about deployme

Official implementation of CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21

CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21 For more information, check out the paper on [arXiv]. Training with different

Source code of AAAI 2022 paper "Towards End-to-End Image Compression and Analysis with Transformers".

Towards End-to-End Image Compression and Analysis with Transformers Source code of our AAAI 2022 paper "Towards End-to-End Image Compression and Analy

Pre-training of Graph Augmented Transformers for Medication Recommendation

G-Bert Pre-training of Graph Augmented Transformers for Medication Recommendation Intro G-Bert combined the power of Graph Neural Networks and BERT (B

Implementation of the paper "Fine-Tuning Transformers: Vocabulary Transfer"

Transformer-vocabulary-transfer Implementation of the paper "Fine-Tuning Transfo

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

Utilize Korean BERT model in sentence-transformers library

ko-sentence-transformers 이 프로젝트는 KoBERT 모델을 sentence-transformers 에서 보다 쉽게 사용하기 위해 만들어졌습니다. Ko-Sentence-BERT-SKTBERT 프로젝트에서는 KoBERT 모델을 sentence-trans

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

ONNX Command-Line Toolbox

ONNX Command Line Toolbox Aims to improve your experience of investigating ONNX models. Use it like onnx infershape /path/to/model.onnx. (See the usag

jiant is an NLP toolkit

🚨 Update 🚨 : As of 2021/10/17, the jiant project is no longer being actively maintained. This means there will be no plans to add new models, tasks,

An executor that loads ONNX models and embeds documents using the ONNX runtime.

ONNXEncoder An executor that loads ONNX models and embeds documents using the ONNX runtime. Usage via Docker image (recommended) from jina import Flow

SeMask: Semantically Masked Transformers for Semantic Segmentation.

SeMask: Semantically Masked Transformers Jitesh Jain, Anukriti Singh, Nikita Orlov, Zilong Huang, Jiachen Li, Steven Walton, Humphrey Shi This repo co

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

ONNX Runtime is a cross-platform inference and training machine-learning accelerator. ONNX Runtime inference can enable faster customer experiences an

⛵️The official PyTorch implementation for "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing" (EMNLP 2020).

BERT-of-Theseus Code for paper "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing". BERT-of-Theseus is a new compressed BERT by progre

A quick recipe to learn all about Transformers

Transformers have accelerated the development of new techniques and models for natural language processing (NLP) tasks.

Yuno is context based search engine for anime.

Yuno yuno.mp4 Table of Contents Introduction Power Of Yuno Try Yuno How Yuno was created? References Introduction Yuno is a context based search engin

Code for the ICCV'21 paper "Context-aware Scene Graph Generation with Seq2Seq Transformers"

ICCV'21 Context-aware Scene Graph Generation with Seq2Seq Transformers Authors: Yichao Lu*, Himanshu Rai*, Cheng Chang*, Boris Knyazev†, Guangwei Yu,

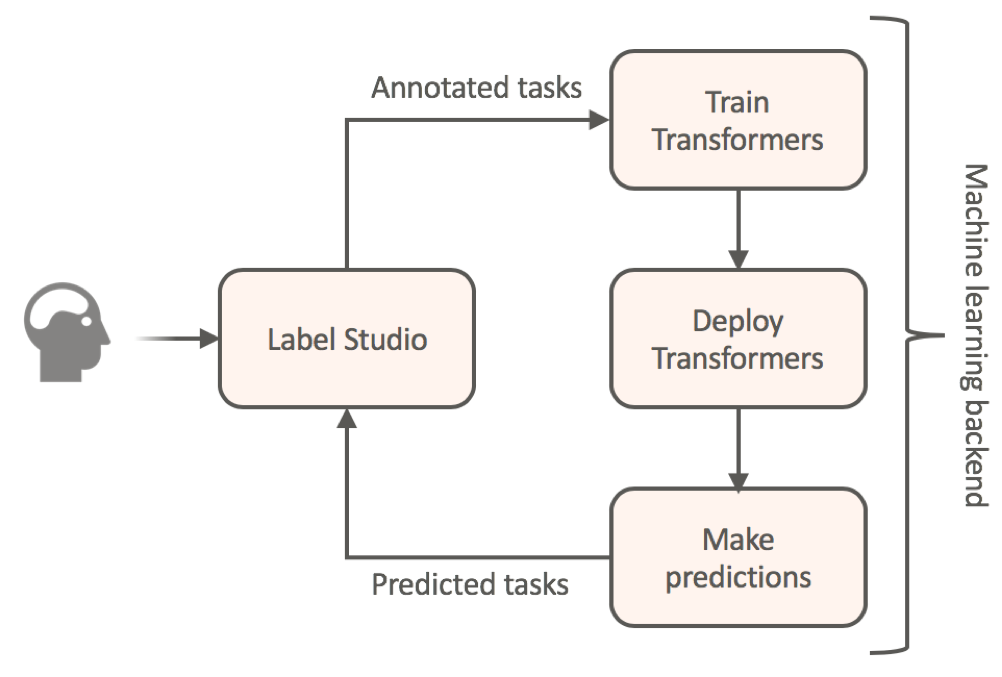

Label data using HuggingFace's transformers and automatically get a prediction service

Label Studio for Hugging Face's Transformers Website • Docs • Twitter • Join Slack Community Transfer learning for NLP models by annotating your textu

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

GANformer: Generative Adversarial Transformers

GANformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick Update: We released the new GANformer2 paper! *I wish to thank Ch

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Examples of using sparse attention, as in "Generating Long Sequences with Sparse Transformers"

Status: Archive (code is provided as-is, no updates expected) Update August 2020: For an example repository that achieves state-of-the-art modeling pe

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Text Classification in Turkish Texts with Bert

You can watch the details of the project on my youtube channel Project Interface Project Second Interface Goal= Correctly guessing the classification

Powerful unsupervised domain adaptation method for dense retrieval.

Powerful unsupervised domain adaptation method for dense retrieval

Convert onnx models to pytorch.

onnx2torch onnx2torch is an ONNX to PyTorch converter. Our converter: Is easy to use – Convert the ONNX model with the function call convert; Is easy

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

keytotext Idea is to build a model which will take keywords as inputs and generate sentences as outputs. Potential use case can include: Marketing Sea

A repository with exploration into using transformers to predict DNA ↔ transcription factor binding

Transcription Factor binding predictions with Attention and Transformers A repository with exploration into using transformers to predict DNA ↔ transc

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

Python scripts for performing object detection with the 1000 labels of the ImageNet dataset in ONNX.

Python scripts for performing object detection with the 1000 labels of the ImageNet dataset in ONNX. The repository combines a class agnostic object localizer to first detect the objects in the image, and next a ResNet50 model trained on ImageNet is used to label each box.

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets What is LASSL • How to Use What is LASSL LASSL은 LAnguage Semi-Super

Pangu-Alpha for Transformers

Pangu-Alpha for Transformers Usage Download MindSpore FP32 weights for GPU from here to data/Pangu-alpha_2.6B.ckpt Activate MindSpore environment and

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

Automatically replace ONNX's RandomNormal node with Constant node.

onnx-remove-random-normal This is a script to replace RandomNormal node with Constant node. Example Imagine that we have something ONNX model like the

2D Human Pose estimation using transformers. Implementation in Pytorch

PE-former: Pose Estimation Transformer Vision transformer architectures perform very well for image classification tasks. Efforts to solve more challe

Experiments and examples converting Transformers to ONNX

Experiments and examples converting Transformers to ONNX This repository containes experiments and examples on converting different Transformers to ON

SOLOv2 on onnx & tensorRT

SOLOv2.tensorRT: NOTE: code based on WXinlong/SOLO add support to TensorRT inference onnxruntime tensorRT full_dims and dynamic shape postprocess with

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

Hashformers is a framework for hashtag segmentation with transformers.

Hashtag segmentation is the task of automatically inserting the missing spaces between the words in a hashtag. Hashformers applies Transformer models

Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal"

Patch-wise Adversarial Removal Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal

Post-Training Quantization for Vision transformers.

PTQ4ViT Post-Training Quantization Framework for Vision Transformers. We use the twin uniform quantization method to reduce the quantization error on

![[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining](https://github.com/microsoft/COCO-LM/raw/main/coco-lm.png)

[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining

COCO-LM This repository contains the scripts for fine-tuning COCO-LM pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: COCO-LM: Correcting an

This is a re-implementation of TransGAN: Two Pure Transformers Can Make One Strong GAN (CVPR 2021) in PyTorch.

TransGAN: Two Transformers Can Make One Strong GAN [YouTube Video] Paper Authors: Yifan Jiang, Shiyu Chang, Zhangyang Wang CVPR 2021 This is re-implem

Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch

N-Grammer - Pytorch Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch Install $ pip install n-grammer-pytorch Usage