1588 Repositories

Python pre-trained-model Libraries

Mesh TensorFlow: Model Parallelism Made Easier

Mesh TensorFlow - Model Parallelism Made Easier Introduction Mesh TensorFlow (mtf) is a language for distributed deep learning, capable of specifying

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models

PEGASUS library Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models, or PEGASUS, uses self-supervised

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Hiring We are hiring at all levels (including FTE researchers and interns)! If you are interested in working with us on NLP and large-scale pre-traine

Unsupervised Language Model Pre-training for French

FlauBERT and FLUE FlauBERT is a French BERT trained on a very large and heterogeneous French corpus. Models of different sizes are trained using the n

Code for EMNLP20 paper: "ProphetNet: Predicting Future N-gram for Sequence-to-Sequence Pre-training"

ProphetNet-X This repo provides the code for reproducing the experiments in ProphetNet. In the paper, we propose a new pre-trained language model call

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

CodeBERT: A Pre-Trained Model for Programming and Natural Languages.

CodeBERT This repo provides the code for reproducing the experiments in CodeBERT: A Pre-Trained Model for Programming and Natural Languages. CodeBERT

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

ELECTRA Introduction ELECTRA is a method for self-supervised language representation learning. It can be used to pre-train transformer networks using

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Memory-Augmented Model Predictive Control

Memory-Augmented Model Predictive Control This repository hosts the source code for the journal article "Composing MPC with LQR and Neural Networks fo

A program that uses an API and a AI model to get info of sotcks

Stock-Market-AI-Analysis I dont mind anyone using this code but please give me credit A program that uses an API and a AI model to get info of stocks

SeqFormer: a Frustratingly Simple Model for Video Instance Segmentation

SeqFormer: a Frustratingly Simple Model for Video Instance Segmentation SeqFormer SeqFormer: a Frustratingly Simple Model for Video Instance Segmentat

A Python library for electronic structure pre/post-processing

PyProcar PyProcar is a robust, open-source Python library used for pre- and post-processing of the electronic structure data coming from DFT calculati

A Python description of the Kinematic Bicycle Model with an animated example.

Kinematic Bicycle Model Abstract A python library for the Kinematic Bicycle model. The Kinematic Bicycle is a compromise between the non-linear and li

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm. It contains the code to reproduce the results presented in the original paper: https://arxiv.org/abs/2112.03670

Telegram chatbot created with deep learning model (LSTM) and telebot library.

Telegram chatbot Telegram chatbot created with deep learning model (LSTM) and telebot library. Description This program will allow you to create very

A toolkit for document-level event extraction, containing some SOTA model implementations

❤️ A Toolkit for Document-level Event Extraction with & without Triggers Hi, there 👋 . Thanks for your stay in this repo. This project aims at buildi

A 1.3B text-to-image generation model trained on 14 million image-text pairs

minDALL-E on Conceptual Captions minDALL-E, named after minGPT, is a 1.3B text-to-image generation model trained on 14 million image-text pairs for no

Using Bert as the backbone model for lime, designed for NLP task explanation (sentence pair text classification task)

Lime Comparing deep contextualized model for sentences highlighting task. In addition, take the classic explanation model "LIME" with bert-base model

Multiwavelets-based operator model

Multiwavelet model for Operator maps Gaurav Gupta, Xiongye Xiao, and Paul Bogdan Multiwavelet-based Operator Learning for Differential Equations In Ne

GT China coal model

GT China coal model The full version of a China coal transport model with a very high spatial reslution. What it does The code works in a few steps: T

Baseline powergrid model for NY

Baseline-powergrid-model-for-NY Table of Contents About The Project Built With Usage License Contact Acknowledgements About The Project As the urgency

Discovering Explanatory Sentences in Legal Case Decisions Using Pre-trained Language Models.

Statutory Interpretation Data Set This repository contains the data set created for the following research papers: Savelka, Jaromir, and Kevin D. Ashl

flake8 plugin which checks that typing imports are properly guarded

flake8-typing-imports flake8 plugin which checks that typing imports are properly guarded installation pip install flake8-typing-imports flake8 codes

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

keytotext Idea is to build a model which will take keywords as inputs and generate sentences as outputs. Potential use case can include: Marketing Sea

A toolkit for document-level event extraction, containing some SOTA model implementations

Document-level Event Extraction via Heterogeneous Graph-based Interaction Model with a Tracker Source code for ACL-IJCNLP 2021 Long paper: Document-le

Centroid-UNet is deep neural network model to detect centroids from satellite images.

Centroid UNet - Locating Object Centroids in Aerial/Serial Images Introduction Centroid-UNet is deep neural network model to detect centroids from Aer

Anchor Retouching via Model Interaction for Robust Object Detection in Aerial Images

Anchor Retouching via Model Interaction for Robust Object Detection in Aerial Images In this paper, we present an effective Dynamic Enhancement Anchor

Pydantic model generator for easy conversion of JSON, OpenAPI, JSON Schema, and YAML data sources.

datamodel-code-generator This code generator creates pydantic model from an openapi file and others. Help See documentation for more details. Supporte

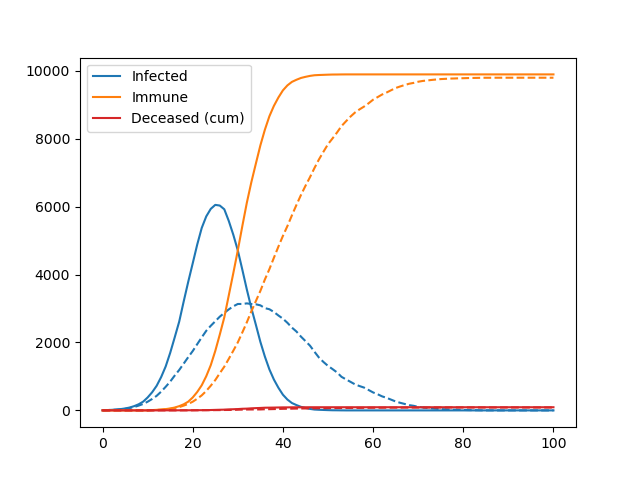

A simple agent-based model used to teach the basics of OOP in my lectures

Pydemic A simple agent-based model of a pandemic. This is used to teach basic principles of object-oriented programming to master students. It is not

A minimal code for fairseq vq-wav2vec model inference.

vq-wav2vec inference A minimal code for fairseq vq-wav2vec model inference. Runs without installing the fairseq toolkit and its dependencies. Usage ex

InDuDoNet+: A Model-Driven Interpretable Dual Domain Network for Metal Artifact Reduction in CT Images

InDuDoNet+: A Model-Driven Interpretable Dual Domain Network for Metal Artifact Reduction in CT Images Hong Wang, Yuexiang Li, Haimiao Zhang, Deyu Men

Code for 'Blockwise Sequential Model Learning for Partially Observable Reinforcement Learning' (AAAI 2022)

Blockwise Sequential Model Learning Code for 'Blockwise Sequential Model Learning for Partially Observable Reinforcement Learning' (AAAI 2022) For ins

PyWorld3 is a Python implementation of the World3 model

The World3 model revisited in Python Install & Hello World3 How to tune your own simulation Licence How to cite PyWorld3 with Bibtex References & ackn

Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts

t5-japanese Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts. The following is a list of models that

A super lightweight Lagrangian model for calculating millions of trajectories using ERA5 data

Easy-ERA5-Trck Easy-ERA5-Trck Galleries Install Usage Repository Structure Module Files Version iteration Easy-ERA5-Trck is a super lightweight Lagran

A library for researching neural networks compression and acceleration methods.

A library for researching neural networks compression and acceleration methods.

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets What is LASSL • How to Use What is LASSL LASSL은 LAnguage Semi-Super

Neural-PIL: Neural Pre-Integrated Lighting for Reflectance Decomposition - NeurIPS2021

Neural-PIL: Neural Pre-Integrated Lighting for Reflectance Decomposition Project Page | Video | Paper Implementation for Neural-PIL. A novel method wh

Cortex-compatible model server for Python and TensorFlow

Nucleus model server Nucleus is a model server for TensorFlow and generic Python models. It is compatible with Cortex clusters, Kubernetes clusters, a

A python code to convert Keras pre-trained weights to Pytorch version

Weights_Keras_2_Pytorch 最近想在Pytorch项目里使用一下谷歌的NIMA,但是发现没有预训练好的pytorch权重,于是整理了一下将Keras预训练权重转为Pytorch的代码,目前是支持Keras的Conv2D, Dense, DepthwiseConv2D, Batch

Model of an AI powered sign language interpreter.

TEXT AND SPEECH TO SIGN LANGUAGE. A web application which takes in text or live audio speech recording as input, converts and displays the relevant Si

The Pytorch implementation for "Video-Text Pre-training with Learned Regions"

Region_Learner The Pytorch implementation for "Video-Text Pre-training with Learned Regions" (arxiv) We are still cleaning up the code further and pre

Run isort, pyupgrade, mypy, pylint, flake8, and more on Jupyter Notebooks

Run isort, pyupgrade, mypy, pylint, flake8, mdformat, black, blacken-docs, and more on Jupyter Notebooks ✅ handles IPython magics robustly ✅ respects

Code for ShadeGAN (NeurIPS2021) A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis

A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis Project Page | Paper A Shading-Guided Generative Implicit Model

Hardware-accelerated DNN model inference ROS2 packages using NVIDIA Triton/TensorRT for both Jetson and x86_64 with CUDA-capable GPU

Isaac ROS DNN Inference Overview This repository provides two NVIDIA GPU-accelerated ROS2 nodes that perform deep learning inference using custom mode

Codes for CyGen, the novel generative modeling framework proposed in "On the Generative Utility of Cyclic Conditionals" (NeurIPS-21)

On the Generative Utility of Cyclic Conditionals This repository is the official implementation of "On the Generative Utility of Cyclic Conditionals"

Autoregressive Models in PyTorch.

Autoregressive This repository contains all the necessary PyTorch code, tailored to my presentation, to train and generate data from WaveNet-like auto

Official repository for Fourier model that can generate periodic signals

Conditional Generation of Periodic Signals with Fourier-Based Decoder Jiyoung Lee, Wonjae Kim, Daehoon Gwak, Edward Choi This repository provides offi

Python 3.6+ Asyncio PostgreSQL query builder and model

windyquery - A non-blocking Python PostgreSQL query builder Windyquery is a non-blocking PostgreSQL query builder with Asyncio. Installation $ pip ins

rastrainer is a QGIS plugin to training remote sensing semantic segmentation model based on PaddlePaddle.

rastrainer rastrainer is a QGIS plugin to training remote sensing semantic segmentation model based on PaddlePaddle. UI TODO Init UI. Add Block. Add l

Causal Imitative Model for Autonomous Driving

Causal Imitative Model for Autonomous Driving Mohammad Reza Samsami, Mohammadhossein Bahari, Saber Salehkaleybar, Alexandre Alahi. arXiv 2021. [Projec

Official Implementation of SimIPU: Simple 2D Image and 3D Point Cloud Unsupervised Pre-Training for Spatial-Aware Visual Representations

Official Implementation of SimIPU SimIPU: Simple 2D Image and 3D Point Cloud Unsupervised Pre-Training for Spatial-Aware Visual Representations Since

Convert openmmlab (not only mmdetection) series model to tensorrt

MMDet to TensorRT This project aims to convert the mmdetection model to TensorRT model end2end. Focus on object detection for now. Mask support is exp

VarCLR: Variable Semantic Representation Pre-training via Contrastive Learning

VarCLR: Variable Representation Pre-training via Contrastive Learning New: Paper accepted by ICSE 2022. Preprint at arXiv! This repository contain

iBOT: Image BERT Pre-Training with Online Tokenizer

Image BERT Pre-Training with iBOT Official PyTorch implementation and pretrained models for paper iBOT: Image BERT Pre-Training with Online Tokenizer.

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce (EMNLP Founding 2021)

Introduction K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce. Installation PyTor

RuleBERT: Teaching Soft Rules to Pre-Trained Language Models

RuleBERT: Teaching Soft Rules to Pre-Trained Language Models (Paper) (Slides) (Video) RuleBERT is a pre-trained language model that has been fine-tune

Concept drift monitoring for HA model servers.

{Fast, Correct, Simple} - pick three Easily compare training and production ML data & model distributions Goals Boxkite is an instrumentation library

Conceptual 12M is a dataset containing (image-URL, caption) pairs collected for vision-and-language pre-training.

Conceptual 12M We introduce the Conceptual 12M (CC12M), a dataset with ~12 million image-text pairs meant to be used for vision-and-language pre-train

Machine Learning automation and tracking

The Open-Source MLOps Orchestration Framework MLRun is an open-source MLOps framework that offers an integrative approach to managing your machine-lea

iBOT: Image BERT Pre-Training with Online Tokenizer

Image BERT Pre-Training with iBOT Official PyTorch implementation and pretrained models for paper iBOT: Image BERT Pre-Training with Online Tokenizer.

A simple and lightweight genetic algorithm for optimization of any machine learning model

geneticml This package contains a simple and lightweight genetic algorithm for optimization of any machine learning model. Installation Use pip to ins

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

Model Validation Toolkit is a collection of tools to assist with validating machine learning models prior to deploying them to production and monitoring them after deployment to production.

Model Validation Toolkit is a collection of tools to assist with validating machine learning models prior to deploying them to production and monitoring them after deployment to production.

Template for pre-commit hooks

Pre-commit hook template This repo is a template for a pre-commit hook. Try it out by running: pre-commit try-repo https://github.com/stefsmeets/pre-c

DOP-Tuning(Domain-Oriented Prefix-tuning model)

DOP-Tuning DOP-Tuning(Domain-Oriented Prefix-tuning model)代码基于Prefix-Tuning改进. Files ├── seq2seq # Code for encoder-decoder arch

Interactive Visualization to empower domain experts to align ML model behaviors with their knowledge.

An interactive visualization system designed to helps domain experts responsibly edit Generalized Additive Models (GAMs). For more information, check

Suite of 500 procedurally-generated NLP tasks to study language model adaptability

TaskBench500 The TaskBench500 dataset and code for generating tasks. Data The TaskBench dataset is available under wget http://web.mit.edu/bzl/www/Tas

GLIP: Grounded Language-Image Pre-training

GLIP: Grounded Language-Image Pre-training Updates 12/06/2021: GLIP paper on arxiv https://arxiv.org/abs/2112.03857. Code and Model are under internal

A Conditional Point Diffusion-Refinement Paradigm for 3D Point Cloud Completion

A Conditional Point Diffusion-Refinement Paradigm for 3D Point Cloud Completion This repo intends to release code for our work: Zhaoyang Lyu*, Zhifeng

RaceBERT -- A transformer based model to predict race and ethnicty from names

RaceBERT -- A transformer based model to predict race and ethnicty from names Installation pip install racebert Using a virtual environment is highly

BrainGNN - A deep learning model for data-driven discovery of functional connectivity

A deep learning model for data-driven discovery of functional connectivity https://doi.org/10.3390/a14030075 Usman Mahmood, Zengin Fu, Vince D. Calhou

Pretrained Cost Model for Distributed Constraint Optimization Problems

Pretrained Cost Model for Distributed Constraint Optimization Problems Requirements PyTorch 1.9.0 PyTorch Geometric 1.7.1 Directory structure baseline

Suite of 500 procedurally-generated NLP tasks to study language model adaptability

TaskBench500 The TaskBench500 dataset and code for generating tasks. Data The TaskBench dataset is available under wget http://web.mit.edu/bzl/www/Tas

![[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining](https://github.com/microsoft/COCO-LM/raw/main/coco-lm.png)

[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining

COCO-LM This repository contains the scripts for fine-tuning COCO-LM pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: COCO-LM: Correcting an

Exploit grafana Pre-Auth LFI

Grafana-LFI-8.x Exploit grafana Pre-Auth LFI How to use python3

A simple and lightweight genetic algorithm for optimization of any machine learning model

geneticml This package contains a simple and lightweight genetic algorithm for optimization of any machine learning model. Installation Use pip to ins

My tensorflow implementation of "A neural conversational model", a Deep learning based chatbot

Deep Q&A Table of Contents Presentation Installation Running Chatbot Web interface Results Pretrained model Improvements Upgrade Presentation This wor

Translate darknet to tensorflow. Load trained weights, retrain/fine-tune using tensorflow, export constant graph def to mobile devices

Intro Real-time object detection and classification. Paper: version 1, version 2. Read more about YOLO (in darknet) and download weight files here. In

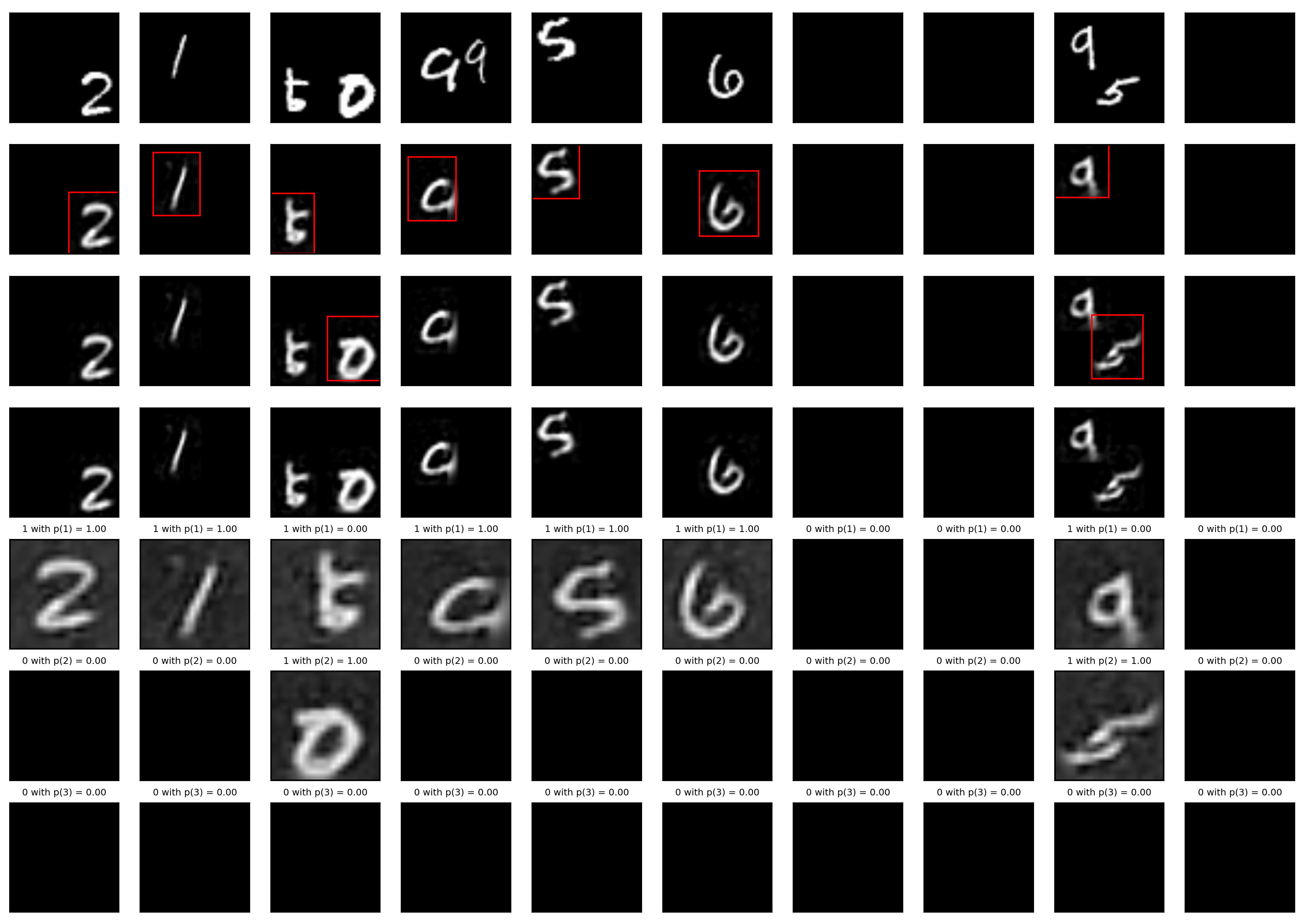

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

Sarus implementation of classical ML models. The models are implemented using the Keras API of tensorflow 2. Vizualization are implemented and can be seen in tensorboard.

Sarus published models Sarus implementation of classical ML models. The models are implemented using the Keras API of tensorflow 2. Vizualization are

Defending against Model Stealing via Verifying Embedded External Features

Defending against Model Stealing Attacks via Verifying Embedded External Features This is the official implementation of our paper Defending against M

Unofficial Implementation of MLP-Mixer, Image Classification Model

MLP-Mixer Unoffical Implementation of MLP-Mixer, easy to use with terminal. Train and test easly. https://arxiv.org/abs/2105.01601 MLP-Mixer is an arc

SLAMP: Stochastic Latent Appearance and Motion Prediction

SLAMP: Stochastic Latent Appearance and Motion Prediction Official implementation of the paper SLAMP: Stochastic Latent Appearance and Motion Predicti

A PaddlePaddle implementation of STGCN with a few modifications in the model architecture in order to forecast traffic jam.

About This repository contains the code of a PaddlePaddle implementation of STGCN based on the paper Spatio-Temporal Graph Convolutional Networks: A D

VarCLR: Variable Semantic Representation Pre-training via Contrastive Learning

VarCLR: Variable Representation Pre-training via Contrastive Learning New: Paper accepted by ICSE 2022. Preprint at arXiv! This repository contain

The Codebase for Causal Distillation for Language Models.

Causal Distillation for Language Models Zhengxuan Wu*,Atticus Geiger*, Josh Rozner, Elisa Kreiss, Hanson Lu, Thomas Icard, Christopher Potts, Noah D.

A new play-and-plug method of controlling an existing generative model with conditioning attributes and their compositions.

Controllable and Compositional Generation with Latent-Space Energy-Based Models Official PyTorch implementation of the NeurIPS 2021 paper: Controllabl

Simple bots or Simbots is a library designed to create simple bots using the power of python. This library utilises Intent, Entity, Relation and Context model to create bots .

Simple bots or Simbots is a library designed to create simple chat bots using the power of python. This library utilises Intent, Entity, Relation and

A fast and easy python virtual environment creator for linux with some pre-installed libraries.

python-venv-creator A fast and easy python virtual environment created for linux with some optional pre-installed libraries. Dependencies: The followi

Convert ONNX model graph to Keras model format.

Convert ONNX model graph to Keras model format.

Yas CRNN model training - Yet Another Genshin Impact Scanner

Yas-Train Yet Another Genshin Impact Scanner 又一个原神圣遗物导出器 介绍 该仓库为 Yas 的模型训练程序 相关资料 MobileNetV3 CRNN 使用 假设你会设置基本的pytorch环境。 生成数据集 python main.py gen 训练

Flask pre-setup architecture. This can be used in any flask project for a faster and better project code structure.

Flask pre-setup architecture. This can be used in any flask project for a faster and better project code structure. All the required libraries are already installed easily to use in any big project.

The Pytorch implementation for "Video-Text Pre-training with Learned Regions"

Region_Learner The Pytorch implementation for "Video-Text Pre-training with Learned Regions" (arxiv) We are still cleaning up the code further and pre

Deep learning model for EEG artifact removal

DeepSeparator Introduction Electroencephalogram (EEG) recordings are often contaminated with artifacts. Various methods have been developed to elimina

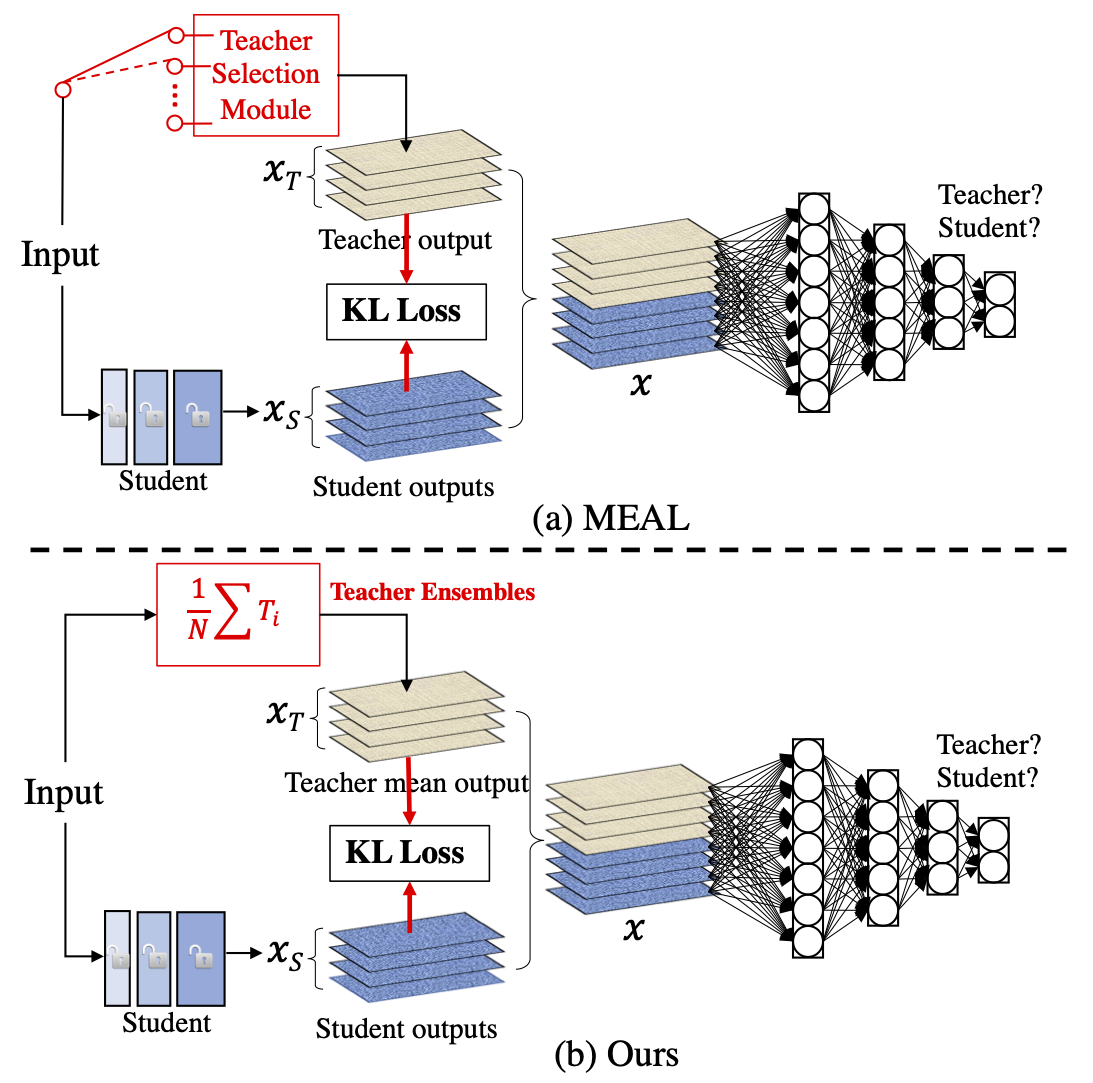

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric