5292 Repositories

Python sequence-learning Libraries

Is RobustBench/AutoAttack a suitable Benchmark for Adversarial Robustness?

Adversrial Machine Learning Benchmarks This code belongs to the papers: Is RobustBench/AutoAttack a suitable Benchmark for Adversarial Robustness? Det

Application of the L2HMC algorithm to simulations in lattice QCD.

l2hmc-qcd 📊 Slides Recent talk on Training Topological Samplers for Lattice Gauge Theory from the Machine Learning for High Energy Physics, on and of

Source code and dataset of the paper "Contrastive Adaptive Propagation Graph Neural Networks forEfficient Graph Learning"

CAPGNN Source code and dataset of the paper "Contrastive Adaptive Propagation Graph Neural Networks forEfficient Graph Learning" Paper URL: https://ar

Deep learning model for EEG artifact removal

DeepSeparator Introduction Electroencephalogram (EEG) recordings are often contaminated with artifacts. Various methods have been developed to elimina

Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fusion Framework via Self-Supervised Multi-Task Learning. Code will be available soon.

Official-PyTorch-Implementation-of-TransMEF Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fu

Python implementation of Bayesian optimization over permutation spaces.

Bayesian Optimization over Permutation Spaces This repository contains the source code and the resources related to the paper "Bayesian Optimization o

TC-GNN with Pytorch integration

TC-GNN (Running Sparse GNN on Dense Tensor Core on Ampere GPU) Cite this project and paper. @inproceedings{TC-GNN, title={TC-GNN: Accelerating Spars

Sacred is a tool to help you configure, organize, log and reproduce experiments developed at IDSIA.

Sacred Every experiment is sacred Every experiment is great If an experiment is wasted God gets quite irate Sacred is a tool to help you configure, or

Machine learning model evaluation made easy: plots, tables, HTML reports, experiment tracking and Jupyter notebook analysis.

sklearn-evaluation Machine learning model evaluation made easy: plots, tables, HTML reports, experiment tracking, and Jupyter notebook analysis. Suppo

A comprehensive set of fairness metrics for datasets and machine learning models, explanations for these metrics, and algorithms to mitigate bias in datasets and models.

AI Fairness 360 (AIF360) The AI Fairness 360 toolkit is an extensible open-source library containg techniques developed by the research community to h

Adaptive: parallel active learning of mathematical functions

adaptive Adaptive: parallel active learning of mathematical functions. adaptive is an open-source Python library designed to make adaptive parallel fu

PennyLane is a cross-platform Python library for differentiable programming of quantum computers

PennyLane is a cross-platform Python library for differentiable programming of quantum computers. Train a quantum computer the same way as a neural ne

QML: A Python Toolkit for Quantum Machine Learning

QML is a Python2/3-compatible toolkit for representation learning of properties of molecules and solids.

![[NeurIPS 2021] ORL: Unsupervised Object-Level Representation Learning from Scene Images](https://github.com/Jiahao000/ORL/raw/master/highlights.png)

[NeurIPS 2021] ORL: Unsupervised Object-Level Representation Learning from Scene Images

Unsupervised Object-Level Representation Learning from Scene Images This repository contains the official PyTorch implementation of the ORL algorithm

MetaAvatar: Learning Animatable Clothed Human Models from Few Depth Images

MetaAvatar: Learning Animatable Clothed Human Models from Few Depth Images This repository contains the implementation of our paper MetaAvatar: Learni

Official PyTorch implementation of "Contrastive Learning from Extremely Augmented Skeleton Sequences for Self-supervised Action Recognition" in AAAI2022.

AimCLR This is an official PyTorch implementation of "Contrastive Learning from Extremely Augmented Skeleton Sequences for Self-supervised Action Reco

Code repository for "Reducing Underflow in Mixed Precision Training by Gradient Scaling" presented at IJCAI '20

Reducing Underflow in Mixed Precision Training by Gradient Scaling This project implements the gradient scaling method to improve the performance of m

Official Pytorch implementation for 2021 ICCV paper "Learning Motion Priors for 4D Human Body Capture in 3D Scenes" and trained models / data

Learning Motion Priors for 4D Human Body Capture in 3D Scenes (LEMO) Official Pytorch implementation for 2021 ICCV (oral) paper "Learning Motion Prior

OpenDILab Multi-Agent Environment

Go-Bigger: Multi-Agent Decision Intelligence Environment GoBigger Doc (中文版) Ongoing 2021.11.13 We are holding a competition —— Go-Bigger: Multi-Agent

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Simple, high-school-leveled sequence library written in Python / 간단한 고등학교 수준 수열 라이브러리 (Python)

Simple, high-school-leveled sequence library written in Python

DiffWave is a fast, high-quality neural vocoder and waveform synthesizer.

DiffWave DiffWave is a fast, high-quality neural vocoder and waveform synthesizer. It starts with Gaussian noise and converts it into speech via itera

Reference implementation for Deep Unsupervised Learning using Nonequilibrium Thermodynamics

Diffusion Probabilistic Models This repository provides a reference implementation of the method described in the paper: Deep Unsupervised Learning us

Scalable machine learning based time series forecasting

mlforecast Scalable machine learning based time series forecasting. Install PyPI pip install mlforecast Optional dependencies If you want more functio

PyTorch toolkit for biomedical imaging

farabio is a minimal PyTorch toolkit for out-of-the-box deep learning support in biomedical imaging. For further information, see Wikis and Docs.

A collection of resources and papers on Diffusion Models, a darkhorse in the field of Generative Models

This repository contains a collection of resources and papers on Diffusion Models and Score-based Models. If there are any missing valuable resources

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

Model-based Reinforcement Learning Improves Autonomous Racing Performance

Racing Dreamer: Model-based versus Model-free Deep Reinforcement Learning for Autonomous Racing Cars In this work, we propose to learn a racing contro

Rainbow DQN implementation accompanying the paper "Fast and Data-Efficient Training of Rainbow" which reaches 205.7 median HNS after 10M frames. 🌈

Rainbow 🌈 An implementation of Rainbow DQN which reaches a median HNS of 205.7 after only 10M frames (the original Rainbow from Hessel et al. 2017 re

PyTorch implementation of Decoupling Value and Policy for Generalization in Reinforcement Learning

PyTorch implementation of Decoupling Value and Policy for Generalization in Reinforcement Learning

Code for EMNLP 2021 paper: "Learning Implicit Sentiment in Aspect-based Sentiment Analysis with Supervised Contrastive Pre-Training"

SCAPT-ABSA Code for EMNLP2021 paper: "Learning Implicit Sentiment in Aspect-based Sentiment Analysis with Supervised Contrastive Pre-Training" Overvie

Automatic learning-rate scheduler

AutoLRS This is the PyTorch code implementation for the paper AutoLRS: Automatic Learning-Rate Schedule by Bayesian Optimization on the Fly published

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Single-Stage Instance Shadow Detection with Bidirectional Relation Learning (CVPR 2021 Oral)

Single-Stage Instance Shadow Detection with Bidirectional Relation Learning (CVPR 2021 Oral) Tianyu Wang*, Xiaowei Hu*, Chi-Wing Fu, and Pheng-Ann Hen

Learning to Draw: Emergent Communication through Sketching

Learning to Draw: Emergent Communication through Sketching This is the official code for the paper "Learning to Draw: Emergent Communication through S

PyTorch implementation of Off-policy Learning in Two-stage Recommender Systems

Off-Policy-2-Stage This repo provides a PyTorch implementation of the MovieLens experiments for the following paper: Off-policy Learning in Two-stage

Quick program made to generate alpha and delta tables for Hidden Markov Models

HMM_Calc Functions for generating Alpha and Delta tables from a Hidden Markov Model. Parameters: a: Matrix of transition probabilities. a[i][j] = a_{i

Sample Prior Guided Robust Model Learning to Suppress Noisy Labels

PGDF This repo is the official implementation of our paper "Sample Prior Guided Robust Model Learning to Suppress Noisy Labels ". Citation If you use

Simple API for UCI Machine Learning Dataset Repository (search, download, analyze)

A simple API for working with University of California, Irvine (UCI) Machine Learning (ML) repository Table of Contents Introduction About Page of the

Joint Detection and Identification Feature Learning for Person Search

Person Search Project This repository hosts the code for our paper Joint Detection and Identification Feature Learning for Person Search. The code is

Tensorflow implementation of Semi-supervised Sequence Learning (https://arxiv.org/abs/1511.01432)

Transfer Learning for Text Classification with Tensorflow Tensorflow implementation of Semi-supervised Sequence Learning(https://arxiv.org/abs/1511.01

TextWorld is a sandbox learning environment for the training and evaluation of reinforcement learning (RL) agents on text-based games.

TextWorld A text-based game generator and extensible sandbox learning environment for training and testing reinforcement learning (RL) agents. Also ch

torchbearer: A model fitting library for PyTorch

Note: We're moving to PyTorch Lightning! Read about the move here. From the end of February, torchbearer will no longer be actively maintained. We'll

A project to make Amazon Echo respond to sign language using your webcam

Making Alexa respond to Sign Language using Tensorflow.js Try the live demo Read the Blog Post on Tensorflow's Blog Coming Soon Watch the video This p

Pipeline for training LSA models using Scikit-Learn.

Latent Semantic Analysis Pipeline for training LSA models using Scikit-Learn. Usage Instead of writing custom code for latent semantic analysis, you j

SentAugment is a data augmentation technique for semi-supervised learning in NLP.

SentAugment SentAugment is a data augmentation technique for semi-supervised learning in NLP. It uses state-of-the-art sentence embeddings to structur

A single model that parses Universal Dependencies across 75 languages.

A single model that parses Universal Dependencies across 75 languages. Given a sentence, jointly predicts part-of-speech tags, morphology tags, lemmas, and dependency trees.

PyTorch code for EMNLP 2019 paper "LXMERT: Learning Cross-Modality Encoder Representations from Transformers".

LXMERT: Learning Cross-Modality Encoder Representations from Transformers Our servers break again :(. I have updated the links so that they should wor

Code for ICLR 2020 paper "VL-BERT: Pre-training of Generic Visual-Linguistic Representations".

VL-BERT By Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, Jifeng Dai. This repository is an official implementation of the paper VL-BERT:

Research code for ECCV 2020 paper "UNITER: UNiversal Image-TExt Representation Learning"

UNITER: UNiversal Image-TExt Representation Learning This is the official repository of UNITER (ECCV 2020). This repository currently supports finetun

Research Code for NeurIPS 2020 Spotlight paper "Large-Scale Adversarial Training for Vision-and-Language Representation Learning": UNITER adversarial training part

VILLA: Vision-and-Language Adversarial Training This is the official repository of VILLA (NeurIPS 2020 Spotlight). This repository currently supports

![[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations](https://github.com/kdexd/virtex/raw/master/docs/_static/system_figure.jpg)

[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations

VirTex: Learning Visual Representations from Textual Annotations Karan Desai and Justin Johnson University of Michigan CVPR 2021 arxiv.org/abs/2006.06

Train Dense Passage Retriever (DPR) with a single GPU

Gradient Cached Dense Passage Retrieval Gradient Cached Dense Passage Retrieval (GC-DPR) - is an extension of the original DPR library. We introduce G

Toolkit for developing and maintaining ML models

modelkit Python framework for production ML systems. modelkit is a minimalist yet powerful MLOps library for Python, built for people who want to depl

Learn Python Regular Expressions step by step from beginner to advanced levels

Python re(gex)? Learn Python Regular Expressions step by step from beginner to advanced levels with hundreds of examples and exercises The book also i

Paddle Graph Learning (PGL) is an efficient and flexible graph learning framework based on PaddlePaddle

DOC | Quick Start | 中文 Breaking News !! 🔥 🔥 🔥 OGB-LSC KDD CUP 2021 winners announced!! (2021.06.17) Super excited to announce our PGL team won TWO

The official implementation of Relative Uncertainty Learning for Facial Expression Recognition

Relative Uncertainty Learning for Facial Expression Recognition The official implementation of the following paper at NeurIPS2021: Title: Relative Unc

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

![Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]](https://github.com/Fangyin1994/KCL/raw/main/fig/overview.png)

Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]

Knowledge-enhanced Contrastive Learning (KCL) Molecular Contrastive Learning with Chemical Element Knowledge Graph [ AAAI 2022 ]. We construct a Chemi

Learn to code in any language. If

Learn to Code It is an intiiative undertaken by Student Ambassadors Club, Jamshoro for students who are absolute begineers in programming and want to

A fast, pure python implementation of the MuyGPs Gaussian process realization and training algorithm.

Fast implementation of the MuyGPs Gaussian process hyperparameter estimation algorithm MuyGPs is a GP estimation method that affords fast hyperparamet

Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving

GSAN Introduction Code for paper GSAN: Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving, wh

Self-Supervised Learning with Kernel Dependence Maximization

Self-Supervised Learning with Kernel Dependence Maximization This is the code for SSL-HSIC, a self-supervised learning loss proposed in the paper Self

Deep Q Learning with OpenAI Gym and Pokemon Showdown

pokemon-deep-learning An openAI gym project for pokemon involving deep q learning. Made by myself, Sam Little, and Layton Webber. This code captures g

PLUR is a collection of source code datasets suitable for graph-based machine learning.

PLUR (Programming-Language Understanding and Repair) is a collection of source code datasets suitable for graph-based machine learning. We provide scripts for downloading, processing, and loading the datasets. This is done by offering a unified API and data structures for all datasets.

Code for A Volumetric Transformer for Accurate 3D Tumor Segmentation

VT-UNet This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmentaion results of VT-UNet. Environmen

PyTorch trainer and model for Sequence Classification

PyTorch-trainer-and-model-for-Sequence-Classification After cloning the repository, modify your training data so that the training data is a .csv file

Implementation of "Semi-supervised Domain Adaptive Structure Learning"

Semi-supervised Domain Adaptive Structure Learning - ASDA This repo contains the source code and dataset for our ASDA paper. Illustration of the propo

Upgini : data search library for your machine learning pipelines

Automated data search library for your machine learning pipelines → find & deliver relevant external data & features to boost ML accuracy :chart_with_upwards_trend:

Learning to Rewrite for Non-Autoregressive Neural Machine Translation

RewriteNAT This repo provides the code for reproducing our proposed RewriteNAT in EMNLP 2021 paper entitled "Learning to Rewrite for Non-Autoregressiv

Behind the Curtain: Learning Occluded Shapes for 3D Object Detection

Behind the Curtain: Learning Occluded Shapes for 3D Object Detection Acknowledgement We implement our model, BtcDet, based on [OpenPcdet 0.3.0]. Insta

Built on python (Mathematical straight fit line coordinates error predictor machine learning foundational model)

Sum-Square_Error-Business-Analytical-Tool- Built on python (Mathematical straight fit line coordinates error predictor machine learning foundational m

Milano is a tool for automating hyper-parameters search for your models on a backend of your choice.

Milano (This is a research project, not an official NVIDIA product.) Documentation https://nvidia.github.io/Milano Milano (Machine learning autotuner

Code release for Hu et al., Learning to Segment Every Thing. in CVPR, 2018.

Learning to Segment Every Thing This repository contains the code for the following paper: R. Hu, P. Dollár, K. He, T. Darrell, R. Girshick, Learning

Core ML tools contain supporting tools for Core ML model conversion, editing, and validation.

Core ML Tools Use coremltools to convert machine learning models from third-party libraries to the Core ML format. The Python package contains the sup

Open source annotation tool for machine learning practitioners.

doccano doccano is an open source text annotation tool for humans. It provides annotation features for text classification, sequence labeling and sequ

DISTIL: Deep dIverSified inTeractIve Learning.

DISTIL: Deep dIverSified inTeractIve Learning. An active/inter-active learning library built on py-torch for reducing labeling costs.

EMNLP 2021 paper The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers.

Codebase for training transformers on systematic generalization datasets. The official repository for our EMNLP 2021 paper The Devil is in the Detail:

Code for the paper "Are Sixteen Heads Really Better than One?"

Are Sixteen Heads Really Better than One? This repository contains code to reproduce the experiments in our paper Are Sixteen Heads Really Better than

The repository for the paper "When Do You Need Billions of Words of Pretraining Data?"

pretraining-learning-curves This is the repository for the paper When Do You Need Billions of Words of Pretraining Data? Edge Probing We use jiant1 fo

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

ELECTRA Introduction ELECTRA is a method for self-supervised language representation learning. It can be used to pre-train transformer networks using

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed+Megatron trained the world's most powerful language model: MT-530B DeepSpeed is hiring, come join us! DeepSpeed is a deep learning optimizat

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

ALBERT ***************New March 28, 2020 *************** Add a colab tutorial to run fine-tuning for GLUE datasets. ***************New January 7, 2020

This is the code for Compressing BERT: Studying the Effects of Weight Pruning on Transfer Learning

This is the code for Compressing BERT: Studying the Effects of Weight Pruning on Transfer Learning It includes /bert, which is the original BERT repos

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Research code for "What to Pre-Train on? Efficient Intermediate Task Selection", EMNLP 2021

efficient-task-transfer This repository contains code for the experiments in our paper "What to Pre-Train on? Efficient Intermediate Task Selection".

Multi-Task Deep Neural Networks for Natural Language Understanding

New Release We released Adversarial training for both LM pre-training/finetuning and f-divergence. Large-scale Adversarial training for LMs: ALUM code

Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP 2021.

The Stem Cell Hypothesis Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP

Training and evaluation codes for the BertGen paper (ACL-IJCNLP 2021)

BERTGEN This repository is the implementation of the paper "BERTGEN: Multi-task Generation through BERT" (https://arxiv.org/abs/2106.03484). The codeb

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Zero-shot Learning by Generating Task-specific Adapters

Code for "Zero-shot Learning by Generating Task-specific Adapters" This is the repository containing code for "Zero-shot Learning by Generating Task-s

This repository contains the code for "Exploiting Cloze Questions for Few-Shot Text Classification and Natural Language Inference"

Pattern-Exploiting Training (PET) This repository contains the code for Exploiting Cloze Questions for Few-Shot Text Classification and Natural Langua

ACL'2021: LM-BFF: Better Few-shot Fine-tuning of Language Models

LM-BFF (Better Few-shot Fine-tuning of Language Models) This is the implementation of the paper Making Pre-trained Language Models Better Few-shot Lea

EasyTransfer is designed to make the development of transfer learning in NLP applications easier.

EasyTransfer is designed to make the development of transfer learning in NLP applications easier. The literature has witnessed the success of applying

Revisiting Self-Training for Few-Shot Learning of Language Model.

SFLM This is the implementation of the paper Revisiting Self-Training for Few-Shot Learning of Language Model. SFLM is short for self-training for few

PyTorch source code of NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models"

This repository contains source code for NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models" (P

🥇Samsung AI Challenge 2021 1등 솔루션입니다🥇

MoT - Molecular Transformer Large-scale Pretraining for Molecular Property Prediction Samsung AI Challenge for Scientific Discovery This repository is

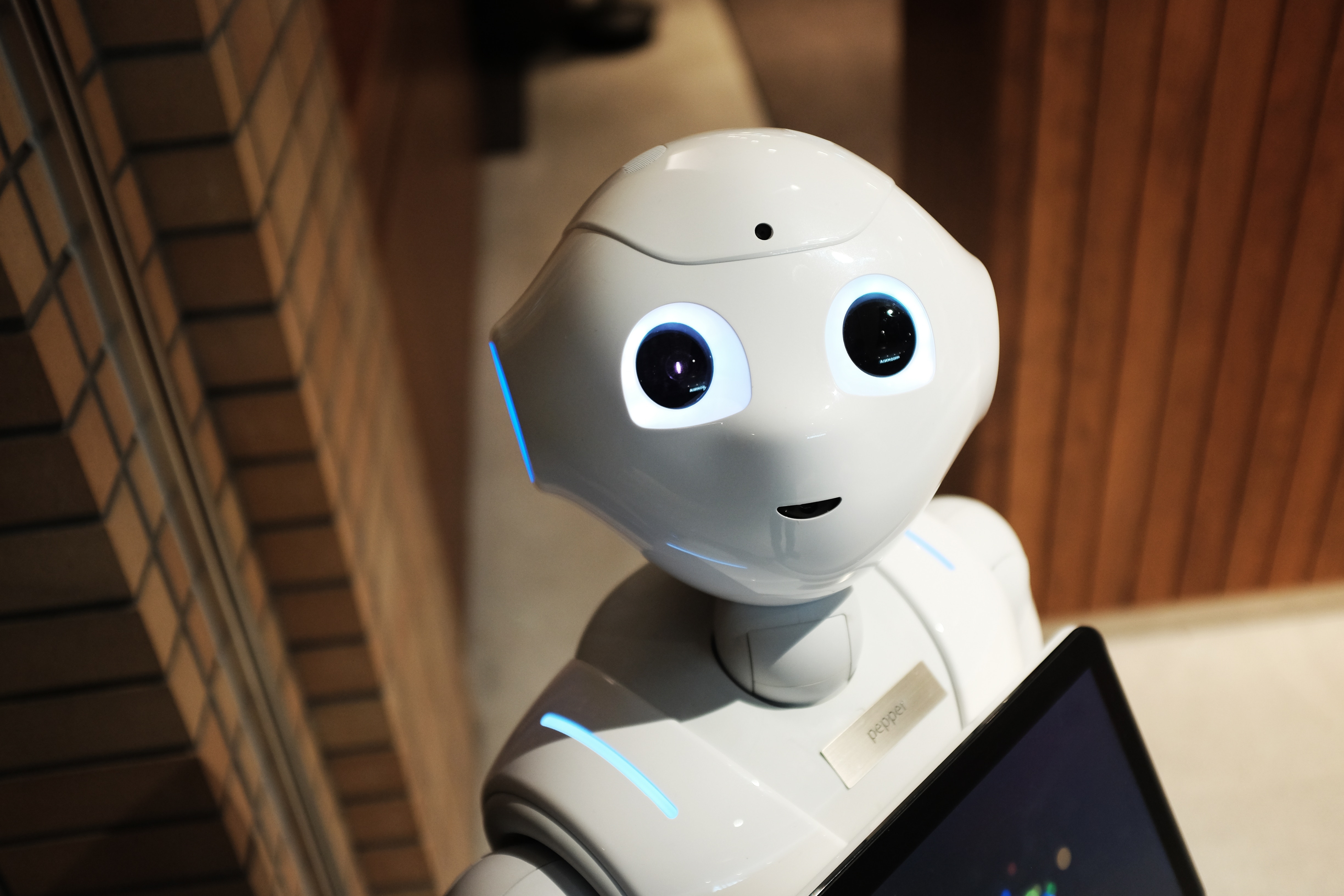

The code is the training example of AAAI2022 Security AI Challenger Program Phase 8: Data Centric Robot Learning on ML models.

Example code of [Tianchi AAAI2022 Security AI Challenger Program Phase 8]