752 Repositories

Python visual-attention Libraries

Flow-based visual scripting for Python

A simple visual node editor for Python Ryven combines flow-based visual scripting with Python. It gives you absolute freedom for your nodes and a simp

Seq2seq attn - Use the Seq2Seq method to implement machine translation and introduce Attention mechanism to improve the results

Seq2seq_attn Use the Seq2Seq method to implement machine translation and use the

Unofficial PyTorch Implementation of "Augmenting Convolutional networks with attention-based aggregation"

Pytorch Implementation of Augmenting Convolutional networks with attention-based aggregation This is the unofficial PyTorch Implementation of "Augment

Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechanism

Period-alternatives-of-Softmax Experimental Demo for our paper 'Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechani

Official implementation of "Refiner: Refining Self-attention for Vision Transformers".

RefinerViT This repo is the official implementation of "Refiner: Refining Self-attention for Vision Transformers". The repo is build on top of timm an

PyTorch code for the NAACL 2021 paper "Improving Generation and Evaluation of Visual Stories via Semantic Consistency"

Improving Generation and Evaluation of Visual Stories via Semantic Consistency PyTorch code for the NAACL 2021 paper "Improving Generation and Evaluat

Visual dialog agents with pre-trained vision-and-language encoders.

Learning Better Visual Dialog Agents with Pretrained Visual-Linguistic Representation Or READ-UP: Referring Expression Agent Dialog with Unified Pretr

RETRO-pytorch - Implementation of RETRO, Deepmind's Retrieval based Attention net, in Pytorch

RETRO - Pytorch (wip) Implementation of RETRO, Deepmind's Retrieval based Attent

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

PyFlow is a general purpose visual scripting framework for python

PyFlow is a general purpose visual scripting framework for python. State Base structure of program implemented, such things as packages disco

Implementation of Memory-Compressed Attention, from the paper "Generating Wikipedia By Summarizing Long Sequences"

Memory Compressed Attention Implementation of the Self-Attention layer of the proposed Memory-Compressed Attention, in Pytorch. This repository offers

Official PyTorch code for "BAM: Bottleneck Attention Module (BMVC2018)" and "CBAM: Convolutional Block Attention Module (ECCV2018)"

BAM and CBAM Official PyTorch code for "BAM: Bottleneck Attention Module (BMVC2018)" and "CBAM: Convolutional Block Attention Module (ECCV2018)" Updat

An implementation of the efficient attention module.

Efficient Attention An implementation of the efficient attention module. Description Efficient attention is an attention mechanism that substantially

This repository contains an implementation of the Permutohedral Attention Module in Pytorch

Permutohedral_attention_module This repository contains an implementation of the Permutohedral Attention Module

The code for Expectation-Maximization Attention Networks for Semantic Segmentation (ICCV'2019 Oral)

EMANet News The bug in loading the pretrained model is now fixed. I have updated the .pth. To use it, download it again. EMANet-101 gets 80.99 on the

Pytorch implementation of Compressive Transformers, from Deepmind

Compressive Transformer in Pytorch Pytorch implementation of Compressive Transformers, a variant of Transformer-XL with compressed memory for long-ran

Implementation of Axial attention - attending to multi-dimensional data efficiently

Axial Attention Implementation of Axial attention in Pytorch. A simple but powerful technique to attend to multi-dimensional data efficiently. It has

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch Reference Paper URL Author: Yi Tay, Dara Bahri, Donald Metzler

My take on a practical implementation of Linformer for Pytorch.

Linformer Pytorch Implementation A practical implementation of the Linformer paper. This is attention with only linear complexity in n, allowing for v

Implementation of Kronecker Attention in Pytorch

Kronecker Attention Pytorch Implementation of Kronecker Attention in Pytorch. Results look less than stellar, but if someone found some context where

Implementation of Memformer, a Memory-augmented Transformer, in Pytorch

Memformer - Pytorch Implementation of Memformer, a Memory-augmented Transformer, in Pytorch. It includes memory slots, which are updated with attentio

![[NeurIPS 2020] Official Implementation:](https://github.com/giannisdaras/smyrf/raw/master/visuals/quality_degradation.png)

[NeurIPS 2020] Official Implementation: "SMYRF: Efficient Attention using Asymmetric Clustering".

SMYRF: Efficient attention using asymmetric clustering Get started: Abstract We propose a novel type of balanced clustering algorithm to approximate a

Reproducing-BowNet: Learning Representations by Predicting Bags of Visual Words

Reproducing-BowNet Our reproducibility effort based on the 2020 ML Reproducibility Challenge. We are reproducing the results of this CVPR 2020 paper:

![[ACM MM 2021] TSA-Net: Tube Self-Attention Network for Action Quality Assessment](https://github.com/Shunli-Wang/TSA-Net/raw/main/fig/TSA-Net.jpg)

[ACM MM 2021] TSA-Net: Tube Self-Attention Network for Action Quality Assessment

Tube Self-Attention Network (TSA-Net) This repository contains the PyTorch implementation for paper TSA-Net: Tube Self-Attention Network for Action Qu

Source codes for Improved Few-Shot Visual Classification (CVPR 2020), Enhancing Few-Shot Image Classification with Unlabelled Examples

Source codes for Improved Few-Shot Visual Classification (CVPR 2020), Enhancing Few-Shot Image Classification with Unlabelled Examples (WACV 2022) and Beyond Simple Meta-Learning: Multi-Purpose Models for Multi-Domain, Active and Continual Few-Shot Learning (TPAMI 2022 - in submission)

LightNet++: Boosted Light-weighted Networks for Real-time Semantic Segmentation

LightNet++ !!!New Repo.!!! ⇒ EfficientNet.PyTorch: Concise, Modular, Human-friendly PyTorch implementation of EfficientNet with Pre-trained Weights !!

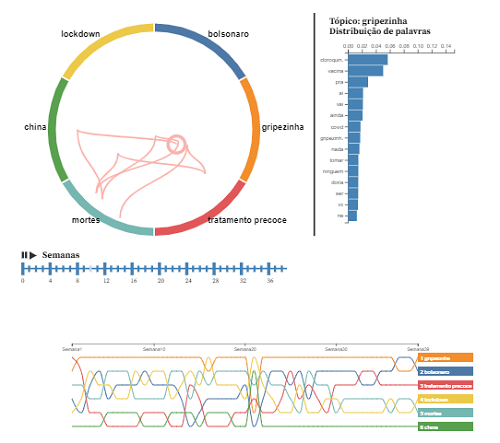

Project for the discipline of Visual Data Analysis at EMAp FGV.

Analysis of the dissemination of fake news about COVID-19 on Twitter This project was the final work for the discipline of Visual Data Analysis of the

The official implementation of ELSA: Enhanced Local Self-Attention for Vision Transformer

ELSA: Enhanced Local Self-Attention for Vision Transformer By Jingkai Zhou, Pich

Repository of Vision Transformer with Deformable Attention

Vision Transformer with Deformable Attention This repository contains the code for the paper Vision Transformer with Deformable Attention [arXiv]. Int

VL-LTR: Learning Class-wise Visual-Linguistic Representation for Long-Tailed Visual Recognition

VL-LTR: Learning Class-wise Visual-Linguistic Representation for Long-Tailed Visual Recognition Usage First, install PyTorch 1.7.1+, torchvision 0.8.2

This repository provides the official implementation of 'Learning to ignore: rethinking attention in CNNs' accepted in BMVC 2021.

inverse_attention This repository provides the official implementation of 'Learning to ignore: rethinking attention in CNNs' accepted in BMVC 2021. Le

PyTorch implementation of Lip to Speech Synthesis with Visual Context Attentional GAN (NeurIPS2021)

Lip to Speech Synthesis with Visual Context Attentional GAN This repository contains the PyTorch implementation of the following paper: Lip to Speech

Unofficial PyTorch reimplementation of the paper Swin Transformer V2: Scaling Up Capacity and Resolution

PyTorch reimplementation of the paper Swin Transformer V2: Scaling Up Capacity and Resolution [arXiv 2021].

The official TensorFlow implementation of the paper Action Transformer: A Self-Attention Model for Short-Time Pose-Based Human Action Recognition

Action Transformer A Self-Attention Model for Short-Time Human Action Recognition This repository contains the official TensorFlow implementation of t

Alignment Attention Fusion framework for Few-Shot Object Detection

AAF framework Framework generalities This repository contains the code of the AAF framework proposed in this paper. The main idea behind this work is

RARA: Zero-shot Sim2Real Visual Navigation with Following Foreground Cues

RARA: Zero-shot Sim2Real Visual Navigation with Following Foreground Cues FGBG (foreground-background) pytorch package for defining and training model

Implementation of a Transformer using ReLA (Rectified Linear Attention)

ReLA (Rectified Linear Attention) Transformer Implementation of a Transformer using ReLA (Rectified Linear Attention). It will also contain an attempt

Gathers machine learning and Tensorflow deep learning models for NLP problems, 1.13 Tensorflow 2.0

NLP-Models-Tensorflow, Gathers machine learning and tensorflow deep learning models for NLP problems, code simplify inside Jupyter Notebooks 100%. Tab

Housing Price Prediction Using Machine Learning.

HOUSING PRICE PREDICTION USING MACHINE LEARNING DESCRIPTION Housing Price Prediction Using Machine Learning is to predict the data of housings. Here I

Which Apple Keeps Which Doctor Away? Colorful Word Representations with Visual Oracles

Which Apple Keeps Which Doctor Away? Colorful Word Representations with Visual Oracles (TASLP 2022)

MVS2D: Efficient Multi-view Stereo via Attention-Driven 2D Convolutions

MVS2D: Efficient Multi-view Stereo via Attention-Driven 2D Convolutions Project Page | Paper If you find our work useful for your research, please con

PyTorch implementaton of our CVPR 2021 paper "Bridging the Visual Gap: Wide-Range Image Blending"

Bridging the Visual Gap: Wide-Range Image Blending PyTorch implementaton of our CVPR 2021 paper "Bridging the Visual Gap: Wide-Range Image Blending".

Official PyTorch implementation of "The Center of Attention: Center-Keypoint Grouping via Attention for Multi-Person Pose Estimation" (ICCV 21).

CenterGroup This the official implementation of our ICCV 2021 paper The Center of Attention: Center-Keypoint Grouping via Attention for Multi-Person P

Code for "Multi-Time Attention Networks for Irregularly Sampled Time Series", ICLR 2021.

Multi-Time Attention Networks (mTANs) This repository contains the PyTorch implementation for the paper Multi-Time Attention Networks for Irregularly

This is a super simple visualization toolbox (script) for transformer attention visualization ✌

Trans_attention_vis This is a super simple visualization toolbox (script) for transformer attention visualization ✌ 1. How to prepare your attention m

RaftMLP: How Much Can Be Done Without Attention and with Less Spatial Locality?

RaftMLP RaftMLP: How Much Can Be Done Without Attention and with Less Spatial Locality? By Yuki Tatsunami and Masato Taki (Rikkyo University) [arxiv]

A self-supervised learning framework for audio-visual speech

AV-HuBERT (Audio-Visual Hidden Unit BERT) Learning Audio-Visual Speech Representation by Masked Multimodal Cluster Prediction Robust Self-Supervised A

In this project we use both Resnet and Self-attention layer for cat, dog and flower classification.

cdf_att_classification classes = {0: 'cat', 1: 'dog', 2: 'flower'} In this project we use both Resnet and Self-attention layer for cdf-Classification.

Datasets, tools, and benchmarks for representation learning of code.

The CodeSearchNet challenge has been concluded We would like to thank all participants for their submissions and we hope that this challenge provided

GAT - Graph Attention Network (PyTorch) 💻 + graphs + 📣 = ❤️

GAT - Graph Attention Network (PyTorch) 💻 + graphs + 📣 = ❤️ This repo contains a PyTorch implementation of the original GAT paper ( 🔗 Veličković et

Fast convergence of detr with spatially modulated co-attention

Fast convergence of detr with spatially modulated co-attention Usage There are no extra compiled components in SMCA DETR and package dependencies are

SCOUTER: Slot Attention-based Classifier for Explainable Image Recognition

SCOUTER: Slot Attention-based Classifier for Explainable Image Recognition PDF Abstract Explainable artificial intelligence has been gaining attention

We propose the adversarial blur attack (ABA) against visual object tracking.

ABA We propose the adversarial blur attack (ABA) against visual object tracking. The ICCV link: https://arxiv.org/abs/2107.12085 and, https://openacce

Train Scene Graph Generation for Visual Genome and GQA in PyTorch = 1.2 with improved zero and few-shot generalization.

Scene Graph Generation Object Detections Ground truth Scene Graph Generated Scene Graph In this visualization, woman sitting on rock is a zero-shot tr

Custom studies about block sparse attention.

Block Sparse Attention 研究总结 本人近半年来对Block Sparse Attention(块稀疏注意力)的研究总结(持续更新中)。按时间顺序,主要分为如下三部分: PyTorch 自定义 CUDA 算子——以矩阵乘法为例 基于 Triton 的 Block Sparse A

Attention-based Transformation from Latent Features to Point Clouds (AAAI 2022)

Attention-based Transformation from Latent Features to Point Clouds This repository contains a PyTorch implementation of the paper: Attention-based Tr

Code and Experiments for ACL-IJCNLP 2021 Paper Mind Your Outliers! Investigating the Negative Impact of Outliers on Active Learning for Visual Question Answering.

Code and Experiments for ACL-IJCNLP 2021 Paper Mind Your Outliers! Investigating the Negative Impact of Outliers on Active Learning for Visual Question Answering.

Bottom-up attention model for image captioning and VQA, based on Faster R-CNN and Visual Genome

bottom-up-attention This code implements a bottom-up attention model, based on multi-gpu training of Faster R-CNN with ResNet-101, using object and at

Go from graph data to a secure and interactive visual graph app in 15 minutes. Batteries-included self-hosting of graph data apps with Streamlit, Graphistry, RAPIDS, and more!

✔️ Linux ✔️ OS X ❌ Windows (#39) Welcome to graph-app-kit Turn your graph data into a secure and interactive visual graph app in 15 minutes! Why This

DeepSpamReview: Detection of Fake Reviews on Online Review Platforms using Deep Learning Architectures. Summer Internship project at CoreView Systems.

Detection of Fake Reviews on Online Review Platforms using Deep Learning Architectures Dataset: https://s3.amazonaws.com/fast-ai-nlp/yelp_review_polar

SAFL: A Self-Attention Scene Text Recognizer with Focal Loss

SAFL: A Self-Attention Scene Text Recognizer with Focal Loss This repository implements the SAFL in pytorch. Installation conda env create -f environm

Referring Video Object Segmentation

Awesome-Referring-Video-Object-Segmentation Welcome to starts ⭐ & comments 💹 & sharing 😀 !! - 2021.12.12: Recent papers (from 2021) - welcome to ad

DAGAN - Dual Attention GANs for Semantic Image Synthesis

Contents Semantic Image Synthesis with DAGAN Installation Dataset Preparation Generating Images Using Pretrained Model Train and Test New Models Evalu

AttentionGAN for Unpaired Image-to-Image Translation & Multi-Domain Image-to-Image Translation

AttentionGAN-v2 for Unpaired Image-to-Image Translation AttentionGAN-v2 Framework The proposed generator learns both foreground and background attenti

A unified framework to jointly model images, text, and human attention traces.

connect-caption-and-trace This repository contains the reference code for our paper Connecting What to Say With Where to Look by Modeling Human Attent

PyTorch code for: Learning to Generate Grounded Visual Captions without Localization Supervision

Learning to Generate Grounded Visual Captions without Localization Supervision This is the PyTorch implementation of our paper: Learning to Generate G

Meshed-Memory Transformer for Image Captioning. CVPR 2020

M²: Meshed-Memory Transformer This repository contains the reference code for the paper Meshed-Memory Transformer for Image Captioning (CVPR 2020). Pl

![Implementation of 'X-Linear Attention Networks for Image Captioning' [CVPR 2020]](https://github.com/JDAI-CV/image-captioning/raw/master/images/framework.jpg)

Implementation of 'X-Linear Attention Networks for Image Captioning' [CVPR 2020]

Introduction This repository is for X-Linear Attention Networks for Image Captioning (CVPR 2020). The original paper can be found here. Please cite wi

Code for paper Adaptively Aligned Image Captioning via Adaptive Attention Time

Adaptively Aligned Image Captioning via Adaptive Attention Time This repository includes the implementation for Adaptively Aligned Image Captioning vi

This repository focus on Image Captioning & Video Captioning & Seq-to-Seq Learning & NLP

Awesome-Visual-Captioning Table of Contents ACL-2021 CVPR-2021 AAAI-2021 ACMMM-2020 NeurIPS-2020 ECCV-2020 CVPR-2020 ACL-2020 AAAI-2020 ACL-2019 NeurI

Tensorflow implementation of soft-attention mechanism for video caption generation.

SA-tensorflow Tensorflow implementation of soft-attention mechanism for video caption generation. An example of soft-attention mechanism. The attentio

Show-attend-and-tell - TensorFlow Implementation of "Show, Attend and Tell"

Show, Attend and Tell Update (December 2, 2016) TensorFlow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attent

Image captioning - Tensorflow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Introduction This neural system for image captioning is roughly based on the paper "Show, Attend and Tell: Neural Image Caption Generation with Visual

![[3DV 2021] Channel-Wise Attention-Based Network for Self-Supervised Monocular Depth Estimation](https://github.com/kamiLight/CADepth-master/raw/main/images/Quantitative_result.png)

[3DV 2021] Channel-Wise Attention-Based Network for Self-Supervised Monocular Depth Estimation

Channel-Wise Attention-Based Network for Self-Supervised Monocular Depth Estimation This is the official implementation for the method described in Ch

NFT-Price-Prediction-CNN - Using visual feature extraction, prices of NFTs are predicted via CNN (Alexnet and Resnet) architectures.

NFT-Price-Prediction-CNN - Using visual feature extraction, prices of NFTs are predicted via CNN (Alexnet and Resnet) architectures.

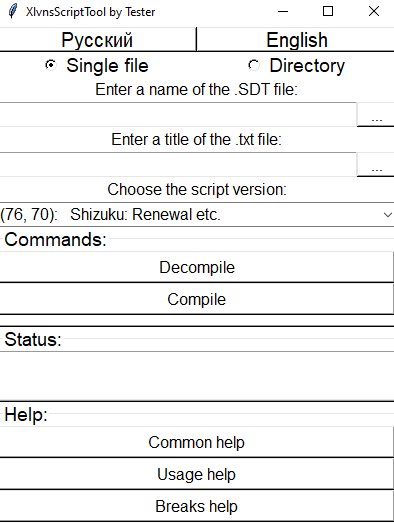

XlvnsScriptTool - Tool for decompilation and compilation of scripts .SDT from the visual novel's engine xlvns

XlvnsScriptTool English Dual languaged (rus+eng) tool for decompiling and compiling (actually, this tool is more than just (dis)assenbler, but less th

AlexaUsingPython - Alexa will pay attention to your order, as: Hello Alexa, play music, Hello Alexa

AlexaUsingPython - Alexa will pay attention to your order, as: Hello Alexa, play music, Hello Alexa, what's the time? Alexa will pay attention to your order, get it, and afterward do some activity as indicated by your order.

Awesome Graph Classification - A collection of important graph embedding, classification and representation learning papers with implementations.

A collection of graph classification methods, covering embedding, deep learning, graph kernel and factorization papers

Pseudo lidar - (CVPR 2019) Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving

Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving This paper has been accpeted by Conference o

Transformer - A TensorFlow Implementation of the Transformer: Attention Is All You Need

[UPDATED] A TensorFlow Implementation of Attention Is All You Need When I opened this repository in 2017, there was no official code yet. I tried to i

SPT_LSA_ViT - Implementation for Visual Transformer for Small-size Datasets

Vision Transformer for Small-Size Datasets Seung Hoon Lee and Seunghyun Lee and Byung Cheol Song | Paper Inha University Abstract Recently, the Vision

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentation, CVPR 2020 (Oral)

SEAM The implementation of Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentaion. You can also download the repos

Scaling and Benchmarking Self-Supervised Visual Representation Learning

FAIR Self-Supervision Benchmark is deprecated. Please see VISSL, a ground-up rewrite of benchmark in PyTorch. FAIR Self-Supervision Benchmark This cod

A PyTorch implementation of "SelfGNN: Self-supervised Graph Neural Networks without explicit negative sampling"

SelfGNN A PyTorch implementation of "SelfGNN: Self-supervised Graph Neural Networks without explicit negative sampling" paper, which will appear in Th

Official implementation of CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21

CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21 For more information, check out the paper on [arXiv]. Training with different

🔀 Visual Room Rearrangement

AI2-THOR Rearrangement Challenge Welcome to the 2021 AI2-THOR Rearrangement Challenge hosted at the CVPR'21 Embodied-AI Workshop. The goal of this cha

For storing the complete exploration of Visual Question Answering for our B.Tech Project

Multi-Image vqa @authors: Akhilesh, Janhavi, Harsh Paper summary, Ideas tried and their corresponding results: on wiki Other discussions: on discussio

Source code of the "Graph-Bert: Only Attention is Needed for Learning Graph Representations" paper

Graph-Bert Source code of "Graph-Bert: Only Attention is Needed for Learning Graph Representations". Please check the script.py as the entry point. We

Code to generate datasets used in "How Useful is Self-Supervised Pretraining for Visual Tasks?"

Synthetic dataset rendering Framework for producing the synthetic datasets used in: How Useful is Self-Supervised Pretraining for Visual Tasks? Alejan

PyTorch implementation of MoCo: Momentum Contrast for Unsupervised Visual Representation Learning

MoCo: Momentum Contrast for Unsupervised Visual Representation Learning This is a PyTorch implementation of the MoCo paper: @Article{he2019moco, aut

![[arXiv 2020] Video Representation Learning with Visual Tempo Consistency](https://github.com/decisionforce/VTHCL/raw/master/docs/figures/pipeline.png)

[arXiv 2020] Video Representation Learning with Visual Tempo Consistency

Video Representation Learning with Visual Tempo Consistency [Paper] [Project Page] News Full codebae is coming soon Pretained Models For now, we provi

An unsupervised learning framework for depth and ego-motion estimation from monocular videos

SfMLearner This codebase implements the system described in the paper: Unsupervised Learning of Depth and Ego-Motion from Video Tinghui Zhou, Matthew

Code for the paper: Audio-Visual Scene Analysis with Self-Supervised Multisensory Features

[Paper] [Project page] This repository contains code for the paper: Andrew Owens, Alexei A. Efros. Audio-Visual Scene Analysis with Self-Supervised Mu

Percy visual testing for Python Selenium

percy-selenium-python Percy visual testing for Python Selenium. Installation npm install @percy/cli: $ npm install --save-dev @percy/cli pip install P

A small script written in Python3 that generates a visual representation of the Mandelbrot set.

Mandelbrot Set Generator A small script written in Python3 that generates a visual representation of the Mandelbrot set. Abstract The colors in the ou

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

The code for SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network.

SAG-DTA The code is the implementation for the paper 'SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network'. Requirements py

![[ACMMM 2021, Oral] Code release for](https://github.com/yikaiw/EIP/raw/main/assets/intro.png)

[ACMMM 2021, Oral] Code release for "Elastic Tactile Simulation Towards Tactile-Visual Perception"

EIP: Elastic Interaction of Particles Code release for "Elastic Tactile Simulation Towards Tactile-Visual Perception", in ACMMM (Oral) 2021. By Yikai

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title