93 Repositories

Python KNN-With-Spark Libraries

A data engineering project with Kafka, Spark Streaming, dbt, Docker, Airflow, Terraform, GCP and much more!

Streamify A data pipeline with Kafka, Spark Streaming, dbt, Docker, Airflow, Terraform, GCP and much more! Description Objective The project will stre

Free Data Engineering course!

Data Engineering Zoomcamp Register in DataTalks.Club's Slack Join the #course-data-engineering channel The videos are published to DataTalks.Club's Yo

Databricks Certified Associate Spark Developer preparation toolkit to setup single node Standalone Spark Cluster along with material in the form of Jupyter Notebooks.

Databricks Certification Spark Databricks Certified Associate Spark Developer preparation toolkit to setup single node Standalone Spark Cluster along

Containerized Demo of Apache Spark MLlib on a Data Lakehouse (2022)

Spark-DeltaLake-Demo Reliable, Scalable Machine Learning (2022) This project was completed in an attempt to become better acquainted with the latest b

Esse é o meu primeiro repo tratando de fim a fim, uma pipeline de dados abertos do governo brasileiro relacionado a compras de contrato e cronogramas anuais com spark, em pyspark e SQL!

Olá! Esse é o meu primeiro repo tratando de fim a fim, uma pipeline de dados abertos do governo brasileiro relacionado a compras de contrato e cronogr

Product-based-recommendation-system - A product based recommendation system which uses Machine learning algorithm such as KNN and cosine similarity

Product-based-recommendation-system A product based recommendation system which

🏅 Top 5% in 제2회 연구개발특구 인공지능 경진대회 AI SPARK 챌린지

AI_SPARK_CHALLENG_Object_Detection 제2회 연구개발특구 인공지능 경진대회 AI SPARK 챌린지 🏅 Top 5% in mAP(0.75) (443명 중 13등, mAP: 0.98116) 대회 설명 Edge 환경에서의 가축 Object Dete

Sleep stages are classified with the help of ML. We have used 4 different ML algorithms (SVM, KNN, RF, NN) to demonstrate them

Sleep stages are classified with the help of ML. We have used 4 different ML algorithms (SVM, KNN, RF, NN) to demonstrate them.

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc)

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc). Structured a custom ensemble model and a neural network. Found a outperformed model for heart failure prediction accuracy of 88 percent.

A data structure that extends pyspark.sql.DataFrame with metadata information.

MetaFrame A data structure that extends pyspark.sql.DataFrame with metadata info

PySpark Structured Streaming ROS Kafka ApacheSpark Cassandra

PySpark-Structured-Streaming-ROS-Kafka-ApacheSpark-Cassandra The purpose of this project is to demonstrate a structured streaming pipeline with Apache

GPU implementation of $k$-Nearest Neighbors and Shared-Nearest Neighbors

GPU implementation of kNN and SNN GPU implementation of $k$-Nearest Neighbors and Shared-Nearest Neighbors Supported by numba cuda and faiss library E

This project has Classification and Clustering done Via kNN and K-Means respectfully

This project has Classification and Clustering done Via kNN and K-Means respectfully. It later tests its efficiency via F1/accuracy/recall/precision for kNN and Davies-Bouldin Index for Clustering. The Data is also visually represented.

SynapseML - an open source library to simplify the creation of scalable machine learning pipelines

Synapse Machine Learning SynapseML (previously MMLSpark) is an open source library to simplify the creation of scalable machine learning pipelines. Sy

Fully Automated YouTube Channel ▶️with Added Extra Features.

Fully Automated Youtube Channel ▒█▀▀█ █▀▀█ ▀▀█▀▀ ▀▀█▀▀ █░░█ █▀▀▄ █▀▀ █▀▀█ ▒█▀▀▄ █░░█ ░░█░░ ░▒█░░ █░░█ █▀▀▄ █▀▀ █▄▄▀ ▒█▄▄█ ▀▀▀▀ ░░▀░░ ░▒█░░ ░▀▀▀ ▀▀▀░

macOS development environment setup: Setting up a new developer machine can be an ad-hoc, manual, and time-consuming process.

dev-setup Motivation Setting up a new developer machine can be an ad-hoc, manual, and time-consuming process. dev-setup aims to simplify the process w

A Time Series Library for Apache Spark

Flint: A Time Series Library for Apache Spark The ability to analyze time series data at scale is critical for the success of finance and IoT applicat

This is my implementation on the K-nearest neighbors algorithm from scratch using Python

K Nearest Neighbors (KNN) algorithm In this Machine Learning world, there are various algorithms designed for classification problems such as Logistic

Apache Spark & Python (pySpark) tutorials for Big Data Analysis and Machine Learning as IPython / Jupyter notebooks

Spark Python Notebooks This is a collection of IPython notebook/Jupyter notebooks intended to train the reader on different Apache Spark concepts, fro

Distributed deep learning on Hadoop and Spark clusters.

Note: we're lovingly marking this project as Archived since we're no longer supporting it. You are welcome to read the code and fork your own version

BigDL - Evaluate the performance of BigDL (Distributed Deep Learning on Apache Spark) in big data analysis problems

Evaluate the performance of BigDL (Distributed Deep Learning on Apache Spark) in big data analysis problems.

Implementation of hyperparameter optimization/tuning methods for machine learning & deep learning models

Hyperparameter Optimization of Machine Learning Algorithms This code provides a hyper-parameter optimization implementation for machine learning algor

Spark-movie-lens - An on-line movie recommender using Spark, Python Flask, and the MovieLens dataset

A scalable on-line movie recommender using Spark and Flask This Apache Spark tutorial will guide you step-by-step into how to use the MovieLens datase

Use Jupyter Notebooks to demonstrate how to build a Recommender with Apache Spark & Elasticsearch

Recommendation engines are one of the most well known, widely used and highest value use cases for applying machine learning. Despite this, while there are many resources available for the basics of training a recommendation model, there are relatively few that explain how to actually deploy these models to create a large-scale recommender system.

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

Team nan solution repository for FPT data-centric competition. Data augmentation, Albumentation, Mosaic, Visualization, KNN application

FPT_data_centric_competition - Team nan solution repository for FPT data-centric competition. Data augmentation, Albumentation, Mosaic, Visualization, KNN application

City-seeds - A random generator of cultural characteristics intended to spark ideas and help draw threads

City Seeds This is a random generator of cultural characteristics intended to sp

This mini project showcase how to build and debug Apache Spark application using Python

Spark app can't be debugged using normal procedure. This mini project showcase how to build and debug Apache Spark application using Python programming language. There are also options to run Spark application on Spark container

Using Bayesian, KNN, Logistic Regression to classify spam and non-spam.

Make Sure the dataset file "spamData.mat" is in the folder spam\src Environment: Python --version = 3.7 Third Party: numpy, matplotlib, math, scipy

Pandas and Spark DataFrame comparison for humans

DataComPy DataComPy is a package to compare two Pandas DataFrames. Originally started to be something of a replacement for SAS's PROC COMPARE for Pand

Make Your Company Data Driven. Connect to any data source, easily visualize, dashboard and share your data.

Redash is designed to enable anyone, regardless of the level of technical sophistication, to harness the power of data big and small. SQL users levera

Python KNN model: Predicting a probability of getting a work visa. Tableau: Non-immigrant visas over the years.

The value of international students to the United States. Probability of getting a non-immigrant visa. Project timeline: Jan 2021 - April 2021 Project

Monitor the stability of a pandas or spark dataframe ⚙︎

Population Shift Monitoring popmon is a package that allows one to check the stability of a dataset. popmon works with both pandas and spark datasets.

Implementation of K-Nearest Neighbors Algorithm Using PySpark

KNN With Spark Implementation of KNN using PySpark. The KNN was used on two separate datasets (https://archive.ics.uci.edu/ml/datasets/iris and https:

Apache (Py)Spark type annotations (stub files).

PySpark Stubs A collection of the Apache Spark stub files. These files were generated by stubgen and manually edited to include accurate type hints. T

Weighted K Nearest Neighbors (kNN) algorithm implemented on python from scratch.

kNN_From_Scratch I implemented the k nearest neighbors (kNN) classification algorithm on python. This algorithm is used to predict the classes of new

Pyspark project that able to do joins on the spark data frames.

SPARK JOINS This project is to perform inner, all outer joins and semi joins. create_df.py: load_data.py : helps to put data into Spark data frames. d

Delta Sharing: An Open Protocol for Secure Data Sharing

Delta Sharing: An Open Protocol for Secure Data Sharing Delta Sharing is an open protocol for secure real-time exchange of large datasets, which enabl

Machine Learning algorithms implementation.

Machine Learning Algorithms Machine Learning algorithms implementation. What can I find here? ML Algorithms KNN K-Means-Clustering SVM (MultiClass) Pe

The Spark Challenge Student Check-In/Out Tracking Script

The Spark Challenge Student Check-In/Out Tracking Script This Python Script uses the Student ID Database to match the entries with the ID Card Swipe a

Reading streams of Twitter data, save them to Kafka, then process with Kafka Stream API and Spark Streaming

Using Streaming Twitter Data with Kafka and Spark Reading streams of Twitter data, publishing them to Kafka topic, process message using Kafka Stream

Simple and Distributed Machine Learning

Synapse Machine Learning SynapseML (previously MMLSpark) is an open source library to simplify the creation of scalable machine learning pipelines. Sy

Deep Learning Pipelines for Apache Spark

Deep Learning Pipelines for Apache Spark The repo only contains HorovodRunner code for local CI and API docs. To use HorovodRunner for distributed tra

PyNNDescent is a Python nearest neighbor descent for approximate nearest neighbors.

PyNNDescent PyNNDescent is a Python nearest neighbor descent for approximate nearest neighbors. It provides a python implementation of Nearest Neighbo

Code base of KU AIRS: SPARK Autonomous Vehicle Team

KU AIRS: SPARK Autonomous Vehicle Project Check this link for the blog post describing this project and the video of SPARK in simulation and on parkou

Building house price data pipelines with Apache Beam and Spark on GCP

This project contains the process from building a web crawler to extract the raw data of house price to create ETL pipelines using Google Could Platform services.

Non-Metric Space Library (NMSLIB): An efficient similarity search library and a toolkit for evaluation of k-NN methods for generic non-metric spaces.

Non-Metric Space Library (NMSLIB) Important Notes NMSLIB is generic but fast, see the results of ANN benchmarks. A standalone implementation of our fa

implementation of the KNN algorithm on crab biometrics dataset for CS16

crab-knn implementation of the KNN algorithm in Python applied to biometrics data of purple rock crabs (leptograpsus variegatus) to classify the sex o

Data science Python notebooks: Deep learning (TensorFlow, Theano, Caffe, Keras), scikit-learn, Kaggle, big data (Spark, Hadoop MapReduce, HDFS), matplotlib, pandas, NumPy, SciPy, Python essentials, AWS, and various command lines.

Data science Python notebooks: Deep learning (TensorFlow, Theano, Caffe, Keras), scikit-learn, Kaggle, big data (Spark, Hadoop MapReduce, HDFS), matplotlib, pandas, NumPy, SciPy, Python essentials, AWS, and various command lines.

List of Data Science Cheatsheets to rule the world

Data Science Cheatsheets List of Data Science Cheatsheets to rule the world. Table of Contents Business Science Business Science Problem Framework Dat

Sentiment analysis on streaming twitter data using Spark Structured Streaming & Python

Sentiment analysis on streaming twitter data using Spark Structured Streaming & Python This project is a good starting point for those who have little

Instant search for and access to many datasets in Pyspark.

SparkDataset Provides instant access to many datasets right from Pyspark (in Spark DataFrame structure). Drop a star if you like the project. 😃 Motiv

My project contrasts K-Nearest Neighbors and Random Forrest Regressors on Real World data

kNN-vs-RFR My project contrasts K-Nearest Neighbors and Random Forrest Regressors on Real World data In many areas, rental bikes have been launched to

A simple voice detection system which can be applied practically for designing a device with capability to detect a baby’s cry and automatically turning on music

Auto-Baby-Cry-Detection-with-Music-Player A simple voice detection system which can be applied practically for designing a device with capability to d

A collection of neat and practical data science and machine learning projects

Data Science A collection of neat and practical data science and machine learning projects Explore the docs » Report Bug · Request Feature Table of Co

Implementations of Machine Learning models, Regularizers, Optimizers and different Cost functions.

Linear Models Implementations of LinearRegression, LassoRegression and RidgeRegression with appropriate Regularizers and Optimizers. Linear Regression

Spark development environment for k8s

Local Spark Dev Env with Docker Development environment for k8s. Using the spark-operator image to ensure it will be the same environment. Start conta

TensorFlowOnSpark brings TensorFlow programs to Apache Spark clusters.

TensorFlowOnSpark TensorFlowOnSpark brings scalable deep learning to Apache Hadoop and Apache Spark clusters. By combining salient features from the T

PySpark Cheat Sheet - learn PySpark and develop apps faster

This cheat sheet will help you learn PySpark and write PySpark apps faster. Everything in here is fully functional PySpark code you can run or adapt to your programs.

Visions provides an extensible suite of tools to support common data analysis operations

Visions And these visions of data types, they kept us up past the dawn. Visions provides an extensible suite of tools to support common data analysis

Industrial knn-based anomaly detection for images. Visit streamlit link to check out the demo.

Industrial KNN-based Anomaly Detection ⭐ Now has streamlit support! ⭐ Run $ streamlit run streamlit_app.py This repo aims to reproduce the results of

ANNchor is a python library which constructs approximate k-nearest neighbour graphs for slow metrics.

Fast k-NN graph construction for slow metrics

The MLOps platform for innovators 🚀

DS2.ai is an integrated AI operation solution that supports all stages from custom AI development to deployment. It is an AI-specialized platform service that collects data, builds a training dataset through data labeling, and enables automatic development of artificial intelligence and easy deployment and operation.

30 Days Of Machine Learning Using Pytorch

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

Official code for "Mean Shift for Self-Supervised Learning"

MSF Official code for "Mean Shift for Self-Supervised Learning" Requirements Python = 3.7.6 PyTorch = 1.4 torchvision = 0.5.0 faiss-gpu = 1.6.1 In

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

30 Days Of Machine Learning Using Pytorch Objective of the repository is to learn and build machine learning models using Pytorch. List of Algorithms

Automatically create Faiss knn indices with the most optimal similarity search parameters.

It selects the best indexing parameters to achieve the highest recalls given memory and query speed constraints.

[DEPRECATED] Tensorflow wrapper for DataFrames on Apache Spark

TensorFrames (Deprecated) Note: TensorFrames is deprecated. You can use pandas UDF instead. Experimental TensorFlow binding for Scala and Apache Spark

Distributed scikit-learn meta-estimators in PySpark

sk-dist: Distributed scikit-learn meta-estimators in PySpark What is it? sk-dist is a Python package for machine learning built on top of scikit-learn

Distributed Tensorflow, Keras and PyTorch on Apache Spark/Flink & Ray

A unified Data Analytics and AI platform for distributed TensorFlow, Keras and PyTorch on Apache Spark/Flink & Ray What is Analytics Zoo? Analytics Zo

Microsoft Machine Learning for Apache Spark

Microsoft Machine Learning for Apache Spark MMLSpark is an ecosystem of tools aimed towards expanding the distributed computing framework Apache Spark

TensorFlowOnSpark brings TensorFlow programs to Apache Spark clusters.

TensorFlowOnSpark TensorFlowOnSpark brings scalable deep learning to Apache Hadoop and Apache Spark clusters. By combining salient features from the T

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc

BigDL: Distributed Deep Learning Framework for Apache Spark

BigDL: Distributed Deep Learning on Apache Spark What is BigDL? BigDL is a distributed deep learning library for Apache Spark; with BigDL, users can w

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

Koalas: pandas API on Apache Spark

pandas API on Apache Spark Explore Koalas docs » Live notebook · Issues · Mailing list Help Thirsty Koalas Devastated by Recent Fires The Koalas proje

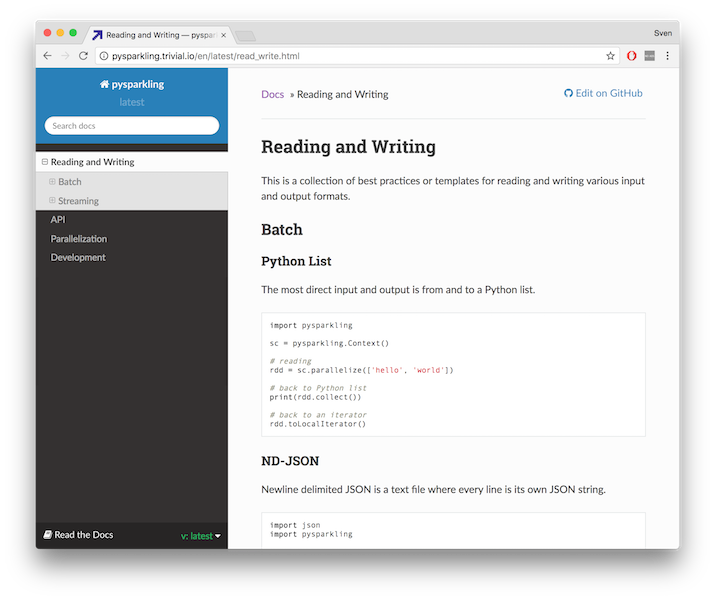

A pure Python implementation of Apache Spark's RDD and DStream interfaces.

pysparkling Pysparkling provides a faster, more responsive way to develop programs for PySpark. It enables code intended for Spark applications to exe

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

PySpark + Scikit-learn = Sparkit-learn

Sparkit-learn PySpark + Scikit-learn = Sparkit-learn GitHub: https://github.com/lensacom/sparkit-learn About Sparkit-learn aims to provide scikit-lear

Open source platform for the machine learning lifecycle

MLflow: A Machine Learning Lifecycle Platform MLflow is a platform to streamline machine learning development, including tracking experiments, packagi

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Apache Spark - A unified analytics engine for large-scale data processing

Apache Spark Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Scala, Java, Python, and R, and an op

Machine learning, in numpy

numpy-ml Ever wish you had an inefficient but somewhat legible collection of machine learning algorithms implemented exclusively in NumPy? No? Install

python-timbl, originally developed by Sander Canisius, is a Python extension module wrapping the full TiMBL C++ programming interface. With this module, all functionality exposed through the C++ interface is also available to Python scripts. Being able to access the API from Python greatly facilitates prototyping TiMBL-based applications.

README: python-timbl Authors: Sander Canisius, Maarten van Gompel Contact: [email protected] Web site: https://github.com/proycon/python-timbl/ pytho

Serverless proxy for Spark cluster

Hydrosphere Mist Hydrosphere Mist is a serverless proxy for Spark cluster. Mist provides a new functional programming framework and deployment model f

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

H2O is an Open Source, Distributed, Fast & Scalable Machine Learning Platform: Deep Learning, Gradient Boosting (GBM) & XGBoost, Random Forest, Generalized Linear Modeling (GLM with Elastic Net), K-Means, PCA, Generalized Additive Models (GAM), RuleFit, Support Vector Machine (SVM), Stacked Ensembles, Automatic Machine Learning (AutoML), etc.

H2O H2O is an in-memory platform for distributed, scalable machine learning. H2O uses familiar interfaces like R, Python, Scala, Java, JSON and the Fl

:truck: Agile Data Preparation Workflows made easy with dask, cudf, dask_cudf and pyspark

To launch a live notebook server to test optimus using binder or Colab, click on one of the following badges: Optimus is the missing framework to prof