1112 Repositories

Python Non-AR-Spatial-Temporal-Transformer Libraries

Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5

NLP-Summarizer Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5 This project aimed to provide in

Temporal Alignment Prediction for Supervised Representation Learning and Few-Shot Sequence Classification

Temporal Alignment Prediction for Supervised Representation Learning and Few-Shot Sequence Classification Introduction. This package includes the pyth

ANN model for prediction a spatio-temporal distribution of supercooled liquid in mixed-phase clouds using Doppler cloud radar spectra.

VOODOO Revealing supercooled liquid beyond lidar attenuation Explore the docs » Report Bug · Request Feature Table of Contents About The Project Built

Mae segmentation - Reproduction of semantic segmentation using masked autoencoder (mae)

ADE20k Semantic segmentation with MAE Getting started Install the mmsegmentation

The official code repo of "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection"

Hierarchical Token Semantic Audio Transformer Introduction The Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound

A simple non-official manager interface I'm using for my Raspberry Pis.

My Raspberry Pi Manager Overview I have two Raspberry Pi 4 Model B devices that I hooked up to my two TVs (one in my bedroom and the other in my new g

This repo contains the code required to train the multivariate time-series Transformer.

Multi-Variate Time-Series Transformer This repo contains the code required to train the multivariate time-series Transformer. Download the data The No

MoRecon - A tool for reconstructing missing frames in motion capture data.

MoRecon - A tool for reconstructing missing frames in motion capture data.

PyTorch code for BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax

Simple Transformer An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax. Note: The only ex

DocEnTr: An end-to-end document image enhancement transformer

DocEnTR Description Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer. This model is implemented on to

RuCLIP-SB (Russian Contrastive Language–Image Pretraining SWIN-BERT) is a multimodal model for obtaining images and text similarities and rearranging captions and pictures. Unlike other versions of the model we use BERT for text encoder and SWIN transformer for image encoder.

ruCLIP-SB RuCLIP-SB (Russian Contrastive Language–Image Pretraining SWIN-BERT) is a multimodal model for obtaining images and text similarities and re

Computer Vision Paper Reviews with Key Summary of paper, End to End Code Practice and Jupyter Notebook converted papers

Computer-Vision-Paper-Reviews Computer Vision Paper Reviews with Key Summary along Papers & Codes. Jonathan Choi 2021 The repository provides 100+ Pap

PyTorch implementation of "VRT: A Video Restoration Transformer"

VRT: A Video Restoration Transformer Jingyun Liang, Jiezhang Cao, Yuchen Fan, Kai Zhang, Rakesh Ranjan, Yawei Li, Radu Timofte, Luc Van Gool Computer

This repository contains code accompanying the paper "An End-to-End Chinese Text Normalization Model based on Rule-Guided Flat-Lattice Transformer"

FlatTN This repository contains code accompanying the paper "An End-to-End Chinese Text Normalization Model based on Rule-Guided Flat-Lattice Transfor

Goal of the project : Detecting Temporal Boundaries in Sign Language videos

MVA RecVis course final project : Goal of the project : Detecting Temporal Boundaries in Sign Language videos. Sign language automatic indexing is an

Decision Transformer: A brand new Offline RL Pattern

DecisionTransformer_StepbyStep Intro Decision Transformer: A brand new Offline RL Pattern. 这是关于NeurIPS 2021 热门论文Decision Transformer的复现。 👍 原文地址: Deci

Geometric Interpretation of Matrix Square Root and Inverse Square Root

Fast Differentiable Matrix Sqrt Root Geometric Interpretation of Matrix Square Root and Inverse Square Root This repository constains the official Pyt

This repository contains the code for TABS, a 3D CNN-Transformer hybrid automated brain tissue segmentation algorithm using T1w structural MRI scans

This repository contains the code for TABS, a 3D CNN-Transformer hybrid automated brain tissue segmentation algorithm using T1w structural MRI scans. TABS relies on a Res-Unet backbone, with a Vision Transformer embedded between the encoder and decoder layers.

SegTransVAE: Hybrid CNN - Transformer with Regularization for medical image segmentation

SegTransVAE: Hybrid CNN - Transformer with Regularization for medical image segmentation This repo is the official implementation for SegTransVAE. Seg

PyTorch implementation for the paper Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime

Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime Created by Prarthana Bhattacharyya. Disclaimer: This is n

Transformer based SAR image despeckling

Transformer based SAR image despeckling Using the code: The code is stable while using Python 3.6.13, CUDA =10.1 Clone this repository: git clone htt

Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer.

DocEnTR Description Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer. This model is implemented on to

Blind Video Temporal Consistency via Deep Video Prior

deep-video-prior (DVP) Code for NeurIPS 2020 paper: Blind Video Temporal Consistency via Deep Video Prior PyTorch implementation | paper | project web

A script to find the people whom you follow, but they don't follow you back

insta-non-followers A script to find the people whom you follow, but they don't follow you back Dependencies: python3 libraries - instaloader, getpass

Easy to use and customizable SOTA Semantic Segmentation models with abundant datasets in PyTorch

Semantic Segmentation Easy to use and customizable SOTA Semantic Segmentation models with abundant datasets in PyTorch Features Applicable to followin

OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive models

OptiPLANT OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive mod

Code for our paper A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization,

FSRA This repository contains the dataset link and the code for our paper A Transformer-Based Feature Segmentation and Region Alignment Method For UAV

EdiBERT is a generative model based on a bi-directional transformer, suited for image manipulation

EdiBERT, a generative model for image editing EdiBERT is a generative model based on a bi-directional transformer, suited for image manipulation. The

On the Adversarial Robustness of Visual Transformer

On the Adversarial Robustness of Visual Transformer Code for our paper "On the Adversarial Robustness of Visual Transformers"

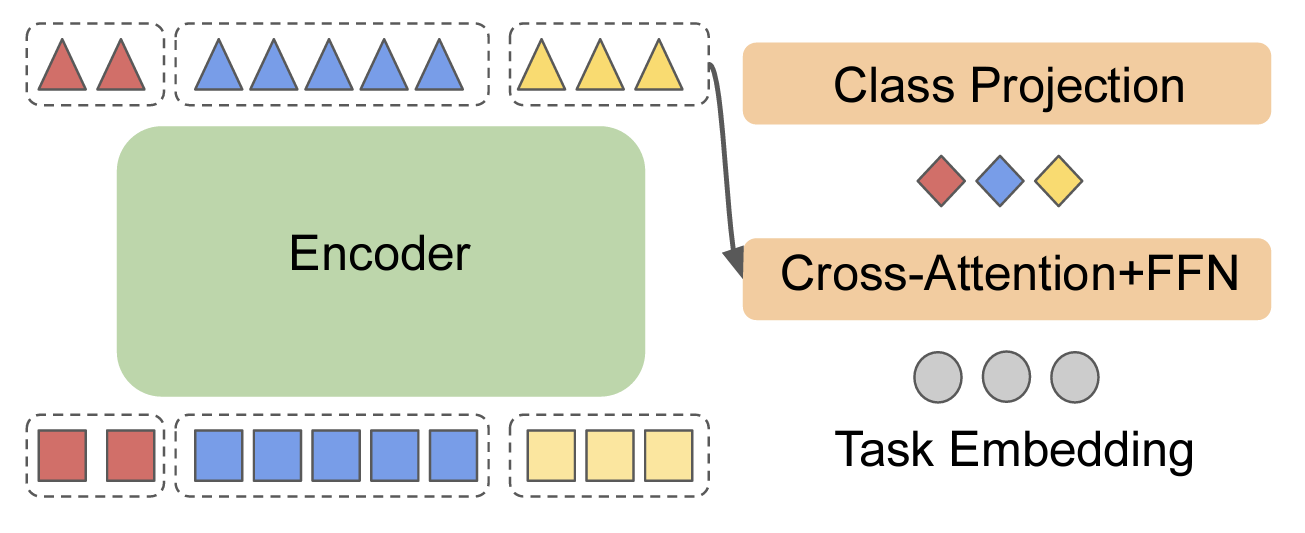

EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks

EncT5 (Unofficial) Pytorch Implementation of EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks About Finetune T5 model for classification & r

This is the repo of the manuscript "Dual-branch Attention-In-Attention Transformer for speech enhancement"

DB-AIAT: A Dual-branch attention-in-attention transformer for single-channel SE

RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2

RoNER RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2. It is meant to be an easy to use, hi

Vit-ImageClassification - Pytorch ViT for Image classification on the CIFAR10 dataset

Vit-ImageClassification Introduction This project uses ViT to perform image clas

STonKGs is a Sophisticated Transformer that can be jointly trained on biomedical text and knowledge graphs

STonKGs STonKGs is a Sophisticated Transformer that can be jointly trained on biomedical text and knowledge graphs. This multimodal Transformer combin

Simple and understandable swin-transformer OCR project

swin-transformer-ocr ocr with swin-transformer Overview Simple and understandable swin-transformer OCR project. The model in this repository heavily r

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images [ICCV 2021] © Mahmood Lab - This code is made avail

RATCHET is a Medical Transformer for Chest X-ray Diagnosis and Reporting

RATCHET: RAdiological Text Captioning for Human Examined Thoraxes RATCHET is a Medical Transformer for Chest X-ray Diagnosis and Reporting. Based on t

Generating Radiology Reports via Memory-driven Transformer

R2Gen This is the implementation of Generating Radiology Reports via Memory-driven Transformer at EMNLP-2020. Citations If you use or extend our work,

This code is for our paper "VTGAN: Semi-supervised Retinal Image Synthesis and Disease Prediction using Vision Transformers"

ICCV Workshop 2021 VTGAN This code is for our paper "VTGAN: Semi-supervised Retinal Image Synthesis and Disease Prediction using Vision Transformers"

Task Transformer Network for Joint MRI Reconstruction and Super-Resolution (MICCAI 2021)

T2Net Task Transformer Network for Joint MRI Reconstruction and Super-Resolution (MICCAI 2021) [Paper][Code] Dependencies numpy==1.18.5 scikit_image==

COVID-VIT: Classification of Covid-19 from CT chest images based on vision transformer models

COVID-ViT COVID-VIT: Classification of Covid-19 from CT chest images based on vision transformer models This code is to response to te MIA-COV19 compe

TransMIL: Transformer based Correlated Multiple Instance Learning for Whole Slide Image Classification

TransMIL: Transformer based Correlated Multiple Instance Learning for Whole Slide Image Classification [NeurIPS 2021] Abstract Multiple instance learn

Mixed Transformer UNet for Medical Image Segmentation

MT-UNet Update 2022/01/05 By another round of training based on previous weights, our model also achieved a better performance on ACDC (91.61% DSC). W

Official repository for the ISBI 2021 paper Transformer Assisted Convolutional Neural Network for Cell Instance Segmentation

SegPC-2021 This is the official repository for the ISBI 2021 paper Transformer Assisted Convolutional Neural Network for Cell Instance Segmentation by

This repo is the official implementation of "UCTransNet: Rethinking the Skip Connections in U-Net from a Channel-wise Perspective with Transformer"

[AAAI2022] UCTransNet This repo is the official implementation of "UCTransNet: Rethinking the Skip Connections in U-Net from a Channel-wise Perspectiv

nnFormer: Interleaved Transformer for Volumetric Segmentation

nnFormer: Interleaved Transformer for Volumetric Segmentation Code for paper "nnFormer: Interleaved Transformer for Volumetric Segmentation ". Please

Band-Adaptive Spectral-Spatial Feature Learning Neural Network for Hyperspectral Image Classification

Band-Adaptive Spectral-Spatial Feature Learning Neural Network for Hyperspectral Image Classification

Learning to Segment Instances in Videos with Spatial Propagation Network

Learning to Segment Instances in Videos with Spatial Propagation Network This paper is available at the 2017 DAVIS Challenge website. Check our result

Starlite-tile38 - Showcase using Tile38 via pyle38 in a Starlite application

Starlite-Tile38 Showcase using Tile38 via pyle38 in a Starlite application. Repo

This project is the implementation template for HW 0 and HW 1 for both the programming and non-programming tracks

This project is the implementation template for HW 0 and HW 1 for both the programming and non-programming tracks

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

Pytorch implementation of ICASSP 2022 paper Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

Fast Differentiable Matrix Sqrt Root

Official Pytorch implementation of ICLR 22 paper Fast Differentiable Matrix Square Root

This implementation contains the application of GPlearn's symbolic transformer on a commodity futures sector of the financial market.

GPlearn_finiance_stock_futures_extension This implementation contains the application of GPlearn's symbolic transformer on a commodity futures sector

ServiceX Transformer that converts flat ROOT ntuples into columnwise data

ServiceX_Uproot_Transformer ServiceX Transformer that converts flat ROOT ntuples into columnwise data Usage You can invoke the transformer from the co

Collect some papers about transformer with vision. Awesome Transformer with Computer Vision (CV)

Awesome Visual-Transformer Collect some Transformer with Computer-Vision (CV) papers. If you find some overlooked papers, please open issues or pull r

Transformer in Vision

Transformer-in-Vision Recent Transformer-based CV and related works. Welcome to comment/contribute! Keep updated. Resource SCENIC: A JAX Library for C

Transformer in Computer Vision

Transformer-in-Vision A paper list of some recent Transformer-based CV works. If you find some ignored papers, please open issues or pull requests. **

A curated list of efficient attention modules

awesome-fast-attention A curated list of efficient attention modules

Compact Bidirectional Transformer for Image Captioning

Compact Bidirectional Transformer for Image Captioning Requirements Python 3.8 Pytorch 1.6 lmdb h5py tensorboardX Prepare Data Please use git clone --

Video-Music Transformer

VMT Video-Music Transformer (VMT) is an attention-based multi-modal model, which generates piano music for a given video. Paper https://arxiv.org/abs/

Detail-Preserving Transformer for Light Field Image Super-Resolution

DPT Official Pytorch implementation of the paper "Detail-Preserving Transformer for Light Field Image Super-Resolution" accepted by AAAI 2022 . Update

Full Transformer Framework for Robust Point Cloud Registration with Deep Information Interaction

Full Transformer Framework for Robust Point Cloud Registration with Deep Information Interaction. arxiv This repository contains python scripts for tr

TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning

TransZero++ This repository contains the testing code for the paper "TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning" submitted

TransVTSpotter: End-to-end Video Text Spotter with Transformer

TransVTSpotter: End-to-end Video Text Spotter with Transformer Introduction A Multilingual, Open World Video Text Dataset and End-to-end Video Text Sp

Local-Global Stratified Transformer for Efficient Video Recognition

DualFormer This repo is the implementation of our manuscript entitled "Local-Global Stratified Transformer for Efficient Video Recognition". Our model

MADT: Offline Pre-trained Multi-Agent Decision Transformer

MADT: Offline Pre-trained Multi-Agent Decision Transformer A link to our paper can be found on Arxiv. Overview Official codebase for Offline Pre-train

Separation of Mainlobes and Sidelobes in the Ultrasound Image Based on the Spatial Covariance (MIST) and Aperture-Domain Spectrum of Received Signals

Separation of Mainlobes and Sidelobes in the Ultrasound Image Based on the Spatial Covariance (MIST) and Aperture-Domain Spectrum of Received Signals

TCNN Temporal convolutional neural network for real-time speech enhancement in the time domain

TCNN Pandey A, Wang D L. TCNN: Temporal convolutional neural network for real-time speech enhancement in the time domain[C]//ICASSP 2019-2019 IEEE Int

Implementation of "With a Little Help from my Temporal Context: Multimodal Egocentric Action Recognition, BMVC, 2021" in PyTorch

Multimodal Temporal Context Network (MTCN) This repository implements the model proposed in the paper: Evangelos Kazakos, Jaesung Huh, Arsha Nagrani,

The official code for “DocTr: Document Image Transformer for Geometric Unwarping and Illumination Correction”, ACM MM, Oral Paper, 2021.

Good news! Our new work exhibits state-of-the-art performances on DocUNet benchmark dataset: DocScanner: Robust Document Image Rectification with Prog

![[BMVC2021]](https://github.com/HowieMa/TransFusion-Pose/raw/main/images/framework.jpg)

[BMVC2021] "TransFusion: Cross-view Fusion with Transformer for 3D Human Pose Estimation"

TransFusion-Pose TransFusion: Cross-view Fusion with Transformer for 3D Human Pose Estimation Haoyu Ma, Liangjian Chen, Deying Kong, Zhe Wang, Xingwei

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers This is the repo used for human motion prediction with non-autoregress

This repository contains demos I made with the Transformers library by HuggingFace.

Transformers-Tutorials Hi there! This repository contains demos I made with the Transformers library by 🤗 HuggingFace. Currently, all of them are imp

Cross-modal Retrieval using Transformer Encoder Reasoning Networks (TERN). With use of Metric Learning and FAISS for fast similarity search on GPU

Cross-modal Retrieval using Transformer Encoder Reasoning Networks This project reimplements the idea from "Transformer Reasoning Network for Image-Te

Official implementation of "UCTransNet: Rethinking the Skip Connections in U-Net from a Channel-wise Perspective with Transformer"

[AAAI2022] UCTransNet This repo is the official implementation of "UCTransNet: Rethinking the Skip Connections in U-Net from a Channel-wise Perspectiv

[ACM MM 2021] Multiview Detection with Shadow Transformer (and View-Coherent Data Augmentation)

Multiview Detection with Shadow Transformer (and View-Coherent Data Augmentation) [arXiv] [paper] @inproceedings{hou2021multiview, title={Multiview

Unifying Global-Local Representations in Salient Object Detection with Transformer

GLSTR (Global-Local Saliency Transformer) This is the official implementation of paper "Unifying Global-Local Representations in Salient Object Detect

Official PyTorch Implementation of paper EAN: Event Adaptive Network for Efficient Action Recognition

Official PyTorch Implementation of paper EAN: Event Adaptive Network for Efficient Action Recognition

This is a code repository for paper OODformer: Out-Of-Distribution Detection Transformer

OODformer: Out-Of-Distribution Detection Transformer This repo is the official the implementation of the OODformer: Out-Of-Distribution Detection Tran

Image Fusion Transformer

Image-Fusion-Transformer Platform Python 3.7 Pytorch =1.0 Training Dataset MS-COCO 2014 (T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ram

Official implementation of Sparse Transformer-based Action Recognition

STAR Official implementation of S parse T ransformer-based A ction R ecognition Dataset download NTU RGB+D 60 action recognition of 2D/3D skeleton fro

MlTr: Multi-label Classification with Transformer

MlTr: Multi-label Classification with Transformer This is official implement of "MlTr: Multi-label Classification with Transformer". Abstract The task

Pyramid Pooling Transformer for Scene Understanding

Pyramid Pooling Transformer for Scene Understanding Requirements: torch 1.6+ torchvision 0.7.0 timm==0.3.2 Validated on torch 1.6.0, torchvision 0.7.0

Deep learning transformer model that generates unique music sequences.

music-ai Deep learning transformer model that generates unique music sequences. Abstract In 2017, a new state-of-the-art was published for natural lan

PyTorch implementation of Higher Order Recurrent Space-Time Transformer

Higher Order Recurrent Space-Time Transformer (HORST) This is the official PyTorch implementation of Higher Order Recurrent Space-Time Transformer. Th

Pytorch implementation of Decoupled Spatial-Temporal Transformer for Video Inpainting

Decoupled Spatial-Temporal Transformer for Video Inpainting By Rui Liu, Hanming Deng, Yangyi Huang, Xiaoyu Shi, Lewei Lu, Wenxiu Sun, Xiaogang Wang, J

Official PyTorch Implementation of "AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecasting".

AgentFormer This repo contains the official implementation of our paper: AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecast

Single-Shot Motion Completion with Transformer

Single-Shot Motion Completion with Transformer 👉 [Preprint] 👈 Abstract Motion completion is a challenging and long-discussed problem, which is of gr

![[ICCV 2021] Relaxed Transformer Decoders for Direct Action Proposal Generation](https://github.com/MCG-NJU/RTD-Action/raw/main/rtd_overview.png)

[ICCV 2021] Relaxed Transformer Decoders for Direct Action Proposal Generation

RTD-Net (ICCV 2021) This repo holds the codes of paper: "Relaxed Transformer Decoders for Direct Action Proposal Generation", accepted in ICCV 2021. N

IOT: Instance-wise Layer Reordering for Transformer Structures

Introduction This repository contains the code for Instance-wise Ordered Transformer (IOT), which is introduced in the ICLR2021 paper IOT: Instance-wi

Code and Resources for the Transformer Encoder Reasoning Network (TERN)

Transformer Encoder Reasoning Network Code for the cross-modal visual-linguistic retrieval method from "Transformer Reasoning Network for Image-Text M

Effective Use of Transformer Networks for Entity Tracking

Effective Use of Transformer Networks for Entity Tracking (EMNLP19) This is a PyTorch implementation of our EMNLP paper on the effectiveness of pre-tr

Pytorch implementation of set transformer

set_transformer Official PyTorch implementation of the paper Set Transformer: A Framework for Attention-based Permutation-Invariant Neural Networks .

GCNet: Non-local Networks Meet Squeeze-Excitation Networks and Beyond

GCNet for Object Detection By Yue Cao, Jiarui Xu, Stephen Lin, Fangyun Wei, Han Hu. This repo is a official implementation of "GCNet: Non-local Networ

Pytorch implementation of Compressive Transformers, from Deepmind

Compressive Transformer in Pytorch Pytorch implementation of Compressive Transformers, a variant of Transformer-XL with compressed memory for long-ran

Code repo for "Transformer on a Diet" paper

Transformer on a Diet Reference: C Wang, Z Ye, A Zhang, Z Zhang, A Smola. "Transformer on a Diet". arXiv preprint arXiv (2020). Installation pip insta

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch Reference Paper URL Author: Yi Tay, Dara Bahri, Donald Metzler