718 Repositories

Python Self-Tuning Libraries

VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training [Arxiv] VideoMAE: Masked Autoencoders are Data-Efficient Learne

![[CVPR 2022] PoseTriplet: Co-evolving 3D Human Pose Estimation, Imitation, and Hallucination under Self-supervision (Oral)](https://github.com/Garfield-kh/PoseTriplet/raw/main/assets/dual-loop-detail-v3.jpg)

[CVPR 2022] PoseTriplet: Co-evolving 3D Human Pose Estimation, Imitation, and Hallucination under Self-supervision (Oral)

PoseTriplet: Co-evolving 3D Human Pose Estimation, Imitation, and Hallucination under Self-supervision Kehong Gong*, Bingbing Li*, Jianfeng Zhang*, Ta

PyTorch implementation of U-TAE and PaPs for satellite image time series panoptic segmentation.

Panoptic Segmentation of Satellite Image Time Series with Convolutional Temporal Attention Networks (ICCV 2021) This repository is the official implem

Explorer is a Autonomous (self-hosted) Bittorrent Network Search Engine.

Explorer Explorer is a Autonomous (self-hosted) Bittorrent Network Search Engine. About The Project Screenshots Supported features Number Feature 1 DH

Code for T-Few from "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning"

T-Few This repository contains the official code for the paper: "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learni

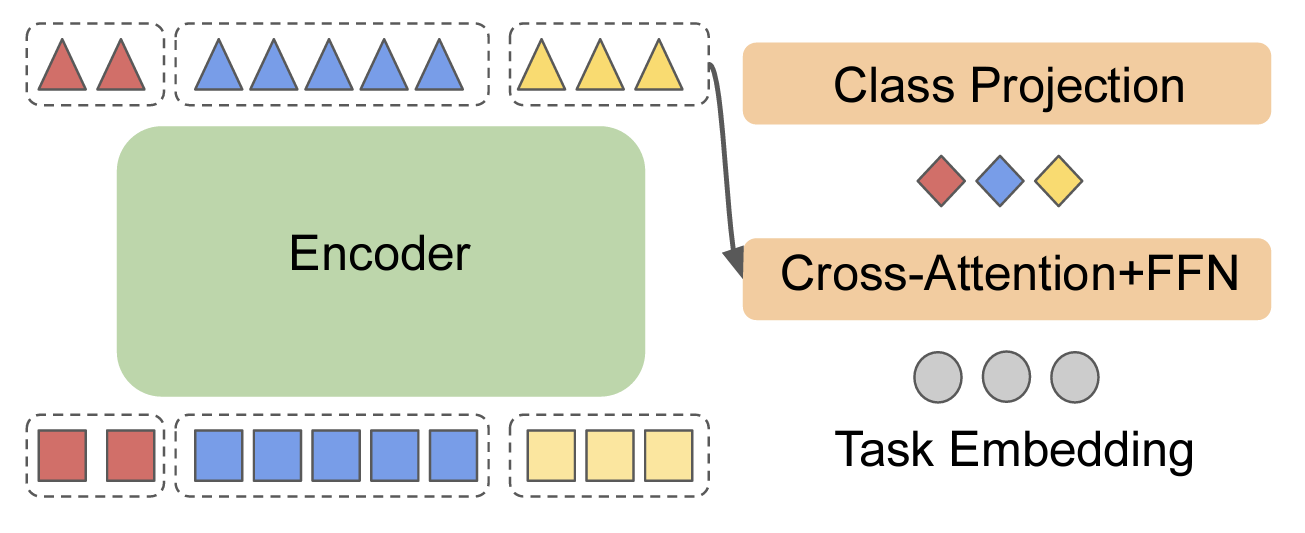

A PyTorch implementation of Mugs proposed by our paper "Mugs: A Multi-Granular Self-Supervised Learning Framework".

Mugs: A Multi-Granular Self-Supervised Learning Framework This is a PyTorch implementation of Mugs proposed by our paper "Mugs: A Multi-Granular Self-

Official repository for the paper "Self-Supervised Models are Continual Learners" (CVPR 2022)

Self-Supervised Models are Continual Learners This is the official repository for the paper: Self-Supervised Models are Continual Learners Enrico Fini

FreeSOLO for unsupervised instance segmentation, CVPR 2022

FreeSOLO: Learning to Segment Objects without Annotations This project hosts the code for implementing the FreeSOLO algorithm for unsupervised instanc

A library built upon PyTorch for building embeddings on discrete event sequences using self-supervision

pytorch-lifestream a library built upon PyTorch for building embeddings on discrete event sequences using self-supervision. It can process terabyte-si

(CVPR 2022) A minimalistic mapless end-to-end stack for joint perception, prediction, planning and control for self driving.

LAV Learning from All Vehicles Dian Chen, Philipp Krähenbühl CVPR 2022 (also arXiV 2203.11934) This repo contains code for paper Learning from all veh

![[CVPR 2022 Oral] Crafting Better Contrastive Views for Siamese Representation Learning](https://github.com/xyupeng/ContrastiveCrop/raw/main/figs/motivation.png)

[CVPR 2022 Oral] Crafting Better Contrastive Views for Siamese Representation Learning

Crafting Better Contrastive Views for Siamese Representation Learning (CVPR 2022 Oral) 2022-03-29: The paper was selected as a CVPR 2022 Oral paper! 2

Official implementation of "CrossPoint: Self-Supervised Cross-Modal Contrastive Learning for 3D Point Cloud Understanding" (CVPR, 2022)

CrossPoint: Self-Supervised Cross-Modal Contrastive Learning for 3D Point Cloud Understanding (CVPR'22) Paper Link | Project Page Abstract : Manual an

Unsupervised phone and word segmentation using dynamic programming on self-supervised VQ features.

Unsupervised Phone and Word Segmentation using Vector-Quantized Neural Networks Overview Unsupervised phone and word segmentation on speech data is pe

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

Azure free vpn for students only! (Self hosted/No sketchy services/Fast and free)

Azpn-Azure-Free-VPN Azure free vpn for students only! (Self hosted/No sketchy services/Fast and free) This is an alternative secure way of accessing f

PyTorch implementation of "data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language" from Meta AI

data2vec-pytorch PyTorch implementation of "data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language" from Meta AI (F

(CVPR 2022) Pytorch implementation of "Self-supervised transformers for unsupervised object discovery using normalized cut"

(CVPR 2022) TokenCut Pytorch implementation of Tokencut: Self-supervised Transformers for Unsupervised Object Discovery using Normalized Cut Yangtao W

Includes PyTorch - Keras model porting code for ConvNeXt family of models with fine-tuning and inference notebooks.

ConvNeXt-TF This repository provides TensorFlow / Keras implementations of different ConvNeXt [1] variants. It also provides the TensorFlow / Keras mo

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology (LMRL Workshop, NeurIPS 2021)

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology Self-Supervised Vision Transformers Learn Visual Concepts in Histopatholog

Prompt tuning toolkit for GPT-2 and GPT-Neo

mkultra mkultra is a prompt tuning toolkit for GPT-2 and GPT-Neo. Prompt tuning injects a string of 20-100 special tokens into the context in order to

Multilingual Emotion classification using BERT (fine-tuning). Published at the WASSA workshop (ACL2022).

XLM-EMO: Multilingual Emotion Prediction in Social Media Text Abstract Detecting emotion in text allows social and computational scientists to study h

Open source implementation of "A Self-Supervised Descriptor for Image Copy Detection" (SSCD).

A Self-Supervised Descriptor for Image Copy Detection (SSCD) This is the open-source codebase for "A Self-Supervised Descriptor for Image Copy Detecti

PromptDet: Expand Your Detector Vocabulary with Uncurated Images

PromptDet: Expand Your Detector Vocabulary with Uncurated Images Paper Website Introduction The goal of this work is to establish a scalable pipeline

SelfRemaster: SSL Speech Restoration

SelfRemaster: Self-Supervised Speech Restoration Official implementation of SelfRemaster: Self-Supervised Speech Restoration with Analysis-by-Synthesi

LightHuBERT: Lightweight and Configurable Speech Representation Learning with Once-for-All Hidden-Unit BERT

LightHuBERT LightHuBERT: Lightweight and Configurable Speech Representation Learning with Once-for-All Hidden-Unit BERT | Github | Huggingface | SUPER

Official implementation of "Watermarking Images in Self-Supervised Latent-Spaces"

🔍 Watermarking Images in Self-Supervised Latent-Spaces PyTorch implementation and pretrained models for the paper. For details, see Watermarking Imag

Repository for fine-tuning Transformers 🤗 based seq2seq speech models in JAX/Flax.

Seq2Seq Speech in JAX A JAX/Flax repository for combining a pre-trained speech encoder model (e.g. Wav2Vec2, HuBERT, WavLM) with a pre-trained text de

Code for the Findings of NAACL 2022(Long Paper): AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks

AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks arXiv link: upcoming To be published in Findings of NA

The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation"

SD-AANet The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation" [arxiv] Overview confi

The offcial repository for 'CharacterBERT and Self-Teaching for Improving the Robustness of Dense Retrievers on Queries with Typos', SIGIR2022

CharacterBERT-DR The offcial repository for CharacterBERT and Self-Teaching for Improving the Robustness of Dense Retrievers on Queries with Typos, Sh

Codes for SIGIR'22 Paper 'On-Device Next-Item Recommendation with Self-Supervised Knowledge Distillation'

OD-Rec Codes for SIGIR'22 Paper 'On-Device Next-Item Recommendation with Self-Supervised Knowledge Distillation' Paper, saved teacher models and Andro

Code for our SIGIR 2022 accepted paper : P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-based Learning and Pre-finetuning

P3 Ranker Implementation for our SIGIR2022 accepted paper: P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-bas

RODD: A Self-Supervised Approach for Robust Out-of-Distribution Detection

RODD Official Implementation of 2022 CVPRW Paper RODD: A Self-Supervised Approach for Robust Out-of-Distribution Detection Introduction: Recent studie

Implementation of the Hybrid Perception Block and Dual-Pruned Self-Attention block from the ITTR paper for Image to Image Translation using Transformers

ITTR - Pytorch Implementation of the Hybrid Perception Block (HPB) and Dual-Pruned Self-Attention (DPSA) block from the ITTR paper for Image to Image

In this project we predict the forest cover type using the cartographic variables in the training/test datasets.

Kaggle Competition: Forest Cover Type Prediction In this project we predict the forest cover type (the predominant kind of tree cover) using the carto

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning This repository is official Tensorflow implementation of paper: Ensemb

Code for ACL 2022 main conference paper "STEMM: Self-learning with Speech-text Manifold Mixup for Speech Translation".

STEMM: Self-learning with Speech-Text Manifold Mixup for Speech Translation This is a PyTorch implementation for the ACL 2022 main conference paper ST

This is an official implementation of the CVPR2022 paper "Blind2Unblind: Self-Supervised Image Denoising with Visible Blind Spots".

Blind2Unblind: Self-Supervised Image Denoising with Visible Blind Spots Blind2Unblind Citing Blind2Unblind @inproceedings{wang2022blind2unblind, tit

code for the ICLR'22 paper: On Robust Prefix-Tuning for Text Classification

On Robust Prefix-Tuning for Text Classification Prefix-tuning has drawed much attention as it is a parameter-efficient and modular alternative to adap

Simple Self-Bot for Discord

KeunoBot 🐼 -Simple Self-Bot for Discord KEUNOBOT 🐼 - Run KeunoBot : /* - Install KeunoBot - Extract it - Run setup.bat - Set token and prefi

Implementation of a memory efficient multi-head attention as proposed in the paper, "Self-attention Does Not Need O(n²) Memory"

Memory Efficient Attention Pytorch Implementation of a memory efficient multi-head attention as proposed in the paper, Self-attention Does Not Need O(

Self-driving car env with PPO algorithm from stable baseline3

Self-driving car with RL stable baseline3 Most of the project develop from https://github.com/GerardMaggiolino/Gym-Medium-Post Please check it out! Th

Codes for "Template-free Prompt Tuning for Few-shot NER".

EntLM The source codes for EntLM. Dependencies: Cuda 10.1, python 3.6.5 To install the required packages by following commands: $ pip3 install -r requ

Tensorflow 1.13.X implementation for our NN paper: Wei Xia, Sen Wang, Ming Yang, Quanxue Gao, Jungong Han, Xinbo Gao: Multi-view graph embedding clustering network: Joint self-supervision and block diagonal representation. Neural Networks 145: 1-9 (2022)

Multi-view graph embedding clustering network: Joint self-supervision and block diagonal representation Simple implementation of our paper MVGC. The d

E2e music remastering system - End-to-end Music Remastering System Using Self-supervised and Adversarial Training

End-to-end Music Remastering System This repository includes source code and pre

Smart edu-autobooking - Johnson @ DMI-UNICT study room self-booking system

smart_edu-autobooking Sistema di autoprenotazione per l'aula studio Johnson@DMI-

Discord-selfbot - Very basic discord self bot

discord-selfbot Very basic discord self bot still being actively developed requi

SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING - The Facebook paper about fine tuning RoBERTa with contrastive loss

"# SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING" i

OpenDelta - An Open-Source Framework for Paramter Efficient Tuning.

OpenDelta is a toolkit for parameter efficient methods (we dub it as delta tuning), by which users could flexibly assign (or add) a small amount parameters to update while keeping the most paramters frozen. By using OpenDelta, users could easily implement prefix-tuning, adapters, Lora, or any other types of delta tuning with preferred PTMs.

Code and Datasets from the paper "Self-supervised contrastive learning for volcanic unrest detection from InSAR data"

Code and Datasets from the paper "Self-supervised contrastive learning for volcanic unrest detection from InSAR data" You can download the pretrained

A Broad Study on the Transferability of Visual Representations with Contrastive Learning

A Broad Study on the Transferability of Visual Representations with Contrastive Learning This repository contains code for the paper: A Broad Study on

From Canonical Correlation Analysis to Self-supervised Graph Neural Networks

Code for CCA-SSG model proposed in the NeurIPS 2021 paper From Canonical Correlation Analysis to Self-supervised Graph Neural Networks.

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers This repo contains our codes for the paper "No Parameters Left Behind: Sensitivity Gu

Self-Correcting Quantum Many-Body Control using Reinforcement Learning with Tensor Networks

Self-Correcting Quantum Many-Body Control using Reinforcement Learning with Tensor Networks This repository contains the code and data for the corresp

Implementation of SOMs (Self-Organizing Maps) with neighborhood-based map topologies.

py-self-organizing-maps Simple implementation of self-organizing maps (SOMs) A SOM is an unsupervised method for learning a mapping from a discrete ne

Large-scale Knowledge Graph Construction with Prompting

Large-scale Knowledge Graph Construction with Prompting across tasks (predictive and generative), and modalities (language, image, vision + language, etc.)

SubOmiEmbed: Self-supervised Representation Learning of Multi-omics Data for Cancer Type Classification

SubOmiEmbed: Self-supervised Representation Learning of Multi-omics Data for Cancer Type Classification

Byzantine-robust decentralized learning via self-centered clipping

Byzantine-robust decentralized learning via self-centered clipping In this paper, we study the challenging task of Byzantine-robust decentralized trai

The mini-AlphaStar (mini-AS, or mAS) - mini-scale version (non-official) of the AlphaStar (AS)

A mini-scale reproduction code of the AlphaStar program. Note: the original AlphaStar is the AI proposed by DeepMind to play StarCraft II.

Simple implementation of Self Organizing Maps (SOMs) with rectangular and hexagonal grid topologies

py-self-organizing-map Simple implementation of Self Organizing Maps (SOMs) with rectangular and hexagonal grid topologies. A SOM is a simple unsuperv

Implementation of the method described in the Speech Resynthesis from Discrete Disentangled Self-Supervised Representations.

Speech Resynthesis from Discrete Disentangled Self-Supervised Representations Implementation of the method described in the Speech Resynthesis from Di

Open source simulator for autonomous vehicles built on Unreal Engine / Unity, from Microsoft AI & Research

Welcome to AirSim AirSim is a simulator for drones, cars and more, built on Unreal Engine (we now also have an experimental Unity release). It is open

Ssl-tool - A simple interactive CLI wrapper around openssl to make creation and installation of self-signed certs easy

What's this? A simple interactive CLI wrapper around openssl to make self-signin

Convolutional neural network that analyzes self-generated images in a variety of languages to find etymological similarities

This project is a convolutional neural network (CNN) that analyzes self-generated images in a variety of languages to find etymological similarities. Specifically, the goal is to prove that computer vision can be used to identify cognates known to exist, and perhaps lead linguists to evidence of unknown cognates.

Mae segmentation - Reproduction of semantic segmentation using masked autoencoder (mae)

ADE20k Semantic segmentation with MAE Getting started Install the mmsegmentation

Real-time domain adaptation for semantic segmentation

Advanced-Machine-Learning This repository contains the code for the project Real

PyTorch implementation of DirectCLR from paper Understanding Dimensional Collapse in Contrastive Self-supervised Learning

DirectCLR DirectCLR is a simple contrastive learning model for visual representation learning. It does not require a trainable projector as SimCLR. It

Pytorch Performace Tuning, WandB, AMP, Multi-GPU, TensorRT, Triton

Plant Pathology 2020 FGVC7 Introduction A deep learning model pipeline for training, experimentaiton and deployment for the Kaggle Competition, Plant

Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners

DART Implementation for ICLR2022 paper Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners. Environment [email protected] Use pi

Pytorch Implementation of Neural Analysis and Synthesis: Reconstructing Speech from Self-Supervised Representations

NANSY: Unofficial Pytorch Implementation of Neural Analysis and Synthesis: Reconstructing Speech from Self-Supervised Representations Notice Papers' D

Self-supervised learning algorithms provide a way to train Deep Neural Networks in an unsupervised way using contrastive losses

Self-supervised learning Self-supervised learning algorithms provide a way to train Deep Neural Networks in an unsupervised way using contrastive loss

CAST: Character labeling in Animation using Self-supervision by Tracking

CAST: Character labeling in Animation using Self-supervision by Tracking (Published as a conference paper at EuroGraphics 2022) Note: The CAST paper c

Using Self-Supervised Pretext Tasks for Active Learning - Official Pytorch Implementation

Using Self-Supervised Pretext Tasks for Active Learning - Official Pytorch Implementation Experiment Setting: CIFAR10 (downloaded and saved in ./DATA

Self-Supervised Deep Blind Video Super-Resolution

Self-Blind-VSR Paper | Discussion Self-Supervised Deep Blind Video Super-Resolution By Haoran Bai and Jinshan Pan Abstract Existing deep learning-base

Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On

UPMT Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On See main.py as an example: from model import PopM

PyTorch implementation for the paper Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime

Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime Created by Prarthana Bhattacharyya. Disclaimer: This is n

Hyperparameters tuning and features selection are two common steps in every machine learning pipeline.

shap-hypetune A python package for simultaneous Hyperparameters Tuning and Features Selection for Gradient Boosting Models. Overview Hyperparameters t

This tool is created by Shahzain and is one of the best self bots out there!

Shahzain SelfBot This tool is created by Shahzain and is one of the best self bots out there! Features Token Destroyer! Server Nuker(50-100 Bans Per S

Innocent-Bot - A Discord client self-bot for destroying, nuking and causing mischief in servers

Innocent-bot A Discord client self-bot for destroying, nuking and causing mischi

SAS: Self-Augmentation Strategy for Language Model Pre-training

SAS: Self-Augmentation Strategy for Language Model Pre-training This repository

Cycle Self-Training for Domain Adaptation (NeurIPS 2021)

CST Code release for "Cycle Self-Training for Domain Adaptation" (NeurIPS 2021) Prerequisites torch=1.7.0 torchvision qpsolvers numpy prettytable tqd

EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks

EncT5 (Unofficial) Pytorch Implementation of EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks About Finetune T5 model for classification & r

Self-supervised learning (SSL) is a method of machine learning

Self-supervised learning (SSL) is a method of machine learning. It learns from unlabeled sample data. It can be regarded as an intermediate form between supervised and unsupervised learning.

My self-hosting infrastructure, fully automated from empty disk to operating services

Khue's Homelab Current status: ALPHA This project utilizes Infrastructure as Code to automate provisioning, operating, and updating self-hosted servic

Source code of all the projects of Udacity Self-Driving Car Engineer Nanodegree.

self-driving-car In this repository I will share the source code of all the projects of Udacity Self-Driving Car Engineer Nanodegree. Hope this might

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML)

package tests docs license stats support This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML

MODSKIN-LOLPRO-updater: The mod is fkn 10y old and has'nt a self-updater

The mod is fkn 10y old and has'nt a self-updater. To use it just run the exec, wait some seconds, and it will run the new modsk

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images Histological Image Segmentation This

BioPy is a collection (in-progress) of biologically-inspired algorithms written in Python

BioPy is a collection (in-progress) of biologically-inspired algorithms written in Python. Some of the algorithms included are mor

PyToch implementation of A Novel Self-supervised Learning Task Designed for Anomaly Segmentation

Self-Supervised Anomaly Segmentation Intorduction This is a PyToch implementation of A Novel Self-supervised Learning Task Designed for Anomaly Segmen

Transformer in Vision

Transformer-in-Vision Recent Transformer-based CV and related works. Welcome to comment/contribute! Keep updated. Resource SCENIC: A JAX Library for C

A curated list of efficient attention modules

awesome-fast-attention A curated list of efficient attention modules

Original Implementation of Prompt Tuning from Lester, et al, 2021

Prompt Tuning This is the code to reproduce the experiments from the EMNLP 2021 paper "The Power of Scale for Parameter-Efficient Prompt Tuning" (Lest

Meta Self-learning for Multi-Source Domain Adaptation: A Benchmark

Meta Self-Learning for Multi-Source Domain Adaptation: A Benchmark Project | Arxiv | YouTube | | Abstract In recent years, deep learning-based methods

Fuzzware is a project for automated, self-configuring fuzzing of firmware images

Fuzzware Fuzzware is a project for automated, self-configuring fuzzing of firmware images. The idea of this project is to configure the memory ranges

A handy tool for common machine learning models' hyper-parameter tuning.

Common machine learning models' hyperparameter tuning This repo is for a collection of hyper-parameter tuning for "common" machine learning models, in

In this project, we compared Spanish BERT and Multilingual BERT in the Sentiment Analysis task.

Applying BERT Fine Tuning to Sentiment Classification on Amazon Reviews Abstract Sentiment analysis has made great progress in recent years, due to th

A complete, self-contained example for training ImageNet at state-of-the-art speed with FFCV

ffcv ImageNet Training A minimal, single-file PyTorch ImageNet training script designed for hackability. Run train_imagenet.py to get... ...high accur