415 Repositories

Python motion-synthesis Libraries

High-Resolution Image Synthesis with Latent Diffusion Models

Latent Diffusion Models arXiv | BibTeX High-Resolution Image Synthesis with Latent Diffusion Models Robin Rombach*, Andreas Blattmann*, Dominik Lorenz

Deep motion transfer

animation-with-keypoint-mask Paper The right most square is the final result. Softmax mask (circles): \ Heatmap mask: \ conda env create -f environmen

Differential rendering based motion capture blender project.

TraceArmature Summary TraceArmature is currently a set of python scripts that allow for high fidelity motion capture through the use of AI pose estima

A minimal TPU compatible Jax implementation of NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

NeRF Minimal Jax implementation of NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Result of Tiny-NeRF RGB Depth

Official PyTorch Implementation of paper "NeLF: Neural Light-transport Field for Single Portrait View Synthesis and Relighting", EGSR 2021.

NeLF: Neural Light-transport Field for Single Portrait View Synthesis and Relighting Official PyTorch Implementation of paper "NeLF: Neural Light-tran

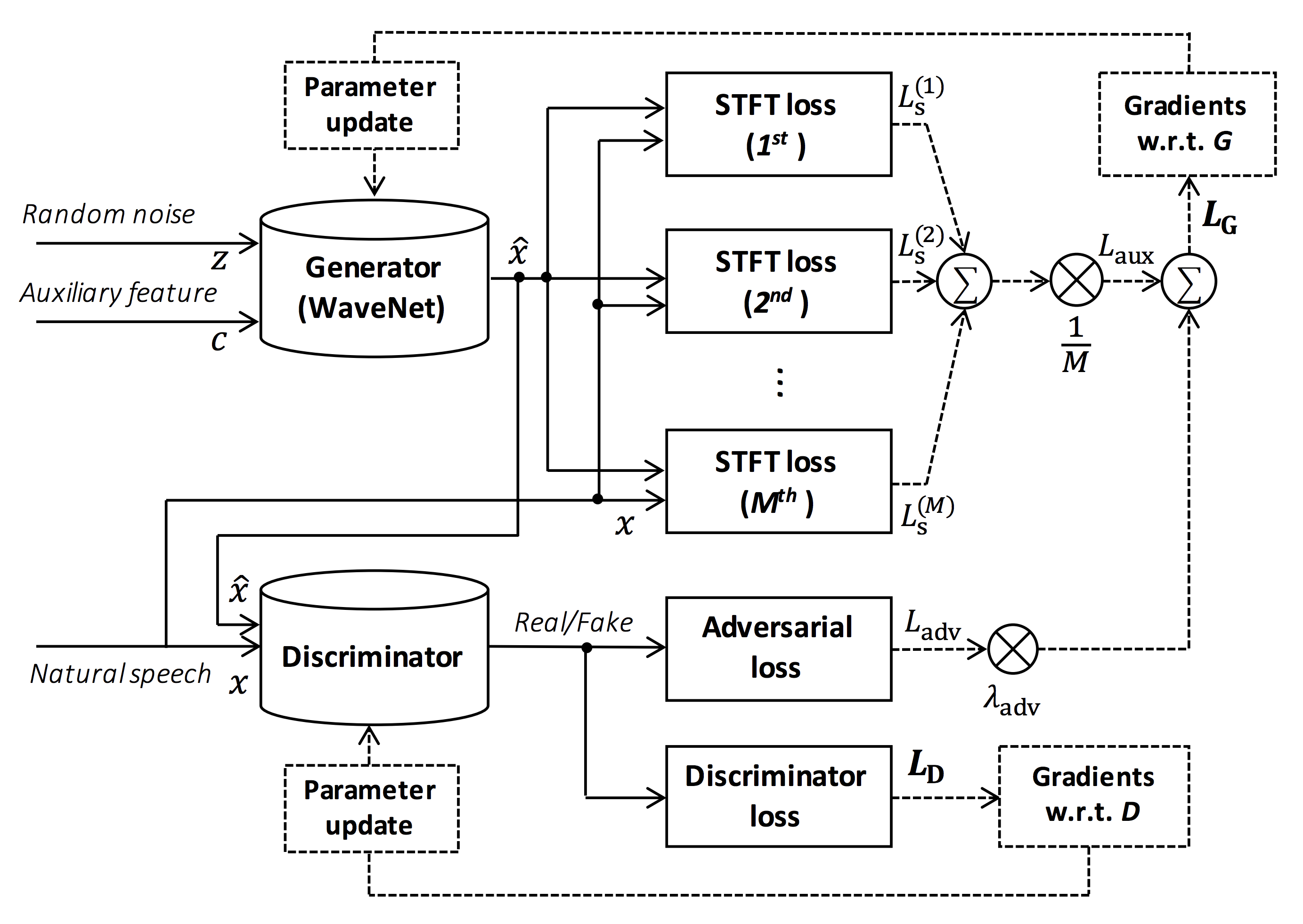

Unofficial Parallel WaveGAN (+ MelGAN & Multi-band MelGAN & HiFi-GAN & StyleMelGAN) with Pytorch

Parallel WaveGAN implementation with Pytorch This repository provides UNOFFICIAL pytorch implementations of the following models: Parallel WaveGAN Mel

PyTorch implementation of Super SloMo by Jiang et al.

Super-SloMo PyTorch implementation of "Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation" by Jiang H., Sun

Code release for the paper “Worldsheet Wrapping the World in a 3D Sheet for View Synthesis from a Single Image”, ICCV 2021.

Worldsheet: Wrapping the World in a 3D Sheet for View Synthesis from a Single Image This repository contains the code for the following paper: R. Hu,

StyleSwin: Transformer-based GAN for High-resolution Image Generation

StyleSwin This repo is the official implementation of "StyleSwin: Transformer-based GAN for High-resolution Image Generation". By Bowen Zhang, Shuyang

Fast (simple) spectral synthesis and emission-line fitting of DESI spectra.

FastSpecFit Introduction This repository contains code and documentation to perform fast, simple spectral synthesis and emission-line fitting of DESI

High-Resolution Image Synthesis with Latent Diffusion Models

Latent Diffusion Models Requirements A suitable conda environment named ldm can be created and activated with: conda env create -f environment.yaml co

PyTorch implementation of our paper: Decoupling and Recoupling Spatiotemporal Representation for RGB-D-based Motion Recognition

Decoupling and Recoupling Spatiotemporal Representation for RGB-D-based Motion Recognition, arxiv This is a PyTorch implementation of our paper. 1. Re

A warping based image translation model focusing on upper body synthesis.

Pose2Img Upper body image synthesis from skeleton(Keypoints). Sub module in the ICCV-2021 paper "Speech Drives Templates: Co-Speech Gesture Synthesis

Pytorch implementation of the paper Improving Text-to-Image Synthesis Using Contrastive Learning

T2I_CL This is the official Pytorch implementation of the paper Improving Text-to-Image Synthesis Using Contrastive Learning Requirements Linux Python

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism (SVS & TTS); AAAI 2022; Official code

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism This repository is the official PyTorch implementation of our AAAI-2022 paper, in

Putting NeRF on a Diet: Semantically Consistent Few-Shot View Synthesis

Putting NeRF on a Diet: Semantically Consistent Few-Shot View Synthesis Website | ICCV paper | arXiv | Twitter This repository contains the official i

J.A.R.V.I.S is an AI virtual assistant made in python.

J.A.R.V.I.S is an AI virtual assistant made in python. Running JARVIS Without Python To run JARVIS without python: 1. Head over to our installation pa

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism (SVS & TTS); AAAI 2022

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism This repository is the official PyTorch implementation of our AAAI-2022 paper, in

Stochastic Tensor Optimization for Robot Motion - A GPU Robot Motion Toolkit

STORM Stochastic Tensor Optimization for Robot Motion - A GPU Robot Motion Toolkit [Install Instructions] [Paper] [Website] This package contains code

![[ICCV 2021] Our work presents a novel neural rendering approach that can efficiently reconstruct geometric and neural radiance fields for view synthesis.](https://github.com/apchenstu/mvsnerf/raw/main/configs/pipeline.png)

[ICCV 2021] Our work presents a novel neural rendering approach that can efficiently reconstruct geometric and neural radiance fields for view synthesis.

MVSNeRF Project page | Paper This repository contains a pytorch lightning implementation for the ICCV 2021 paper: MVSNeRF: Fast Generalizable Radiance

C3DPO - Canonical 3D Pose Networks for Non-rigid Structure From Motion.

C3DPO: Canonical 3D Pose Networks for Non-Rigid Structure From Motion By: David Novotny, Nikhila Ravi, Benjamin Graham, Natalia Neverova, Andrea Vedal

Human-Pose-and-Motion History

Human Pose and Motion Scientist Approach Eadweard Muybridge, The Galloping Horse Portfolio, 1887 Etienne-Jules Marey, Descent of Inclined Plane, Chron

Use .csv files to record, play and evaluate motion capture data.

Purpose These scripts allow you to record mocap data to, and play from .csv files. This approach facilitates parsing of body movement data in statisti

Convert human motion from video to .bvh

video_to_bvh Convert human motion from video to .bvh with Google Colab Usage 1. Open video_to_bvh.ipynb in Google Colab Go to https://colab.research.g

Crowd-sourced Annotation of Human Motion.

Motion Annotation Tool Live: https://motion-annotation.humanoids.kit.edu Paper: The KIT Motion-Language Dataset Installation Start by installing all P

Tools for the Cleveland State Human Motion and Control Lab

Introduction This is a collection of tools that are helpful for gait analysis. Some are specific to the needs of the Human Motion and Control Lab at C

The code written during my Bachelor Thesis "Classification of Human Whole-Body Motion using Hidden Markov Models".

This code was written during the course of my Bachelor thesis Classification of Human Whole-Body Motion using Hidden Markov Models. Some things might

A motion detection system with RaspberryPi, OpenCV, Python

Human Detection System using Raspberry Pi Functionality Activates a relay on detecting motion. You may need following components to get the expected R

Python package for analyzing sensor-collected human motion data

Python package for analyzing sensor-collected human motion data

Implementation of Auto-Conditioned Recurrent Networks for Extended Complex Human Motion Synthesis

acLSTM_motion This folder contains an implementation of acRNN for the CMU motion database written in Pytorch. See the following links for more backgro

A Re-implementation of the paper "A Deep Learning Framework for Character Motion Synthesis and Editing"

What is This This is a simple re-implementation of the paper "A Deep Learning Framework for Character Motion Synthesis and Editing"(1). Only Sections

Tensorflow Implementation of ECCV'18 paper: Multimodal Human Motion Synthesis

MT-VAE for Multimodal Human Motion Synthesis This is the code for ECCV 2018 paper MT-VAE: Learning Motion Transformations to Generate Multimodal Human

Adversarial Learning for Modeling Human Motion

Adversarial Learning for Modeling Human Motion This repository contains the open source code which reproduces the results for the paper: Adversarial l

Human motion synthesis using Unity3D

Human motion synthesis using Unity3D Prerequisite: Software: amc2bvh.exe, Unity 2017, Blender. Unity: RockVR (Video Capture), scenes, character models

An LSTM based GAN for Human motion synthesis

GAN-motion-Prediction An LSTM based GAN for motion synthesis has a few issues reading H3.6M data from A.Jain et al , will fix soon. Prediction of the

An implementation of "Learning human behaviors from motion capture by adversarial imitation"

Merel-MoCap-GAIL An implementation of Merel et al.'s paper on generative adversarial imitation learning (GAIL) using motion capture (MoCap) data: Lear

Motion Reconstruction Code and Data for Skills from Videos (SFV)

Motion Reconstruction Code and Data for Skills from Videos (SFV) This repo contains the data and the code for motion reconstruction component of the S

pyo is a Python module written in C to help digital signal processing script creation.

pyo is a Python module written in C to help digital signal processing script creation.

Code for "Neural Body: Implicit Neural Representations with Structured Latent Codes for Novel View Synthesis of Dynamic Humans" CVPR 2021 best paper candidate

News 05/17/2021 To make the comparison on ZJU-MoCap easier, we save quantitative and qualitative results of other methods at here, including Neural Vo

Tensorflow implementation of ID-Unet: Iterative Soft and Hard Deformation for View Synthesis.

ID-Unet: Iterative-view-synthesis(CVPR2021 Oral) Tensorflow implementation of ID-Unet: Iterative Soft and Hard Deformation for View Synthesis. Overvie

Code repository for "Stable View Synthesis".

Stable View Synthesis Code repository for "Stable View Synthesis". Setup Install the following Python packages in your Python environment - numpy (1.1

Code for paper Novel View Synthesis via Depth-guided Skip Connections

Novel View Synthesis via Depth-guided Skip Connections Code for paper Novel View Synthesis via Depth-guided Skip Connections @InProceedings{Hou_2021_W

Code repository for "Free View Synthesis", ECCV 2020.

Free View Synthesis Code repository for "Free View Synthesis", ECCV 2020. Setup Install the following Python packages in your Python environment - num

Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources.

Illumination_Decomposition Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources. This code implements the

🏃♀️ A curated list about human motion capture, analysis and synthesis.

Awesome Human Motion 🏃♀️ A curated list about human motion capture, analysis and synthesis. Contents Introduction Human Models Datasets Data Process

![An end-to-end library for editing and rendering motion of 3D characters with deep learning [SIGGRAPH 2020]](https://github.com/DeepMotionEditing/deep-motion-editing/raw/master/images/retargeting_teaser.gif)

An end-to-end library for editing and rendering motion of 3D characters with deep learning [SIGGRAPH 2020]

Deep-motion-editing This library provides fundamental and advanced functions to work with 3D character animation in deep learning with Pytorch. The co

This is the official PyTorch implementation of the CVPR 2020 paper "TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting".

TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting Project Page | YouTube | Paper This is the official PyTorch implementation of the C

This repository contains the source code for the paper First Order Motion Model for Image Animation

!!! Check out our new paper and framework improved for articulated objects First Order Motion Model for Image Animation This repository contains the s

PyTorch implementation of our ICCV 2019 paper: Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer and Novel View Synthesis

Impersonator PyTorch implementation of our ICCV 2019 paper: Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer an

PyTorch implementation for our paper Learning Character-Agnostic Motion for Motion Retargeting in 2D, SIGGRAPH 2019

Learning Character-Agnostic Motion for Motion Retargeting in 2D We provide PyTorch implementation for our paper Learning Character-Agnostic Motion for

Dynamic View Synthesis from Dynamic Monocular Video

Dynamic View Synthesis from Dynamic Monocular Video Project Website | Video | Paper Dynamic View Synthesis from Dynamic Monocular Video Chen Gao, Ayus

Physical Anomalous Trajectory or Motion (PHANTOM) Dataset

Physical Anomalous Trajectory or Motion (PHANTOM) Dataset Description This dataset contains the six different classes as described in our paper[]. The

Dynamic View Synthesis from Dynamic Monocular Video

Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer This repository contains code to compute depth from a

A minimal, standalone viewer for 3D animations stored as stop-motion sequences of individual .obj mesh files.

ObjSequenceViewer V0.5 A minimal, standalone viewer for 3D animations stored as stop-motion sequences of individual .obj mesh files. Installation: pip

A C-like hardware description language (HDL) adding high level synthesis(HLS)-like automatic pipelining as a language construct/compiler feature.

██████╗ ██╗██████╗ ███████╗██╗ ██╗███╗ ██╗███████╗ ██████╗ ██╔══██╗██║██╔══██╗██╔════╝██║ ██║████╗ ██║██╔════╝██╔════╝ ██████╔╝██║██████╔╝█

Generative Art Synthesizer - a python program that generates python programs that generates generative art

GAS - Generative Art Synthesizer Generative Art Synthesizer - a python program that generates python programs that generates generative art. Examples

Computer Vision Script to recognize first person motion, developed as final project for the course "Machine Learning and Deep Learning"

Overview of The Code BaseColab/MLDL_FPAR.pdf: it contains the full explanation of our work Base Colab: it contains the base colab used to perform all

Code for ShadeGAN (NeurIPS2021) A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis

A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis Project Page | Paper A Shading-Guided Generative Implicit Model

The codebase for our paper "Generative Occupancy Fields for 3D Surface-Aware Image Synthesis" (NeurIPS 2021)

Generative Occupancy Fields for 3D Surface-Aware Image Synthesis (NeurIPS 2021) Project Page | Paper Xudong Xu, Xingang Pan, Dahua Lin and Bo Dai GOF

Official implementation of "A Shared Representation for Photorealistic Driving Simulators" in PyTorch.

A Shared Representation for Photorealistic Driving Simulators The official code for the paper: "A Shared Representation for Photorealistic Driving Sim

Official implementation of Self-supervised Image-to-text and Text-to-image Synthesis

Self-supervised Image-to-text and Text-to-image Synthesis This is the official implementation of Self-supervised Image-to-text and Text-to-image Synth

![[NeurIPS 2021] Shape from Blur: Recovering Textured 3D Shape and Motion of Fast Moving Objects](https://github.com/rozumden/ShapeFromBlur/raw/main/examples/imgs/aerobie.gif)

[NeurIPS 2021] Shape from Blur: Recovering Textured 3D Shape and Motion of Fast Moving Objects

[NeurIPS 2021] Shape from Blur: Recovering Textured 3D Shape and Motion of Fast Moving Objects YouTube | arXiv Prerequisites Kaolin is available here:

YourTTS: Towards Zero-Shot Multi-Speaker TTS and Zero-Shot Voice Conversion for everyone

YourTTS: Towards Zero-Shot Multi-Speaker TTS and Zero-Shot Voice Conversion for everyone In our recent paper we propose the YourTTS model. YourTTS bri

ImageBART: Bidirectional Context with Multinomial Diffusion for Autoregressive Image Synthesis

ImageBART NeurIPS 2021 Patrick Esser*, Robin Rombach*, Andreas Blattmann*, Björn Ommer * equal contribution arXiv | BibTeX | Poster Requirements A sui

Generative Adversarial Text to Image Synthesis

Text To Image Synthesis This is a tensorflow implementation of synthesizing images. The images are synthesized using the GAN-CLS Algorithm from the pa

Multi-Person Extreme Motion Prediction

Multi-Person Extreme Motion Prediction Implementation for paper Wen Guo, Xiaoyu Bie, Xavier Alameda-Pineda, Francesc Moreno-Noguer, Multi-Person Extre

SLAMP: Stochastic Latent Appearance and Motion Prediction

SLAMP: Stochastic Latent Appearance and Motion Prediction Official implementation of the paper SLAMP: Stochastic Latent Appearance and Motion Predicti

A simple library that implements CLIP guided loss in PyTorch.

pytorch_clip_guided_loss: Pytorch implementation of the CLIP guided loss for Text-To-Image, Image-To-Image, or Image-To-Text generation. A simple libr

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

Code to reproduce experiments in the paper "Task-Oriented Dialogue as Dataflow Synthesis" (TACL 2020).

Code to reproduce experiments in the paper "Task-Oriented Dialogue as Dataflow Synthesis" (TACL 2020).

Official Pytorch implementation for 2021 ICCV paper "Learning Motion Priors for 4D Human Body Capture in 3D Scenes" and trained models / data

Learning Motion Priors for 4D Human Body Capture in 3D Scenes (LEMO) Official Pytorch implementation for 2021 ICCV (oral) paper "Learning Motion Prior

Any-to-any voice conversion using synthetic specific-speaker speeches as intermedium features

MediumVC MediumVC is an utterance-level method towards any-to-any VC. Before that, we propose SingleVC to perform A2O tasks(Xi → Ŷi) , Xi means utter

SingleVC performs any-to-one VC, which is an important component of MediumVC project.

SingleVC performs any-to-one VC, which is an important component of MediumVC project. Here is the official implementation of the paper, MediumVC.

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Implementation of Google Brain's WaveGrad high-fidelity vocoder

WaveGrad Implementation (PyTorch) of Google Brain's high-fidelity WaveGrad vocoder (paper). First implementation on GitHub with high-quality generatio

DiffWave is a fast, high-quality neural vocoder and waveform synthesizer.

DiffWave DiffWave is a fast, high-quality neural vocoder and waveform synthesizer. It starts with Gaussian noise and converts it into speech via itera

Motion planning algorithms commonly used on autonomous vehicles. (path planning + path tracking)

Overview This repository implemented some common motion planners used on autonomous vehicles, including Hybrid A* Planner Frenet Optimal Trajectory Hi

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

A TensorFlow implementation of Neural Program Synthesis from Diverse Demonstration Videos

ViZDoom http://vizdoom.cs.put.edu.pl ViZDoom allows developing AI bots that play Doom using only the visual information (the screen buffer). It is pri

End-2-end speech synthesis with recurrent neural networks

Introduction New: Interactive demo using Google Colaboratory can be found here TTS-Cube is an end-2-end speech synthesis system that provides a full p

An 16kHz implementation of HiFi-GAN for soft-vc.

HiFi-GAN An 16kHz implementation of HiFi-GAN for soft-vc. Relevant links: Official HiFi-GAN repo HiFi-GAN paper Soft-VC repo Soft-VC paper Example Usa

Research code for Arxiv paper "Camera Motion Agnostic 3D Human Pose Estimation"

GMR(Camera Motion Agnostic 3D Human Pose Estimation) This repo provides the source code of our arXiv paper: Seong Hyun Kim, Sunwon Jeong, Sungbum Park

PLUR is a collection of source code datasets suitable for graph-based machine learning.

PLUR (Programming-Language Understanding and Repair) is a collection of source code datasets suitable for graph-based machine learning. We provide scripts for downloading, processing, and loading the datasets. This is done by offering a unified API and data structures for all datasets.

Exploring Versatile Prior for Human Motion via Motion Frequency Guidance (3DV2021)

Exploring Versatile Prior for Human Motion via Motion Frequency Guidance This is the codebase for video-based human motion reconstruction in human-mot

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion. NÜWA is a unified multimodal p

Making Structure-from-Motion (COLMAP) more robust to symmetries and duplicated structures

SfM disambiguation with COLMAP About Structure-from-Motion generally fails when the scene exhibits symmetries and duplicated structures. In this repos

DanceTrack: Multiple Object Tracking in Uniform Appearance and Diverse Motion

DanceTrack DanceTrack is a benchmark for tracking multiple objects in uniform appearance and diverse motion. DanceTrack provides box and identity anno

Code for "ATISS: Autoregressive Transformers for Indoor Scene Synthesis", NeurIPS 2021

ATISS: Autoregressive Transformers for Indoor Scene Synthesis This repository contains the code that accompanies our paper ATISS: Autoregressive Trans

Official implementation of VQ-Diffusion: Vector Quantized Diffusion Model for Text-to-Image Synthesis

Official implementation of VQ-Diffusion: Vector Quantized Diffusion Model for Text-to-Image Synthesis

![[NeurIPS 2021] Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data](https://github.com/EndlessSora/DeceiveD/raw/main/resources/teaser.jpg)

[NeurIPS 2021] Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data

Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data (NeurIPS 2021) This repository provides the official PyTorch implementation

Exploring Versatile Prior for Human Motion via Motion Frequency Guidance (3DV2021)

Exploring Versatile Prior for Human Motion via Motion Frequency Guidance [Video Demo] [Paper] Installation Requirements Python 3.6 PyTorch 1.1.0 Pleas

Hierarchical Motion Encoder-Decoder Network for Trajectory Forecasting (HMNet)

Hierarchical Motion Encoder-Decoder Network for Trajectory Forecasting (HMNet) Our paper: https://arxiv.org/abs/2111.13324 We will release the complet

Code release for Local Light Field Fusion at SIGGRAPH 2019

Local Light Field Fusion Project | Video | Paper Tensorflow implementation for novel view synthesis from sparse input images. Local Light Field Fusion

Latent Execution for Neural Program Synthesis

Latent Execution for Neural Program Synthesis This repo provides the code to replicate the experiments in the paper Xinyun Chen, Dawn Song, Yuandong T

Semantic Image Synthesis with SPADE

Semantic Image Synthesis with SPADE New implementation available at imaginaire repository We have a reimplementation of the SPADE method that is more

720p FPGA Media Player (RISC-V + Motion JPEG + SD + HDMI on an Artix 7)

FPGA Media Player This project is a FPGA based media player which is capable of playing Motion JPEG encoded video over HDMI or VGA on commonly availab

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: "NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion". NÜWA is a unified multimodal

A minimal solution to hand motion capture from a single color camera at over 100fps. Easy to use, plug to run.

Minimal Hand A minimal solution to hand motion capture from a single color camera at over 100fps. Easy to use, plug to run. This project provides the

Code release for Local Light Field Fusion at SIGGRAPH 2019

Local Light Field Fusion Project | Video | Paper Tensorflow implementation for novel view synthesis from sparse input images. Local Light Field Fusion

An implementation for the ICCV 2021 paper Deep Permutation Equivariant Structure from Motion.

Deep Permutation Equivariant Structure from Motion Paper | Poster This repository contains an implementation for the ICCV 2021 paper Deep Permutation