683 Repositories

Python transformers-regression Libraries

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

DANet for Tabular data classification/ regression.

Deep Abstract Networks A pyTorch implementation for AAAI-2022 paper DANets: Deep Abstract Networks for Tabular Data Classification and Regression. Bri

Text Classification in Turkish Texts with Bert

You can watch the details of the project on my youtube channel Project Interface Project Second Interface Goal= Correctly guessing the classification

Powerful unsupervised domain adaptation method for dense retrieval.

Powerful unsupervised domain adaptation method for dense retrieval

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

keytotext Idea is to build a model which will take keywords as inputs and generate sentences as outputs. Potential use case can include: Marketing Sea

Learning with Subset Stacking

Learning with Subset Stacking (LESS) LESS is a new supervised learning algorithm that is based on training many local estimators on subsets of a given

Mercer Gaussian Process (MGP) and Fourier Gaussian Process (FGP) Regression

Mercer Gaussian Process (MGP) and Fourier Gaussian Process (FGP) Regression We provide the code used in our paper "How Good are Low-Rank Approximation

codes for Self-paced Deep Regression Forests with Consideration on Ranking Fairness

Self-paced Deep Regression Forests with Consideration on Ranking Fairness This is official codes for paper Self-paced Deep Regression Forests with Con

A repository with exploration into using transformers to predict DNA ↔ transcription factor binding

Transcription Factor binding predictions with Attention and Transformers A repository with exploration into using transformers to predict DNA ↔ transc

Ml based project which uses regression technique to predict the price.

Price-Predictor Ml based project which uses regression technique to predict the price. I have used various regression models and finds the model with

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets What is LASSL • How to Use What is LASSL LASSL은 LAnguage Semi-Super

Pangu-Alpha for Transformers

Pangu-Alpha for Transformers Usage Download MindSpore FP32 weights for GPU from here to data/Pangu-alpha_2.6B.ckpt Activate MindSpore environment and

a wrapper around pytest for executing tests to look for test flakiness and runtime regression

bubblewrap a wrapper around pytest for assessing flakiness and runtime regressions a cs implementations practice project How to Run: First, install de

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

2D Human Pose estimation using transformers. Implementation in Pytorch

PE-former: Pose Estimation Transformer Vision transformer architectures perform very well for image classification tasks. Efforts to solve more challe

Experiments and examples converting Transformers to ONNX

Experiments and examples converting Transformers to ONNX This repository containes experiments and examples on converting different Transformers to ON

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

Hashformers is a framework for hashtag segmentation with transformers.

Hashtag segmentation is the task of automatically inserting the missing spaces between the words in a hashtag. Hashformers applies Transformer models

DANet for Tabular data classification/ regression.

Deep Abstract Networks A PyTorch code implemented for the submission DANets: Deep Abstract Networks for Tabular Data Classification and Regression. Do

The best solution of the Weather Prediction track in the Yandex Shifts challenge

yandex-shifts-weather The repository contains information about my solution for the Weather Prediction track in the Yandex Shifts challenge https://re

Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal"

Patch-wise Adversarial Removal Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal

Post-Training Quantization for Vision transformers.

PTQ4ViT Post-Training Quantization Framework for Vision Transformers. We use the twin uniform quantization method to reduce the quantization error on

![[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining](https://github.com/microsoft/COCO-LM/raw/main/coco-lm.png)

[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining

COCO-LM This repository contains the scripts for fine-tuning COCO-LM pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: COCO-LM: Correcting an

This is a re-implementation of TransGAN: Two Pure Transformers Can Make One Strong GAN (CVPR 2021) in PyTorch.

TransGAN: Two Transformers Can Make One Strong GAN [YouTube Video] Paper Authors: Yifan Jiang, Shiyu Chang, Zhangyang Wang CVPR 2021 This is re-implem

Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch

N-Grammer - Pytorch Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch Install $ pip install n-grammer-pytorch Usage

Train and use generative text models in a few lines of code.

blather Train and use generative text models in a few lines of code. To see blather in action check out the colab notebook! Installation Use the packa

PyTorch implementation of Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets

Simple PyTorch Implementation of "Grokking" Implementation of Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets Usage Running

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Air Pollution Prediction System using Linear Regression and ANN

AirPollution Pollution Weather Prediction System: Smart Outdoor Pollution Monitoring and Prediction for Healthy Breathing and Living Publication Link:

Mastering Transformers, published by Packt

Mastering Transformers This is the code repository for Mastering Transformers, published by Packt. Build state-of-the-art models from scratch with adv

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Panoptic SegFormer: Delving Deeper into Panoptic Segmentation with Transformers

Panoptic SegFormer: Delving Deeper into Panoptic Segmentation with Transformers Results results on COCO val Backbone Method Lr Schd PQ Config Download

ROMP: Monocular, One-stage, Regression of Multiple 3D People, ICCV21

Monocular, One-stage, Regression of Multiple 3D People ROMP, accepted by ICCV 2021, is a concise one-stage network for multi-person 3D mesh recovery f

Simple API for UCI Machine Learning Dataset Repository (search, download, analyze)

A simple API for working with University of California, Irvine (UCI) Machine Learning (ML) repository Table of Contents Introduction About Page of the

Revisiting Pre-trained Models for Chinese Natural Language Processing (Findings of EMNLP 2020)

This repository contains the resources in our paper "Revisiting Pre-trained Models for Chinese Natural Language Processing", which will be published i

Code for ACL 2019 Paper: "COMET: Commonsense Transformers for Automatic Knowledge Graph Construction"

To run a generation experiment (either conceptnet or atomic), follow these instructions: First Steps First clone, the repo: git clone https://github.c

Code for the ACL 2021 paper "Structural Guidance for Transformer Language Models"

Structural Guidance for Transformer Language Models This repository accompanies the paper, Structural Guidance for Transformer Language Models, publis

PyTorch code for EMNLP 2019 paper "LXMERT: Learning Cross-Modality Encoder Representations from Transformers".

LXMERT: Learning Cross-Modality Encoder Representations from Transformers Our servers break again :(. I have updated the links so that they should wor

Research code for ECCV 2020 paper "UNITER: UNiversal Image-TExt Representation Learning"

UNITER: UNiversal Image-TExt Representation Learning This is the official repository of UNITER (ECCV 2020). This repository currently supports finetun

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Code for Editing Factual Knowledge in Language Models

KnowledgeEditor Code for Editing Factual Knowledge in Language Models (https://arxiv.org/abs/2104.08164). @inproceedings{decao2021editing, title={Ed

OOD Generalization and Detection (ACL 2020)

Pretrained Transformers Improve Out-of-Distribution Robustness How does pretraining affect out-of-distribution robustness? We create an OOD benchmark

EMNLP 2021 paper The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers.

Codebase for training transformers on systematic generalization datasets. The official repository for our EMNLP 2021 paper The Devil is in the Detail:

Understanding the Difficulty of Training Transformers

Admin Understanding the Difficulty of Training Transformers Guided by our analyses, we propose Adaptive Model Initialization (Admin), which successful

Optimizing Deeper Transformers on Small Datasets

DT-Fixup Optimizing Deeper Transformers on Small Datasets Paper published in ACL 2021: arXiv Detailed instructions to replicate our results in the pap

Official Pytorch Implementation of Length-Adaptive Transformer (ACL 2021)

Length-Adaptive Transformer This is the official Pytorch implementation of Length-Adaptive Transformer. For detailed information about the method, ple

Research code for "What to Pre-Train on? Efficient Intermediate Task Selection", EMNLP 2021

efficient-task-transfer This repository contains code for the experiments in our paper "What to Pre-Train on? Efficient Intermediate Task Selection".

PyTorch implementation of the ACL, 2021 paper Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks.

Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks This repo contains the PyTorch implementation of the ACL, 2021 pa

Implementation of Advantage-Weighted Regression: Simple and Scalable Off-Policy Reinforcement Learning

advantage-weighted-regression Implementation of Advantage-Weighted Regression: Simple and Scalable Off-Policy Reinforcement Learning, by Peng et al. (

This repository contains the implementation of the paper: Federated Distillation of Natural Language Understanding with Confident Sinkhorns

Federated Distillation of Natural Language Understanding with Confident Sinkhorns This repository provides an alternative method for ensembled distill

Code for "ATISS: Autoregressive Transformers for Indoor Scene Synthesis", NeurIPS 2021

ATISS: Autoregressive Transformers for Indoor Scene Synthesis This repository contains the code that accompanies our paper ATISS: Autoregressive Trans

End-to-End Referring Video Object Segmentation with Multimodal Transformers

End-to-End Referring Video Object Segmentation with Multimodal Transformers This repo contains the official implementation of the paper: End-to-End Re

Pre-Training 3D Point Cloud Transformers with Masked Point Modeling

Point-BERT: Pre-Training 3D Point Cloud Transformers with Masked Point Modeling Created by Xumin Yu*, Lulu Tang*, Yongming Rao*, Tiejun Huang, Jie Zho

Predicting diabetes over a five year period using logistic regression and the Pima First-Nation dataset

Diabetes This script uses the Pima First Nations dataset to create a model to predict whether or not an individual will develop Diabetes Mellitus Type

Code for our ICCV 2021 Paper "OadTR: Online Action Detection with Transformers".

Code for our ICCV 2021 Paper "OadTR: Online Action Detection with Transformers".

Official Pytorch Code for the paper TransWeather

TransWeather Official Code for the paper TransWeather, Arxiv Tech Report 2021 Paper | Website About this repo: This repo hosts the implentation code,

A logistic regression model for health insurance purchasing prediction

Logistic_Regression_Model A logistic regression model for health insurance purchasing prediction This code is using these packages, so please make sur

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

Conversational text Analysis using various NLP techniques

PyConverse Let me try first Installation pip install pyconverse Usage Please try this notebook that demos the core functionalities: basic usage noteb

A linear regression model for house price prediction

Linear_Regression_Model A linear regression model for house price prediction. This code is using these packages, so please make sure your have install

Package to compute Mauve, a similarity score between neural text and human text. Install with `pip install mauve-text`.

MAUVE MAUVE is a library built on PyTorch and HuggingFace Transformers to measure the gap between neural text and human text with the eponymous MAUVE

Make differentially private training of transformers easy for everyone

private-transformers This codebase facilitates fast experimentation of differentially private training of Hugging Face transformers. What is this? Why

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

Spectralformer: Rethinking hyperspectral image classification with transformers

Spectralformer: Rethinking hyperspectral image classification with transformers Danfeng Hong, Zhu Han, Jing Yao, Lianru Gao, Bing Zhang, Antonio Plaza

Automated question generation and question answering from Turkish texts using text-to-text transformers

Turkish Question Generation Offical source code for "Automated question generation & question answering from Turkish texts using text-to-text transfor

Tandem Mass Spectrum Prediction with Graph Transformers

MassFormer This is the original implementation of MassFormer, a graph transformer for small molecule MS/MS prediction. Check out the preprint on arxiv

Unleashing Transformers: Parallel Token Prediction with Discrete Absorbing Diffusion for Fast High-Resolution Image Generation from Vector-Quantized Codes

Unleashing Transformers: Parallel Token Prediction with Discrete Absorbing Diffusion for Fast High-Resolution Image Generation from Vector-Quantized C

This thesis is mainly concerned with state-space methods for a class of deep Gaussian process (DGP) regression problems

Doctoral dissertation of Zheng Zhao This thesis is mainly concerned with state-space methods for a class of deep Gaussian process (DGP) regression pro

CLIPImageClassifier wraps clip image model from transformers

CLIPImageClassifier CLIPImageClassifier wraps clip image model from transformers. CLIPImageClassifier is initialized with the argument classes, these

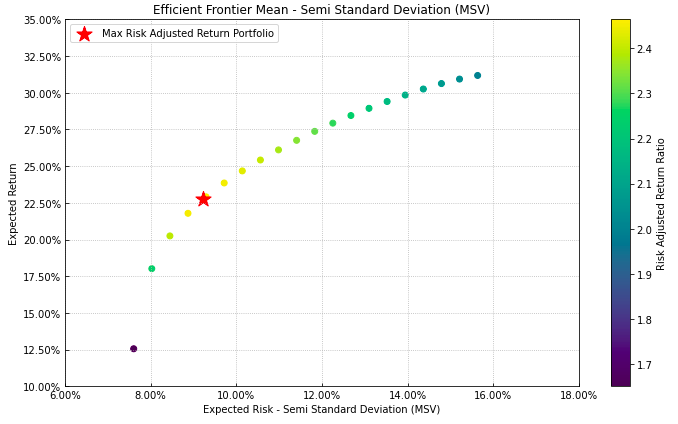

Portfolio Optimization and Quantitative Strategic Asset Allocation in Python

Riskfolio-Lib Quantitative Strategic Asset Allocation, Easy for Everyone. Description Riskfolio-Lib is a library for making quantitative strategic ass

An easy to use Natural Language Processing library and framework for predicting, training, fine-tuning, and serving up state-of-the-art NLP models.

Welcome to AdaptNLP A high level framework and library for running, training, and deploying state-of-the-art Natural Language Processing (NLP) models

Use fastai-v2 with HuggingFace's pretrained transformers

FastHugs Use fastai v2 with HuggingFace's pretrained transformers, see the notebooks below depending on your task: Text classification: fasthugs_seq_c

A library that integrates huggingface transformers with the world of fastai, giving fastai devs everything they need to train, evaluate, and deploy transformer specific models.

blurr A library that integrates huggingface transformers with version 2 of the fastai framework Install You can now pip install blurr via pip install

Deep Reinforced Attention Regression for Partial Sketch Based Image Retrieval.

DARP-SBIR Intro This repository contains the source code implementation for ICDM submission paper Deep Reinforced Attention Regression for Partial Ske

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for Trans

Auto-Encoding Score Distribution Regression for Action Quality Assessment

DAE-AQA It is an open source program reference to paper Auto-Encoding Score Distribution Regression for Action Quality Assessment. 1.Introduction DAE

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models.

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models. Feature-engine's transformers follow scikit-learn's functionality with fit() and transform() methods to first learn the transforming parameters from data and then transform the data.

PyCaret is an open-source, low-code machine learning library in Python that automates machine learning workflows.

An open-source, low-code machine learning library in Python 🚀 Version 2.3.5 out now! Check out the release notes here. Official • Docs • Install • Tu

Bayesian Additive Regression Trees For Python

BartPy Introduction BartPy is a pure python implementation of the Bayesian additive regressions trees model of Chipman et al [1]. Reasons to use BART

Multi-modal Vision Transformers Excel at Class-agnostic Object Detection

Multi-modal Vision Transformers Excel at Class-agnostic Object Detection

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

Mesa: A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models.

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models. Solve a variety of tasks with pre-trained models or finetune them in

This repository contains the code used for the implementation of the paper "Probabilistic Regression with HuberDistributions"

Public_prob_regression_with_huber_distributions This repository contains the code used for the implementation of the paper "Probabilistic Regression w

![[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers](https://user-images.githubusercontent.com/55849968/135632419-e5a10fb9-ec2c-465c-8b9b-4d0c6f69b5b4.png)

[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers

Grounded Situation Recognition with Transformers Paper | Model Checkpoint This is the official PyTorch implementation of Grounded Situation Recognitio

A pairs trade is a market neutral trading strategy enabling traders to profit from virtually any market conditions.

A pairs trade is a market neutral trading strategy enabling traders to profit from virtually any market conditions. This strategy is categorized as a statistical arbitrage and convergence trading strategy.

Python module for performing linear regression for data with measurement errors and intrinsic scatter

Linear regression for data with measurement errors and intrinsic scatter (BCES) Python module for performing robust linear regression on (X,Y) data po

A machine learning project that predicts the price of used cars in the UK

Car Price Prediction Image Credit: AA Cars Project Overview Scraped 3000 used cars data from AA Cars website using Python and BeautifulSoup. Cleaned t

Introducing neural networks to predict stock prices

IntroNeuralNetworks in Python: A Template Project IntroNeuralNetworks is a project that introduces neural networks and illustrates an example of how o

This project uses unsupervised machine learning to identify correlations between daily inoculation rates in the USA and twitter sentiment in regards to COVID-19.

Twitter COVID-19 Sentiment Analysis Members: Christopher Bach | Khalid Hamid Fallous | Jay Hirpara | Jing Tang | Graham Thomas | David Wetherhold Pro

This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transformers.

TransMix: Attend to Mix for Vision Transformers This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transf

Official Matlab Implementation for "Tiny Obstacle Discovery by Occlusion-aware Multilayer Regression", TIP 2020

Tiny Obstacle Discovery by Occlusion-aware Multilayer Regression Official Matlab Implementation for "Tiny Obstacle Discovery by Occlusion-aware Multil

Code and experiments for "Deep Neural Networks for Rank Consistent Ordinal Regression based on Conditional Probabilities"

corn-ordinal-neuralnet This repository contains the orginal model code and experiment logs for the paper "Deep Neural Networks for Rank Consistent Ord

This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transformers.

TransMix: Attend to Mix for Vision Transformers This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transf

![[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers](https://user-images.githubusercontent.com/55849968/135632419-e5a10fb9-ec2c-465c-8b9b-4d0c6f69b5b4.png)

[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers

Grounded Situation Recognition with Transformers Paper | Model Checkpoint This is the official PyTorch implementation of Grounded Situation Recognitio

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 A repository part of the MarIA project. Corpora 📃 Corpora Number of documents Number of tokens Size (GB) BNE 201,080,084