683 Repositories

Python transformers-regression Libraries

The Dual Memory is build from a simple CNN for the deep memory and Linear Regression fro the fast Memory

Simple-DMA a simple Dual Memory Architecture for classifications. based on the paper Dual-Memory Deep Learning Architectures for Lifelong Learning of

Hitters Linear Regression - Hitters Linear Regression With Python

Hitters_Linear_Regression Kullanacağımız veri seti Carnegie Mellon Üniversitesi'

Sinkformers: Transformers with Doubly Stochastic Attention

Code for the paper : "Sinkformers: Transformers with Doubly Stochastic Attention" Paper You will find our paper here. Compat This package has been dev

RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2

RoNER RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2. It is meant to be an easy to use, hi

A curated list and survey of awesome Vision Transformers.

English | 简体中文 A curated list and survey of awesome Vision Transformers. You can use mind mapping software to open the mind mapping source file. You c

BERTopic is a topic modeling technique that leverages 🤗 transformers and c-TF-IDF to create dense clusters allowing for easily interpretable topics whilst keeping important words in the topic descriptions

BERTopic BERTopic is a topic modeling technique that leverages 🤗 transformers and c-TF-IDF to create dense clusters allowing for easily interpretable

Awesome Transformers in Medical Imaging

This repo supplements our Survey on Transformers in Medical Imaging Fahad Shamshad, Salman Khan, Syed Waqas Zamir, Muhammad Haris Khan, Munawar Hayat,

Pytorch Implementation of Residual Vision Transformers(ResViT)

ResViT Official Pytorch Implementation of Residual Vision Transformers(ResViT) which is described in the following paper: Onat Dalmaz and Mahmut Yurt

This code is for our paper "VTGAN: Semi-supervised Retinal Image Synthesis and Disease Prediction using Vision Transformers"

ICCV Workshop 2021 VTGAN This code is for our paper "VTGAN: Semi-supervised Retinal Image Synthesis and Disease Prediction using Vision Transformers"

Unsupervised MRI Reconstruction via Zero-Shot Learned Adversarial Transformers

Official TensorFlow implementation of the unsupervised reconstruction model using zero-Shot Learned Adversarial TransformERs (SLATER). (https://arxiv.

Accelerated Multi-Modal MR Imaging with Transformers

Accelerated Multi-Modal MR Imaging with Transformers Dependencies numpy==1.18.5 scikit_image==0.16.2 torchvision==0.8.1 torch==1.7.0 runstats==1.8.0 p

This repo holds the code of TransFuse: Fusing Transformers and CNNs for Medical Image Segmentation

TransFuse This repo holds the code of TransFuse: Fusing Transformers and CNNs for Medical Image Segmentation Requirements Pytorch=1.6.0, 1.9.0 (=1.

ChatBot-Pytorch - A GPT-2 ChatBot implemented using Pytorch and Huggingface-transformers

ChatBot-Pytorch A GPT-2 ChatBot implemented using Pytorch and Huggingface-transf

Use AutoModelForSeq2SeqLM in Huggingface Transformers to train COMET

Training COMET using seq2seq setting Use AutoModelForSeq2SeqLM in Huggingface Transformers to train COMET. The codes are modified from run_summarizati

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents [Project Page] [Paper] [Video] Wenlong Huang1, Pieter Abbee

Transformer in Vision

Transformer-in-Vision Recent Transformer-based CV and related works. Welcome to comment/contribute! Keep updated. Resource SCENIC: A JAX Library for C

Dynamic Token Normalization Improves Vision Transformers

Dynamic Token Normalization Improves Vision Transformers This is the PyTorch implementation of the paper Dynamic Token Normalization Improves Vision T

Look Closer: Bridging Egocentric and Third-Person Views with Transformers for Robotic Manipulation

Look Closer: Bridging Egocentric and Third-Person Views with Transformers for Robotic Manipulation Official PyTorch implementation for the paper Look

TransMVSNet: Global Context-aware Multi-view Stereo Network with Transformers.

TransMVSNet This repository contains the official implementation of the paper: "TransMVSNet: Global Context-aware Multi-view Stereo Network with Trans

3D-RETR: End-to-End Single and Multi-View3D Reconstruction with Transformers

3D-RETR: End-to-End Single and Multi-View 3D Reconstruction with Transformers (BMVC 2021) Zai Shi*, Zhao Meng*, Yiran Xing, Yunpu Ma, Roger Wattenhofe

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers This is the repo used for human motion prediction with non-autoregress

In this project, we compared Spanish BERT and Multilingual BERT in the Sentiment Analysis task.

Applying BERT Fine Tuning to Sentiment Classification on Amazon Reviews Abstract Sentiment analysis has made great progress in recent years, due to th

This repository contains demos I made with the Transformers library by HuggingFace.

Transformers-Tutorials Hi there! This repository contains demos I made with the Transformers library by 🤗 HuggingFace. Currently, all of them are imp

Used Logistic Regression, Random Forest, and XGBoost to predict the outcome of Search & Destroy games from the Call of Duty World League for the 2018 and 2019 seasons.

Call of Duty World League: Search & Destroy Outcome Predictions Growing up as an avid Call of Duty player, I was always curious about what factors led

Implementation of ML models like Decision tree, Naive Bayes, Logistic Regression and many other

ML_Model_implementaion Implementation of ML models like Decision tree, Naive Bayes, Logistic Regression and many other dectree_model: Implementation o

No-Reference Image Quality Assessment via Transformers, Relative Ranking, and Self-Consistency

This repository contains the implementation for the paper: No-Reference Image Quality Assessment via Transformers, Relative Ranking, and Self-Consiste

HAT: Hierarchical Aggregation Transformers for Person Re-identification

HAT: Hierarchical Aggregation Transformers for Person Re-identification

"Exploring Vision Transformers for Fine-grained Classification" at CVPRW FGVC8

FGVC8 Exploring Vision Transformers for Fine-grained Classification paper presented at the CVPR 2021, The Eight Workshop on Fine-Grained Visual Catego

Official implementation of "Refiner: Refining Self-attention for Vision Transformers".

RefinerViT This repo is the official implementation of "Refiner: Refining Self-attention for Vision Transformers". The repo is build on top of timm an

RETRO-pytorch - Implementation of RETRO, Deepmind's Retrieval based Attention net, in Pytorch

RETRO - Pytorch (wip) Implementation of RETRO, Deepmind's Retrieval based Attent

A Unified Framework and Analysis for Structured Knowledge Grounding

UnifiedSKG 📚 : Unifying and Multi-Tasking Structured Knowledge Grounding with Text-to-Text Language Models Code for paper UnifiedSKG: Unifying and Mu

![[CVPR 2021]](https://github.com/decisionforce/mmTransformer/raw/main/figs/model.png)

[CVPR 2021] "Multimodal Motion Prediction with Stacked Transformers": official code implementation and project page.

mmTransformer Introduction This repo is official implementation for mmTransformer in pytorch. Currently, the core code of mmTransformer is implemented

Official PyTorch Implementation of "AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecasting".

AgentFormer This repo contains the official implementation of our paper: AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecast

"Investigating the Limitations of Transformers with Simple Arithmetic Tasks", 2021

transformers-arithmetic This repository contains the code to reproduce the experiments from the paper: Nogueira, Jiang, Lin "Investigating the Limitat

Pytorch implementation of Compressive Transformers, from Deepmind

Compressive Transformer in Pytorch Pytorch implementation of Compressive Transformers, a variant of Transformer-XL with compressed memory for long-ran

The accompanying code for the paper "GMAT: Global Memory Augmentation for Transformers" (Ankit Gupta and Jonathan Berant).

GMAT: Global Memory Augmentation for Transformers This repository contains the accompanying code for the paper: "GMAT: Global Memory Augmentation for

Implementation of Memformer, a Memory-augmented Transformer, in Pytorch

Memformer - Pytorch Implementation of Memformer, a Memory-augmented Transformer, in Pytorch. It includes memory slots, which are updated with attentio

Official code repository of the paper Linear Transformers Are Secretly Fast Weight Programmers.

Linear Transformers Are Secretly Fast Weight Programmers This repository contains the code accompanying the paper Linear Transformers Are Secretly Fas

Node-level Graph Regression with Deep Gaussian Process Models

Node-level Graph Regression with Deep Gaussian Process Models Prerequests our implementation is mainly based on tensorflow 1.x and gpflow 1.x: python

Unofficial JAX implementations of Deep Learning models

JAX Models Table of Contents About The Project Getting Started Prerequisites Installation Usage Contributing License Contact About The Project The JAX

Source code for the BMVC-2021 paper "SimReg: Regression as a Simple Yet Effective Tool for Self-supervised Knowledge Distillation".

SimReg: A Simple Regression Based Framework for Self-supervised Knowledge Distillation Source code for the paper "SimReg: Regression as a Simple Yet E

Sentiment-Analysis and EDA on the IMDB Movie Review Dataset

Sentiment-Analysis and EDA on the IMDB Movie Review Dataset The main part of the work focuses on the exploration and study of different approaches whi

To Design and Implement Logistic Regression to Classify Between Benign and Malignant Cancer Types

To Design and Implement Logistic Regression to Classify Between Benign and Malignant Cancer Types, from a Database Taken From Dr. Wolberg reports his Clinic Cases.

Python Machine Learning Jupyter Notebooks (ML website)

Python Machine Learning Jupyter Notebooks (ML website) Dr. Tirthajyoti Sarkar, Fremont, California (Please feel free to connect on LinkedIn here) Also

A Transformer Implementation that is easy to understand and customizable.

Simple Transformer I've written a series of articles on the transformer architecture and language models on Medium. This repository contains an implem

Specification language for generating Generalized Linear Models (with or without mixed effects) from conceptual models

tisane Tisane: Authoring Statistical Models via Formal Reasoning from Conceptual and Data Relationships TL;DR: Analysts can use Tisane to author gener

This repo contains the implementation of YOLOv2 in Keras with Tensorflow backend.

Easy training on custom dataset. Various backends (MobileNet and SqueezeNet) supported. A YOLO demo to detect raccoon run entirely in brower is accessible at https://git.io/vF7vI (not on Windows).

🙄 Difficult algorithm, Simple code.

🎉TensorFlow2.0-Examples🎉! "Talk is cheap, show me the code." ----- Linus Torvalds Created by YunYang1994 This tutorial was designed for easily divin

Paddle pit - Rethinking Spatial Dimensions of Vision Transformers

基于Paddle实现PiT ——Rethinking Spatial Dimensions of Vision Transformers,arxiv 官方原版代

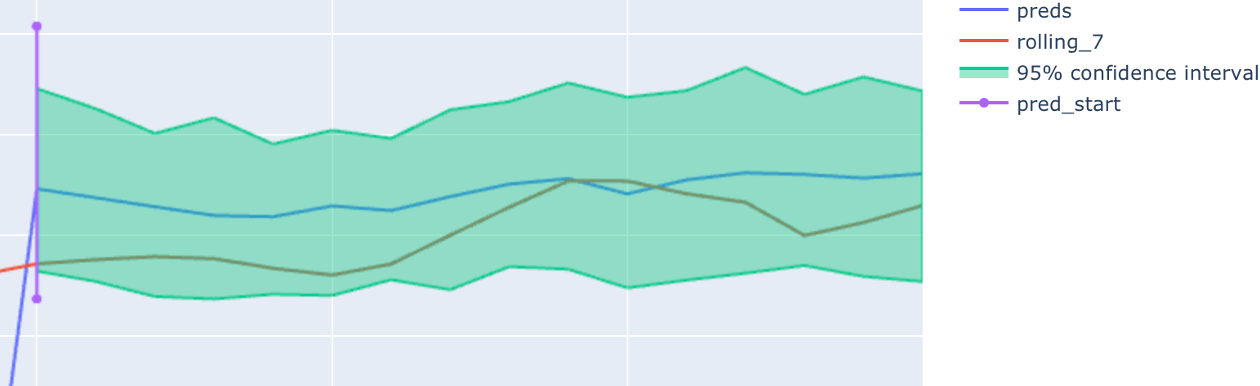

Deep learning PyTorch library for time series forecasting, classification, and anomaly detection

Deep learning for time series forecasting Flow forecast is an open-source deep learning for time series forecasting framework. It provides all the lat

Survival analysis in Python

What is survival analysis and why should I learn it? Survival analysis was originally developed and applied heavily by the actuarial and medical commu

Transformers based fully on MLPs

Awesome MLP-based Transformers papers An up-to-date list of Transformers based fully on MLPs without attention! Why this repo? After transformers and

A curated list of the top 10 computer vision papers in 2021 with video demos, articles, code and paper reference.

The Top 10 Computer Vision Papers of 2021 The top 10 computer vision papers in 2021 with video demos, articles, code, and paper reference. While the w

General Assembly's 2015 Data Science course in Washington, DC

DAT8 Course Repository Course materials for General Assembly's Data Science course in Washington, DC (8/18/15 - 10/29/15). Instructor: Kevin Markham (

Pytorch implementations of Bayes By Backprop, MC Dropout, SGLD, the Local Reparametrization Trick, KF-Laplace, SG-HMC and more

Bayesian Neural Networks Pytorch implementations for the following approximate inference methods: Bayes by Backprop Bayes by Backprop + Local Reparame

Repository for Project Insight: NLP as a Service

Project Insight NLP as a Service Contents Introduction Features Installation Setup and Documentation Project Details Demonstration Directory Details H

A Streamlit web app that generates Rick and Morty stories using GPT2.

Rick and Morty Story Generator This project uses a pre-trained GPT2 model, which was fine-tuned on Rick and Morty transcripts, to generate new stories

Rhyme with AI

Local development Create a conda virtual environment and activate it: conda env create --file environment.yml conda activate rhyme-with-ai Install the

Masked regression code - Masked Regression

Masked Regression MR - Python Implementation This repositery provides a python implementation of MR (Masked Regression). MR can efficiently synthesize

Iowa Project - My second project done at General Assembly, focused on feature engineering and understanding Linear Regression as a concept

Project 2 - Ames Housing Data and Kaggle Challenge PROBLEM STATEMENT Inferring or Predicting? What's more valuable for a housing model? When creating

KoBERT - Korean BERT pre-trained cased (KoBERT)

KoBERT KoBERT Korean BERT pre-trained cased (KoBERT) Why'?' Training Environment Requirements How to install How to use Using with PyTorch Using with

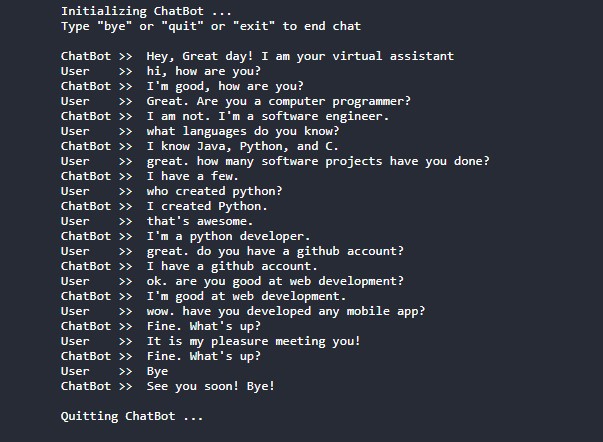

Conversational-AI-ChatBot - Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users!

Conversational AI ChatBot Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users! In this project? Thi

LinearRegression2 Tvads and CarSales

LinearRegression2_Tvads_and_CarSales This project infers the insight that how the TV ads for cars and car Sales are being linked with each other. It i

Price-Prediction-For-a-Dream-Home - A machine learning based linear regression trained model for house price prediction.

Price-Prediction-For-a-Dream-Home ROADMAP TO THIS LINEAR REGRESSION BASED HOUSE PRICE PREDICTION PREDICTION MODEL Import all the dependencies of the p

Image-popularity-score - A novel deep regression method for image scoring.

Image-popularity-score - A novel deep regression method for image scoring.

Constrained Logistic Regression - How to apply specific constraints to logistic regression's coefficients

Constrained Logistic Regression Sample implementation of constructing a logistic regression with given ranges on each of the feature's coefficients (v

Flask app to predict daily radiation from the time series of Solcast from Islamabad, Pakistan

Solar-radiation-ISB-MLOps - Flask app to predict daily radiation from the time series of Solcast from Islamabad, Pakistan.

A minimal implementation of Gaussian process regression in PyTorch

pytorch-minimal-gaussian-process In search of truth, simplicity is needed. There exist heavy-weighted libraries, but as you know, we need to go bare b

Code that accompanies the paper Semi-supervised Deep Kernel Learning: Regression with Unlabeled Data by Minimizing Predictive Variance

Semi-supervised Deep Kernel Learning This is the code that accompanies the paper Semi-supervised Deep Kernel Learning: Regression with Unlabeled Data

Official implementation of CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21

CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21 For more information, check out the paper on [arXiv]. Training with different

A Python Package for Convex Regression and Frontier Estimation

pyStoNED pyStoNED is a Python package that provides functions for estimating multivariate convex regression, convex quantile regression, convex expect

Python script that analyses the given datasets and comes up with the best polynomial regression representation with the smallest polynomial degree possible

Python script that analyses the given datasets and comes up with the best polynomial regression representation with the smallest polynomial degree possible, to be the most reliable with the least complexity possible

Source code of AAAI 2022 paper "Towards End-to-End Image Compression and Analysis with Transformers".

Towards End-to-End Image Compression and Analysis with Transformers Source code of our AAAI 2022 paper "Towards End-to-End Image Compression and Analy

Pre-training of Graph Augmented Transformers for Medication Recommendation

G-Bert Pre-training of Graph Augmented Transformers for Medication Recommendation Intro G-Bert combined the power of Graph Neural Networks and BERT (B

Implementation of the paper "Fine-Tuning Transformers: Vocabulary Transfer"

Transformer-vocabulary-transfer Implementation of the paper "Fine-Tuning Transfo

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

Using Bayesian, KNN, Logistic Regression to classify spam and non-spam.

Make Sure the dataset file "spamData.mat" is in the folder spam\src Environment: Python --version = 3.7 Third Party: numpy, matplotlib, math, scipy

Utilize Korean BERT model in sentence-transformers library

ko-sentence-transformers 이 프로젝트는 KoBERT 모델을 sentence-transformers 에서 보다 쉽게 사용하기 위해 만들어졌습니다. Ko-Sentence-BERT-SKTBERT 프로젝트에서는 KoBERT 모델을 sentence-trans

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

Data Model built using Logistic Regression Algorithm on Python.

Logistic-Regression Problem Statement: Your client is a retail banking institution. Term deposits are a major source of income for a bank. A term depo

jiant is an NLP toolkit

🚨 Update 🚨 : As of 2021/10/17, the jiant project is no longer being actively maintained. This means there will be no plans to add new models, tasks,

Implemented four supervised learning Machine Learning algorithms

Implemented four supervised learning Machine Learning algorithms from an algorithmic family called Classification and Regression Trees (CARTs), details see README_Report.

SeMask: Semantically Masked Transformers for Semantic Segmentation.

SeMask: Semantically Masked Transformers Jitesh Jain, Anukriti Singh, Nikita Orlov, Zilong Huang, Jiachen Li, Steven Walton, Humphrey Shi This repo co

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

Decision Tree Regression algorithm implemented on Python from scratch.

Decision_Tree_Regression I implemented the decision tree regression algorithm on Python. Unlike regular linear regression, this algorithm is used when

Touca SDK for Python

Touca SDK For Python Touca helps you understand the true impact of your day to day code changes on the behavior and performance of your overall softwa

⛵️The official PyTorch implementation for "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing" (EMNLP 2020).

BERT-of-Theseus Code for paper "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing". BERT-of-Theseus is a new compressed BERT by progre

A quick recipe to learn all about Transformers

Transformers have accelerated the development of new techniques and models for natural language processing (NLP) tasks.

Yuno is context based search engine for anime.

Yuno yuno.mp4 Table of Contents Introduction Power Of Yuno Try Yuno How Yuno was created? References Introduction Yuno is a context based search engin

Code for the ICCV'21 paper "Context-aware Scene Graph Generation with Seq2Seq Transformers"

ICCV'21 Context-aware Scene Graph Generation with Seq2Seq Transformers Authors: Yichao Lu*, Himanshu Rai*, Cheng Chang*, Boris Knyazev†, Guangwei Yu,

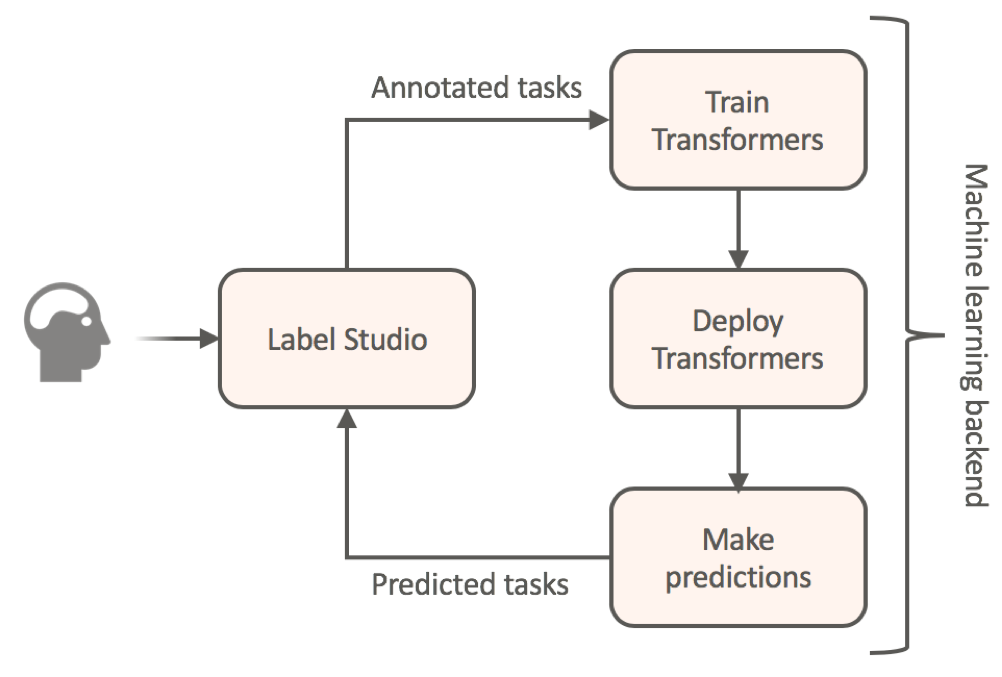

Label data using HuggingFace's transformers and automatically get a prediction service

Label Studio for Hugging Face's Transformers Website • Docs • Twitter • Join Slack Community Transfer learning for NLP models by annotating your textu

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

GANformer: Generative Adversarial Transformers

GANformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick Update: We released the new GANformer2 paper! *I wish to thank Ch

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Examples of using sparse attention, as in "Generating Long Sequences with Sparse Transformers"

Status: Archive (code is provided as-is, no updates expected) Update August 2020: For an example repository that achieves state-of-the-art modeling pe