2158 Repositories

Python vision-transformer-search-space Libraries

Tool for generating Memory.scan() compatible instruction search patterns

scanpat Tool for generating Frida Memory.scan() compatible instruction search patterns. Powered by r2. Examples $ ./scanpat.py arm.ks:64 'sub sp, sp,

Transformer model implemented with Pytorch

transformer-pytorch Transformer model implemented with Pytorch Attention is all you need-[Paper] Architecture Self-Attention self_attention.py class

Fog Simulation on Real LiDAR Point Clouds for 3D Object Detection in Adverse Weather

LiDAR fog simulation Created by Martin Hahner at the Computer Vision Lab of ETH Zurich. This is the official code release of the paper Fog Simulation

QTool: A Low-bit Quantization Toolbox for Deep Neural Networks in Computer Vision

This project provides abundant choices of quantization strategies (such as the quantization algorithms, training schedules and empirical tricks) for quantizing the deep neural networks into low-bit counterparts.

Extract knowledge from raw text

Extract knowledge from raw text This repository is a nearly copy-paste of "From Text to Knowledge: The Information Extraction Pipeline" with some cosm

pix2tex: Using a ViT to convert images of equations into LaTeX code.

The goal of this project is to create a learning based system that takes an image of a math formula and returns corresponding LaTeX code.

Official implementation of the ICCV 2021 paper "Conditional DETR for Fast Training Convergence".

The DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we handle the critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by that the cross-attention in DETR relies highly on the content embeddings and that the spatial embeddings make minor contributions, increasing the need for high-quality content embeddings and thus increasing the training difficulty.

The official code for paper "R2D2: Recursive Transformer based on Differentiable Tree for Interpretable Hierarchical Language Modeling".

R2D2 This is the official code for paper titled "R2D2: Recursive Transformer based on Differentiable Tree for Interpretable Hierarchical Language Mode

Towards Interpretable Deep Metric Learning with Structural Matching

DIML Created by Wenliang Zhao*, Yongming Rao*, Ziyi Wang, Jiwen Lu, Jie Zhou This repository contains PyTorch implementation for paper Towards Interpr

CM-NAS: Cross-Modality Neural Architecture Search for Visible-Infrared Person Re-Identification (ICCV2021)

CM-NAS Official Pytorch code of paper CM-NAS: Cross-Modality Neural Architecture Search for Visible-Infrared Person Re-Identification in ICCV2021. Vis

Towers of Babel: Combining Images, Language, and 3D Geometry for Learning Multimodal Vision. ICCV 2021.

Towers of Babel: Combining Images, Language, and 3D Geometry for Learning Multimodal Vision Download links and PyTorch implementation of "Towers of Ba

This is an official implementation of the High-Resolution Transformer for Dense Prediction.

High-Resolution Transformer for Dense Prediction Introduction This is the official implementation of High-Resolution Transformer (HRT). We present a H

Official PaddlePaddle implementation of Paint Transformer

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction [Paper] [Paddle Implementation] Update We have optimized the serial inference p

An organized collection of tutorials and projects created for aspriring computer vision students.

A repository created with the purpose of teaching students in BME lab 308A- Hanoi University of Science and Technology

In this project we investigate the performance of the SetCon model on realistic video footage. Therefore, we implemented the model in PyTorch and tested the model on two example videos.

Contrastive Learning of Object Representations Supervisor: Prof. Dr. Gemma Roig Institutions: Goethe University CVAI - Computational Vision & Artifici

FastReID is a research platform that implements state-of-the-art re-identification algorithms.

FastReID is a research platform that implements state-of-the-art re-identification algorithms.

Vision-Language Transformer and Query Generation for Referring Segmentation (ICCV 2021)

Vision-Language Transformer and Query Generation for Referring Segmentation Please consider citing our paper in your publications if the project helps

LONG-TERM SERIES FORECASTING WITH QUERYSELECTOR – EFFICIENT MODEL OF SPARSEATTENTION

Query Selector Here you can find code and data loaders for the paper https://arxiv.org/pdf/2107.08687v1.pdf . Query Selector is a novel approach to sp

A "gym" style toolkit for building lightweight Neural Architecture Search systems

A "gym" style toolkit for building lightweight Neural Architecture Search systems

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Script de monitoramento de telemetria para missões espaciais, cansat e foguetemodelismo.

Aeroespace_GroundStation Script de monitoramento de telemetria para missões espaciais, cansat e foguetemodelismo. Imagem 1 - Dashboard realizando moni

Telegram bot to search quotes from brainyquote.com

Brainy Quote Bot @BrainQuoteBot A star ⭐ from you means a lot to us! Telegram bot to search quotes from brainyquote.com Usage Deploy to Heroku Tap on

10th place solution for Google Smartphone Decimeter Challenge at kaggle.

Under refactoring 10th place solution for Google Smartphone Decimeter Challenge at kaggle. Google Smartphone Decimeter Challenge Global Navigation Sat

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

"Segmenter: Transformer for Semantic Segmentation" reproduced via mmsegmentation

Segmenter-based-on-OpenMMLab "Segmenter: Transformer for Semantic Segmentation, arxiv 2105.05633." reproduced via mmsegmentation. We reproduce Segment

Search Youtube Video and Get Video info

PyYouTube Get Video Data from YouTube link Installation pip install PyYouTube How to use it ? Get Videos Data from pyyoutube import Data yt = Data("ht

Learning and Building Convolutional Neural Networks using PyTorch

Image Classification Using Deep Learning Learning and Building Convolutional Neural Networks using PyTorch. Models, selected are based on number of ci

Ongoing research training transformer language models at scale, including: BERT & GPT-2

What is this fork of Megatron-LM and Megatron-DeepSpeed This is a detached fork of https://github.com/microsoft/Megatron-DeepSpeed, which in itself is

A google search telegram bot.

Google-Search-Bot A google search telegram bot. Made with Python3 (C) @FayasNoushad Copyright permission under MIT License License - https://github.c

Extreme Rotation Estimation using Dense Correlation Volumes

Extreme Rotation Estimation using Dense Correlation Volumes This repository contains a PyTorch implementation of the paper: Extreme Rotation Estimatio

We evaluate our method on different datasets (including ShapeNet, CUB-200-2011, and Pascal3D+) and achieve state-of-the-art results, outperforming all the other supervised and unsupervised methods and 3D representations, all in terms of performance, accuracy, and training time.

An Effective Loss Function for Generating 3D Models from Single 2D Image without Rendering Papers with code | Paper Nikola Zubić Pietro Lio University

A DNN inference latency prediction toolkit for accurately modeling and predicting the latency on diverse edge devices.

Note: This is an alpha (preview) version which is still under refining. nn-Meter is a novel and efficient system to accurately predict the inference l

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Official code for "Simpler is Better: Few-shot Semantic Segmentation with Classifier Weight Transformer. ICCV2021".

Simpler is Better: Few-shot Semantic Segmentation with Classifier Weight Transformer. ICCV2021. Introduction We proposed a novel model training paradi

Vision Transformer and MLP-Mixer Architectures

Vision Transformer and MLP-Mixer Architectures Update (2.7.2021): Added the "When Vision Transformers Outperform ResNets..." paper, and SAM (Sharpness

Official implementation for ICDAR 2021 paper "Handwritten Mathematical Expression Recognition with Bidirectionally Trained Transformer"

Handwritten Mathematical Expression Recognition with Bidirectionally Trained Transformer Description Convert offline handwritten mathematical expressi

This is the official implementation for "Do Transformers Really Perform Bad for Graph Representation?".

Graphormer By Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng*, Guolin Ke, Di He*, Yanming Shen and Tie-Yan Liu. This repo is the official impl

Homepage of paper: Paint Transformer: Feed Forward Neural Painting with Stroke Prediction, ICCV 2021.

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction [Paper] [PaddlePaddle Implementation] Homepage of paper: Paint Transformer: Fee

This repository contains PyTorch models for SpecTr (Spectral Transformer).

SpecTr: Spectral Transformer for Hyperspectral Pathology Image Segmentation This repository contains PyTorch models for SpecTr (Spectral Transformer).

Code for "Multi-Compound Transformer for Accurate Biomedical Image Segmentation"

News The code of MCTrans has been released. if you are interested in contributing to the standardization of the medical image analysis community, plea

Sign Language Translation with Transformers (COLING'2020, ECCV'20 SLRTP Workshop)

transformer-slt This repository gathers data and code supporting the experiments in the paper Better Sign Language Translation with STMC-Transformer.

Pre-Trained Image Processing Transformer (IPT)

Pre-Trained Image Processing Transformer (IPT) By Hanting Chen, Yunhe Wang, Tianyu Guo, Chang Xu, Yiping Deng, Zhenhua Liu, Siwei Ma, Chunjing Xu, Cha

Official PyTorch implementation of Segmenter: Transformer for Semantic Segmentation

Segmenter: Transformer for Semantic Segmentation Segmenter: Transformer for Semantic Segmentation by Robin Strudel*, Ricardo Garcia*, Ivan Laptev and

This repository is an official implementation of the paper MOTR: End-to-End Multiple-Object Tracking with TRansformer.

MOTR: End-to-End Multiple-Object Tracking with TRansformer This repository is an official implementation of the paper MOTR: End-to-End Multiple-Object

This is an official implementation for "Self-Supervised Learning with Swin Transformers".

Self-Supervised Learning with Vision Transformers By Zhenda Xie*, Yutong Lin*, Zhuliang Yao, Zheng Zhang, Qi Dai, Yue Cao and Han Hu This repo is the

Code for the paper "VisualBERT: A Simple and Performant Baseline for Vision and Language"

This repository contains code for the following two papers: VisualBERT: A Simple and Performant Baseline for Vision and Language (arxiv) with a short

Multi Task Vision and Language

12-in-1: Multi-Task Vision and Language Representation Learning Please cite the following if you use this code. Code and pre-trained models for 12-in-

(CVPR2021) Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Kaleido-BERT: Vision-Language Pre-training on Fashion Domain Mingchen Zhuge*, Dehong Gao*, Deng-Ping Fan#, Linbo Jin, Ben Chen, Haoming Zhou, Minghui

Code of PVTv2 is released! PVTv2 largely improves PVTv1 and works better than Swin Transformer with ImageNet-1K pre-training.

Updates (2020/06/21) Code of PVTv2 is released! PVTv2 largely improves PVTv1 and works better than Swin Transformer with ImageNet-1K pre-training. Pyr

LightSeq is a high performance training and inference library for sequence processing and generation implemented in CUDA

LightSeq: A High Performance Library for Sequence Processing and Generation

Full-text multi-table search application for Django. Easy to install and use, with good performance.

django-watson django-watson is a fast multi-model full-text search plugin for Django. It is easy to install and use, and provides high quality search

nn-Meter is a novel and efficient system to accurately predict the inference latency of DNN models on diverse edge devices

A DNN inference latency prediction toolkit for accurately modeling and predicting the latency on diverse edge devices.

Emotional conditioned music generation using transformer-based model.

This is the official repository of EMOPIA: A Multi-Modal Pop Piano Dataset For Emotion Recognition and Emotion-based Music Generation. The paper has b

Exploring Image Deblurring via Blur Kernel Space (CVPR'21)

Exploring Image Deblurring via Encoded Blur Kernel Space About the project We introduce a method to encode the blur operators of an arbitrary dataset

Official implementation of the paper Vision Transformer with Progressive Sampling, ICCV 2021.

Vision Transformer with Progressive Sampling This is the official implementation of the paper Vision Transformer with Progressive Sampling, ICCV 2021.

This is official implementaion of paper "Token Shift Transformer for Video Classification".

This is official implementaion of paper "Token Shift Transformer for Video Classification". We achieve SOTA performance 80.40% on Kinetics-400 val. Paper link

These scripts send notifications to a Webex space when a new IP is banned by Expressway, and allow to request more info or change the ban status

Spam Call and Toll Fraud Mitigation Cisco Expressway release X14 is able to mitigate spam calls and toll fraud attempts by jailing the spam IP address

Code for generating a single image pretraining dataset

Single Image Pretraining of Visual Representations As shown in the paper A critical analysis of self-supervision, or what we can learn from a single i

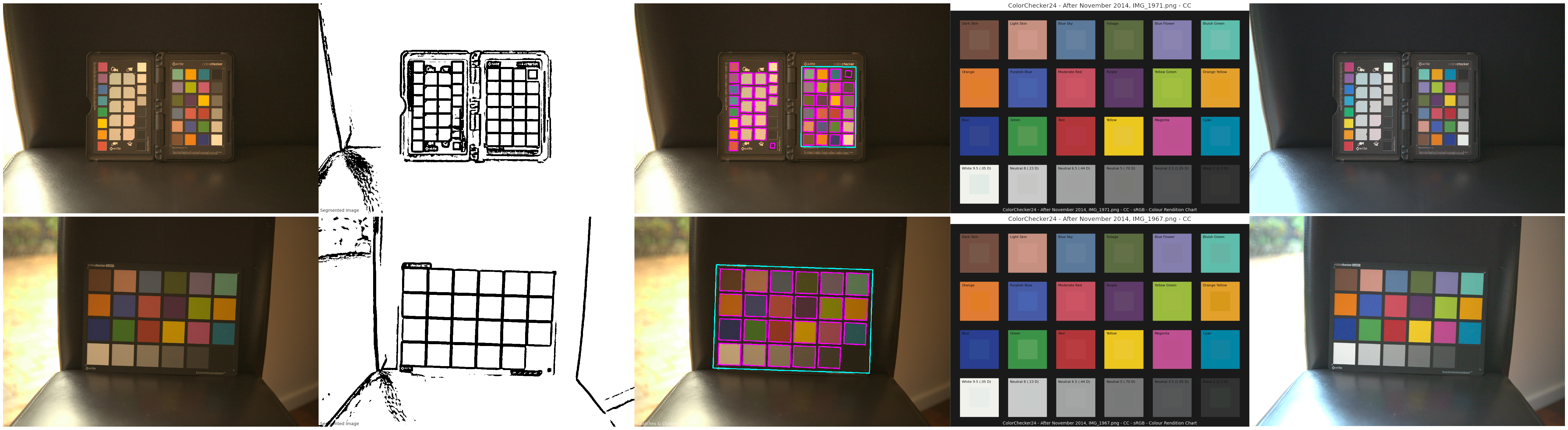

A Python package implementing various colour checker detection algorithms and related utilities.

A Python package implementing various colour checker detection algorithms and related utilities.

Implementation for our ICCV2021 paper: Internal Video Inpainting by Implicit Long-range Propagation

Implicit Internal Video Inpainting Implementation for our ICCV2021 paper: Internal Video Inpainting by Implicit Long-range Propagation paper | project

Video Contrastive Learning with Global Context

Video Contrastive Learning with Global Context (VCLR) This is the official PyTorch implementation of our VCLR paper. Install dependencies environments

Official implementation of paper "Query2Label: A Simple Transformer Way to Multi-Label Classification".

Introdunction This is the official implementation of the paper "Query2Label: A Simple Transformer Way to Multi-Label Classification". Abstract This pa

Scenic: A Jax Library for Computer Vision and Beyond

Scenic Scenic is a codebase with a focus on research around attention-based models for computer vision. Scenic has been successfully used to develop c

Ceres is a combine harvester designed to harvest plots for Chia blockchain and its forks using proof-of-space-and-time(PoST) consensus algorithm.

Ceres Combine-Harvester Ceres is a combine harvester designed to harvest plots for Chia blockchain and its forks using proof-of-space-and-time(PoST) c

Google Project: Search and auto-complete sentences within given input text files, manipulating data with complex data-structures.

Auto-Complete Google Project In this project there is an implementation for one feature of Google's search engines - AutoComplete. Autocomplete, or wo

An implementation for Neural Architecture Search with Random Labels (CVPR 2021 poster) on Pytorch.

Neural Architecture Search with Random Labels(RLNAS) Introduction This project provides an implementation for Neural Architecture Search with Random L

FPGA: Fast Patch-Free Global Learning Framework for Fully End-to-End Hyperspectral Image Classification

FPGA & FreeNet Fast Patch-Free Global Learning Framework for Fully End-to-End Hyperspectral Image Classification by Zhuo Zheng, Yanfei Zhong, Ailong M

Byte-based multilingual transformer TTS for low-resource/few-shot language adaptation.

One model to speak them all 🌎 Audio Language Text ▷ Chinese 人人生而自由,在尊严和权利上一律平等。 ▷ English All human beings are born free and equal in dignity and rig

DPT: Deformable Patch-based Transformer for Visual Recognition (ACM MM2021)

DPT This repo is the official implementation of DPT: Deformable Patch-based Transformer for Visual Recognition (ACM MM2021). We provide code and model

Official implementation of Long-Short Transformer in PyTorch.

Long-Short Transformer (Transformer-LS) This repository hosts the code and models for the paper: Long-Short Transformer: Efficient Transformers for La

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

PyTorch implementation of the Transformer in Post-LN (Post-LayerNorm) and Pre-LN (Pre-LayerNorm).

Transformer-PyTorch A PyTorch implementation of the Transformer from the paper Attention is All You Need in both Post-LN (Post-LayerNorm) and Pre-LN (

Code for our CVPR 2021 Paper "Rethinking Style Transfer: From Pixels to Parameterized Brushstrokes".

Rethinking Style Transfer: From Pixels to Parameterized Brushstrokes (CVPR 2021) Project page | Paper | Colab | Colab for Drawing App Rethinking Style

Punctuation Restoration using Transformer Models for High-and Low-Resource Languages

Punctuation Restoration using Transformer Models This repository contins official implementation of the paper Punctuation Restoration using Transforme

Open-World Entity Segmentation

Open-World Entity Segmentation Project Website Lu Qi*, Jason Kuen*, Yi Wang, Jiuxiang Gu, Hengshuang Zhao, Zhe Lin, Philip Torr, Jiaya Jia This projec

A project for developing transformer-based models for clinical relation extraction

Clinical Relation Extration with Transformers Aim This package is developed for researchers easily to use state-of-the-art transformers models for ext

The personal repository of the work: *DanceNet3D: Music Based Dance Generation with Parametric Motion Transformer*.

DanceNet3D The personal repository of the work: DanceNet3D: Music Based Dance Generation with Parametric Motion Transformer. Dataset and Results Pleas

MODALS: Modality-agnostic Automated Data Augmentation in the Latent Space

Update (20 Jan 2020): MODALS on text data is avialable MODALS MODALS: Modality-agnostic Automated Data Augmentation in the Latent Space Table of Conte

TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A good teacher is patient and consistent by Beyer et al.

FunMatch-Distillation TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A g

PyTorch implementation of MuseMorphose, a Transformer-based model for music style transfer.

MuseMorphose This repository contains the official implementation of the following paper: Shih-Lun Wu, Yi-Hsuan Yang MuseMorphose: Full-Song and Fine-

An application that maps an image of a LaTeX math equation to LaTeX code.

Convert images of LaTex math equations into LaTex code.

A PyTorch implementation of Radio Transformer Networks from the paper "An Introduction to Deep Learning for the Physical Layer".

An Introduction to Deep Learning for the Physical Layer An usable PyTorch implementation of the noisy autoencoder infrastructure in the paper "An Intr

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

The code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention.

CrossFormer This repository is the code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention. Introduction Existin

HiFT: Hierarchical Feature Transformer for Aerial Tracking (ICCV2021)

HiFT: Hierarchical Feature Transformer for Aerial Tracking Ziang Cao, Changhong Fu, Junjie Ye, Bowen Li, and Yiming Li Our paper is Accepted by ICCV 2

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

ANNchor is a python library which constructs approximate k-nearest neighbour graphs for slow metrics.

Fast k-NN graph construction for slow metrics

meProp: Sparsified Back Propagation for Accelerated Deep Learning

meProp The codes were used for the paper meProp: Sparsified Back Propagation for Accelerated Deep Learning with Reduced Overfitting (ICML 2017) [pdf]

Image Deblurring using Generative Adversarial Networks

DeblurGAN arXiv Paper Version Pytorch implementation of the paper DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks. Our netwo

Synthesizing and manipulating 2048x1024 images with conditional GANs

pix2pixHD Project | Youtube | Paper Pytorch implementation of our method for high-resolution (e.g. 2048x1024) photorealistic image-to-image translatio

PyTorch implementation of the YOLO (You Only Look Once) v2

PyTorch implementation of the YOLO (You Only Look Once) v2 The YOLOv2 is one of the most popular one-stage object detector. This project adopts PyTorc

PyTorch Implementation of Realtime Multi-Person Pose Estimation project.

PyTorch Realtime Multi-Person Pose Estimation This is a pytorch version of Realtime_Multi-Person_Pose_Estimation, origin code is here Realtime_Multi-P

PyTorch implementation of 1712.06087 "Zero-Shot" Super-Resolution using Deep Internal Learning

Unofficial PyTorch implementation of "Zero-Shot" Super-Resolution using Deep Internal Learning Unofficial Implementation of 1712.06087 "Zero-Shot" Sup

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

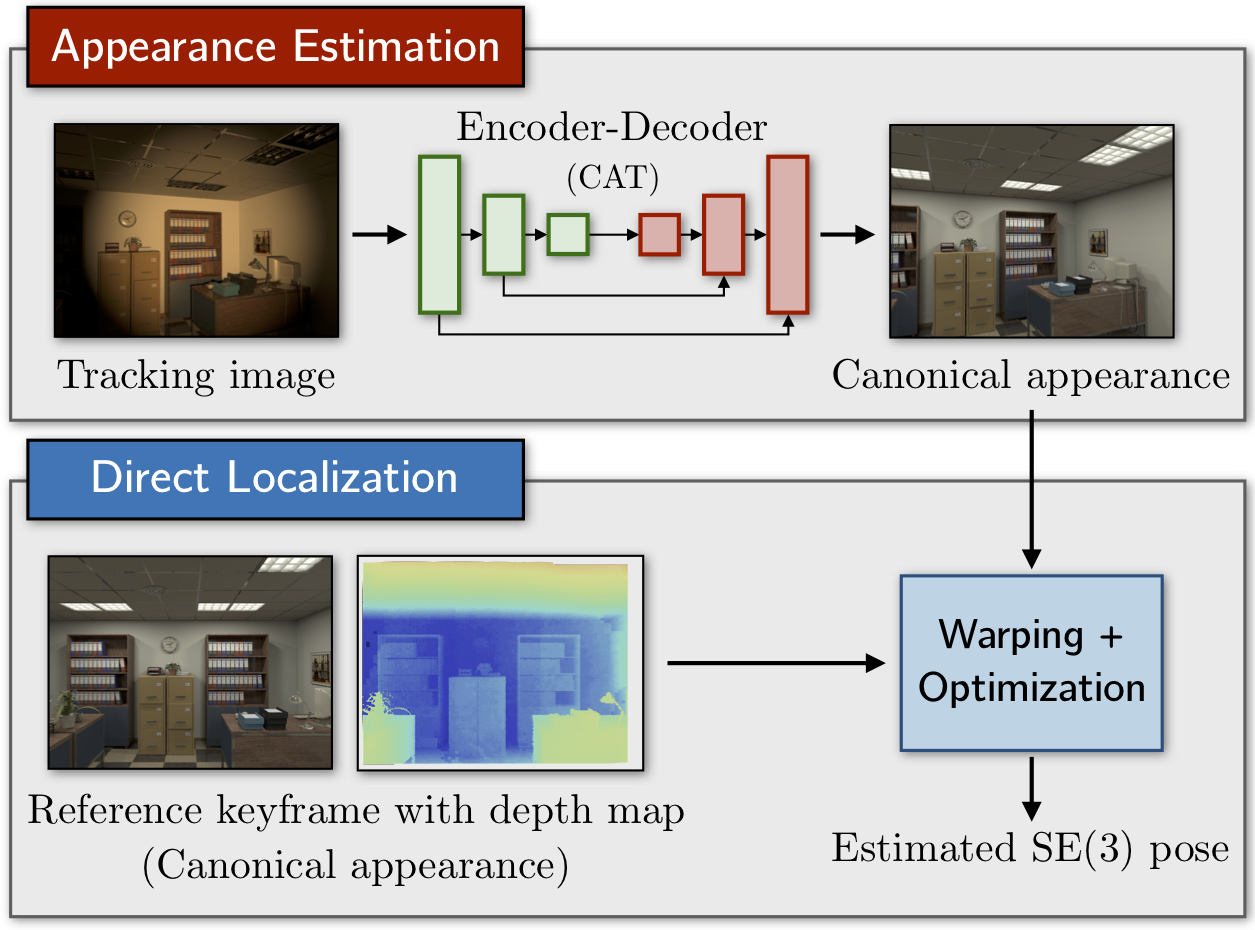

Canonical Appearance Transformations

CAT-Net: Learning Canonical Appearance Transformations Code to accompany our paper "How to Train a CAT: Learning Canonical Appearance Transformations

Minimal PyTorch implementation of Generative Latent Optimization from the paper "Optimizing the Latent Space of Generative Networks"

Minimal PyTorch implementation of Generative Latent Optimization This is a reimplementation of the paper Piotr Bojanowski, Armand Joulin, David Lopez-

This's an implementation of deepmind Visual Interaction Networks paper using pytorch

Visual-Interaction-Networks An implementation of Deepmind visual interaction networks in Pytorch. Introduction For the purpose of understanding the ch

PyTorch implementation of "Efficient Neural Architecture Search via Parameters Sharing"

Efficient Neural Architecture Search (ENAS) in PyTorch PyTorch implementation of Efficient Neural Architecture Search via Parameters Sharing. ENAS red

A PyTorch Implementation of Neural IMage Assessment

NIMA: Neural IMage Assessment This is a PyTorch implementation of the paper NIMA: Neural IMage Assessment (accepted at IEEE Transactions on Image Proc