1182 Repositories

Python AI-DAY-WORKSHOP-NLP-TRANSFORMERS Libraries

A modified Sequential and NLP based Bot

A modified Sequential and NLP based Bot I improvised this bot a bit with some implementations as a part of my own hobby project :) Note: I do not own

A Lightweight NLP Data Loader for All Deep Learning Frameworks in Python

LineFlow: Framework-Agnostic NLP Data Loader in Python LineFlow is a simple text dataset loader for NLP deep learning tasks. LineFlow was designed to

Spokestack is a library that allows a user to easily incorporate a voice interface into any Python application with a focus on embedded systems.

Welcome to Spokestack Python! This library is intended for developing voice interfaces in Python. This can include anything from Raspberry Pi applicat

Fast and robust date extraction from web pages, with Python or on the command-line

Find original and updated publication dates of any web page. From the command-line or within Python, all the steps needed from web page download to HTML parsing, scraping, and text analysis are included.

SeMask: Semantically Masked Transformers for Semantic Segmentation.

SeMask: Semantically Masked Transformers Jitesh Jain, Anukriti Singh, Nikita Orlov, Zilong Huang, Jiachen Li, Steven Walton, Humphrey Shi This repo co

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

Practical Natural Language Processing Tools for Humans is build on the top of Senna Natural Language Processing (NLP)

Practical Natural Language Processing Tools for Humans is build on the top of Senna Natural Language Processing (NLP) predictions: part-of-speech (POS) tags, chunking (CHK), name entity recognition (NER), semantic role labeling (SRL) and syntactic parsing (PSG) with skip-gram all in Python and still more features will be added. The website give is for downlarding Senna tool

Simple multilingual lemmatizer for Python, especially useful for speed and efficiency

Simplemma: a simple multilingual lemmatizer for Python Purpose Lemmatization is the process of grouping together the inflected forms of a word so they

Faster, modernized fork of the language identification tool langid.py

py3langid py3langid is a fork of the standalone language identification tool langid.py by Marco Lui. Original license: BSD-2-Clause. Fork license: BSD

Quick insights from Zoom meeting transcripts using Graph + NLP

Transcript Analysis - Graph + NLP This program extracts insights from Zoom Meeting Transcripts (.vtt) using TigerGraph and NLTK. In order to run this

Unofficial Python library for using the Polish Wordnet (plWordNet / Słowosieć)

Polish Wordnet Python library Simple, easy-to-use and reasonably fast library for using the Słowosieć (also known as PlWordNet) - a lexico-semantic da

Tutorial for the PERFECTING FACTORY 5.0 WITH EDGE-POWERED AI workshop

Workshop Advantech Jetson Nano This tutorial has been designed for the PERFECTING FACTORY 5.0 WITH EDGE-POWERED AI workshop in collaboration with Adva

A Japanese tokenizer based on recurrent neural networks

Nagisa is a python module for Japanese word segmentation/POS-tagging. It is designed to be a simple and easy-to-use tool. This tool has the following

Crawl BookCorpus

These are scripts to reproduce BookCorpus by yourself.

⛵️The official PyTorch implementation for "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing" (EMNLP 2020).

BERT-of-Theseus Code for paper "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing". BERT-of-Theseus is a new compressed BERT by progre

Basic yet complete Machine Learning pipeline for NLP tasks

Basic yet complete Machine Learning pipeline for NLP tasks This repository accompanies the article on building basic yet complete ML pipelines for sol

Code for the project carried out fulfilling the course requirements for Fall 2021 NLP at NYU

Introduction Fairseq(-py) is a sequence modeling toolkit that allows researchers and developers to train custom models for translation, summarization,

Sentiment Analysis web app using Streamlit - American Airlines Tweets

Analyse des sentiments à partir des Tweets L'application est développée par Streamlit L'analyse sentimentale est effectuée sur l'ensemble de données d

The source code of "Language Models are Few-shot Multilingual Learners" (MRL @ EMNLP 2021)

Language Models are Few-shot Multilingual Learners Paper This is the source code of the paper [Arxiv] [ACL Anthology]: This code has been written usin

Lightweight utility tools for the detection of multiple spellings, meanings, and language-specific terminology in British and American English

Breame ( British English and American English) Breame is a lightweight Python package with a number of utility tools to aid in the detection of words

Materials (slides, code, assignments) for the NYU class I teach on NLP and ML Systems (Master of Engineering).

FREE_7773 Repo containing material for the NYU class (Master of Engineering) I teach on NLP, ML Sys etc. For context on what the class is trying to ac

A quick recipe to learn all about Transformers

Transformers have accelerated the development of new techniques and models for natural language processing (NLP) tasks.

BARTpho: Pre-trained Sequence-to-Sequence Models for Vietnamese

Table of contents Introduction Using BARTpho with fairseq Using BARTpho with transformers Notes BARTpho: Pre-trained Sequence-to-Sequence Models for V

Contains links to publicly available datasets for modeling health outcomes using speech and language.

speech-nlp-datasets Contains links to publicly available datasets for modeling various health outcomes using speech and language. Speech-based Corpora

People log into different sites every day to get information and browse through these sites one by one

HyperLink People log into different sites every day to get information and browse through these sites one by one. And they are exposed to advertisemen

Tracking development of the Class Schedule Siri Shortcut, an iOS program that checks the type of school day and tells you class scheduling.

Class Schedule Shortcut Tracking development of the Class Schedule Siri Shortcut, an iOS program that checks the type of school day and tells you clas

A PyTorch-based model pruning toolkit for pre-trained language models

English | 中文说明 TextPruner是一个为预训练语言模型设计的模型裁剪工具包,通过轻量、快速的裁剪方法对模型进行结构化剪枝,从而实现压缩模型体积、提升模型速度。 其他相关资源: 知识蒸馏工具TextBrewer:https://github.com/airaria/TextBrewe

Yuno is context based search engine for anime.

Yuno yuno.mp4 Table of Contents Introduction Power Of Yuno Try Yuno How Yuno was created? References Introduction Yuno is a context based search engin

BERT, LDA, and TFIDF based keyword extraction in Python

BERT, LDA, and TFIDF based keyword extraction in Python kwx is a toolkit for multilingual keyword extraction based on Google's BERT and Latent Dirichl

BERT-based Financial Question Answering System

BERT-based Financial Question Answering System In this example, we use Jina, PyTorch, and Hugging Face transformers to build a production-ready BERT-b

Code for the ICCV'21 paper "Context-aware Scene Graph Generation with Seq2Seq Transformers"

ICCV'21 Context-aware Scene Graph Generation with Seq2Seq Transformers Authors: Yichao Lu*, Himanshu Rai*, Cheng Chang*, Boris Knyazev†, Guangwei Yu,

Chinese NER with albert/electra or other bert descendable model (keras)

Chinese NLP (albert/electra with Keras) Named Entity Recognization Project Structure ./ ├── NER │ ├── __init__.py │ ├── log

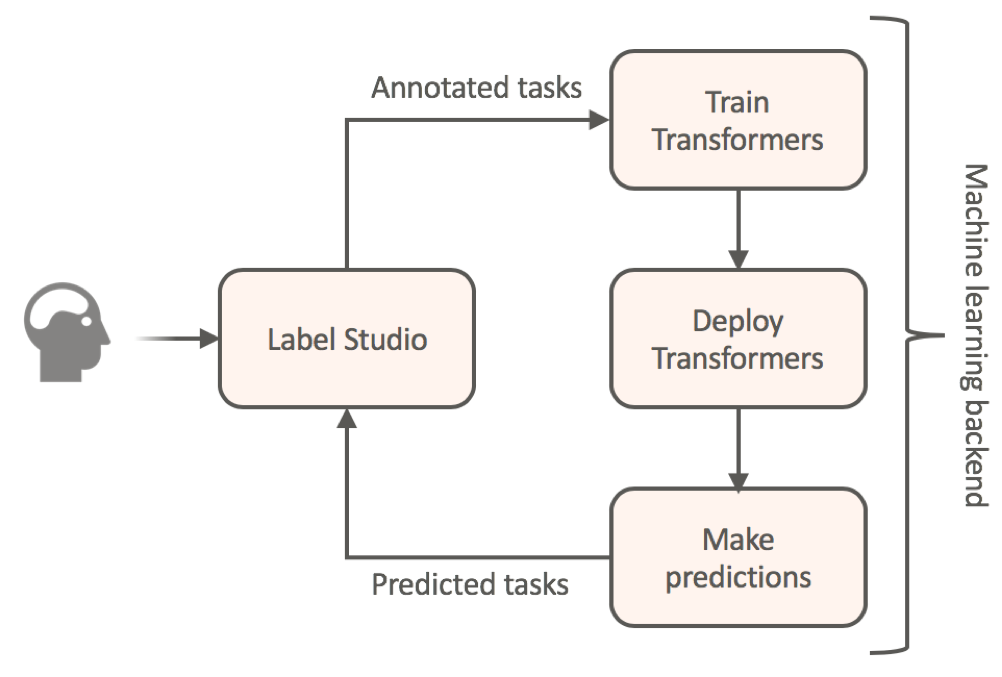

Label data using HuggingFace's transformers and automatically get a prediction service

Label Studio for Hugging Face's Transformers Website • Docs • Twitter • Join Slack Community Transfer learning for NLP models by annotating your textu

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Code for CodeT5: a new code-aware pre-trained encoder-decoder model.

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation This is the official PyTorch implementation

GANformer: Generative Adversarial Transformers

GANformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick Update: We released the new GANformer2 paper! *I wish to thank Ch

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

Transcribing audio files using Hugging Face's implementation of Wav2Vec2 + "chain-linking" NLP tasks to combine speech-to-text with downstream tasks like translation and summarisation.

PART 2: CHAIN LINKING AUDIO-TO-TEXT NLP TASKS 2A: TRANSCRIBE-TRANSLATE-SENTIMENT-ANALYSIS In notebook3.0, I demo a simple workflow to: transcribe a lo

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Hiring We are hiring at all levels (including FTE researchers and interns)! If you are interested in working with us on NLP and large-scale pre-traine

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

ELECTRA Introduction ELECTRA is a method for self-supervised language representation learning. It can be used to pre-train transformer networks using

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Examples of using sparse attention, as in "Generating Long Sequences with Sparse Transformers"

Status: Archive (code is provided as-is, no updates expected) Update August 2020: For an example repository that achieves state-of-the-art modeling pe

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Text Classification in Turkish Texts with Bert

You can watch the details of the project on my youtube channel Project Interface Project Second Interface Goal= Correctly guessing the classification

Whole-day timezone comparison

Timezone Converter Compare a full day of your local timezone with foreign ones $ timezone-converter tijuana --zone $ timezone-converter tijuana new_yo

The projects lets you extract glossary words and their definitions from a given piece of text automatically using NLP techniques

Unsupervised technique to Glossary and Definition Extraction Code Files GPT2-DefinitionModel.ipynb - GPT-2 model for definition generation. Data_Gener

Powerful unsupervised domain adaptation method for dense retrieval.

Powerful unsupervised domain adaptation method for dense retrieval

Converts a Bangla numeric string to literal words.

Bangla Number in Words Converts a Bangla numeric string to literal words. Install $ pip install banglanum2words Usage

Text completion with Hugging Face and TensorFlow.js running on Node.js

Katana ML Text Completion 🤗 Description Runs with with Hugging Face DistilBERT and TensorFlow.js on Node.js distilbert-model - converter from Hugging

Using Bert as the backbone model for lime, designed for NLP task explanation (sentence pair text classification task)

Lime Comparing deep contextualized model for sentences highlighting task. In addition, take the classic explanation model "LIME" with bert-base model

Official code base for the poster "On the use of Cortical Magnification and Saccades as Biological Proxies for Data Augmentation" published in NeurIPS 2021 Workshop (SVRHM)

Self-Supervised Learning (SimCLR) with Biological Plausible Image Augmentations Official code base for the poster "On the use of Cortical Magnificatio

ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab

AliceMind AliceMind: ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab This repository provides pre-trained encode

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

keytotext Idea is to build a model which will take keywords as inputs and generate sentences as outputs. Potential use case can include: Marketing Sea

A toolkit for document-level event extraction, containing some SOTA model implementations

Document-level Event Extraction via Heterogeneous Graph-based Interaction Model with a Tracker Source code for ACL-IJCNLP 2021 Long paper: Document-le

A repository with exploration into using transformers to predict DNA ↔ transcription factor binding

Transcription Factor binding predictions with Attention and Transformers A repository with exploration into using transformers to predict DNA ↔ transc

RCT-ART is an NLP pipeline built with spaCy for converting clinical trial result sentences into tables through jointly extracting intervention, outcome and outcome measure entities and their relations.

Randomised controlled trial abstract result tabulator RCT-ART is an NLP pipeline built with spaCy for converting clinical trial result sentences into

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets

Easy Language Model Pretraining leveraging Huggingface's Transformers and Datasets What is LASSL • How to Use What is LASSL LASSL은 LAnguage Semi-Super

Pangu-Alpha for Transformers

Pangu-Alpha for Transformers Usage Download MindSpore FP32 weights for GPU from here to data/Pangu-alpha_2.6B.ckpt Activate MindSpore environment and

NLP tool to extract emotional phrase from tweets 🤩

Emotional phrase extractor Extract phrase in the given text that is used to express the sentiment. Capturing sentiment in language is important in the

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

Analyse the limit order book in seconds. Zoom to tick level or get yourself an overview of the trading day.

Analyse the limit order book in seconds. Zoom to tick level or get yourself an overview of the trading day. Correlate the market activity with the Apple Keynote presentations.

2D Human Pose estimation using transformers. Implementation in Pytorch

PE-former: Pose Estimation Transformer Vision transformer architectures perform very well for image classification tasks. Efforts to solve more challe

Experiments and examples converting Transformers to ONNX

Experiments and examples converting Transformers to ONNX This repository containes experiments and examples on converting different Transformers to ON

Continuously update some NLP practice based on different tasks.

NLP_practice We will continuously update some NLP practice based on different tasks. prerequisites Software pytorch = 1.10 torchtext = 0.11.0 sklear

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab

AliceMind AliceMind: ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab This repository provides pre-trained encode

This is a project of data parallel that running on NLP tasks.

This is a project of data parallel that running on NLP tasks.

Hashformers is a framework for hashtag segmentation with transformers.

Hashtag segmentation is the task of automatically inserting the missing spaces between the words in a hashtag. Hashformers applies Transformer models

Suite of 500 procedurally-generated NLP tasks to study language model adaptability

TaskBench500 The TaskBench500 dataset and code for generating tasks. Data The TaskBench dataset is available under wget http://web.mit.edu/bzl/www/Tas

Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal"

Patch-wise Adversarial Removal Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal

Post-Training Quantization for Vision transformers.

PTQ4ViT Post-Training Quantization Framework for Vision Transformers. We use the twin uniform quantization method to reduce the quantization error on

Suite of 500 procedurally-generated NLP tasks to study language model adaptability

TaskBench500 The TaskBench500 dataset and code for generating tasks. Data The TaskBench dataset is available under wget http://web.mit.edu/bzl/www/Tas

![[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining](https://github.com/microsoft/COCO-LM/raw/main/coco-lm.png)

[NeurIPS 2021] COCO-LM: Correcting and Contrasting Text Sequences for Language Model Pretraining

COCO-LM This repository contains the scripts for fine-tuning COCO-LM pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: COCO-LM: Correcting an

This is a re-implementation of TransGAN: Two Pure Transformers Can Make One Strong GAN (CVPR 2021) in PyTorch.

TransGAN: Two Transformers Can Make One Strong GAN [YouTube Video] Paper Authors: Yifan Jiang, Shiyu Chang, Zhangyang Wang CVPR 2021 This is re-implem

This repository contains Python scripts for extracting linguistic features from Filipino texts.

Filipino Text Linguistic Feature Extractors This repository contains scripts for extracting linguistic features from Filipino texts. The scripts were

Chatbot in 200 lines of code using TensorLayer

Seq2Seq Chatbot This is a 200 lines implementation of Twitter/Cornell-Movie Chatbot, please read the following references before you read the code: Pr

End-To-End Memory Network using Tensorflow

MemN2N Implementation of End-To-End Memory Networks with sklearn-like interface using Tensorflow. Tasks are from the bAbl dataset. Get Started git clo

Tensorflow implementation of Character-Aware Neural Language Models.

Character-Aware Neural Language Models Tensorflow implementation of Character-Aware Neural Language Models. The original code of author can be found h

A design of MIDI language for music generation task, specifically for Natural Language Processing (NLP) models.

MIDI Language Introduction Reference Paper: Pop Music Transformer: Beat-based Modeling and Generation of Expressive Pop Piano Compositions: code This

Course project of NLP@UCAS

NaiveMT Prepare Clone this repository git clone [email protected]:Poeroz/NaiveMT.git Install Please first install PyTorch = 1.5.0, then type the followi

Official repository for Automated Learning Rate Scheduler for Large-Batch Training (8th ICML Workshop on AutoML)

Automated Learning Rate Scheduler for Large-Batch Training The official repository for Automated Learning Rate Scheduler for Large-Batch Training (8th

Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch

N-Grammer - Pytorch Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch Install $ pip install n-grammer-pytorch Usage

Simple bots or Simbots is a library designed to create simple bots using the power of python. This library utilises Intent, Entity, Relation and Context model to create bots .

Simple bots or Simbots is a library designed to create simple chat bots using the power of python. This library utilises Intent, Entity, Relation and

The Playwright Workshop for TAU: The Homecoming

tau-playwright-workshop This repository contains the instructions and example code for the Playwright workshop for TAU: The Homecoming on December 1,

Train and use generative text models in a few lines of code.

blather Train and use generative text models in a few lines of code. To see blather in action check out the colab notebook! Installation Use the packa

PyTorch implementation of Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets

Simple PyTorch Implementation of "Grokking" Implementation of Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets Usage Running

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

A Chinese to English Neural Model Translation Project

ZH-EN NMT Chinese to English Neural Machine Translation This project is inspired by Stanford's CS224N NMT Project Dataset used in this project: News C

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

An assignment from my grad-level data mining course demonstrating some experience with NLP/neural networks/Pytorch

NLP-Pytorch-Assignment An assignment from my grad-level data mining course (before I started personal projects) demonstrating some experience with NLP

Mastering Transformers, published by Packt

Mastering Transformers This is the code repository for Mastering Transformers, published by Packt. Build state-of-the-art models from scratch with adv

Panoptic SegFormer: Delving Deeper into Panoptic Segmentation with Transformers

Panoptic SegFormer: Delving Deeper into Panoptic Segmentation with Transformers Results results on COCO val Backbone Method Lr Schd PQ Config Download

Deploying a Text Summarization NLP use case on Docker Container Utilizing Nvidia GPU

GPU Docker NLP Application Deployment Deploying a Text Summarization NLP use case on Docker Container Utilizing Nvidia GPU, to setup the enviroment on

Natural Language Processing Tasks and Examples.

Natural Language Processing Tasks and Examples With the advancement of A.I. technology in recent years, natural language processing technology has bee