335 Repositories

Python Robust-Prefix-Tuning Libraries

The official repo for OC-SORT: Observation-Centric SORT on video Multi-Object Tracking. OC-SORT is simple, online and robust to occlusion/non-linear motion.

OC-SORT Observation-Centric SORT (OC-SORT) is a pure motion-model-based multi-object tracker. It aims to improve tracking robustness in crowded scenes

Official code for ROCA: Robust CAD Model Retrieval and Alignment from a Single Image (CVPR 2022)

ROCA: Robust CAD Model Alignment and Retrieval from a Single Image (CVPR 2022) Code release of our paper ROCA. Check out our video, paper, and website

A Text Attention Network for Spatial Deformation Robust Scene Text Image Super-resolution (CVPR2022)

A Text Attention Network for Spatial Deformation Robust Scene Text Image Super-resolution (CVPR2022) https://arxiv.org/abs/2203.09388 Jianqi Ma, Zheto

Code for T-Few from "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning"

T-Few This repository contains the official code for the paper: "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learni

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

Includes PyTorch - Keras model porting code for ConvNeXt family of models with fine-tuning and inference notebooks.

ConvNeXt-TF This repository provides TensorFlow / Keras implementations of different ConvNeXt [1] variants. It also provides the TensorFlow / Keras mo

Prompt tuning toolkit for GPT-2 and GPT-Neo

mkultra mkultra is a prompt tuning toolkit for GPT-2 and GPT-Neo. Prompt tuning injects a string of 20-100 special tokens into the context in order to

Multilingual Emotion classification using BERT (fine-tuning). Published at the WASSA workshop (ACL2022).

XLM-EMO: Multilingual Emotion Prediction in Social Media Text Abstract Detecting emotion in text allows social and computational scientists to study h

ACL22 paper: Imputing Out-of-Vocabulary Embeddings with LOVE Makes Language Models Robust with Little Cost

Imputing Out-of-Vocabulary Embeddings with LOVE Makes Language Models Robust with Little Cost LOVE is accpeted by ACL22 main conference as a long pape

Official Pytorch implementation of "Learning to Estimate Robust 3D Human Mesh from In-the-Wild Crowded Scenes", CVPR 2022

Learning to Estimate Robust 3D Human Mesh from In-the-Wild Crowded Scenes / 3DCrowdNet News 💪 3DCrowdNet achieves the state-of-the-art accuracy on 3D

Repository for fine-tuning Transformers 🤗 based seq2seq speech models in JAX/Flax.

Seq2Seq Speech in JAX A JAX/Flax repository for combining a pre-trained speech encoder model (e.g. Wav2Vec2, HuBERT, WavLM) with a pre-trained text de

Implementation of "Generalizable Neural Performer: Learning Robust Radiance Fields for Human Novel View Synthesis"

Generalizable Neural Performer: Learning Robust Radiance Fields for Human Novel View Synthesis Abstract: This work targets at using a general deep lea

Code for the Findings of NAACL 2022(Long Paper): AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks

AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks arXiv link: upcoming To be published in Findings of NA

Code for our SIGIR 2022 accepted paper : P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-based Learning and Pre-finetuning

P3 Ranker Implementation for our SIGIR2022 accepted paper: P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-bas

RODD: A Self-Supervised Approach for Robust Out-of-Distribution Detection

RODD Official Implementation of 2022 CVPRW Paper RODD: A Self-Supervised Approach for Robust Out-of-Distribution Detection Introduction: Recent studie

In this project we predict the forest cover type using the cartographic variables in the training/test datasets.

Kaggle Competition: Forest Cover Type Prediction In this project we predict the forest cover type (the predominant kind of tree cover) using the carto

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning This repository is official Tensorflow implementation of paper: Ensemb

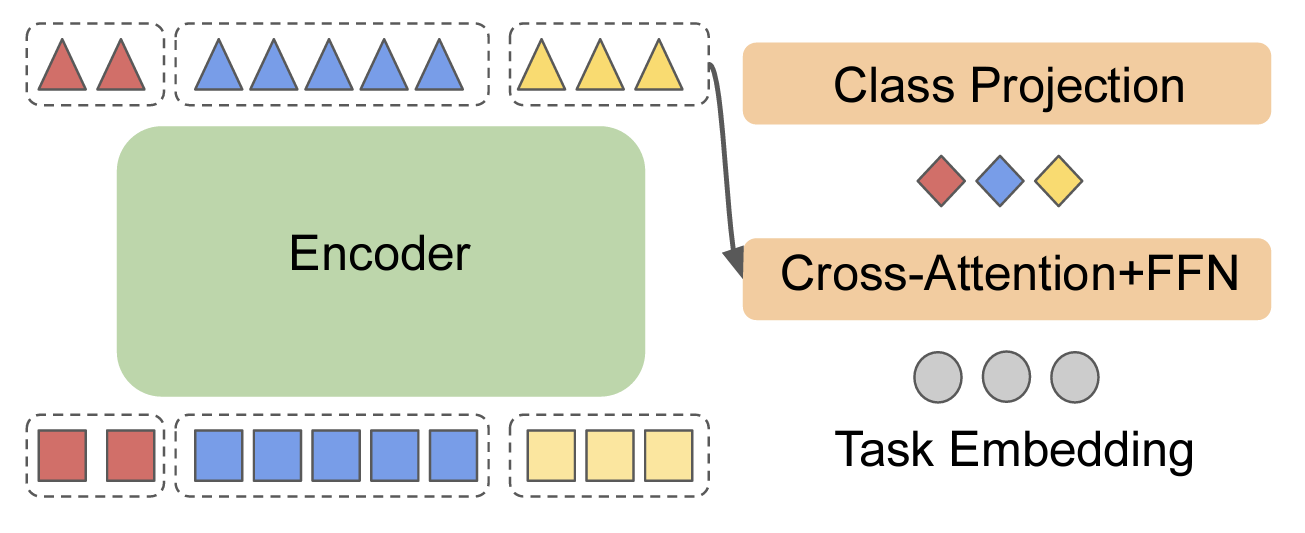

code for the ICLR'22 paper: On Robust Prefix-Tuning for Text Classification

On Robust Prefix-Tuning for Text Classification Prefix-tuning has drawed much attention as it is a parameter-efficient and modular alternative to adap

Codes for "Template-free Prompt Tuning for Few-shot NER".

EntLM The source codes for EntLM. Dependencies: Cuda 10.1, python 3.6.5 To install the required packages by following commands: $ pip3 install -r requ

SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING - The Facebook paper about fine tuning RoBERTa with contrastive loss

"# SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING" i

Adversarial-Information-Bottleneck - Distilling Robust and Non-Robust Features in Adversarial Examples by Information Bottleneck (NeurIPS21)

NeurIPS 2021 Title: Distilling Robust and Non-Robust Features in Adversarial Exa

GeoTransformer - Geometric Transformer for Fast and Robust Point Cloud Registration

Geometric Transformer for Fast and Robust Point Cloud Registration PyTorch imple

OpenDelta - An Open-Source Framework for Paramter Efficient Tuning.

OpenDelta is a toolkit for parameter efficient methods (we dub it as delta tuning), by which users could flexibly assign (or add) a small amount parameters to update while keeping the most paramters frozen. By using OpenDelta, users could easily implement prefix-tuning, adapters, Lora, or any other types of delta tuning with preferred PTMs.

Memory Defense: More Robust Classificationvia a Memory-Masking Autoencoder

Memory Defense: More Robust Classificationvia a Memory-Masking Autoencoder Authors: - Eashan Adhikarla - Dan Luo - Dr. Brian D. Davison Abstract Many

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers This repo contains our codes for the paper "No Parameters Left Behind: Sensitivity Gu

Large-scale Knowledge Graph Construction with Prompting

Large-scale Knowledge Graph Construction with Prompting across tasks (predictive and generative), and modalities (language, image, vision + language, etc.)

Byzantine-robust decentralized learning via self-centered clipping

Byzantine-robust decentralized learning via self-centered clipping In this paper, we study the challenging task of Byzantine-robust decentralized trai

Doubly Robust Off-Policy Evaluation for Ranking Policies under the Cascade Behavior Model

Doubly Robust Off-Policy Evaluation for Ranking Policies under the Cascade Behavior Model About This repository contains the code to replicate the syn

RipsNet: a general architecture for fast and robust estimation of the persistent homology of point clouds

RipsNet: a general architecture for fast and robust estimation of the persistent homology of point clouds This repository contains the code asscoiated

Finding Biological Plausibility for Adversarially Robust Features via Metameric Tasks

Adversarially-Robust-Periphery Code + Data from the paper "Finding Biological Plausibility for Adversarially Robust Features via Metameric Tasks" by A

Drslmarkov - Distributionally Robust Structure Learning for Discrete Pairwise Markov Networks

Distributionally Robust Structure Learning for Discrete Pairwise Markov Networks

Robust and blazing fast open-redirect vulnerability scanner with ability of recursevely crawling all of web-forms, entry points, or links with data.

After Golismero project got dead there is no more any up to date open-source tool that can collect links with parametrs and web-forms and then test th

Pytorch Performace Tuning, WandB, AMP, Multi-GPU, TensorRT, Triton

Plant Pathology 2020 FGVC7 Introduction A deep learning model pipeline for training, experimentaiton and deployment for the Kaggle Competition, Plant

Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners

DART Implementation for ICLR2022 paper Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners. Environment [email protected] Use pi

Code for "Steerable Pyramid Transform Enables Robust Left Ventricle Quantification"

Code for "Steerable Pyramid Transform Enables Robust Left Ventricle Quantification" This is an end-to-end framework for accurate and robust left ventr

Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On

UPMT Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On See main.py as an example: from model import PopM

This repository comes with the paper "On the Robustness of Counterfactual Explanations to Adverse Perturbations"

Robust Counterfactual Explanations This repository comes with the paper "On the Robustness of Counterfactual Explanations to Adverse Perturbations". I

PaRT: Parallel Learning for Robust and Transparent AI

PaRT: Parallel Learning for Robust and Transparent AI This repository contains the code for PaRT, an algorithm for training a base network on multiple

The Python code for the paper A Hybrid Quantum-Classical Algorithm for Robust Fitting

About The Python code for the paper A Hybrid Quantum-Classical Algorithm for Robust Fitting The demo program was only tested under Conda in a standard

PyTorch implementation of our paper How robust are discriminatively trained zero-shot learning models?

How robust are discriminatively trained zero-shot learning models? This repository contains the PyTorch implementation of our paper How robust are dis

Hyperparameters tuning and features selection are two common steps in every machine learning pipeline.

shap-hypetune A python package for simultaneous Hyperparameters Tuning and Features Selection for Gradient Boosting Models. Overview Hyperparameters t

EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks

EncT5 (Unofficial) Pytorch Implementation of EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks About Finetune T5 model for classification & r

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML)

package tests docs license stats support This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML

Full Transformer Framework for Robust Point Cloud Registration with Deep Information Interaction

Full Transformer Framework for Robust Point Cloud Registration with Deep Information Interaction. arxiv This repository contains python scripts for tr

Original Implementation of Prompt Tuning from Lester, et al, 2021

Prompt Tuning This is the code to reproduce the experiments from the EMNLP 2021 paper "The Power of Scale for Parameter-Efficient Prompt Tuning" (Lest

A handy tool for common machine learning models' hyper-parameter tuning.

Common machine learning models' hyperparameter tuning This repo is for a collection of hyper-parameter tuning for "common" machine learning models, in

Code to reproduce the results for Statistically Robust Neural Network Classification, published in UAI 2021

Code to reproduce the results for Statistically Robust Neural Network Classification, published in UAI 2021

In this project, we compared Spanish BERT and Multilingual BERT in the Sentiment Analysis task.

Applying BERT Fine Tuning to Sentiment Classification on Amazon Reviews Abstract Sentiment analysis has made great progress in recent years, due to th

Source code for paper "Black-Box Tuning for Language-Model-as-a-Service"

Black-Box-Tuning Source code for paper "Black-Box Tuning for Language-Model-as-a-Service". Being busy recently, the code in this repo and this tutoria

Code for Robust Contrastive Learning against Noisy Views

Robust Contrastive Learning against Noisy Views This repository provides a PyTorch implementation of the Robust InfoNCE loss proposed in paper Robust

Black-Box-Tuning - Black-Box Tuning for Language-Model-as-a-Service

Black-Box-Tuning Source code for paper "Black-Box Tuning for Language-Model-as-a

We present a regularized self-labeling approach to improve the generalization and robustness properties of fine-tuning.

Overview This repository provides the implementation for the paper "Improved Regularization and Robustness for Fine-tuning in Neural Networks", which

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

FEMDA: Robust classification with Flexible Discriminant Analysis in heterogeneous data

FEMDA: Robust classification with Flexible Discriminant Analysis in heterogeneous data. Flexible EM-Inspired Discriminant Analysis is a robust supervised classification algorithm that performs well in noisy and contaminated datasets.

Open-source implementation of Google Vizier for hyper parameters tuning

Advisor Introduction Advisor is the hyper parameters tuning system for black box optimization. It is the open-source implementation of Google Vizier w

CMS for everyone, easy to deploy and scale, robust modular system with many packages.

Django-Leonardo Full featured platform for fast and easy building extensible web applications. Don't waste your time searching stable solution for dai

Fine tuning keras-ocr python package with custom synthetic dataset from scratch

OCR-Pipeline-with-Keras The keras-ocr package generally consists of two parts: a Detector and a Recognizer: Detector is responsible for creating bound

Super Pix Adv - Offical implemention of Robust Superpixel-Guided Attentional Adversarial Attack (CVPR2020)

Super_Pix_Adv Offical implemention of Robust Superpixel-Guided Attentional Adver

Reverse the infix string. Note that while reversing the string you must interchange left and right parentheses

Reverse the infix string. Note that while reversing the string you must interchange left and right parentheses. Obtain the postfix expression of the infix expression Step 1.Reverse the postfix expression to get the prefix expression

Calibrated Hyperspectral Image Reconstruction via Graph-based Self-Tuning Network.

mask-uncertainty-in-HSI This repository contains the testing code and pre-trained models for the paper Calibrated Hyperspectral Image Reconstruction v

Self-supervised learning optimally robust representations for domain generalization.

OptDom: Learning Optimal Representations for Domain Generalization This repository contains the official implementation for Optimal Representations fo

Building a Robust IOT device which is customizable, encrypted, secure and user friendly

Building a Robust IOT device which is customizable, encrypted, secure and user friendly, which uses a single GPIO pin to extract multiple sensor values

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control.

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning And private Server services

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning

Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai

Coursera-deep-learning-specialization - Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai: (i) Neural Networks and Deep Learning; (ii) Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization; (iii) Structuring Machine Learning Projects; (iv) Convolutional Neural Networks; (v) Sequence Models

Implementation of hyperparameter optimization/tuning methods for machine learning & deep learning models

Hyperparameter Optimization of Machine Learning Algorithms This code provides a hyper-parameter optimization implementation for machine learning algor

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend This project acts as both a tuto

“Robust Lightweight Facial Expression Recognition Network with Label Distribution Training”, AAAI 2021.

EfficientFace Zengqun Zhao, Qingshan Liu, Feng Zhou. "Robust Lightweight Facial Expression Recognition Network with Label Distribution Training". AAAI

The codebase for Data-driven general-purpose voice activity detection.

Data driven GPVAD Repository for the work in TASLP 2021 Voice activity detection in the wild: A data-driven approach using teacher-student training. S

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking."

Expert-Linking Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking." This is

Implementation of the paper "Fine-Tuning Transformers: Vocabulary Transfer"

Transformer-vocabulary-transfer Implementation of the paper "Fine-Tuning Transfo

Robust, highly tunable and easy-to-integrate in-memory cache solution written in pure Python, with no dependencies.

Omoide Cache Caching doesn't need to be hard anymore. With just a few lines of code Omoide Cache will instantly bring your Python services to the next

Simple and Robust Loss Design for Multi-Label Learning with Missing Labels

Simple and Robust Loss Design for Multi-Label Learning with Missing Labels Official PyTorch Implementation of the paper Simple and Robust Loss Design

Baleen: Robust Multi-Hop Reasoning at Scale via Condensed Retrieval (NeurIPS'21)

Baleen Baleen is a state-of-the-art model for multi-hop reasoning, enabling scalable multi-hop search over massive collections for knowledge-intensive

Python package to transfer data in a fast, reliable, and packetized form.

pySerialTransfer Python package to transfer data in a fast, reliable, and packetized form.

Implementation of paper "Towards a Unified View of Parameter-Efficient Transfer Learning"

A Unified Framework for Parameter-Efficient Transfer Learning This is the official implementation of the paper: Towards a Unified View of Parameter-Ef

Fast and robust date extraction from web pages, with Python or on the command-line

Find original and updated publication dates of any web page. From the command-line or within Python, all the steps needed from web page download to HTML parsing, scraping, and text analysis are included.

Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning"

Prompt-Tuning Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning" Currently, we support the following huggigface models: Bart

The best discord.py template with a changeable prefix

Discord.py Bot Template By noma4321#0035 With A Custom Prefix To Every Guild Function Features Has a custom prefix that is changeable for every guild

PyTorch implementation of DeepUME: Learning the Universal Manifold Embedding for Robust Point Cloud Registration (BMVC 2021)

DeepUME: Learning the Universal Manifold Embedding for Robust Point Cloud Registration [video] [paper] [supplementary] [data] [thesis] Introduction De

Robust fine-tuning of zero-shot models

Robust fine-tuning of zero-shot models This repository contains code for the paper Robust fine-tuning of zero-shot models by Mitchell Wortsman*, Gabri

Benchmark for the generalization of 3D machine learning models across different remeshing/samplings of a surface.

Discretization Robust Correspondence Benchmark One challenge of machine learning on 3D surfaces is that there are many different representations/sampl

Feature rich robust FastAPI template.

Flexible and Lightweight general-purpose template for FastAPI. Usage ⚠️ Git, Python and Poetry must be installed and accessible ⚠️ Poetry version must

scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms.

Sklearn-genetic-opt scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms. This is meant to be an alternativ

Code of TIP2021 Paper《SFace: Sigmoid-Constrained Hypersphere Loss for Robust Face Recognition》. We provide both MxNet and Pytorch versions.

SFace Code of TIP2021 Paper 《SFace: Sigmoid-Constrained Hypersphere Loss for Robust Face Recognition》. We provide both MxNet, PyTorch and Jittor versi

A high-level yet extensible library for fast language model tuning via automatic prompt search

ruPrompts ruPrompts is a high-level yet extensible library for fast language model tuning via automatic prompt search, featuring integration with Hugg

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Code for paper "Learning to Reweight Examples for Robust Deep Learning"

learning-to-reweight-examples Code for paper Learning to Reweight Examples for Robust Deep Learning. [arxiv] Environment We tested the code on tensorf

PyTorch Implementation of the paper Learning to Reweight Examples for Robust Deep Learning

Learning to Reweight Examples for Robust Deep Learning Unofficial PyTorch implementation of Learning to Reweight Examples for Robust Deep Learning. Th

Artifacts for paper "MMO: Meta Multi-Objectivization for Software Configuration Tuning"

MMO: Meta Multi-Objectivization for Software Configuration Tuning This repository contains the data and code for the following paper that is currently

Anchor Retouching via Model Interaction for Robust Object Detection in Aerial Images

Anchor Retouching via Model Interaction for Robust Object Detection in Aerial Images In this paper, we present an effective Dynamic Enhancement Anchor

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

This is Unofficial Repo. Lips Don't Lie: A Generalisable and Robust Approach to Face Forgery Detection (CVPR 2021)

Lips Don't Lie: A Generalisable and Robust Approach to Face Forgery Detection This is a PyTorch implementation of the LipForensics paper. This is an U

ICRA 2021 - Robust Place Recognition using an Imaging Lidar

Robust Place Recognition using an Imaging Lidar A place recognition package using high-resolution imaging lidar. For best performance, a lidar equippe

Imbalaced Classification and Robust Semantic Segmentation

Imbalaced Classification and Robust Semantic Segmentation This repo implements two algoritms. The imbalance clibration (IC) algorithm for image classi

Official Code for AdvRush: Searching for Adversarially Robust Neural Architectures (ICCV '21)

AdvRush Official Code for AdvRush: Searching for Adversarially Robust Neural Architectures (ICCV '21) Environmental Set-up Python == 3.6.12, PyTorch =

DOP-Tuning(Domain-Oriented Prefix-tuning model)

DOP-Tuning DOP-Tuning(Domain-Oriented Prefix-tuning model)代码基于Prefix-Tuning改进. Files ├── seq2seq # Code for encoder-decoder arch

RID-Noise: Towards Robust Inverse Design under Noisy Environments

This is code of RID-Noise. Reproduce RID-Noise Results Toy tasks Please refer to the notebook ridnoise.ipynb to view experiments on three toy tasks. B