156 Repositories

Python compression-artifact-reduction Libraries

ACL'22: Structured Pruning Learns Compact and Accurate Models

☕ CoFiPruning: Structured Pruning Learns Compact and Accurate Models This repository contains the code and pruned models for our ACL'22 paper Structur

A fast hierarchical dimensionality reduction algorithm.

h-NNE: Hierarchical Nearest Neighbor Embedding A fast hierarchical dimensionality reduction algorithm. h-NNE is a general purpose dimensionality reduc

🧬 Non-linear feature reduction using Deep Autoencoders and Breast Cancer classification.

Project summary This repository contains the implementation of my bachelor degree project. The aim of the project is to apply non-linear feature reduc

A lossless neural compression framework built on top of JAX.

Kompressor Branch CI Coverage main (active) main development A neural compression framework built on top of JAX. Install setup.py assumes a compatible

Convert BART models to ONNX with quantization. 3X reduction in size, and upto 3X boost in inference speed

fast-Bart Reduction of BART model size by 3X, and boost in inference speed up to 3X BART implementation of the fastT5 library (https://github.com/Ki6a

PyTorch Implementation of the SuRP algorithm by the authors of the AISTATS 2022 paper "An Information-Theoretic Justification for Model Pruning"

PyTorch Implementation of the SuRP algorithm by the authors of the AISTATS 2022 paper "An Information-Theoretic Justification for Model Pruning".

Explore extreme compression for pre-trained language models

Code for paper "Exploring extreme parameter compression for pre-trained language models ICLR2022"

FewBit — a library for memory efficient training of large neural networks

FewBit FewBit — a library for memory efficient training of large neural networks. Its efficiency originates from storage optimizations applied to back

A test repository to build a python package and publish the package to Artifact Registry using GCB

A test repository to build a python package and publish the package to Artifact Registry using GCB. Then have the package be a dependency in a GCF function.

DimReductionClustering - Dimensionality Reduction + Clustering + Unsupervised Score Metrics

Dimensionality Reduction + Clustering + Unsupervised Score Metrics Introduction

Attempts to crack the compression puzzle.

The Compression Puzzle One lovely Friday we were faced with this nice yet intriguing programming puzzle. One shall write a program that compresses str

High-fidelity 3D Model Compression based on Key Spheres

High-fidelity 3D Model Compression based on Key Spheres This repository contains the implementation of the paper: Yuanzhan Li, Yuqi Liu, Yujie Lu, Siy

Application of K-means algorithm on a music dataset after a dimensionality reduction with PCA

PCA for dimensionality reduction combined with Kmeans Goal The Goal of this notebook is to apply a dimensionality reduction on a big dataset in order

A library for benchmarking, developing and deploying deep learning anomaly detection algorithms

A library for benchmarking, developing and deploying deep learning anomaly detection algorithms Key Features • Getting Started • Docs • License Introd

Can We Find Neurons that Cause Unrealistic Images in Deep Generative Networks?

Can We Find Neurons that Cause Unrealistic Images in Deep Generative Networks? Artifact Detection/Correction - Offcial PyTorch Implementation This rep

Fully Adaptive Bayesian Algorithm for Data Analysis (FABADA) is a new approach of noise reduction methods. In this repository is shown the package developed for this new method based on \citepaper.

Fully Adaptive Bayesian Algorithm for Data Analysis FABADA FABADA is a novel non-parametric noise reduction technique which arise from the point of vi

CVPR2021 Content-Aware GAN Compression

Content-Aware GAN Compression [ArXiv] Paper accepted to CVPR2021. @inproceedings{liu2021content, title = {Content-Aware GAN Compression}, auth

Python Machine Learning Jupyter Notebooks (ML website)

Python Machine Learning Jupyter Notebooks (ML website) Dr. Tirthajyoti Sarkar, Fremont, California (Please feel free to connect on LinkedIn here) Also

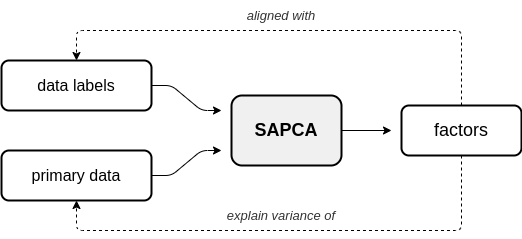

Repository for the AugmentedPCA Python package.

Overview This Python package provides implementations of Augmented Principal Component Analysis (AugmentedPCA) - a family of linear factor models that

Easy genetic ancestry predictions in Python

ezancestry Easily visualize your direct-to-consumer genetics next to 2500+ samples from the 1000 genomes project. Evaluate the performance of a custom

Interactive dimensionality reduction for large datasets

BlosSOM 🌼 BlosSOM is a graphical environment for running semi-supervised dimensionality reduction with EmbedSOM. You can use it to explore multidimen

CV backbones including GhostNet, TinyNet and TNT, developed by Huawei Noah's Ark Lab.

CV Backbones including GhostNet, TinyNet, TNT (Transformer in Transformer) developed by Huawei Noah's Ark Lab. GhostNet Code TinyNet Code TNT Code Pyr

![[CVPR 2020] GAN Compression: Efficient Architectures for Interactive Conditional GANs](https://github.com/mit-han-lab/gan-compression/raw/master/imgs/teaser.png)

[CVPR 2020] GAN Compression: Efficient Architectures for Interactive Conditional GANs

GAN Compression project | paper | videos | slides [NEW!] GAN Compression is accepted by T-PAMI! We released our T-PAMI version in the arXiv v4! [NEW!]

Image Compression GUI APP Python: PyQt5

Image Compression GUI APP Image Compression GUI APP Python: PyQt5 Use : f5 or debug or simply run it on your ids(vscode , pycham, anaconda etc.) socia

50-days-of-Statistics-for-Data-Science - This repository consist of a 50-day program

50-days-of-Statistics-for-Data-Science - This repository consist of a 50-day program. All the statistics required for the complete understanding of data science will be uploaded in this repository.

Data reduction pipeline for KOALA on the AAT.

KOALA KOALA, the Kilofibre Optical AAT Lenslet Array, is a wide-field, high efficiency, integral field unit used by the AAOmega spectrograph on the 3.

Simple, configuration-driven backup software for servers and workstations

title permalink borgmatic index.html It's your data. Keep it that way. borgmatic is simple, configuration-driven backup software for servers and works

Source code of AAAI 2022 paper "Towards End-to-End Image Compression and Analysis with Transformers".

Towards End-to-End Image Compression and Analysis with Transformers Source code of our AAAI 2022 paper "Towards End-to-End Image Compression and Analy

MMRazor: a model compression toolkit for model slimming and AutoML

Documentation: https://mmrazor.readthedocs.io/ English | 简体中文 Introduction MMRazor is a model compression toolkit for model slimming and AutoML, which

A parser of Windows Defender's DetectionHistory forensic artifact, containing substantial info about quarantined files and executables.

A parser of Windows Defender's DetectionHistory forensic artifact, containing substantial info about quarantined files and executables.

Deduplicating archiver with compression and authenticated encryption.

More screencasts: installation, advanced usage What is BorgBackup? BorgBackup (short: Borg) is a deduplicating backup program. Optionally, it supports

⛵️The official PyTorch implementation for "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing" (EMNLP 2020).

BERT-of-Theseus Code for paper "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing". BERT-of-Theseus is a new compressed BERT by progre

Useful PDF-related productivity tool.

Luftmensch 1.4.7 (Español) | 1.4.3 (English) Version 1.4.7 (Español) released in October 2021. Version 1.4.3 (English) released in September 2021. 🏮

Spatio-Temporal Entropy Model (STEM) for end-to-end leaned video compression.

Spatio-Temporal Entropy Model A Pytorch Reproduction of Spatio-Temporal Entropy Model (STEM) for end-to-end leaned video compression. More details can

Funnels: Exact maximum likelihood with dimensionality reduction.

Funnels This repository contains the code needed to reproduce the experiments from the paper: Funnels: Exact maximum likelihood with dimensionality re

Snakemake workflow for converting FASTQ files to self-contained CRAM files with maximum lossless compression.

Snakemake workflow: name A Snakemake workflow for description Usage The usage of this workflow is described in the Snakemake Workflow Catalog. If

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022

Dual Correlation Reduction Network An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022. Any

Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021

Introduction Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021 Prerequisites Python 3.8 and conda, get Conda CUDA 11

InDuDoNet+: A Model-Driven Interpretable Dual Domain Network for Metal Artifact Reduction in CT Images

InDuDoNet+: A Model-Driven Interpretable Dual Domain Network for Metal Artifact Reduction in CT Images Hong Wang, Yuexiang Li, Haimiao Zhang, Deyu Men

Network Compression via Central Filter

Network Compression via Central Filter Environments The code has been tested in the following environments: Python 3.8 PyTorch 1.8.1 cuda 10.2 torchsu

A PyTorch library and evaluation platform for end-to-end compression research

CompressAI CompressAI (compress-ay) is a PyTorch library and evaluation platform for end-to-end compression research. CompressAI currently provides: c

A library for researching neural networks compression and acceleration methods.

A library for researching neural networks compression and acceleration methods.

An Image compression simulator that uses Source Extractor and Monte Carlo methods to examine the post compressive effects different compression algorithms have.

ImageCompressionSimulation An Image compression simulator that uses Source Extractor and Monte Carlo methods to examine the post compressive effects o

Research Artifact of USENIX Security 2022 Paper: Automated Side Channel Analysis of Media Software with Manifold Learning

Automated Side Channel Analysis of Media Software with Manifold Learning Official implementation of USENIX Security 2022 paper: Automated Side Channel

Implementation of the ivis algorithm as described in the paper Structure-preserving visualisation of high dimensional single-cell datasets.

Implementation of the ivis algorithm as described in the paper Structure-preserving visualisation of high dimensional single-cell datasets.

Training Structured Neural Networks Through Manifold Identification and Variance Reduction

Training Structured Neural Networks Through Manifold Identification and Variance Reduction This repository is a pytorch implementation of the Regulari

Deep learning model for EEG artifact removal

DeepSeparator Introduction Electroencephalogram (EEG) recordings are often contaminated with artifacts. Various methods have been developed to elimina

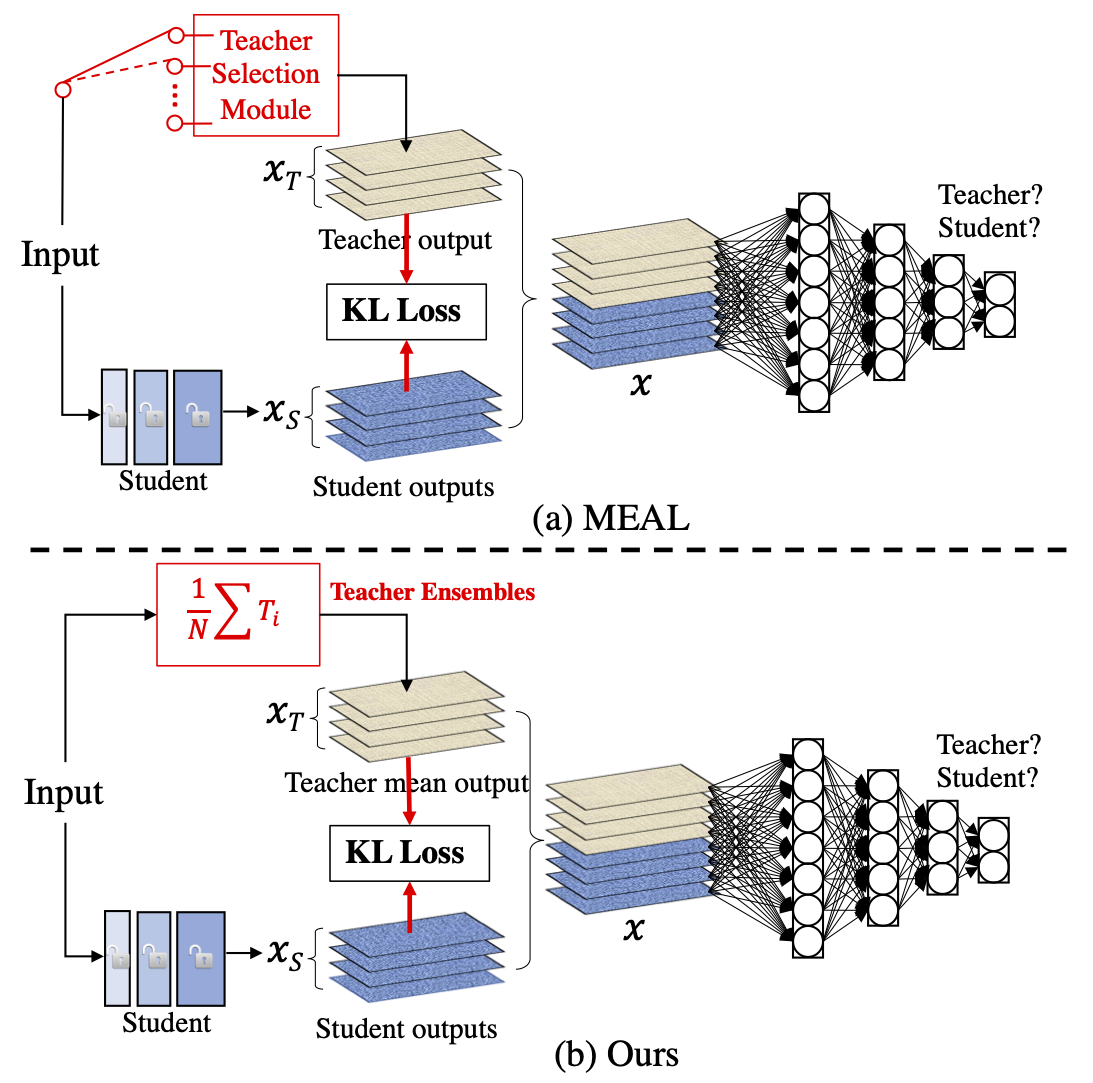

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

A comprehensive set of fairness metrics for datasets and machine learning models, explanations for these metrics, and algorithms to mitigate bias in datasets and models.

AI Fairness 360 (AIF360) The AI Fairness 360 toolkit is an extensible open-source library containg techniques developed by the research community to h

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

Repo for EMNLP 2021 paper "Beyond Preserved Accuracy: Evaluating Loyalty and Robustness of BERT Compression"

beyond-preserved-accuracy Repo for EMNLP 2021 paper "Beyond Preserved Accuracy: Evaluating Loyalty and Robustness of BERT Compression" How to implemen

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

A code implementation of AC-GC: Activation Compression with Guaranteed Convergence, in NeurIPS 2021.

Code For AC-GC: Lossy Activation Compression with Guaranteed Convergence This code is intended to be used as a supplemental material for submission to

Pytorch implementation for Patient Knowledge Distillation for BERT Model Compression

Patient Knowledge Distillation for BERT Model Compression Knowledge distillation for BERT model Installation Run command below to install the environm

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

osqueryIR is an artifact collection tool for Linux systems.

osqueryIR osqueryIR is an artifact collection tool for Linux systems. It provides the following capabilities: Execute osquery SQL queries Collect file

FasterAI: A library to make smaller and faster models with FastAI.

Fasterai fasterai is a library created to make neural network smaller and faster. It essentially relies on common compression techniques for networks

MCML is a toolkit for semi-supervised dimensionality reduction and quantitative analysis of Multi-Class, Multi-Label data

MCML is a toolkit for semi-supervised dimensionality reduction and quantitative analysis of Multi-Class, Multi-Label data. We demonstrate its use

TriMap: Large-scale Dimensionality Reduction Using Triplets

TriMap TriMap is a dimensionality reduction method that uses triplet constraints to form a low-dimensional embedding of a set of points. The triplet c

A Python 3 package for state-of-the-art statistical dimension reduction methods

direpack: a Python 3 library for state-of-the-art statistical dimension reduction techniques This package delivers a scikit-learn compatible Python 3

Quantization library for PyTorch. Support low-precision and mixed-precision quantization, with hardware implementation through TVM.

HAWQ: Hessian AWare Quantization HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform

DA2Lite is an automated model compression toolkit for PyTorch.

DA2Lite (Deep Architecture to Lite) is a toolkit to compress and accelerate deep network models. ⭐ Star us on GitHub — it helps!! Frameworks & Librari

TensorFlow implementation of Barlow Twins (Barlow Twins: Self-Supervised Learning via Redundancy Reduction)

Barlow-Twins-TF This repository implements Barlow Twins (Barlow Twins: Self-Supervised Learning via Redundancy Reduction) in TensorFlow and demonstrat

ZipFly is a zip archive generator based on zipfile.py

ZipFly is a zip archive generator based on zipfile.py. It was created by Buzon.io to generate very large ZIP archives for immediate sending out to clients, or for writing large ZIP archives without memory inflation.

IntraQ: Learning Synthetic Images with Intra-Class Heterogeneity for Zero-Shot Network Quantization

IntraQ: Learning Synthetic Images with Intra-Class Heterogeneity for Zero-Shot Network Quantization paper Requirements Python = 3.7.10 Pytorch == 1.7

Reference PyTorch implementation of "End-to-end optimized image compression with competition of prior distributions"

PyTorch reference implementation of "End-to-end optimized image compression with competition of prior distributions" by Benoit Brummer and Christophe

Code for IntraQ, PyTorch implementation of our paper under review

IntraQ: Learning Synthetic Images with Intra-Class Heterogeneity for Zero-Shot Network Quantization paper Requirements Python = 3.7.10 Pytorch == 1.7

MiniSom is a minimalistic implementation of the Self Organizing Maps

MiniSom Self Organizing Maps MiniSom is a minimalistic and Numpy based implementation of the Self Organizing Maps (SOM). SOM is a type of Artificial N

🛠️ Tools for Transformers compression using Lightning ⚡

Bert-squeeze is a repository aiming to provide code to reduce the size of Transformer-based models or decrease their latency at inference time.

Iterative Training: Finding Binary Weight Deep Neural Networks with Layer Binarization

Iterative Training: Finding Binary Weight Deep Neural Networks with Layer Binarization This repository contains the source code for the paper (link wi

An implementation of Group Fisher Pruning for Practical Network Compression based on pytorch and mmcv

FisherPruning-Pytorch An implementation of Group Fisher Pruning for Practical Network Compression based on pytorch and mmcv Main Functions Pruning f

STBP is a way to train SNN with datasets by Backward propagation.

Spiking neural network (SNN), compared with depth neural network (DNN), has faster processing speed, lower energy consumption and more biological interpretability, which is expected to approach Strong AI.

An open source AutoML toolkit for automate machine learning lifecycle, including feature engineering, neural architecture search, model compression and hyper-parameter tuning.

NNI Doc | 简体中文 NNI (Neural Network Intelligence) is a lightweight but powerful toolkit to help users automate Feature Engineering, Neural Architecture

An efficient and easy-to-use deep learning model compression framework

TinyNeuralNetwork 简体中文 TinyNeuralNetwork is an efficient and easy-to-use deep learning model compression framework, which contains features like neura

Back to Basics: Efficient Network Compression via IMP

Back to Basics: Efficient Network Compression via IMP Authors: Max Zimmer, Christoph Spiegel, Sebastian Pokutta This repository contains the code to r

Python library for analysis of time series data including dimensionality reduction, clustering, and Markov model estimation

deeptime Releases: Installation via conda recommended. conda install -c conda-forge deeptime pip install deeptime Documentation: deeptime-ml.github.io

SEC'21: Sparse Bitmap Compression for Memory-Efficient Training onthe Edge

Training Deep Learning Models on The Edge Training on the Edge enables continuous learning from new data for deployed neural networks on memory-constr

Generic image compressor for machine learning. Pytorch code for our paper "Lossy compression for lossless prediction".

Lossy Compression for Lossless Prediction Using: Training: This repostiory contains our implementation of the paper: Lossy Compression for Lossless Pr

Pytorch implementation of our paper accepted by NeurIPS 2021 -- Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme

Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme (NeurIPS2021) (Link) Overview Prerequisites Linu

Code for approximate graph reduction techniques for cardinality-based DSFM, from paper

SparseCard Code for approximate graph reduction techniques for cardinality-based DSFM, from paper "Approximate Decomposable Submodular Function Minimi

Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme (NeurIPS2021)

Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme (NeurIPS2021) Overview Prerequisites Linux Pytho

YOLOv5 Series Multi-backbone, Pruning and quantization Compression Tool Box.

YOLOv5-Compression Update News Requirements 环境安装 pip install -r requirements.txt Evaluation metric Visdrone Model mAP mAP@50 Parameters(M) GFLOPs FPS@

A Pytorch Implementation of a continuously rate adjustable learned image compression framework.

GainedVAE A Pytorch Implementation of a continuously rate adjustable learned image compression framework, Gained Variational Autoencoder(GainedVAE). N

Open-source Laplacian Eigenmaps for dimensionality reduction of large data in python.

Fast Laplacian Eigenmaps in python Open-source Laplacian Eigenmaps for dimensionality reduction of large data in python. Comes with an wrapper for NMS

CPSPEC is an astrophysical data reduction software for timing

CPSPEC manual Introduction CPSPEC is an astrophysical data reduction software for timing. Various timing properties, such as power spectra and cross s

10x faster matrix and vector operations

Bolt is an algorithm for compressing vectors of real-valued data and running mathematical operations directly on the compressed representations. If yo

My own Unicode compression algorithm

Zee Code ZCode is a custom compression algorithm I originally developed for a competition held for the Spring 2019 Datastructures and Algorithms cours

This repository contains the code for the paper in EMNLP 2021: "HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression".

HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression This repository contains the code for the paper in EM

Patch-Based Deep Autoencoder for Point Cloud Geometry Compression

Patch-Based Deep Autoencoder for Point Cloud Geometry Compression Overview The ever-increasing 3D application makes the point cloud compression unprec

TLDR: Twin Learning for Dimensionality Reduction

TLDR (Twin Learning for Dimensionality Reduction) is an unsupervised dimensionality reduction method that combines neighborhood embedding learning with the simplicity and effectiveness of recent self-supervised learning losses.

Official implementation of "Variable-Rate Deep Image Compression through Spatially-Adaptive Feature Transform", ICCV 2021

Variable-Rate Deep Image Compression through Spatially-Adaptive Feature Transform This repository is the implementation of "Variable-Rate Deep Image C

Towards Flexible Blind JPEG Artifacts Removal (FBCNN, ICCV 2021)

Towards Flexible Blind JPEG Artifacts Removal (FBCNN, ICCV 2021) Jiaxi Jiang, Kai Zhang, Radu Timofte Computer Vision Lab, ETH Zurich, Switzerland 🔥

Fast batch image resizer and rotator for JPEG and PNG images.

imgp is a command line image resizer and rotator for JPEG and PNG images.

Research Artifact of USENIX Security 2022 Paper: Automated Side Channel Analysis of Media Software with Manifold Learning

Manifold-SCA Research Artifact of USENIX Security 2022 Paper: Automated Side Channel Analysis of Media Software with Manifold Learning The repo is org

A Closer Look at Structured Pruning for Neural Network Compression

A Closer Look at Structured Pruning for Neural Network Compression Code used to reproduce experiments in https://arxiv.org/abs/1810.04622. To prune, w

Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and Layer Input Masking"

model_based_energy_constrained_compression Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and

Distiller is an open-source Python package for neural network compression research.

Wiki and tutorials | Documentation | Getting Started | Algorithms | Design | FAQ Distiller is an open-source Python package for neural network compres