99 Repositories

Python lzw-compression Libraries

ACL'22: Structured Pruning Learns Compact and Accurate Models

☕ CoFiPruning: Structured Pruning Learns Compact and Accurate Models This repository contains the code and pruned models for our ACL'22 paper Structur

A lossless neural compression framework built on top of JAX.

Kompressor Branch CI Coverage main (active) main development A neural compression framework built on top of JAX. Install setup.py assumes a compatible

PyTorch Implementation of the SuRP algorithm by the authors of the AISTATS 2022 paper "An Information-Theoretic Justification for Model Pruning"

PyTorch Implementation of the SuRP algorithm by the authors of the AISTATS 2022 paper "An Information-Theoretic Justification for Model Pruning".

Explore extreme compression for pre-trained language models

Code for paper "Exploring extreme parameter compression for pre-trained language models ICLR2022"

FewBit — a library for memory efficient training of large neural networks

FewBit FewBit — a library for memory efficient training of large neural networks. Its efficiency originates from storage optimizations applied to back

Attempts to crack the compression puzzle.

The Compression Puzzle One lovely Friday we were faced with this nice yet intriguing programming puzzle. One shall write a program that compresses str

High-fidelity 3D Model Compression based on Key Spheres

High-fidelity 3D Model Compression based on Key Spheres This repository contains the implementation of the paper: Yuanzhan Li, Yuqi Liu, Yujie Lu, Siy

A library for benchmarking, developing and deploying deep learning anomaly detection algorithms

A library for benchmarking, developing and deploying deep learning anomaly detection algorithms Key Features • Getting Started • Docs • License Introd

CVPR2021 Content-Aware GAN Compression

Content-Aware GAN Compression [ArXiv] Paper accepted to CVPR2021. @inproceedings{liu2021content, title = {Content-Aware GAN Compression}, auth

CV backbones including GhostNet, TinyNet and TNT, developed by Huawei Noah's Ark Lab.

CV Backbones including GhostNet, TinyNet, TNT (Transformer in Transformer) developed by Huawei Noah's Ark Lab. GhostNet Code TinyNet Code TNT Code Pyr

![[CVPR 2020] GAN Compression: Efficient Architectures for Interactive Conditional GANs](https://github.com/mit-han-lab/gan-compression/raw/master/imgs/teaser.png)

[CVPR 2020] GAN Compression: Efficient Architectures for Interactive Conditional GANs

GAN Compression project | paper | videos | slides [NEW!] GAN Compression is accepted by T-PAMI! We released our T-PAMI version in the arXiv v4! [NEW!]

Image Compression GUI APP Python: PyQt5

Image Compression GUI APP Image Compression GUI APP Python: PyQt5 Use : f5 or debug or simply run it on your ids(vscode , pycham, anaconda etc.) socia

Simple, configuration-driven backup software for servers and workstations

title permalink borgmatic index.html It's your data. Keep it that way. borgmatic is simple, configuration-driven backup software for servers and works

Source code of AAAI 2022 paper "Towards End-to-End Image Compression and Analysis with Transformers".

Towards End-to-End Image Compression and Analysis with Transformers Source code of our AAAI 2022 paper "Towards End-to-End Image Compression and Analy

MMRazor: a model compression toolkit for model slimming and AutoML

Documentation: https://mmrazor.readthedocs.io/ English | 简体中文 Introduction MMRazor is a model compression toolkit for model slimming and AutoML, which

Deduplicating archiver with compression and authenticated encryption.

More screencasts: installation, advanced usage What is BorgBackup? BorgBackup (short: Borg) is a deduplicating backup program. Optionally, it supports

⛵️The official PyTorch implementation for "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing" (EMNLP 2020).

BERT-of-Theseus Code for paper "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing". BERT-of-Theseus is a new compressed BERT by progre

Useful PDF-related productivity tool.

Luftmensch 1.4.7 (Español) | 1.4.3 (English) Version 1.4.7 (Español) released in October 2021. Version 1.4.3 (English) released in September 2021. 🏮

Spatio-Temporal Entropy Model (STEM) for end-to-end leaned video compression.

Spatio-Temporal Entropy Model A Pytorch Reproduction of Spatio-Temporal Entropy Model (STEM) for end-to-end leaned video compression. More details can

Snakemake workflow for converting FASTQ files to self-contained CRAM files with maximum lossless compression.

Snakemake workflow: name A Snakemake workflow for description Usage The usage of this workflow is described in the Snakemake Workflow Catalog. If

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021

Introduction Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021 Prerequisites Python 3.8 and conda, get Conda CUDA 11

Network Compression via Central Filter

Network Compression via Central Filter Environments The code has been tested in the following environments: Python 3.8 PyTorch 1.8.1 cuda 10.2 torchsu

A PyTorch library and evaluation platform for end-to-end compression research

CompressAI CompressAI (compress-ay) is a PyTorch library and evaluation platform for end-to-end compression research. CompressAI currently provides: c

A library for researching neural networks compression and acceleration methods.

A library for researching neural networks compression and acceleration methods.

An Image compression simulator that uses Source Extractor and Monte Carlo methods to examine the post compressive effects different compression algorithms have.

ImageCompressionSimulation An Image compression simulator that uses Source Extractor and Monte Carlo methods to examine the post compressive effects o

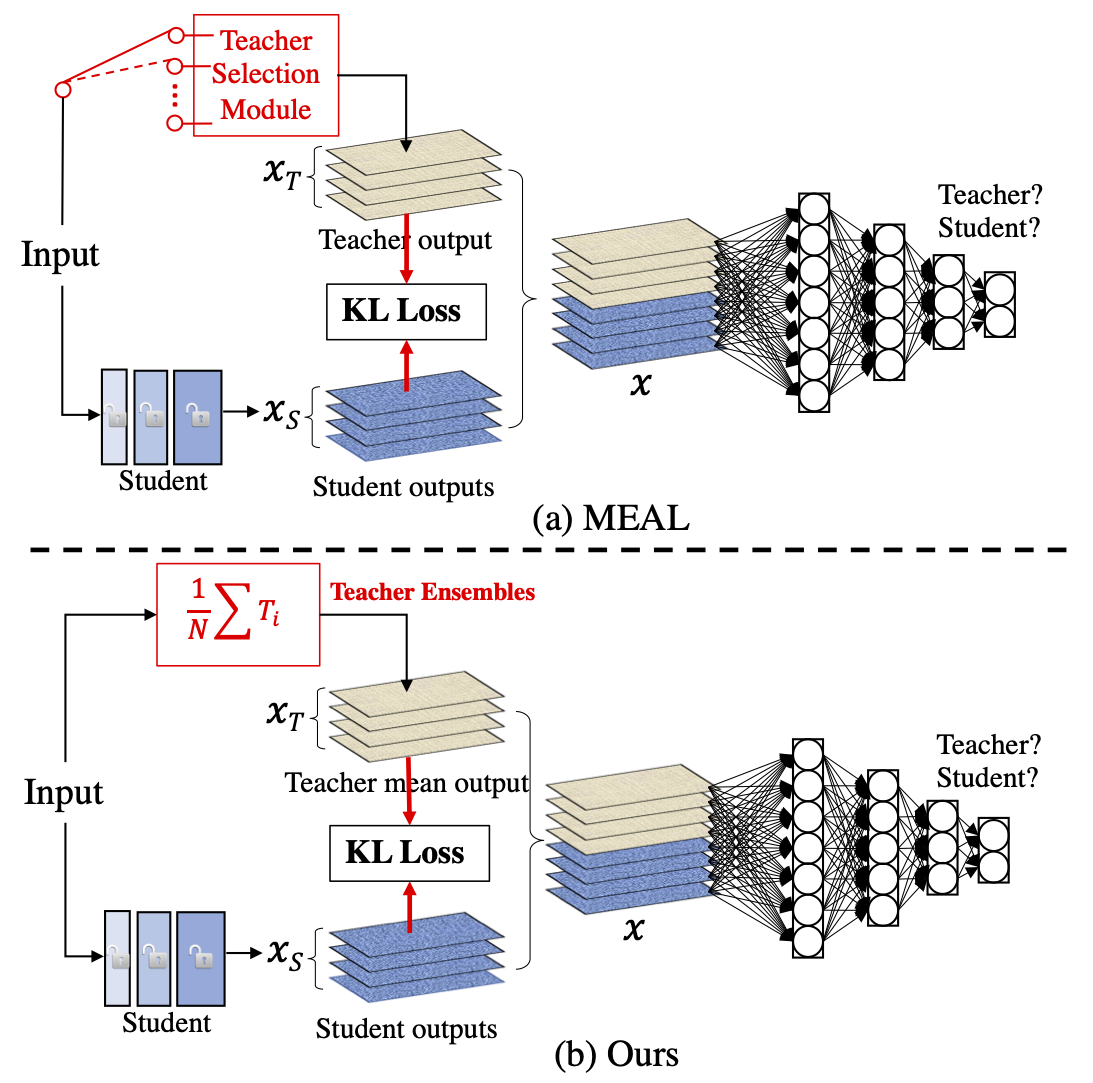

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

Repo for EMNLP 2021 paper "Beyond Preserved Accuracy: Evaluating Loyalty and Robustness of BERT Compression"

beyond-preserved-accuracy Repo for EMNLP 2021 paper "Beyond Preserved Accuracy: Evaluating Loyalty and Robustness of BERT Compression" How to implemen

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

A code implementation of AC-GC: Activation Compression with Guaranteed Convergence, in NeurIPS 2021.

Code For AC-GC: Lossy Activation Compression with Guaranteed Convergence This code is intended to be used as a supplemental material for submission to

Pytorch implementation for Patient Knowledge Distillation for BERT Model Compression

Patient Knowledge Distillation for BERT Model Compression Knowledge distillation for BERT model Installation Run command below to install the environm

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

FasterAI: A library to make smaller and faster models with FastAI.

Fasterai fasterai is a library created to make neural network smaller and faster. It essentially relies on common compression techniques for networks

Quantization library for PyTorch. Support low-precision and mixed-precision quantization, with hardware implementation through TVM.

HAWQ: Hessian AWare Quantization HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform

DA2Lite is an automated model compression toolkit for PyTorch.

DA2Lite (Deep Architecture to Lite) is a toolkit to compress and accelerate deep network models. ⭐ Star us on GitHub — it helps!! Frameworks & Librari

ZipFly is a zip archive generator based on zipfile.py

ZipFly is a zip archive generator based on zipfile.py. It was created by Buzon.io to generate very large ZIP archives for immediate sending out to clients, or for writing large ZIP archives without memory inflation.

IntraQ: Learning Synthetic Images with Intra-Class Heterogeneity for Zero-Shot Network Quantization

IntraQ: Learning Synthetic Images with Intra-Class Heterogeneity for Zero-Shot Network Quantization paper Requirements Python = 3.7.10 Pytorch == 1.7

Reference PyTorch implementation of "End-to-end optimized image compression with competition of prior distributions"

PyTorch reference implementation of "End-to-end optimized image compression with competition of prior distributions" by Benoit Brummer and Christophe

Code for IntraQ, PyTorch implementation of our paper under review

IntraQ: Learning Synthetic Images with Intra-Class Heterogeneity for Zero-Shot Network Quantization paper Requirements Python = 3.7.10 Pytorch == 1.7

🛠️ Tools for Transformers compression using Lightning ⚡

Bert-squeeze is a repository aiming to provide code to reduce the size of Transformer-based models or decrease their latency at inference time.

Iterative Training: Finding Binary Weight Deep Neural Networks with Layer Binarization

Iterative Training: Finding Binary Weight Deep Neural Networks with Layer Binarization This repository contains the source code for the paper (link wi

An implementation of Group Fisher Pruning for Practical Network Compression based on pytorch and mmcv

FisherPruning-Pytorch An implementation of Group Fisher Pruning for Practical Network Compression based on pytorch and mmcv Main Functions Pruning f

STBP is a way to train SNN with datasets by Backward propagation.

Spiking neural network (SNN), compared with depth neural network (DNN), has faster processing speed, lower energy consumption and more biological interpretability, which is expected to approach Strong AI.

An open source AutoML toolkit for automate machine learning lifecycle, including feature engineering, neural architecture search, model compression and hyper-parameter tuning.

NNI Doc | 简体中文 NNI (Neural Network Intelligence) is a lightweight but powerful toolkit to help users automate Feature Engineering, Neural Architecture

An efficient and easy-to-use deep learning model compression framework

TinyNeuralNetwork 简体中文 TinyNeuralNetwork is an efficient and easy-to-use deep learning model compression framework, which contains features like neura

Back to Basics: Efficient Network Compression via IMP

Back to Basics: Efficient Network Compression via IMP Authors: Max Zimmer, Christoph Spiegel, Sebastian Pokutta This repository contains the code to r

SEC'21: Sparse Bitmap Compression for Memory-Efficient Training onthe Edge

Training Deep Learning Models on The Edge Training on the Edge enables continuous learning from new data for deployed neural networks on memory-constr

Generic image compressor for machine learning. Pytorch code for our paper "Lossy compression for lossless prediction".

Lossy Compression for Lossless Prediction Using: Training: This repostiory contains our implementation of the paper: Lossy Compression for Lossless Pr

Pytorch implementation of our paper accepted by NeurIPS 2021 -- Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme

Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme (NeurIPS2021) (Link) Overview Prerequisites Linu

Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme (NeurIPS2021)

Revisiting Discriminator in GAN Compression: A Generator-discriminator Cooperative Compression Scheme (NeurIPS2021) Overview Prerequisites Linux Pytho

YOLOv5 Series Multi-backbone, Pruning and quantization Compression Tool Box.

YOLOv5-Compression Update News Requirements 环境安装 pip install -r requirements.txt Evaluation metric Visdrone Model mAP mAP@50 Parameters(M) GFLOPs FPS@

A Pytorch Implementation of a continuously rate adjustable learned image compression framework.

GainedVAE A Pytorch Implementation of a continuously rate adjustable learned image compression framework, Gained Variational Autoencoder(GainedVAE). N

10x faster matrix and vector operations

Bolt is an algorithm for compressing vectors of real-valued data and running mathematical operations directly on the compressed representations. If yo

My own Unicode compression algorithm

Zee Code ZCode is a custom compression algorithm I originally developed for a competition held for the Spring 2019 Datastructures and Algorithms cours

This repository contains the code for the paper in EMNLP 2021: "HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression".

HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression This repository contains the code for the paper in EM

Patch-Based Deep Autoencoder for Point Cloud Geometry Compression

Patch-Based Deep Autoencoder for Point Cloud Geometry Compression Overview The ever-increasing 3D application makes the point cloud compression unprec

Official implementation of "Variable-Rate Deep Image Compression through Spatially-Adaptive Feature Transform", ICCV 2021

Variable-Rate Deep Image Compression through Spatially-Adaptive Feature Transform This repository is the implementation of "Variable-Rate Deep Image C

Towards Flexible Blind JPEG Artifacts Removal (FBCNN, ICCV 2021)

Towards Flexible Blind JPEG Artifacts Removal (FBCNN, ICCV 2021) Jiaxi Jiang, Kai Zhang, Radu Timofte Computer Vision Lab, ETH Zurich, Switzerland 🔥

Fast batch image resizer and rotator for JPEG and PNG images.

imgp is a command line image resizer and rotator for JPEG and PNG images.

A Closer Look at Structured Pruning for Neural Network Compression

A Closer Look at Structured Pruning for Neural Network Compression Code used to reproduce experiments in https://arxiv.org/abs/1810.04622. To prune, w

Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and Layer Input Masking"

model_based_energy_constrained_compression Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and

Distiller is an open-source Python package for neural network compression research.

Wiki and tutorials | Documentation | Getting Started | Algorithms | Design | FAQ Distiller is an open-source Python package for neural network compres

A tutorial on "Bayesian Compression for Deep Learning" published at NIPS (2017).

Code release for "Bayesian Compression for Deep Learning" In "Bayesian Compression for Deep Learning" we adopt a Bayesian view for the compression of

Towards Flexible Blind JPEG Artifacts Removal (FBCNN, ICCV 2021)

Towards Flexible Blind JPEG Artifacts Removal (FBCNN, ICCV 2021)

Code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

This repository contains the code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

Must-read papers on improving efficiency for pre-trained language models.

Must-read papers on improving efficiency for pre-trained language models.

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

Implementation of "A Deep Learning Loss Function based on Auditory Power Compression for Speech Enhancement" by pytorch

This repository is used to suspend the results of our paper "A Deep Learning Loss Function based on Auditory Power Compression for Speech Enhancement"

![[ACMMM 2021 Oral] Enhanced Invertible Encoding for Learned Image Compression](https://github.com/xyq7/InvCompress/raw/main/figures/overview.png)

[ACMMM 2021 Oral] Enhanced Invertible Encoding for Learned Image Compression

InvCompress Official Pytorch Implementation for "Enhanced Invertible Encoding for Learned Image Compression", ACMMM 2021 (Oral) Figure: Our framework

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

Online Multi-Granularity Distillation for GAN Compression (ICCV2021)

Online Multi-Granularity Distillation for GAN Compression (ICCV2021) This repository contains the pytorch codes and trained models described in the IC

Easy compression and extraction for any compression or archival format.

Tzar: Tar, Zip, Anything Really Easy compression and extraction for any compression or archival format. Usage/Examples tzar compress large-dir compres

Python implementation of Gorilla time series compression

Gorilla Time Series Compression This is an implementation (with some adaptations) of the compression algorithm described in section 4.1 (Time series c

This is the pytorch implementation for the paper: *Learning Accurate Performance Predictors for Ultrafast Automated Model Compression*, which is in submission to TPAMI

SeerNet This is the pytorch implementation for the paper: Learning Accurate Performance Predictors for Ultrafast Automated Model Compression, which is

Learned Token Pruning for Transformers

LTP: Learned Token Pruning for Transformers Check our paper for more details. Installation We follow the same installation procedure as the original H

![[IJCAI-2021] A benchmark of data-free knowledge distillation from paper](https://github.com/zju-vipa/DataFree/raw/main/assets/cmi.png)

[IJCAI-2021] A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation"

DataFree A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation" Authors: Gongfa

NeuralCompression is a Python repository dedicated to research of neural networks that compress data

NeuralCompression is a Python repository dedicated to research of neural networks that compress data. The repository includes tools such as JAX-based entropy coders, image compression models, video compression models, and metrics for image and video evaluation.

A Closer Look at Structured Pruning for Neural Network Compression

A Closer Look at Structured Pruning for Neural Network Compression Code used to reproduce experiments in https://arxiv.org/abs/1810.04622. To prune, w

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Group Fisher Pruning for Practical Network Compression(ICML2021)

Group Fisher Pruning for Practical Network Compression (ICML2021) By Liyang Liu*, Shilong Zhang*, Zhanghui Kuang, Jing-Hao Xue, Aojun Zhou, Xinjiang W

![[CVPR 2021] Teachers Do More Than Teach: Compressing Image-to-Image Models (CAT)](https://github.com/snap-research/CAT/raw/main/images/comparison.png)

[CVPR 2021] Teachers Do More Than Teach: Compressing Image-to-Image Models (CAT)

CAT arXiv Pytorch implementation of our method for compressing image-to-image models. Teachers Do More Than Teach: Compressing Image-to-Image Models Q

The official implementation of You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient.

You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient (paper) @misc{zhang2021compress,

Efficient Lottery Ticket Finding: Less Data is More

The lottery ticket hypothesis (LTH) reveals the existence of winning tickets (sparse but critical subnetworks) for dense networks, that can be trained in isolation from random initialization to match the latter’s accuracies.

Learned image compression

Overview Pytorch code of our recent work A Unified End-to-End Framework for Efficient Deep Image Compression. We first release the code for Variationa

(L2ID@CVPR2021) Boosting Co-teaching with Compression Regularization for Label Noise

Nested-Co-teaching (L2ID@CVPR2021) Pytorch implementation of paper "Boosting Co-teaching with Compression Regularization for Label Noise" [PDF] If our

Deep Compression for Dense Point Cloud Maps.

DEPOCO This repository implements the algorithms described in our paper Deep Compression for Dense Point Cloud Maps. How to get started (using Docker)

I-BERT: Integer-only BERT Quantization

I-BERT: Integer-only BERT Quantization HuggingFace Implementation I-BERT is also available in the master branch of HuggingFace! Visit the following li

Pytorch implementation of COIN, a framework for compression with implicit neural representations 🌸

COIN 🌟 This repo contains a Pytorch implementation of COIN: COmpression with Implicit Neural representations, including code to reproduce all experim

Dynamic Slimmable Network (CVPR 2021, Oral)

Dynamic Slimmable Network (DS-Net) This repository contains PyTorch code of our paper: Dynamic Slimmable Network (CVPR 2021 Oral). Architecture of DS-

Code of paper "CDFI: Compression-Driven Network Design for Frame Interpolation", CVPR 2021

CDFI (Compression-Driven-Frame-Interpolation) [Paper] (Coming soon...) | [arXiv] Tianyu Ding*, Luming Liang*, Zhihui Zhu, Ilya Zharkov IEEE Conference

UMEC: Unified Model and Embedding Compression for Efficient Recommendation Systems

[ICLR 2021] "UMEC: Unified Model and Embedding Compression for Efficient Recommendation Systems" by Jiayi Shen, Haotao Wang*, Shupeng Gui*, Jianchao Tan, Zhangyang Wang, and Ji Liu

A curated list of neural network pruning resources.

A curated list of neural network pruning and related resources. Inspired by awesome-deep-vision, awesome-adversarial-machine-learning, awesome-deep-learning-papers and Awesome-NAS.

This project is the official implementation of our accepted ICLR 2021 paper BiPointNet: Binary Neural Network for Point Clouds.

BiPointNet: Binary Neural Network for Point Clouds Created by Haotong Qin, Zhongang Cai, Mingyuan Zhang, Yifu Ding, Haiyu Zhao, Shuai Yi, Xianglong Li

BitPack is a practical tool to efficiently save ultra-low precision/mixed-precision quantized models.

BitPack is a practical tool that can efficiently save quantized neural network models with mixed bitwidth.

Easy, fast, effective, and automatic g-code compression!

Getting to the meat of g-code. Easy, fast, effective, and automatic g-code compression! MeatPack nearly doubles the effective data rate of a standard