102 Repositories

Python gesture-interaction Libraries

Gesture Volume Control v.2

Gesture volume control v.2 In this project I am going to learn how to use Gesture Control to change the volume of a computer. I first look into hand t

TigerLily: Finding drug interactions in silico with the Graph.

Drug Interaction Prediction with Tigerlily Documentation | Example Notebook | Youtube Video | Project Report Tigerlily is a TigerGraph based system de

Code for Ditto: Building Digital Twins of Articulated Objects from Interaction

Ditto: Building Digital Twins of Articulated Objects from Interaction Zhenyu Jiang, Cheng-Chun Hsu, Yuke Zhu CVPR 2022, Oral Project | arxiv News 2022

Code for our CVPR 2022 Paper "GEN-VLKT: Simplify Association and Enhance Interaction Understanding for HOI Detection"

GEN-VLKT Code for our CVPR 2022 paper "GEN-VLKT: Simplify Association and Enhance Interaction Understanding for HOI Detection". Contributed by Yue Lia

![[arXiv22] Disentangled Representation Learning for Text-Video Retrieval](https://github.com/foolwood/DRL/raw/main/demo/pipeline.png)

[arXiv22] Disentangled Representation Learning for Text-Video Retrieval

Disentangled Representation Learning for Text-Video Retrieval This is a PyTorch implementation of the paper Disentangled Representation Learning for T

Gesture recognition on Event Data

Event based Gesture Recognition Gesture recognition on Event Data usually involv

Detecting Human-Object Interactions with Object-Guided Cross-Modal Calibrated Semantics

[AAAI2022] Detecting Human-Object Interactions with Object-Guided Cross-Modal Calibrated Semantics Overall pipeline of OCN. Paper Link: [arXiv] [AAAI

Spectral decomposition for characterizing long-range interaction profiles in Hi-C maps

Inspectral Spectral decomposition for characterizing long-range interaction prof

RDMAss - A Python Discord bot creating an interaction with RDM API

RDMAss A Python Discord bot creating an interaction with RDM API. Features Assig

Codes and models for the paper "Learning Unknown from Correlations: Graph Neural Network for Inter-novel-protein Interaction Prediction".

GNN_PPI Codes and models for the paper "Learning Unknown from Correlations: Graph Neural Network for Inter-novel-protein Interaction Prediction". Lear

Hand gesture recognition model that can be used as a remote control for a smart tv.

Gesture_recognition The training data consists of a few hundred videos categorised into one of the five classes. Each video (typically 2-3 seconds lon

Smart Tech Automation Remote via Kinematics Gesture control for IoT devices

STARK Smart Tech Automation Remote via Kinematics Gesture control for IoT devices View Demo · Report Bug · Request Feature Table of Contents About The

OntoProtein: Protein Pretraining With Ontology Embedding

OntoProtein This is the implement of the paper "OntoProtein: Protein Pretraining With Ontology Embedding". OntoProtein is an effective method that mak

PyTorch implementation of the ideas presented in the paper Interaction Grounded Learning (IGL)

Interaction Grounded Learning This repository contains a simple PyTorch implementation of the ideas presented in the paper Interaction Grounded Learni

Full Transformer Framework for Robust Point Cloud Registration with Deep Information Interaction

Full Transformer Framework for Robust Point Cloud Registration with Deep Information Interaction. arxiv This repository contains python scripts for tr

A CNN model to detect hand gestures.

Software Used python - programming language used, tested on v3.8 miniconda - for managing virtual environment Libraries Used opencv - pip install open

Human pose estimation from video plays a critical role in various applications such as quantifying physical exercises, sign language recognition, and full-body gesture control.

Pose Detection Project Description: Human pose estimation from video plays a critical role in various applications such as quantifying physical exerci

As a part of the HAKE project, includes the reproduced SOTA models and the corresponding HAKE-enhanced versions (CVPR2020).

HAKE-Action HAKE-Action (TensorFlow) is a project to open the SOTA action understanding studies based on our Human Activity Knowledge Engine. It inclu

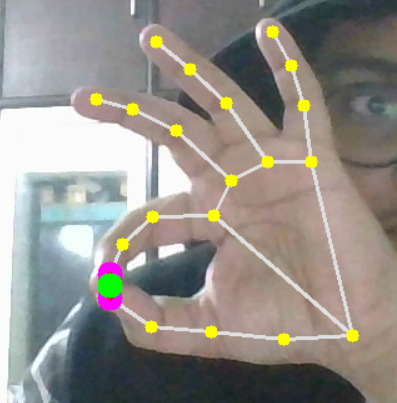

Hand Gesture Volume Control is AIML based project which uses image processing to control the volume of your Computer.

Hand Gesture Volume Control Modules There are basically three modules Handtracking Program Handtracking Module Volume Control Program Handtracking Pro

This repository is for our paper Exploiting Scene Graphs for Human-Object Interaction Detection accepted by ICCV 2021.

SG2HOI This repository is for our paper Exploiting Scene Graphs for Human-Object Interaction Detection accepted by ICCV 2021. Installation Pytorch 1.7

The official code for the ICCV-2021 paper "Speech Drives Templates: Co-Speech Gesture Synthesis with Learned Templates".

SpeechDrivesTemplates The official repo for the ICCV-2021 paper "Speech Drives Templates: Co-Speech Gesture Synthesis with Learned Templates". [arxiv

GazeScroller - Using Facial Movements to perform Hands-free Gesture on the system

GazeScroller Using Facial Movements to perform Hands-free Gesture on the system

AsymmetricGAN - Dual Generator Generative Adversarial Networks for Multi-Domain Image-to-Image Translation

AsymmetricGAN for Image-to-Image Translation AsymmetricGAN Framework for Multi-Domain Image-to-Image Translation AsymmetricGAN Framework for Hand Gest

A web-based analysis toolkit for the System Usability Scale providing calculation, plotting, interpretation and contextualization utility

System Usability Scale Analysis Toolkit The System Usability Scale (SUS) Analysis Toolkit is a web-based python application that provides a compilatio

GestureSSD CBAM - A gesture recognition web system based on SSD and CBAM, using pytorch, flask and node.js

GestureSSD_CBAM A gesture recognition web system based on SSD and CBAM, using pytorch, flask and node.js SSD implementation is based on https://github

Gesture-Volume-Control - This Python program can adjust the system's volume by using hand gestures

Gesture-Volume-Control This Python program can adjust the system's volume by usi

NiceGUI is an easy to use, Python-based UI framework, which renderes to the web browser.

NiceGUI NiceGUI is an easy to use, Python-based UI framework, which renderes to the web browser. You can create buttons, dialogs, markdown, 3D scences

A discord.py extension for sending, receiving and handling ui interactions in discord

discord-ui A discord.py extension for using discord ui/interaction features pip package ▪ read the docs ▪ examples Introduction This is a discord.py u

Virtual hand gesture mouse using a webcam

NonMouse 日本語のREADMEはこちら This is an application that allows you to use your hand itself as a mouse. The program uses a web camera to recognize your han

✌️Using this you can control your PC/Laptop volume by Hand Gestures created with Python.

Hand Gesture Volume Controller ✋ Hand recognition 👆 Finger recognition 🔊 you can decrease and increase volume Demo Code Firstly I have created a Mod

A PyTorch based deep learning library for drug pair scoring.

Documentation | External Resources | Datasets | Examples ChemicalX is a deep learning library for drug-drug interaction, polypharmacy side effect and

An experimentation and research platform to investigate the interaction of automated agents in an abstract simulated network environments.

CyberBattleSim April 8th, 2021: See the announcement on the Microsoft Security Blog. CyberBattleSim is an experimentation research platform to investi

Real-Time and Accurate Full-Body Multi-Person Pose Estimation&Tracking System

News! Aug 2020: v0.4.0 version of AlphaPose is released! Stronger tracking! Include whole body(face,hand,foot) keypoints! Colab now available. Dec 201

Codes for building and training the neural network model described in Domain-informed neural networks for interaction localization within astroparticle experiments.

Domain-informed Neural Networks Codes for building and training the neural network model described in Domain-informed neural networks for interaction

QAHOI: Query-Based Anchors for Human-Object Interaction Detection (paper)

QAHOI QAHOI: Query-Based Anchors for Human-Object Interaction Detection (paper) Requirements PyTorch = 1.5.1 torchvision = 0.6.1 pip install -r requ

People Interaction Graph

Gihan Jayatilaka*, Jameel Hassan*, Suren Sritharan*, Janith Senananayaka, Harshana Weligampola, et. al., 2021. Holistic Interpretation of Public Scenes Using Computer Vision and Temporal Graphs to Identify Social Distancing Violations. arXiv preprint.

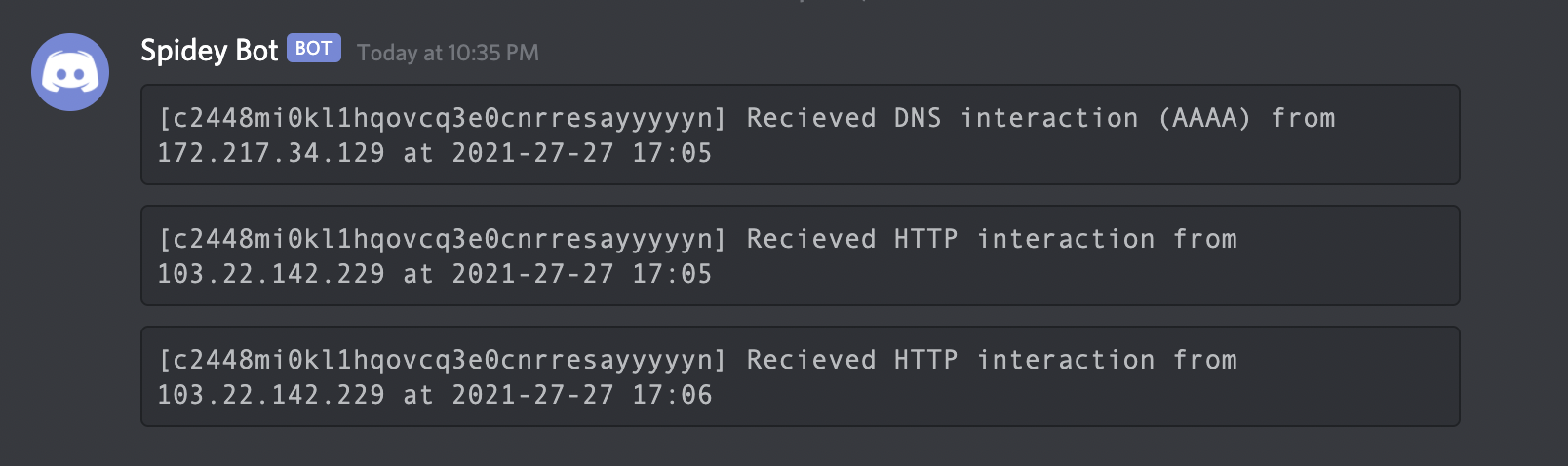

POC for detecting the Log4Shell (Log4J RCE) vulnerability

Interactsh An OOB interaction gathering server and client library Features • Usage • Interactsh Client • Interactsh Server • Interactsh Integration •

Anchor Retouching via Model Interaction for Robust Object Detection in Aerial Images

Anchor Retouching via Model Interaction for Robust Object Detection in Aerial Images In this paper, we present an effective Dynamic Enhancement Anchor

ColBERT: Contextualized Late Interaction over BERT (SIGIR'20)

Update: if you're looking for ColBERTv2 code, you can find it alongside a new simpler API, in the branch new_api. ColBERT ColBERT is a fast and accura

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Official Pytorch implementation for 2021 ICCV paper "Learning Motion Priors for 4D Human Body Capture in 3D Scenes" and trained models / data

Learning Motion Priors for 4D Human Body Capture in 3D Scenes (LEMO) Official Pytorch implementation for 2021 ICCV (oral) paper "Learning Motion Prior

This repository provides code for "On Interaction Between Augmentations and Corruptions in Natural Corruption Robustness".

On Interaction Between Augmentations and Corruptions in Natural Corruption Robustness This repository provides the code for the paper On Interaction B

Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving

GSAN Introduction Code for paper GSAN: Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving, wh

A live streaming chatroom involving multiple modalities, such as voice, gesture, and facial expression

HiLive A live streaming chatroom involving multiple modalities, such as voice, gesture, and facial expression. Introduction We focus on demonstrating

Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2

CoaDTI Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2 Abstract Environment The test was conducted i

Graduation Project

Gesture-Detection-and-Depth-Estimation This is my graduation project. (1) In this project, I use the YOLOv3 object detection model to detect gesture i

Ingeniamotion is a library that works over ingenialink and aims to simplify the interaction with Ingenia's drives.

Ingeniamotion Ingeniamotion is a library that works over ingenialink and aims to simplify the interaction with Ingenia's drives. Requirements Python 3

A program that takes in the hand gesture displayed by the user and translates ASL.

Interactive-ASL-Recognition Using the framework mediapipe made by google, OpenCV library and through self teaching, I was able to create a program tha

A hobby project which includes a hand-gesture based virtual piano using a mobile phone camera and OpenCV library functions

Overview This is a hobby project which includes a hand-gesture controlled virtual piano using an android phone camera and some OpenCV library. My moti

Gesture Volume Control Using OpenCV and MediaPipe

This Project Uses OpenCV and MediaPipe Hand solutions to identify hands and Change system volume by taking thumb and index finger positions

AIR^2 for Interaction Prediction

This is the repository for AIR^2 for Interaction Prediction. Explanation of the solution: Video: link License AIR is released under the Apache 2.0 lic

Visual Interaction with Code - A portable visual debugger for python

VIC Visual Interaction with Code A simple tool for debugging and interacting with running python code. This tool is designed to make it easy to inspec

Gesture controlled media player

Media Player Gesture Control Gesture controller for media player with MediaPipe, VLC and OpenCV. Contents About Setup About A tool for using gestures

Readings for "A Unified View of Relational Deep Learning for Polypharmacy Side Effect, Combination Therapy, and Drug-Drug Interaction Prediction."

Polypharmacy - DDI - Synergy Survey The Survey Paper This repository accompanies our survey paper A Unified View of Relational Deep Learning for Polyp

cLoops2: full stack analysis tool for chromatin interactions

cLoops2: full stack analysis tool for chromatin interactions Introduction cLoops2 is an extension of our previous work, cLoops. From loop-calling base

Bioinformatics tool for exploring RNA-Protein interactions

Explore RNA-Protein interactions. RNPFind is a bioinformatics tool. It takes an RNA transcript as input and gives a list of RNA binding protein (RBP)

Readings for "A Unified View of Relational Deep Learning for Polypharmacy Side Effect, Combination Therapy, and Drug-Drug Interaction Prediction."

Polypharmacy - DDI - Synergy Survey The Survey Paper This repository accompanies our survey paper A Unified View of Relational Deep Learning for Polyp

Code for ICMI2020 and ICMI2021 papers: "Studying Person-Specific Pointing and Gaze Behavior for Multimodal Referencing of Outside Objects from a Moving Vehicle" and "ML-PersRef: A Machine Learning-based Personalized Multimodal Fusion Approach for Referencing Outside Objects From a Moving Vehicle"

ML-PersRef This repository has python code (in jupyter notebooks) for both of the following papers: ML-PersRef: A Machine Learning-based Personalized

Allows simplified Python interaction with Rapid7's InsightIDR REST API.

InsightIDR4Py Allows simplified Python interaction with Rapid7's InsightIDR REST API. InsightIDR4Py allows analysts to query log data from Rapid7 Insi

AEI: Actors-Environment Interaction with Adaptive Attention for Temporal Action Proposals Generation

AEI: Actors-Environment Interaction with Adaptive Attention for Temporal Action Proposals Generation A pytorch-version implementation codes of paper:

YouRefIt: Embodied Reference Understanding with Language and Gesture

YouRefIt: Embodied Reference Understanding with Language and Gesture YouRefIt: Embodied Reference Understanding with Language and Gesture by Yixin Che

use machine learning to recognize gesture on raspberrypi

Raspberrypi_Gesture-Recognition use machine learning to recognize gesture on raspberrypi 說明 利用 tensorflow lite 訓練手部辨識模型 分辨 "剪刀"、"石頭"、"布" 之手勢 再將訓練模型匯入

GPT-3 command line interaction

Writer_unblock Straight-forward command line interfacing with GPT-3. Finding yourself stuck at a conceptual stage? Spinning your wheels needlessly on

Official implementation for Multi-Modal Interaction Graph Convolutional Network for Temporal Language Localization in Videos

Multi-modal Interaction Graph Convolutioal Network for Temporal Language Localization in Videos Official implementation for Multi-Modal Interaction Gr

Virtual Zoom Gesture using OpenCV

Virtual_Zoom_Gesture I have created a virtual zoom gesture where we can Zoom in and Zoom out any image and even we can move that image anywhere on the

In this project we will be using the live feed coming from the webcam to create a virtual mouse with complete functionalities.

Virtual Mouse Using OpenCV In this project we will be using the live feed coming from the webcam to create a virtual mouse using hand tracking. Projec

Hand Gesture Volume Control | Open CV | Computer Vision

Gesture Volume Control Hand Gesture Volume Control | Open CV | Computer Vision Use gesture control to change the volume of a computer. First we look i

The GitHub repository for the paper: “Time Series is a Special Sequence: Forecasting with Sample Convolution and Interaction“.

SCINet This is the original PyTorch implementation of the following work: Time Series is a Special Sequence: Forecasting with Sample Convolution and I

GBIM(Gesture-Based Interaction map)

手势交互地图 GBIM(Gesture-Based Interaction map),基于视觉深度神经网络的交互地图,通过电脑摄像头观察使用者的手势变化,进而控制地图进行简单的交互。网络使用PaddleX提供的轻量级模型PPYOLO Tiny以及MobileNet V3 small,使得整个模型大小约10MB左右,即使在CPU下也能快速定位和识别手势。

Volume Control using OpenCV

Gesture-Volume-Control Volume Control using OpenCV Here i made volume control using Python and OpenCV in which we can control the volume of our laptop

Using this you can control your PC/Laptop volume by Hand Gestures (pinch-in, pinch-out) created with Python.

Hand Gesture Volume Controller Using this you can control your PC/Laptop volume by Hand Gestures (pinch-in, pinch-out). Code Firstly I have created a

A python discord client interaction emulator for the DC29 badge code channel

dc29-discord-signalbot A python discord client interaction emulator for the DC29 badge code channel Prep Open Developer mode Open the developer mode f

Deep learning based hand gesture recognition using LSTM and MediaPipie.

Hand Gesture Recognition Deep learning based hand gesture recognition using LSTM and MediaPipie. Demo video using PingPong Robot Files Pretrained mode

Unified learning approach for egocentric hand gesture recognition and fingertip detection

Unified Gesture Recognition and Fingertip Detection A unified convolutional neural network (CNN) algorithm for both hand gesture recognition and finge

Pytorch Implementation of Interaction Networks for Learning about Objects, Relations and Physics

Interaction-Network-Pytorch Pytorch Implementraion of Interaction Networks for Learning about Objects, Relations and Physics. Interaction Network is a

This's an implementation of deepmind Visual Interaction Networks paper using pytorch

Visual-Interaction-Networks An implementation of Deepmind visual interaction networks in Pytorch. Introduction For the purpose of understanding the ch

Implementation of QuickDraw - an online game developed by Google, combined with AirGesture - a simple gesture recognition application

QuickDraw - AirGesture Introduction Here is my python source code for QuickDraw - an online game developed by google, combined with AirGesture - a sim

WILSON Cloud Respwnder is a Web Interaction Logger Sending Out Notifications with the ability to serve custom content in order to appropriately respond to client-issued requests.

WILSON Cloud Respwnder What is this? WILSON Cloud Respwnder is a Web Interaction Logger Sending Out Notifications (WILSON) with the ability to serve c

Gesture-controlled Video Game. Just swing your finger and play the game without touching your PC

Gesture Controlled Video Game Detailed Blog : https://www.analyticsvidhya.com/blog/2021/06/gesture-controlled-video-game/ Introduction This project is

Code for KDD'20 "An Efficient Neighborhood-based Interaction Model for Recommendation on Heterogeneous Graph"

Heterogeneous INteract and aggreGatE (GraphHINGE) This is a pytorch implementation of GraphHINGE model. This is the experiment code in the following w

Hand gesture detection project with aweome UI implementation.

an awesome hand gesture detection project for you to be creative! Imagination is the limit to do with this project.

A gesture recognition system powered by OpenPose, k-nearest neighbours, and local outlier factor.

OpenHands OpenHands is a gesture recognition system powered by OpenPose, k-nearest neighbours, and local outlier factor. Currently the system can iden

Hand gesture recognition based whiteboard that allows you to write on live webcam. This is the first version and has features like 4 different colors, eraser and a recording option that records your session and saves it in a "recordings" folder. Use index finger to draw and two or more fingers to move around and select items. Future version will contain more functionalities like changeable thickness, color palette, integration with zoom and google meet etc.

hand-write Hand gesture recognition based whiteboard that allows you to write on live webcam. This is the first version and has features like 4 differ

learn how to use Gesture Control to change the volume of a computer

Volume-Control-using-gesture In this project we are going to learn how to use Gesture Control to change the volume of a computer. We first look into h

Unofficial TensorFlow implementation of Protein Interface Prediction using Graph Convolutional Networks.

[TensorFlow] Protein Interface Prediction using Graph Convolutional Networks Unofficial TensorFlow implementation of Protein Interface Prediction usin

Official repository for HOTR: End-to-End Human-Object Interaction Detection with Transformers (CVPR'21, Oral Presentation)

Official PyTorch Implementation for HOTR: End-to-End Human-Object Interaction Detection with Transformers (CVPR'2021, Oral Presentation) HOTR: End-to-

This is the research repository for Vid2Doppler: Synthesizing Doppler Radar Data from Videos for Training Privacy-Preserving Activity Recognition.

Vid2Doppler: Synthesizing Doppler Radar Data from Videos for Training Privacy-Preserving Activity Recognition This is the research repository for Vid2

PIGLeT: Language Grounding Through Neuro-Symbolic Interaction in a 3D World [ACL 2021]

piglet PIGLeT: Language Grounding Through Neuro-Symbolic Interaction in a 3D World [ACL 2021] This repo contains code and data for PIGLeT. If you like

NExT-QA: Next Phase of Question-Answering to Explaining Temporal Actions (CVPR2021)

NExT-QA We reproduce some SOTA VideoQA methods to provide benchmark results for our NExT-QA dataset accepted to CVPR2021 (with 1 'Strong Accept' and 2

This is the repo for the paper `SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization'. (published in Bioinformatics'21)

SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization This is the code for our paper ``SumGNN: Multi-typed Drug

Shuwa Gesture Toolkit is a framework that detects and classifies arbitrary gestures in short videos

Shuwa Gesture Toolkit is a framework that detects and classifies arbitrary gestures in short videos

Populating 3D Scenes by Learning Human-Scene Interaction https://posa.is.tue.mpg.de/

Populating 3D Scenes by Learning Human-Scene Interaction [Project Page] [Paper] License Software Copyright License for non-commercial scientific resea

Synthesizing Long-Term 3D Human Motion and Interaction in 3D in CVPR2021

Long-term-Motion-in-3D-Scenes This is an implementation of the CVPR'21 paper "Synthesizing Long-Term 3D Human Motion and Interaction in 3D". Please ch

notebookJS: seamless JavaScript integration in Python Notebooks

notebookJS enables the execution of custom JavaScript code in Python Notebooks (Jupyter Notebook and Google Colab). This Python library can be useful for implementing and reusing interactive Data Visualizations in the Notebook environment.

CPF: Learning a Contact Potential Field to Model the Hand-object Interaction

Contact Potential Field This repo contains model, demo, and test codes of our paper: CPF: Learning a Contact Potential Field to Model the Hand-object

[CVPR 2021] Modular Interactive Video Object Segmentation: Interaction-to-Mask, Propagation and Difference-Aware Fusion

[CVPR 2021] Modular Interactive Video Object Segmentation: Interaction-to-Mask, Propagation and Difference-Aware Fusion

Python low-interaction honeyclient

Thug The number of client-side attacks has grown significantly in the past few years shifting focus on poorly protected vulnerable clients. Just as th

Repo for CVPR2021 paper "QPIC: Query-Based Pairwise Human-Object Interaction Detection with Image-Wide Contextual Information"

QPIC: Query-Based Pairwise Human-Object Interaction Detection with Image-Wide Contextual Information by Masato Tamura, Hiroki Ohashi, and Tomoaki Yosh

pytest splinter and selenium integration for anyone interested in browser interaction in tests

Splinter plugin for the pytest runner Install pytest-splinter pip install pytest-splinter Features The plugin provides a set of fixtures to use splin