926 Repositories

Python memory-efficient-attention Libraries

Training code of Spatial Time Memory Network. Semi-supervised video object segmentation.

Training-code-of-STM This repository fully reproduces Space-Time Memory Networks Performance on Davis17 val set&Weights backbone training stage traini

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Implementation of Convolutional enhanced image Transformer

CeiT : Convolutional enhanced image Transformer This is an unofficial PyTorch implementation of Incorporating Convolution Designs into Visual Transfor

Implementation of Perceiver, General Perception with Iterative Attention in TensorFlow

Perceiver This Python package implements Perceiver: General Perception with Iterative Attention by Andrew Jaegle in TensorFlow. This model builds on t

The official pytorch implementation of our paper "Is Space-Time Attention All You Need for Video Understanding?"

TimeSformer This is an official pytorch implementation of Is Space-Time Attention All You Need for Video Understanding?. In this repository, we provid

Visualization Toolbox for Long Short Term Memory networks (LSTMs)

Visualization Toolbox for Long Short Term Memory networks (LSTMs)

Segcache: a memory-efficient and scalable in-memory key-value cache for small objects

Segcache: a memory-efficient and scalable in-memory key-value cache for small objects This repo contains the code of Segcache described in the followi

Robust, modular and efficient implementation of advanced Hamiltonian Monte Carlo algorithms

AdvancedHMC.jl AdvancedHMC.jl provides a robust, modular and efficient implementation of advanced HMC algorithms. An illustrative example for Advanced

I-BERT: Integer-only BERT Quantization

I-BERT: Integer-only BERT Quantization HuggingFace Implementation I-BERT is also available in the master branch of HuggingFace! Visit the following li

SC-GlowTTS: an Efficient Zero-Shot Multi-Speaker Text-To-Speech Model

SC-GlowTTS: an Efficient Zero-Shot Multi-Speaker Text-To-Speech Model Edresson Casanova, Christopher Shulby, Eren Gölge, Nicolas Michael Müller, Frede

Code for the paper "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021)

MASTER-PyTorch PyTorch reimplementation of "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021). This projec

Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch

Cross Transformers - Pytorch (wip) Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch Install $ pip install cross-t

Asynchronous Client for the worlds fastest in-memory geo-database Tile38

This is an asynchonous Python client for Tile38 that allows for fast and easy interaction with the worlds fastest in-memory geodatabase Tile38.

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Code for AAAI 2021 paper: Sequential End-to-end Network for Efficient Person Search

This repository hosts the source code of our paper: [AAAI 2021]Sequential End-to-end Network for Efficient Person Search. SeqNet achieves the state-of

Learning recognition/segmentation models without end-to-end training. 40%-60% less GPU memory footprint. Same training time. Better performance.

InfoPro-Pytorch The Information Propagation algorithm for training deep networks with local supervision. (ICLR 2021) Revisiting Locally Supervised Lea

![[AAAI 2021] MVFNet: Multi-View Fusion Network for Efficient Video Recognition](https://github.com/whwu95/MVFNet/raw/main/mvfnet.png)

[AAAI 2021] MVFNet: Multi-View Fusion Network for Efficient Video Recognition

MVFNet: Multi-View Fusion Network for Efficient Video Recognition (AAAI 2021) Overview We release the code of the MVFNet (Multi-View Fusion Network).

Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GanFormer and TransGan paper

TransGanFormer (wip) Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GansFormer and TransGan paper. I

Dynamic Slimmable Network (CVPR 2021, Oral)

Dynamic Slimmable Network (DS-Net) This repository contains PyTorch code of our paper: Dynamic Slimmable Network (CVPR 2021 Oral). Architecture of DS-

Regularizing Generative Adversarial Networks under Limited Data (CVPR 2021)

Regularizing Generative Adversarial Networks under Limited Data [Project Page][Paper] Implementation for our GAN regularization method. The proposed r

PINCE is a front-end/reverse engineering tool for the GNU Project Debugger (GDB), focused on games.

PINCE is a front-end/reverse engineering tool for the GNU Project Debugger (GDB), focused on games. However, it can be used for any reverse-engi

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Code for the paper "Graph Attention Tracking". (CVPR2021)

SiamGAT 1. Environment setup This code has been tested on Ubuntu 16.04, Python 3.5, Pytorch 1.2.0, CUDA 9.0. Please install related libraries before r

![[ICLR 2021]](https://github.com/RICE-EIC/CPT/raw/master/imgs/cpt.png)

[ICLR 2021] "CPT: Efficient Deep Neural Network Training via Cyclic Precision" by Yonggan Fu, Han Guo, Meng Li, Xin Yang, Yining Ding, Vikas Chandra, Yingyan Lin

CPT: Efficient Deep Neural Network Training via Cyclic Precision Yonggan Fu, Han Guo, Meng Li, Xin Yang, Yining Ding, Vikas Chandra, Yingyan Lin Accep

Implementation of the Swin Transformer in PyTorch.

Swin Transformer - PyTorch Implementation of the Swin Transformer architecture. This paper presents a new vision Transformer, called Swin Transformer,

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 B) on a single 16 GB VRAM V100 Google Cloud instance with Huggingface Transformers using DeepSpeed

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 Billion Parameters) on a single 16 GB VRAM V100 Google Cloud instance with Huggingfa

Implementation of STAM (Space Time Attention Model), a pure and simple attention model that reaches SOTA for video classification

STAM - Pytorch Implementation of STAM (Space Time Attention Model), yet another pure and simple SOTA attention model that bests all previous models in

A highly efficient and modular implementation of Gaussian Processes in PyTorch

GPyTorch GPyTorch is a Gaussian process library implemented using PyTorch. GPyTorch is designed for creating scalable, flexible, and modular Gaussian

Differentiable SDE solvers with GPU support and efficient sensitivity analysis.

PyTorch Implementation of Differentiable SDE Solvers This library provides stochastic differential equation (SDE) solvers with GPU support and efficie

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Lambda Networks - Pytorch Implementation of λ Networks, a new approach to image recognition that reaches SOTA on ImageNet. The new method utilizes λ l

An implementation of Performer, a linear attention-based transformer, in Pytorch

Performer - Pytorch An implementation of Performer, a linear attention-based transformer variant with a Fast Attention Via positive Orthogonal Random

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

Differentiable ODE solvers with full GPU support and O(1)-memory backpropagation.

PyTorch Implementation of Differentiable ODE Solvers This library provides ordinary differential equation (ODE) solvers implemented in PyTorch. Backpr

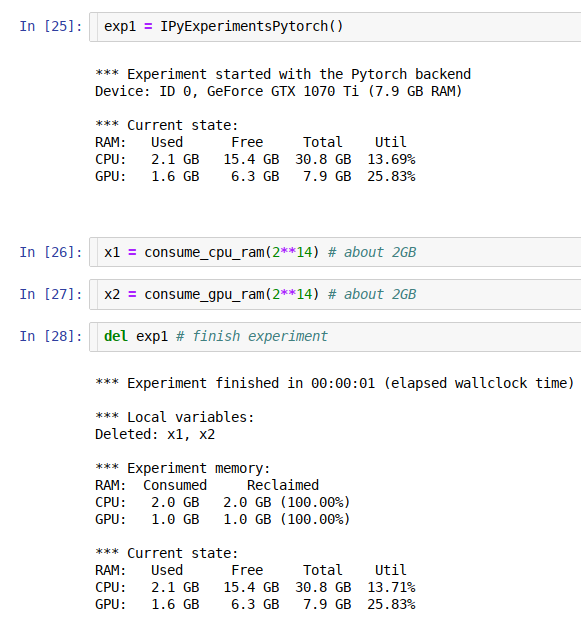

jupyter/ipython experiment containers for GPU and general RAM re-use

ipyexperiments jupyter/ipython experiment containers and utils for profiling and reclaiming GPU and general RAM, and detecting memory leaks. About Thi

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective. 10x Larger Models 10x Faster Trainin

git《Self-Attention Attribution: Interpreting Information Interactions Inside Transformer》(AAAI 2021) GitHub:

Self-Attention Attribution This repository contains the implementation for AAAI-2021 paper Self-Attention Attribution: Interpreting Information Intera

The open source code of SA-UNet: Spatial Attention U-Net for Retinal Vessel Segmentation.

SA-UNet: Spatial Attention U-Net for Retinal Vessel Segmentation(ICPR 2020) Overview This code is for the paper: Spatial Attention U-Net for Retinal V

An efficient and effective learning to rank algorithm by mining information across ranking candidates. This repository contains the tensorflow implementation of SERank model. The code is developed based on TF-Ranking.

SERank An efficient and effective learning to rank algorithm by mining information across ranking candidates. This repository contains the tensorflow

Implementation of the 😇 Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones

HaloNet - Pytorch Implementation of the Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones. This re

Visual Attention based OCR

Attention-OCR Authours: Qi Guo and Yuntian Deng Visual Attention based OCR. The model first runs a sliding CNN on the image (images are resized to hei

A Tensorflow model for text recognition (CNN + seq2seq with visual attention) available as a Python package and compatible with Google Cloud ML Engine.

Attention-based OCR Visual attention-based OCR model for image recognition with additional tools for creating TFRecords datasets and exporting the tra

🖺 OCR using tensorflow with attention

tensorflow-ocr 🖺 OCR using tensorflow with attention, batteries included Installation git clone --recursive http://github.com/pannous/tensorflow-ocr

PyTorch Re-Implementation of EAST: An Efficient and Accurate Scene Text Detector

Description This is a PyTorch Re-Implementation of EAST: An Efficient and Accurate Scene Text Detector. Only RBOX part is implemented. Using dice loss

This is a pytorch re-implementation of EAST: An Efficient and Accurate Scene Text Detector.

EAST: An Efficient and Accurate Scene Text Detector Description: This version will be updated soon, please pay attention to this work. The motivation

Single Shot Text Detector with Regional Attention

Single Shot Text Detector with Regional Attention Introduction SSTD is initially described in our ICCV 2017 spotlight paper. A third-party implementat

Implement 'Single Shot Text Detector with Regional Attention, ICCV 2017 Spotlight'

SSTDNet Implement 'Single Shot Text Detector with Regional Attention, ICCV 2017 Spotlight' using pytorch. This code is work for general object detecti

textspotter - An End-to-End TextSpotter with Explicit Alignment and Attention

An End-to-End TextSpotter with Explicit Alignment and Attention This is initially described in our CVPR 2018 paper. Getting Started Installation Clone

Pytorch implementation of PSEnet with Pyramid Attention Network as feature extractor

Scene Text-Spotting based on PSEnet+CRNN Pytorch implementation of an end to end Text-Spotter with a PSEnet text detector and CRNN text recognizer. We

MORAN: A Multi-Object Rectified Attention Network for Scene Text Recognition

MORAN: A Multi-Object Rectified Attention Network for Scene Text Recognition Python 2.7 Python 3.6 MORAN is a network with rectification mechanism for

Adaptive Attention Span for Reinforcement Learning

Adaptive Transformers in RL Official implementation of Adaptive Transformers in RL In this work we replicate several results from Stabilizing Transfor

UMEC: Unified Model and Embedding Compression for Efficient Recommendation Systems

[ICLR 2021] "UMEC: Unified Model and Embedding Compression for Efficient Recommendation Systems" by Jiayi Shen, Haotao Wang*, Shupeng Gui*, Jianchao Tan, Zhangyang Wang, and Ji Liu

CVPR 2021: "Generating Diverse Structure for Image Inpainting With Hierarchical VQ-VAE"

Diverse Structure Inpainting ArXiv | Papar | Supplementary Material | BibTex This repository is for the CVPR 2021 paper, "Generating Diverse Structure

![[ICLR 2021] Is Attention Better Than Matrix Decomposition?](https://github.com/Gsunshine/Enjoy-Hamburger/raw/main/assets/Hamburger.jpg)

[ICLR 2021] Is Attention Better Than Matrix Decomposition?

Enjoy-Hamburger 🍔 Official implementation of Hamburger, Is Attention Better Than Matrix Decomposition? (ICLR 2021) Under construction. Introduction T

Repository for "Exploring Sparsity in Image Super-Resolution for Efficient Inference", CVPR 2021

SMSR Reposity for "Exploring Sparsity in Image Super-Resolution for Efficient Inference" [arXiv] Highlights Locate and skip redundant computation in S

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

![[CVPR 2021] 'Searching by Generating: Flexible and Efficient One-Shot NAS with Architecture Generator'](https://github.com/eric8607242/SGNAS/raw/master/resource/SGNAS_framework.png)

[CVPR 2021] 'Searching by Generating: Flexible and Efficient One-Shot NAS with Architecture Generator'

[CVPR2021] Searching by Generating: Flexible and Efficient One-Shot NAS with Architecture Generator Overview This is the entire codebase for the paper

Official implementation of Self-supervised Graph Attention Networks (SuperGAT), ICLR 2021.

SuperGAT Official implementation of Self-supervised Graph Attention Networks (SuperGAT). This model is presented at How to Find Your Friendly Neighbor

Object-Centric Learning with Slot Attention

Slot Attention This is a re-implementation of "Object-Centric Learning with Slot Attention" in PyTorch (https://arxiv.org/abs/2006.15055). Requirement

Code for our CVPR2021 paper coordinate attention

Coordinate Attention for Efficient Mobile Network Design (preprint) This repository is a PyTorch implementation of our coordinate attention (will appe

Implementation of Perceiver, General Perception with Iterative Attention, in Pytorch

Perceiver - Pytorch Implementation of Perceiver, General Perception with Iterative Attention, in Pytorch Install $ pip install perceiver-pytorch Usage

Official pytorch implementation of paper "Inception Convolution with Efficient Dilation Search" (CVPR 2021 Oral).

IC-Conv This repository is an official implementation of the paper Inception Convolution with Efficient Dilation Search. Getting Started Download Imag

A highly efficient and modular implementation of Gaussian Processes in PyTorch

GPyTorch GPyTorch is a Gaussian process library implemented using PyTorch. GPyTorch is designed for creating scalable, flexible, and modular Gaussian

BatchFlow helps you conveniently work with random or sequential batches of your data and define data processing and machine learning workflows even for datasets that do not fit into memory.

BatchFlow BatchFlow helps you conveniently work with random or sequential batches of your data and define data processing and machine learning workflo

Out-of-Core DataFrames for Python, ML, visualize and explore big tabular data at a billion rows per second 🚀

What is Vaex? Vaex is a high performance Python library for lazy Out-of-Core DataFrames (similar to Pandas), to visualize and explore big tabular data

Simple, efficient and flexible vision toolbox for mxnet framework.

MXbox: Simple, efficient and flexible vision toolbox for mxnet framework. MXbox is a toolbox aiming to provide a general and simple interface for visi

A clear, concise, simple yet powerful and efficient API for deep learning.

The Gluon API Specification The Gluon API specification is an effort to improve speed, flexibility, and accessibility of deep learning technology for

ICRA 2021 "Towards Precise and Efficient Image Guided Depth Completion"

PENet: Precise and Efficient Depth Completion This repo is the PyTorch implementation of our paper to appear in ICRA2021 on "Towards Precise and Effic

Implementation of OmniNet, Omnidirectional Representations from Transformers, in Pytorch

Omninet - Pytorch Implementation of OmniNet, Omnidirectional Representations from Transformers, in Pytorch. The authors propose that we should be atte

D2Go is a toolkit for efficient deep learning

D2Go D2Go is a production ready software system from FacebookResearch, which supports end-to-end model training and deployment for mobile platforms. W

Calculate the efficient frontier

关于 代码主要参考Fábio Neves的文章,你可以在他的文章中找到一些细节性的解释

GANsformer: Generative Adversarial Transformers Drew A

GANsformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick *I wish to thank Christopher D. Manning for the fruitf

Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image classification, in Pytorch

Transformer in Transformer Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image c

An attempt at the implementation of Glom, Geoffrey Hinton's new idea that integrates neural fields, predictive coding, top-down-bottom-up, and attention (consensus between columns)

GLOM - Pytorch (wip) An attempt at the implementation of Glom, Geoffrey Hinton's new idea that integrates neural fields, predictive coding,

Ultra-Data-Efficient GAN Training: Drawing A Lottery Ticket First, Then Training It Toughly

Ultra-Data-Efficient GAN Training: Drawing A Lottery Ticket First, Then Training It Toughly Code for this paper Ultra-Data-Efficient GAN Tra

Implementation of E(n)-Transformer, which extends the ideas of Welling's E(n)-Equivariant Graph Neural Network to attention

E(n)-Equivariant Transformer (wip) Implementation of E(n)-Equivariant Transformer, which extends the ideas from Welling's E(n)-Equivariant G

Mnemosyne: efficient learning with powerful digital flash-cards.

Mnemosyne: Optimized Flashcards and Research Project Mnemosyne is: a free, open-source, spaced-repetition flashcard program that helps you learn as ef

Lazy Profiler is a simple utility to collect CPU, GPU, RAM and GPU Memory stats while the program is running.

lazyprofiler Lazy Profiler is a simple utility to collect CPU, GPU, RAM and GPU Memory stats while the program is running. Installation Use the packag

Pytorch Code for "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation"

Medical-Transformer Pytorch Code for the paper "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation" About this repo: This repo

Implementation of TimeSformer, a pure attention-based solution for video classification

TimeSformer - Pytorch Implementation of TimeSformer, a pure and simple attention-based solution for reaching SOTA on video classification.

Implementation of Nyström Self-attention, from the paper Nyströmformer

Nyström Attention Implementation of Nyström Self-attention, from the paper Nyströmformer. Yannic Kilcher video Install $ pip install nystrom-attention

Official PyTorch code for ClipBERT, an efficient framework for end-to-end learning on image-text and video-text tasks

Official PyTorch code for ClipBERT, an efficient framework for end-to-end learning on image-text and video-text tasks. It takes raw videos/images + text as inputs, and outputs task predictions. ClipBERT is designed based on 2D CNNs and transformers, and uses a sparse sampling strategy to enable efficient end-to-end video-and-language learning.

BitPack is a practical tool to efficiently save ultra-low precision/mixed-precision quantized models.

BitPack is a practical tool that can efficiently save quantized neural network models with mixed bitwidth.

Learning to Initialize Neural Networks for Stable and Efficient Training

GradInit This repository hosts the code for experiments in the paper, GradInit: Learning to Initialize Neural Networks for Stable and Efficient Traini

Implementation of self-attention mechanisms for general purpose. Focused on computer vision modules. Ongoing repository.

Self-attention building blocks for computer vision applications in PyTorch Implementation of self attention mechanisms for computer vision in PyTorch

计算机视觉中用到的注意力模块和其他即插即用模块PyTorch Implementation Collection of Attention Module and Plug&Play Module

PyTorch实现多种计算机视觉中网络设计中用到的Attention机制,还收集了一些即插即用模块。由于能力有限精力有限,可能很多模块并没有包括进来,有任何的建议或者改进,可以提交issue或者进行PR。

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

text_recognition_toolbox: The reimplementation of a series of classical scene text recognition papers with Pytorch in a uniform way.

text recognition toolbox 1. 项目介绍 该项目是基于pytorch深度学习框架,以统一的改写方式实现了以下6篇经典的文字识别论文,论文的详情如下。该项目会持续进行更新,欢迎大家提出问题以及对代码进行贡献。 模型 论文标题 发表年份 模型方法划分 CRNN 《An End-t

Implementation of TabTransformer, attention network for tabular data, in Pytorch

Tab Transformer Implementation of Tab Transformer, attention network for tabular data, in Pytorch. This simple architecture came within a hair's bread

FERM: A Framework for Efficient Robotic Manipulation

Framework for Efficient Robotic Manipulation FERM is a framework that enables robots to learn tasks within an hour of real time training.

Efficient 3D Backbone Network for Temporal Modeling

VoV3D is an efficient and effective 3D backbone network for temporal modeling implemented on top of PySlowFast. Diverse Temporal Aggregation and

Code for our ICASSP 2021 paper: SA-Net: Shuffle Attention for Deep Convolutional Neural Networks

SA-Net: Shuffle Attention for Deep Convolutional Neural Networks (paper) By Qing-Long Zhang and Yu-Bin Yang [State Key Laboratory for Novel Software T

Authors implementation of LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant self-at

Efficient neural networks for analog audio effect modeling

micro-TCN Efficient neural networks for audio effect modeling

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Numenta Platform for Intelligent Computing is an implementation of Hierarchical Temporal Memory (HTM), a theory of intelligence based strictly on the neuroscience of the neocortex.

NuPIC Numenta Platform for Intelligent Computing The Numenta Platform for Intelligent Computing (NuPIC) is a machine intelligence platform that implem

Theano is a Python library that allows you to define, optimize, and evaluate mathematical expressions involving multi-dimensional arrays efficiently. It can use GPUs and perform efficient symbolic differentiation.

============================================================================================================ `MILA will stop developing Theano https:

Monitor Memory usage of Python code

Memory Profiler This is a python module for monitoring memory consumption of a process as well as line-by-line analysis of memory consumption for pyth

Diamond is a python daemon that collects system metrics and publishes them to Graphite (and others). It is capable of collecting cpu, memory, network, i/o, load and disk metrics. Additionally, it features an API for implementing custom collectors for gathering metrics from almost any source.

Diamond Diamond is a python daemon that collects system metrics and publishes them to Graphite (and others). It is capable of collecting cpu, memory,

Scalene: a high-performance, high-precision CPU and memory profiler for Python

scalene: a high-performance CPU and memory profiler for Python by Emery Berger 中文版本 (Chinese version) About Scalene % pip install -U scalene Scalen

Development tool to measure, monitor and analyze the memory behavior of Python objects in a running Python application.

README for pympler Before installing Pympler, try it with your Python version: python setup.py try If any errors are reported, check whether your Pyt