832 Repositories

Python pre-training Libraries

First steps with Python in Life Sciences

First steps with Python in Life Sciences This course material is part of the "First Steps with Python in Life Science" three-day course of SIB-trainin

Collapse by Conditioning: Training Class-conditional GANs with Limited Data

Collapse by Conditioning: Training Class-conditional GANs with Limited Data Moha

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

Repo for investigation of timeouts that happens with prolonged training on clients

Flower-timeout Repo for investigation of timeouts that happens with prolonged training on clients. This repository is meant purely for demonstration o

GitHub Actions Docker training

GitHub-Actions-Docker-training Training exercise repository for GitHub Actions using a docker base. This repository should be cloned and used for trai

TLXZoo - Pre-trained models based on TensorLayerX

Pre-trained models based on TensorLayerX. TensorLayerX is a multi-backend AI fra

MADT: Offline Pre-trained Multi-Agent Decision Transformer

MADT: Offline Pre-trained Multi-Agent Decision Transformer A link to our paper can be found on Arxiv. Overview Official codebase for Offline Pre-train

Circuit Training: An open-source framework for generating chip floor plans with distributed deep reinforcement learning

Circuit Training: An open-source framework for generating chip floor plans with distributed deep reinforcement learning. Circuit Training is an open-s

X-VLM: Multi-Grained Vision Language Pre-Training

X-VLM: learning multi-grained vision language alignments Multi-Grained Vision Language Pre-Training: Aligning Texts with Visual Concepts. Yan Zeng, Xi

TweebankNLP - Pre-trained Tweet NLP Pipeline (NER, tokenization, lemmatization, POS tagging, dependency parsing) + Models + Tweebank-NER

TweebankNLP This repo contains the new Tweebank-NER dataset and Twitter-Stanza p

ColossalAI-Examples - Examples of training models with hybrid parallelism using ColossalAI

ColossalAI-Examples This repository contains examples of training models with Co

(Pre-)compromise operations for MITRE CALDERA

(Pre-)compromise operations for CALDERA Extend your CALDERA operations over the entire adversary killchain. In contrast to MITRE's access plugin, cald

A novel Engagement Detection with Multi-Task Training (ED-MTT) system

A novel Engagement Detection with Multi-Task Training (ED-MTT) system which minimizes MSE and triplet loss together to determine the engagement level of students in an e-learning environment.

TiP-Adapter: Training-free CLIP-Adapter for Better Vision-Language Modeling

TiP-Adapter: Training-free CLIP-Adapter for Better Vision-Language Modeling This is the official code release for the paper 'TiP-Adapter: Training-fre

A PyTorch implementation of VIOLET

VIOLET: End-to-End Video-Language Transformers with Masked Visual-token Modeling A PyTorch implementation of VIOLET Overview VIOLET is an implementati

A complete, self-contained example for training ImageNet at state-of-the-art speed with FFCV

ffcv ImageNet Training A minimal, single-file PyTorch ImageNet training script designed for hackability. Run train_imagenet.py to get... ...high accur

Predicting 10 different clothing types using Xception pre-trained model.

Predicting-Clothing-Types Predicting 10 different clothing types using Xception pre-trained model from Keras library. It is reimplemented version from

Visual dialog agents with pre-trained vision-and-language encoders.

Learning Better Visual Dialog Agents with Pretrained Visual-Linguistic Representation Or READ-UP: Referring Expression Agent Dialog with Unified Pretr

"Moshpit SGD: Communication-Efficient Decentralized Training on Heterogeneous Unreliable Devices", official implementation

Moshpit SGD: Communication-Efficient Decentralized Training on Heterogeneous Unreliable Devices This repository contains the official PyTorch implemen

Implement of "Training deep neural networks via direct loss minimization" in PyTorch for 0-1 loss

This is the implementation of "Training deep neural networks via direct loss minimization" published at ICML 2016 in PyTorch. The implementation targe

Pianote - An application that helps musicians practice piano ear training

Pianote Pianote is an application that helps musicians practice piano ear traini

🔎 Monitor deep learning model training and hardware usage from your mobile phone 📱

Monitor deep learning model training and hardware usage from mobile. 🔥 Features Monitor running experiments from mobile phone (or laptop) Monitor har

Code for Reciprocal Adversarial Learning for Brain Tumor Segmentation: A Solution to BraTS Challenge 2021 Segmentation Task

BRATS 2021 Solution For Segmentation Task This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmenta

This repo generates the training data and the model for Morpheus-Deblend

Morpheus-Deblend This repo generates the training data and the model for Morpheus-Deblend. This is the active development repo for the project and as

POC of CVE-2021-26084, which is Atlassian Confluence Server OGNL Pre-Auth RCE Injection Vulneralibity.

CVE-2021-26084 Description POC of CVE-2021-26084, which is Atlassian Confluence Server OGNL(Object-Graph Navigation Language) Pre-Auth RCE Injection V

The code for our paper Semi-Supervised Learning with Multi-Head Co-Training

Semi-Supervised Learning with Multi-Head Co-Training (PyTorch) Abstract Co-training, extended from self-training, is one of the frameworks for semi-su

Pytorch implementation of MLP-Mixer with loading pre-trained models.

MLP-Mixer-Pytorch PyTorch implementation of MLP-Mixer: An all-MLP Architecture for Vision with the function of loading official ImageNet pre-trained p

Explores the python bytecode, provides some tools to access it for fun and profit.

Pyasmtools - looking at the python bytecode for fun and profit. The pyasmtools library is made up of two parts A python bytecode disassembler . See Py

A Python module for the generation and training of an entry-level feedforward neural network.

ff-neural-network A Python module for the generation and training of an entry-level feedforward neural network. This repository serves as a repurposin

Training a Resilient Q-Network against Observational Interference, Causal Inference Q-Networks

Obs-Causal-Q-Network AAAI 2022 - Training a Resilient Q-Network against Observational Interference Preprint | Slides | Colab Demo | Environment Setup

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval

CLIP4CMR A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval The original data and pre-calculate

Soft actor-critic is a deep reinforcement learning framework for training maximum entropy policies in continuous domains.

This repository is no longer maintained. Please use our new Softlearning package instead. Soft Actor-Critic Soft actor-critic is a deep reinforcement

Supervised 3D Pre-training on Large-scale 2D Natural Image Datasets for 3D Medical Image Analysis

Introduction This is an implementation of our paper Supervised 3D Pre-training on Large-scale 2D Natural Image Datasets for 3D Medical Image Analysis.

for a paper about leveraging discourse markers for training new models

TSLM-DISCOURSE-MARKERS Scope This repository contains: (1) Code to extract discourse markers from wikipedia (TSA). (1) Code to extract significant dis

A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval

CLIP4CMR A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval The original data and pre-calculate

Deep learning with TensorFlow and earth observation data.

Deep Learning with TensorFlow and EO Data Complete file set for Jupyter Book Autor: Development Seed Date: 04 October 2021 ISBN: (to come) Notebook tu

Official Implementation of "DialogLM: Pre-trained Model for Long Dialogue Understanding and Summarization."

DialogLM Code for AAAI 2022 paper: DialogLM: Pre-trained Model for Long Dialogue Understanding and Summarization. Pre-trained Models We release two ve

Official Implementation for Fast Training of Neural Lumigraph Representations using Meta Learning.

Fast Training of Neural Lumigraph Representations using Meta Learning Project Page | Paper | Data Alexander W. Bergman, Petr Kellnhofer, Gordon Wetzst

Teaches a student network from the knowledge obtained via training of a larger teacher network

Distilling-the-knowledge-in-neural-network Teaches a student network from the knowledge obtained via training of a larger teacher network This is an i

Cherche (search in French) allows you to create a neural search pipeline using retrievers and pre-trained language models as rankers.

Cherche (search in French) allows you to create a neural search pipeline using retrievers and pre-trained language models as rankers. Cherche is meant to be used with small to medium sized corpora. Cherche's main strength is its ability to build diverse and end-to-end pipelines.

This is the offline-training-pipeline for our project.

offline-training-pipeline This is the offline-training-pipeline for our project. We adopt the offline training and online prediction Machine Learning

Markdown Presentations for Tech Conferences, Training, Developer Advocates, and Educators.

March 1, 2021: Service on gitpitch.com has been shutdown permanently. GitPitch 4.0 Docs Twitter About Watch the Introducing GitPitch 4.0 Video Visit t

Pi-NAS: Improving Neural Architecture Search by Reducing Supernet Training Consistency Shift (ICCV 2021)

Π-NAS This repository provides the evaluation code of our submitted paper: Pi-NAS: Improving Neural Architecture Search by Reducing Supernet Training

Learnable Boundary Guided Adversarial Training (ICCV2021)

Learnable Boundary Guided Adversarial Training This repository contains the implementation code for the ICCV2021 paper: Learnable Boundary Guided Adve

Seasonal Contrast: Unsupervised Pre-Training from Uncurated Remote Sensing Data

Seasonal Contrast: Unsupervised Pre-Training from Uncurated Remote Sensing Data This is the official PyTorch implementation of the SeCo paper: @articl

Chinese version of GPT2 training code, using BERT tokenizer.

GPT2-Chinese Description Chinese version of GPT2 training code, using BERT tokenizer or BPE tokenizer. It is based on the extremely awesome repository

TensorFlow implementation of AlexNet and its training and testing on ImageNet ILSVRC 2012 dataset

AlexNet training on ImageNet LSVRC 2012 This repository contains an implementation of AlexNet convolutional neural network and its training and testin

Amazon SageMaker Delta Sharing Examples

This repository contains examples and related resources showing you how to preprocess, train, and serve your models using Amazon SageMaker with data fetched from Delta Lake.

The full training script for Enformer (Tensorflow Sonnet) on TPU clusters

Enformer TPU training script (wip) The full training script for Enformer (Tensorflow Sonnet) on TPU clusters, in an effort to migrate the model to pyt

Training DALL-E with volunteers from all over the Internet using hivemind and dalle-pytorch (NeurIPS 2021 demo)

Training DALL-E with volunteers from all over the Internet This repository is a part of the NeurIPS 2021 demonstration "Training Transformers Together

Crowd sourced training data for Rasa NLU models

NLU Training Data Crowd-sourced training data for the development and testing of Rasa NLU models. If you're interested in grabbing some data feel free

Code, pre-trained models and saliency results for the paper "Boosting RGB-D Saliency Detection by Leveraging Unlabeled RGB Images".

Boosting RGB-D Saliency Detection by Leveraging Unlabeled RGB This repository is the official implementation of the paper. Our results comming soon in

Implementations of LSTM: A Search Space Odyssey variants and their training results on the PTB dataset.

An LSTM Odyssey Code for training variants of "LSTM: A Search Space Odyssey" on Fomoro. Check out the blog post. Training Install TensorFlow. Clone th

Contrastive unpaired image-to-image translation, faster and lighter training than cyclegan (ECCV 2020, in PyTorch)

Contrastive Unpaired Translation (CUT) video (1m) | video (10m) | website | paper We provide our PyTorch implementation of unpaired image-to-image tra

Official PyTorch implementation of the paper: DeepSIM: Image Shape Manipulation from a Single Augmented Training Sample

DeepSIM: Image Shape Manipulation from a Single Augmented Training Sample (ICCV 2021 Oral) Project | Paper Official PyTorch implementation of the pape

UI2I via StyleGAN2 - Unsupervised image-to-image translation method via pre-trained StyleGAN2 network

We proposed an unsupervised image-to-image translation method via pre-trained StyleGAN2 network. paper: Unsupervised Image-to-Image Translation via Pr

Preprossing-loan-data-with-NumPy - In this project, I have cleaned and pre-processed the loan data that belongs to an affiliate bank based in the United States.

Preprossing-loan-data-with-NumPy In this project, I have cleaned and pre-processed the loan data that belongs to an affiliate bank based in the United

Augmented CLIP - Training simple models to predict CLIP image embeddings from text embeddings, and vice versa.

Train aug_clip against laion400m-embeddings found here: https://laion.ai/laion-400-open-dataset/ - note that this used the base ViT-B/32 CLIP model. S

TrainingBike - Code, models and schematics I've used to interface my stationary training bike with PC.

TrainingBike Code, models and schematics I've used to interface my stationary training bike with PC. You can find more information about the project i

BasicNeuralNetwork - This project looks over the basic structure of a neural network and how machine learning training algorithms work

BasicNeuralNetwork - This project looks over the basic structure of a neural network and how machine learning training algorithms work. For this project, I used the sigmoid function as an activation function along with stochastic gradient descent to adjust the weights and biases.

KoBERT - Korean BERT pre-trained cased (KoBERT)

KoBERT KoBERT Korean BERT pre-trained cased (KoBERT) Why'?' Training Environment Requirements How to install How to use Using with PyTorch Using with

U-2-Net: U Square Net - Modified for paired image training of style transfer

U2-Net: U Square Net Modified for paired image training of style transfer This is an unofficial repo making use of the code which was made available b

Linescanning - Package for (pre)processing of anatomical and (linescanning) fMRI data

line scanning repository This repository contains all of the tools used during the acquisition and postprocessing of line scanning data at the Spinoza

StyleGAN2-ADA-training-jupyter - Training custom datasets in styleGAN2-ADA by NVIDIA using Jupyter

styleGAN2-ADA-training-jupyter Training custom datasets in styleGAN2-ADA on Jupyter Official StyleGAN2-ADA by NIVIDIA Paper Training Generative Advers

CIFAR-10_train-test - training and testing codes for dataset CIFAR-10

CIFAR-10_train-test - training and testing codes for dataset CIFAR-10

Training Cifar-10 Classifier Using VGG16

opevcvdl-hw3 This project uses pytorch and Qt to achieve the requirements. Version Python 3.6 opencv-contrib-python 3.4.2.17 Matplotlib 3.1.1 pyqt5 5.

SOTA easy to use PyTorch-based DL training library

Easily train or fine-tune SOTA computer vision models from one training repository. SuperGradients Introduction Welcome to SuperGradients, a free open

“Robust Lightweight Facial Expression Recognition Network with Label Distribution Training”, AAAI 2021.

EfficientFace Zengqun Zhao, Qingshan Liu, Feng Zhou. "Robust Lightweight Facial Expression Recognition Network with Label Distribution Training". AAAI

PyTorch Lightning + Hydra. A feature-rich template for rapid, scalable and reproducible ML experimentation with best practices. ⚡🔥⚡

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Pytorch implementation of paper Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data

Pytorch implementation of paper Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data

A simple consistency training framework for semi-supervised image semantic segmentation

PseudoSeg: Designing Pseudo Labels for Semantic Segmentation PseudoSeg is a simple consistency training framework for semi-supervised image semantic s

Semi-Supervised Semantic Segmentation with Cross-Consistency Training (CCT)

Semi-Supervised Semantic Segmentation with Cross-Consistency Training (CCT) Paper, Project Page This repo contains the official implementation of CVPR

Reduce end to end training time from days to hours (or hours to minutes), and energy requirements/costs by an order of magnitude using coresets and data selection.

COResets and Data Subset selection Reduce end to end training time from days to hours (or hours to minutes), and energy requirements/costs by an order

Implementation of ICLR 2020 paper "Revisiting Self-Training for Neural Sequence Generation"

Self-Training for Neural Sequence Generation This repo includes instructions for running noisy self-training algorithms from the following paper: Revi

implementation of the paper "MarginGAN: Adversarial Training in Semi-Supervised Learning"

MarginGAN This repository is the implementation of the paper "MarginGAN: Adversarial Training in Semi-Supervised Learning". 1."preliminary" is the imp

Training neural models with structured signals.

Neural Structured Learning in TensorFlow Neural Structured Learning (NSL) is a new learning paradigm to train neural networks by leveraging structured

PyTorch implementation for Graph Contrastive Learning with Augmentations

Graph Contrastive Learning with Augmentations PyTorch implementation for Graph Contrastive Learning with Augmentations [poster] [appendix] Yuning You*

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

In this project, two programs can help you take full agvantage of time on the model training with a remote server

In this project, two programs can help you take full agvantage of time on the model training with a remote server, which can push notification to your phone about the information during model training, like the model indices and unexpected interrupts. Then you can do something in time for your work.

GCC: Graph Contrastive Coding for Graph Neural Network Pre-Training @ KDD 2020

GCC: Graph Contrastive Coding for Graph Neural Network Pre-Training Original implementation for paper GCC: Graph Contrastive Coding for Graph Neural N

Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking."

Expert-Linking Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking." This is

Library for managing git hooks

Autohooks Library for managing and writing git hooks in Python. Looking for automatic formatting or linting, e.g., with black and pylint, while creati

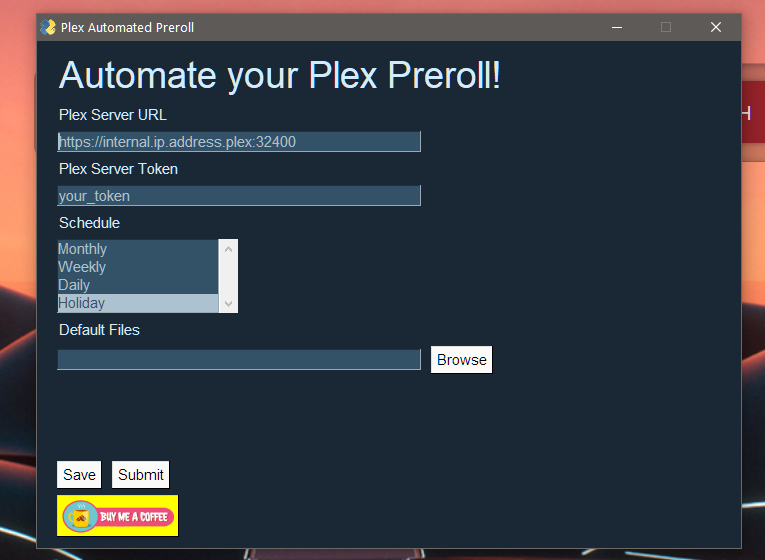

This is the new and improved Plex Automatic Pre-roll script with a GUI

Rollarr This is the new and improved Automatic Pre-roll script with a GUI for Plex now called Rollarr! It should be stable but if you find a bug pleas

Reproduce results and replicate training fo T0 (Multitask Prompted Training Enables Zero-Shot Task Generalization)

T-Zero This repository serves primarily as codebase and instructions for training, evaluation and inference of T0. T0 is the model developed in Multit

Align and Prompt: Video-and-Language Pre-training with Entity Prompts

ALPRO Align and Prompt: Video-and-Language Pre-training with Entity Prompts [Paper] Dongxu Li, Junnan Li, Hongdong Li, Juan Carlos Niebles, Steven C.H

Pre-Training Graph Neural Networks for Cold-Start Users and Items Representation.

Pretrain-Recsys This is our Tensorflow implementation for our WSDM 2021 paper: Bowen Hao, Jing Zhang, Hongzhi Yin, Cuiping Li, Hong Chen. Pre-Training

Pre-training of Graph Augmented Transformers for Medication Recommendation

G-Bert Pre-training of Graph Augmented Transformers for Medication Recommendation Intro G-Bert combined the power of Graph Neural Networks and BERT (B

Code for KDD'20 "Generative Pre-Training of Graph Neural Networks"

GPT-GNN: Generative Pre-Training of Graph Neural Networks GPT-GNN is a pre-training framework to initialize GNNs by generative pre-training. It can be

code for "Self-supervised edge features for improved Graph Neural Network training", arxivlink

Self-supervised edge features for improved Graph Neural Network training Data availability: Here is a link to the raw data for the organoids dataset.

![[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages](https://github.com/michiyasunaga/DrRepair/raw/master/DrRepair-overview.png)

[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages

DrRepair: Learning to Repair Programs from Error Messages This repo provides the source code & data of our paper: Graph-based, Self-Supervised Program

Autoregressive Predictive Coding: An unsupervised autoregressive model for speech representation learning

Autoregressive Predictive Coding This repository contains the official implementation (in PyTorch) of Autoregressive Predictive Coding (APC) proposed

Code and training data for our ECCV 2016 paper on Unsupervised Learning

Shuffle and Learn (Shuffle Tuple) Created by Ishan Misra Based on the ECCV 2016 Paper - "Shuffle and Learn: Unsupervised Learning using Temporal Order

![[NeurIPS'20] Self-supervised Co-Training for Video Representation Learning. Tengda Han, Weidi Xie, Andrew Zisserman.](https://github.com/TengdaHan/CoCLR/raw/main/asset/teaser.png)

[NeurIPS'20] Self-supervised Co-Training for Video Representation Learning. Tengda Han, Weidi Xie, Andrew Zisserman.

CoCLR: Self-supervised Co-Training for Video Representation Learning This repository contains the implementation of: InfoNCE (MoCo on videos) UberNCE

PyTorch code for training MM-DistillNet for multimodal knowledge distillation

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge MM-DistillNet is a

AdaFocus V2: End-to-End Training of Spatial Dynamic Networks for Video Recognition

AdaFocusV2 This repo contains the official code and pre-trained models for AdaFo

Deep Learning Training Scripts With Python

Deep Learning Training Scripts DNN Frameworks Caffe PyTorch Tensorflow CNN Models VGG ResNet DenseNet Inception Language Modeling GatedCNN-LM Attentio

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size.

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size. The hub data layout enables rapid transformations and streaming of data while training models at scale. Hub is used by Google, Waymo, Red Cross, Oxford University, and Omdena.

Code to use Augmented Shapiro Wilks Stopping, as well as code for the paper "Statistically Signifigant Stopping of Neural Network Training"

This codebase is being actively maintained, please create and issue if you have issues using it Basics All data files are included under losses and ea

PyTorch implementation of Rethinking Positional Encoding in Language Pre-training

TUPE PyTorch implementation of Rethinking Positional Encoding in Language Pre-training. Quickstart Clone this repository. git clone https://github.com