1482 Repositories

Python state-space-models Libraries

PyTorch implementation of the WarpedGANSpace: Finding non-linear RBF paths in GAN latent space (ICCV 2021)

Authors official PyTorch implementation of the "WarpedGANSpace: Finding non-linear RBF paths in GAN latent space" [ICCV 2021].

Training vision models with full-batch gradient descent and regularization

Stochastic Training is Not Necessary for Generalization -- Training competitive vision models without stochasticity This repository implements trainin

Blender addon to generate better building models from satellite imagery.

Blender addon to generate better building models from satellite imagery.

Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

RNN-for-Joint-NLU Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

TorchXRayVision: A library of chest X-ray datasets and models.

torchxrayvision A library for chest X-ray datasets and models. Including pre-trained models. ( 🎬 promo video about the project) Motivation: While the

A pytorch-based deep learning framework for multi-modal 2D/3D medical image segmentation

A 3D multi-modal medical image segmentation library in PyTorch We strongly believe in open and reproducible deep learning research. Our goal is to imp

Explainer for black box models that predict molecule properties

Explaining why that molecule exmol is a package to explain black-box predictions of molecules. The package uses model agnostic explanations to help us

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks, which modifies the input text with a textual template and directly uses PLMs to conduct pre-trained tasks. This library provides a standard, flexible and extensible framework to deploy the prompt-learning pipeline. OpenPrompt supports loading PLMs directly from huggingface transformers. In the future, we will also support PLMs implemented by other libraries.

deep-table implements various state-of-the-art deep learning and self-supervised learning algorithms for tabular data using PyTorch.

deep-table implements various state-of-the-art deep learning and self-supervised learning algorithms for tabular data using PyTorch.

pcnaDeep integrates cutting-edge detection techniques with tracking and cell cycle resolving models.

pcnaDeep: a deep-learning based single-cell cycle profiler with PCNA signal Welcome! pcnaDeep integrates cutting-edge detection techniques with tracki

A Chinese to English Neural Model Translation Project

ZH-EN NMT Chinese to English Neural Machine Translation This project is inspired by Stanford's CS224N NMT Project Dataset used in this project: News C

Pytorch implementation of paper "Efficient Nearest Neighbor Language Models" (EMNLP 2021)

Pytorch implementation of paper "Efficient Nearest Neighbor Language Models" (EMNLP 2021)

Interactive Terraform visualization. State and configuration explorer.

Rover - Terraform Visualizer Rover is a Terraform visualizer. In order to do this, Rover: generates a plan file and parses the configuration in the ro

Model factory is a ML training platform to help engineers to build ML models at scale

Model Factory Machine learning today is powering many businesses today, e.g., search engine, e-commerce, news or feed recommendation. Training high qu

Pre-trained BERT Models for Ancient and Medieval Greek, and associated code for LaTeCH 2021 paper titled - "A Pilot Study for BERT Language Modelling and Morphological Analysis for Ancient and Medieval Greek"

Ancient Greek BERT The first and only available Ancient Greek sub-word BERT model! State-of-the-art post fine-tuning on Part-of-Speech Tagging and Mor

Misc YOLOL scripts for use in the Starbase space sandbox videogame

starbase-misc Misc YOLOL scripts for use in the Starbase space sandbox videogame. Each directory contains standalone YOLOL scripts. They don't really

Densely Connected Search Space for More Flexible Neural Architecture Search (CVPR2020)

DenseNAS The code of the CVPR2020 paper Densely Connected Search Space for More Flexible Neural Architecture Search. Neural architecture search (NAS)

A PyTorch implementation of EfficientNet and EfficientNetV2 (coming soon!)

EfficientNet PyTorch Quickstart Install with pip install efficientnet_pytorch and load a pretrained EfficientNet with: from efficientnet_pytorch impor

PyTorch code accompanying our paper on Maximum Entropy Generators for Energy-Based Models

Maximum Entropy Generators for Energy-Based Models All experiments have tensorboard visualizations for samples / density / train curves etc. To run th

![A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]](https://user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png)

A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

A scalable template for PyTorch projects, with examples in Image Segmentation, Object classification, GANs and Reinforcement Learning.

PyTorch Project Template is being sponsored by the following tool; please help to support us by taking a look and signing up to a free trial PyTorch P

Pytorch implementations of various Deep NLP models in cs-224n(Stanford Univ)

DeepNLP-models-Pytorch Pytorch implementations of various Deep NLP models in cs-224n(Stanford Univ: NLP with Deep Learning) This is not for Pytorch be

A collection of various deep learning architectures, models, and tips

Deep Learning Models A collection of various deep learning architectures, models, and tips for TensorFlow and PyTorch in Jupyter Notebooks. Traditiona

Visualizer for neural network, deep learning, and machine learning models

Netron is a viewer for neural network, deep learning and machine learning models. Netron supports ONNX, TensorFlow Lite, Keras, Caffe, Darknet, ncnn,

Making decision trees competitive with neural networks on CIFAR10, CIFAR100, TinyImagenet200, Imagenet

Neural-Backed Decision Trees · Site · Paper · Blog · Video Alvin Wan, *Lisa Dunlap, *Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah

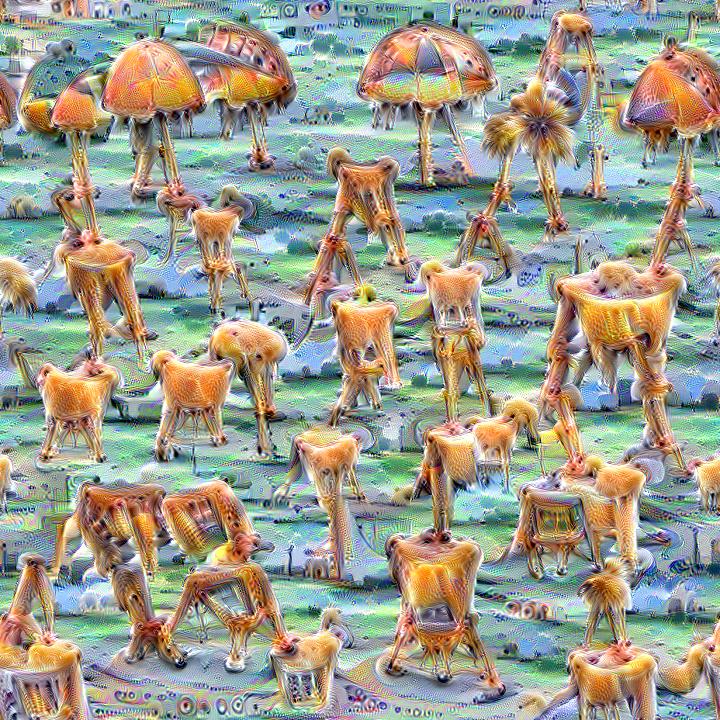

Quickly and easily create / train a custom DeepDream model

Dream-Creator This project aims to simplify the process of creating a custom DeepDream model by using pretrained GoogleNet models and custom image dat

Space shooter being built for PyWeek 32

Axium Humanity's expansion into space had lasted centuries by the time we encountered the vicious Threx. The Threx adopted a single, religious mission

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

A Tools that help Data Scientists and ML engineers train and deploy ML models.

Domino Research This repo contains projects under active development by the Domino R&D team. We build tools that help Data Scientists and ML engineers

fastgradio is a python library to quickly build and share gradio interfaces of your trained fastai models.

fastgradio is a python library to quickly build and share gradio interfaces of your trained fastai models.

GluonMM is a library of transformer models for computer vision and multi-modality research

GluonMM is a library of transformer models for computer vision and multi-modality research. It contains reference implementations of widely adopted baseline models and also research work from Amazon Research.

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming. Outperforming `GPT-3` on SuperGLUE Few-Shot text classification.

TruthfulQA: Measuring How Models Imitate Human Falsehoods

TruthfulQA: Measuring How Models Imitate Human Falsehoods

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

PyTorch implementation of convolutional neural networks-based text-to-speech synthesis models

Deepvoice3_pytorch PyTorch implementation of convolutional networks-based text-to-speech synthesis models: arXiv:1710.07654: Deep Voice 3: Scaling Tex

StarGAN - Official PyTorch Implementation (CVPR 2018)

StarGAN - Official PyTorch Implementation ***** New: StarGAN v2 is available at https://github.com/clovaai/stargan-v2 ***** This repository provides t

The PASS dataset: pretrained models and how to get the data - PASS: Pictures without humAns for Self-Supervised Pretraining

The PASS dataset: pretrained models and how to get the data - PASS: Pictures without humAns for Self-Supervised Pretraining

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

A script depending on VASP output for calculating Fermi-Softness.

Fermi softness calculation for Vienna Ab initio Simulation Package (VASP) Update 1.1.0: Big update: Rewrote the code. Use Bader atomic division instea

Discretized Integrated Gradients for Explaining Language Models (EMNLP 2021)

Discretized Integrated Gradients for Explaining Language Models (EMNLP 2021) Overview of paths used in DIG and IG. w is the word being attributed. The

Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models.

Tevatron Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models. The toolkit has a modularized

In this repository we have tested 3 VQA models on the ImageCLEF-2019 dataset.

Med-VQA In this repository we have tested 3 VQA models on the ImageCLEF-2019 dataset. Two of these are made on top of Facebook AI Reasearch's Multi-Mo

Implementation of Squeezenet in pytorch, pretrained models on Cifar 10 data to come

Pytorch Squeeznet Pytorch implementation of Squeezenet model as described in https://arxiv.org/abs/1602.07360 on cifar-10 Data. The definition of Sque

Reinforcement learning models in ViZDoom environment

DoomNet DoomNet is a ViZDoom agent trained by reinforcement learning. The agent is a neural network that outputs a probability of actions given only p

Sequence to Sequence Models with PyTorch

Sequence to Sequence models with PyTorch This repository contains implementations of Sequence to Sequence (Seq2Seq) models in PyTorch At present it ha

PICARD - Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models

This is the official implementation of the following paper: Torsten Scholak, Nathan Schucher, Dzmitry Bahdanau. PICARD - Parsing Incrementally for Con

Hierarchical unsupervised and semi-supervised topic models for sparse count data with CorEx

Anchored CorEx: Hierarchical Topic Modeling with Minimal Domain Knowledge Correlation Explanation (CorEx) is a topic model that yields rich topics tha

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

Neural network models for joint POS tagging and dependency parsing (CoNLL 2017-2018)

Neural Network Models for Joint POS Tagging and Dependency Parsing Implementations of joint models for POS tagging and dependency parsing, as describe

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Official pytorch implementation of "Scaling-up Disentanglement for Image Translation", ICCV 2021.

Official pytorch implementation of "Scaling-up Disentanglement for Image Translation", ICCV 2021.

Code and dataset for the EMNLP 2021 Finding paper "Can NLI Models Verify QA Systems’ Predictions?"

Code and dataset for the EMNLP 2021 Finding paper "Can NLI Models Verify QA Systems’ Predictions?"

A2T: Towards Improving Adversarial Training of NLP Models (EMNLP 2021 Findings)

A2T: Towards Improving Adversarial Training of NLP Models This is the source code for the EMNLP 2021 (Findings) paper "Towards Improving Adversarial T

EMNLP'2021: Can Language Models be Biomedical Knowledge Bases?

BioLAMA BioLAMA is biomedical factual knowledge triples for probing biomedical LMs. The triples are collected and pre-processed from three sources: CT

Code for evaluating Japanese pretrained models provided by NTT Ltd.

japanese-dialog-transformers 日本語の説明文はこちら This repository provides the information necessary to evaluate the Japanese Transformer Encoder-decoder dialo

TorchGeo is a PyTorch domain library, similar to torchvision, that provides datasets, transforms, samplers, and pre-trained models specific to geospatial data.

TorchGeo is a PyTorch domain library, similar to torchvision, that provides datasets, transforms, samplers, and pre-trained models specific to geospatial data.

The repository for the paper: Multilingual Translation via Grafting Pre-trained Language Models

Graformer The repository for the paper: Multilingual Translation via Grafting Pre-trained Language Models Graformer (also named BridgeTransformer in t

A Django application that provides country choices for use with forms, flag icons static files, and a country field for models.

Django Countries A Django application that provides country choices for use with forms, flag icons static files, and a country field for models. Insta

A BitField extension for Django Models

django-bitfield Provides a BitField like class (using a BigIntegerField) for your Django models. (If you're upgrading from a version before 1.2 the AP

This is a vision-based 3d model manipulation and control UI

Manipulation of 3D Models Using Hand Gesture This program allows user to manipulation 3D models (.obj format) with their hands. The project support bo

A collection of SOTA Image Classification Models in PyTorch

A collection of SOTA Image Classification Models in PyTorch

![[EMNLP 2021] LM-Critic: Language Models for Unsupervised Grammatical Error Correction](https://github.com/michiyasunaga/LM-Critic/raw/main/figs/local_optimality.png)

[EMNLP 2021] LM-Critic: Language Models for Unsupervised Grammatical Error Correction

LM-Critic: Language Models for Unsupervised Grammatical Error Correction This repo provides the source code & data of our paper: LM-Critic: Language M

Code & Models for 3DETR - an End-to-end transformer model for 3D object detection

3DETR: An End-to-End Transformer Model for 3D Object Detection PyTorch implementation and models for 3DETR. 3DETR (3D DEtection TRansformer) is a simp

Object Moderation Layer

django-oml Welcome to the documentation for django-oml! OML means Object Moderation Layer, the idea is to have a mixin model that allows you to modera

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Must-read papers on improving efficiency for pre-trained language models.

Must-read papers on improving efficiency for pre-trained language models.

Entropy-controlled contexts in Python

Python module ordered ordered module is the opposite to random - it maintains order in the program. import random x = 5 def increase(): global x

Keras attention models including botnet,CoaT,CoAtNet,CMT,cotnet,halonet,resnest,resnext,resnetd,volo,mlp-mixer,resmlp,gmlp,levit

Keras_cv_attention_models Keras_cv_attention_models Usage Basic Usage Layers Model surgery AotNet ResNetD ResNeXt ResNetQ BotNet VOLO ResNeSt HaloNet

ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models (ICCV 2021 Oral)

ILVR + ADM This is the implementation of ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models (ICCV 2021 Oral). This repository is h

A clean and robust Pytorch implementation of PPO on continuous action space.

PPO-Continuous-Pytorch I found the current implementation of PPO on continuous action space is whether somewhat complicated or not stable. And this is

Stor is a community-driven green cryptocurrency based on a proof of space and time consensus algorithm.

Stor Blockchain Stor is a community-driven green cryptocurrency based on a proof of space and time consensus algorithm. For more information, see our

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt. This is done by

Codebase for Diffusion Models Beat GANS on Image Synthesis.

Codebase for Diffusion Models Beat GANS on Image Synthesis.

Demonstrates how to divide a DL model into multiple IR model files (division) and introduce a simplest way to implement a custom layer works with OpenVINO IR models.

Demonstration of OpenVINO techniques - Model-division and a simplest-way to support custom layers Description: Model Optimizer in Intel(r) OpenVINO(tm

This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced in the paper titled "BanglaBERT: Combating Embedding Barrier in Multilingual Models for Low-Resource Language Understanding".

BanglaBERT This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced i

Fourier-Bayesian estimation of stochastic volatility models

fourier-bayesian-sv-estimation Fourier-Bayesian estimation of stochastic volatility models Code used to run the numerical examples of "Bayesian Approa

Code and checkpoints for training the transformer-based Table QA models introduced in the paper TAPAS: Weakly Supervised Table Parsing via Pre-training.

End-to-end neural table-text understanding models.

This repository accompanies our paper “Do Prompt-Based Models Really Understand the Meaning of Their Prompts?”

This repository accompanies our paper “Do Prompt-Based Models Really Understand the Meaning of Their Prompts?” Usage To replicate our results in Secti

PyTorch implementation of "Representing Shape Collections with Alignment-Aware Linear Models" paper.

deep-linear-shapes PyTorch implementation of "Representing Shape Collections with Alignment-Aware Linear Models" paper. If you find this code useful i

FLAML is a lightweight Python library that finds accurate machine learning models automatically, efficiently and economically

FLAML - Fast and Lightweight AutoML

InfraGenie is allows you to split out your infrastructure project into separate independent pieces, each with its own terraform state.

🧞 InfraGenie InfraGenie is allows you to split out your infrastructure project into separate independent pieces, each with its own terraform state. T

The code repository for EMNLP 2021 paper "Vision Guided Generative Pre-trained Language Models for Multimodal Abstractive Summarization".

Vision Guided Generative Pre-trained Language Models for Multimodal Abstractive Summarization [Paper] accepted at the EMNLP 2021: Vision Guided Genera

This repo uses a combination of logits and feature distillation method to teach the PSPNet model of ResNet18 backbone with the PSPNet model of ResNet50 backbone. All the models are trained and tested on the PASCAL-VOC2012 dataset.

PSPNet-logits and feature-distillation Introduction This repository is based on PSPNet and modified from semseg and Pixelwise_Knowledge_Distillation_P

Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

Pytorch-NLU,一个中文文本分类、序列标注工具包,支持中文长文本、短文本的多类、多标签分类任务,支持中文命名实体识别、词性标注、分词等序列标注任务。 Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

This repository contains various models targetting multimodal representation learning, multimodal fusion for downstream tasks such as multimodal sentiment analysis.

Multimodal Deep Learning 🎆 🎆 🎆 Announcing the multimodal deep learning repository that contains implementation of various deep learning-based model

Ranking Models in Unlabeled New Environments (iccv21)

Ranking Models in Unlabeled New Environments Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch 1.7.0 + torchivision 0.8.1

Abandoned plan for a clone of the old Flash game Star Relic

space-grid When I was in middle school, I was a fan of the Flash game Star Relic (no longer playable in modern browsers, but it works alright in Flash

pyntcloud is a Python library for working with 3D point clouds.

pyntcloud is a Python library for working with 3D point clouds.

Official implementation of particle-based models (GNS and DPI-Net) on the Physion dataset.

Physion: Evaluating Physical Prediction from Vision in Humans and Machines [paper] Daniel M. Bear, Elias Wang, Damian Mrowca, Felix J. Binder, Hsiao-Y

PaddleViT: State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 2.0+

PaddlePaddle Vision Transformers State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 🤖 PaddlePaddle Visual Transformers (PaddleViT or

The official repository for our paper "The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers". We significantly improve the systematic generalization of transformer models on a variety of datasets using simple tricks and careful considerations.

Codebase for training transformers on systematic generalization datasets. The official repository for our EMNLP 2021 paper The Devil is in the Detail:

Codes to pre-train Japanese T5 models

t5-japanese Codes to pre-train a T5 (Text-to-Text Transfer Transformer) model pre-trained on Japanese web texts. The model is available at https://hug

EMNLP 2021 Adapting Language Models for Zero-shot Learning by Meta-tuning on Dataset and Prompt Collections

Adapting Language Models for Zero-shot Learning by Meta-tuning on Dataset and Prompt Collections Ruiqi Zhong, Kristy Lee*, Zheng Zhang*, Dan Klein EMN

Ongoing research training transformer language models at scale, including: BERT & GPT-2

Megatron (1 and 2) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA.

Official implementation of the MM'21 paper Constrained Graphic Layout Generation via Latent Optimization

[MM'21] Constrained Graphic Layout Generation via Latent Optimization This repository provides the official code for the paper "Constrained Graphic La

Code for our ALiBi method for transformer language models.

Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation This repository contains the code and models for our paper Tra

A comprehensive CRUD API generator for SQLALchemy.

FastAPI Quick CRUD Introduction Advantage Constraint Getting started Installation Usage Design Path Parameter Query Parameter Request Body Upsert Intr