424 Repositories

Python visual-question-answering Libraries

An unsupervised learning framework for depth and ego-motion estimation from monocular videos

SfMLearner This codebase implements the system described in the paper: Unsupervised Learning of Depth and Ego-Motion from Video Tinghui Zhou, Matthew

Code for the paper: Audio-Visual Scene Analysis with Self-Supervised Multisensory Features

[Paper] [Project page] This repository contains code for the paper: Andrew Owens, Alexei A. Efros. Audio-Visual Scene Analysis with Self-Supervised Mu

Percy visual testing for Python Selenium

percy-selenium-python Percy visual testing for Python Selenium. Installation npm install @percy/cli: $ npm install --save-dev @percy/cli pip install P

A small script written in Python3 that generates a visual representation of the Mandelbrot set.

Mandelbrot Set Generator A small script written in Python3 that generates a visual representation of the Mandelbrot set. Abstract The colors in the ou

![[ACMMM 2021, Oral] Code release for](https://github.com/yikaiw/EIP/raw/main/assets/intro.png)

[ACMMM 2021, Oral] Code release for "Elastic Tactile Simulation Towards Tactile-Visual Perception"

EIP: Elastic Interaction of Particles Code release for "Elastic Tactile Simulation Towards Tactile-Visual Perception", in ACMMM (Oral) 2021. By Yikai

Visual odometry package based on hardware-accelerated NVIDIA Elbrus library with world class quality and performance.

Isaac ROS Visual Odometry This repository provides a ROS2 package that estimates stereo visual inertial odometry using the Isaac Elbrus GPU-accelerate

Python package for analyzing behavioral data for Brain Observatory: Visual Behavior

Allen Institute Visual Behavior Analysis package This repository contains code for analyzing behavioral data from the Allen Brain Observatory: Visual

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation Introduction WAKD is a PyTorch implementation for our ICPR-2022 pap

Cilantropy: a Python Package Manager interface created to provide an "easy-to-use" visual and also a command-line interface for Pythonistas.

Cilantropy Cilantropy is a Python Package Manager interface created to provide an "easy-to-use" visual and also a command-line interface for Pythonist

Dual languaged (rus+eng) tool for packing and unpacking archives of Silky Engine.

SilkyArcTool English Dual languaged (rus+eng) GUI tool for packing and unpacking archives of Silky Engine. It is not the same arc as used in Ai6WIN. I

This repository provides data for the VAW dataset as described in the CVPR 2021 paper titled "Learning to Predict Visual Attributes in the Wild"

Visual Attributes in the Wild (VAW) This repository provides data for the VAW dataset as described in the CVPR 2021 Paper: Learning to Predict Visual

[AAAI-2022] Official implementations of MCL: Mutual Contrastive Learning for Visual Representation Learning

Mutual Contrastive Learning for Visual Representation Learning This project provides source code for our Mutual Contrastive Learning for Visual Repres

Depth image based mouse cursor visual haptic

Depth image based mouse cursor visual haptic How to run it. Install pyqt5. Install python modules pip install Pillow pip install numpy For illustrati

QuALITY: Question Answering with Long Input Texts, Yes!

QuALITY: Question Answering with Long Input Texts, Yes! Authors: Richard Yuanzhe Pang,* Alicia Parrish,* Nitish Joshi,* Nikita Nangia, Jason Phang, An

![[CoRL 21'] TANDEM: Tracking and Dense Mapping in Real-time using Deep Multi-view Stereo](https://github.com/tum-vision/tandem/raw/master/assets/tandem_poster.jpg)

[CoRL 21'] TANDEM: Tracking and Dense Mapping in Real-time using Deep Multi-view Stereo

TANDEM: Tracking and Dense Mapping in Real-time using Deep Multi-view Stereo Lukas Koestler1* Nan Yang1,2*,† Niclas Zeller2,3 Daniel Cremers1

An open-source tool for visual and modular block programing in python

PyFlow PyFlow is an open-source tool for modular visual programing in python ! Although for now the tool is in Beta and features are coming in bit by

Robotics with GPU computing

Robotics with GPU computing Cupoch is a library that implements rapid 3D data processing for robotics using CUDA. The goal of this library is to imple

BERT-based Financial Question Answering System

BERT-based Financial Question Answering System In this example, we use Jina, PyTorch, and Hugging Face transformers to build a production-ready BERT-b

Rethinking Nearest Neighbors for Visual Classification

Rethinking Nearest Neighbors for Visual Classification arXiv Environment settings Check out scripts/env_setup.sh Setup data Download the following fin

Official repository of ICCV21 paper "Viewpoint Invariant Dense Matching for Visual Geolocalization"

Viewpoint Invariant Dense Matching for Visual Geolocalization: PyTorch implementation This is the implementation of the ICCV21 paper: G Berton, C. Mas

![MEAD: A Large-scale Audio-visual Dataset for Emotional Talking-face Generation [ECCV2020]](https://github.com/uniBruce/Mead/raw/master/Figures/mead.png)

MEAD: A Large-scale Audio-visual Dataset for Emotional Talking-face Generation [ECCV2020]

MEAD: A Large-scale Audio-visual Dataset for Emotional Talking-face Generation [ECCV2020] by Kaisiyuan Wang, Qianyi Wu, Linsen Song, Zhuoqian Yang, Wa

Code for Talking Face Generation by Adversarially Disentangled Audio-Visual Representation (AAAI 2019)

Talking Face Generation by Adversarially Disentangled Audio-Visual Representation (AAAI 2019) We propose Disentangled Audio-Visual System (DAVS) to ad

A Streamlit web-app for a data-science project that aims to evaluate if the answer to a question is helpful.

How useful is the aswer? A Streamlit web-app for a data-science project that aims to evaluate if the answer to a question is helpful. If you want to l

This repository contains the code, models and datasets discussed in our paper "Few-Shot Question Answering by Pretraining Span Selection"

Splinter This repository contains the code, models and datasets discussed in our paper "Few-Shot Question Answering by Pretraining Span Selection", to

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning. CVPR 2018

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning Tensorflow code and models for the paper: Large Scale Fine-Grained Categ

✔️ Visual, reactive testing library for Julia. Time machine included.

PlutoTest.jl (alpha release) Visual, reactive testing library for Julia A macro @test that you can use to verify your code's correctness. But instead

A Multi-modal Perception Tracker (MPT) for speaker tracking using both audio and visual modalities

MPT A Multi-modal Perception Tracker (MPT) for speaker tracking using both audio and visual modalities. Implementation for our AAAI 2022 paper: Multi-

Code of the paper "Shaping Visual Representations with Attributes for Few-Shot Learning (ASL)".

Shaping Visual Representations with Attributes for Few-Shot Learning This code implements the Shaping Visual Representations with Attributes for Few-S

🦎 A NeoVim plugin for highlighting visual selections like in a normal document editor!

🦎 HighStr.nvim A NeoVim plugin for highlighting visual selections like in a normal document editor! Demo TL;DR HighStr.nvim is a NeoVim plugin writte

Continuous Augmented Positional Embeddings (CAPE) implementation for PyTorch

PyTorch implementation of Continuous Augmented Positional Embeddings (CAPE), by Likhomanenko et al. Enhance your Transformer positional embeddings with easy-to-use augmentations!

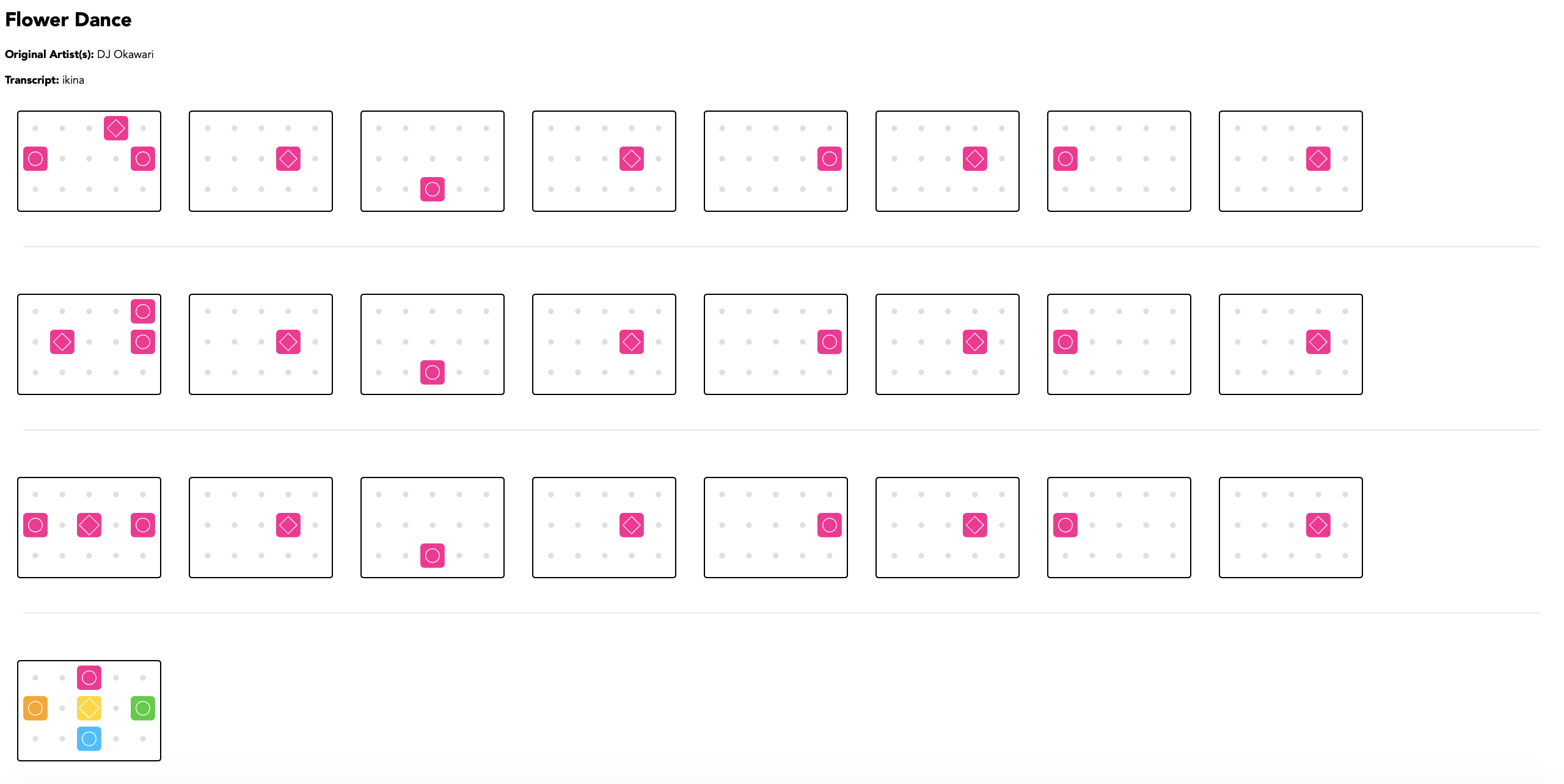

Make visual music sheets for thatskygame (graphical representations of the Sky keyboard)

sky-python-music-sheet-maker This program lets you make visual music sheets for Sky: Children of the Light. It will ask you a few questions, and does

Official Implementation of SimIPU: Simple 2D Image and 3D Point Cloud Unsupervised Pre-Training for Spatial-Aware Visual Representations

Official Implementation of SimIPU SimIPU: Simple 2D Image and 3D Point Cloud Unsupervised Pre-Training for Spatial-Aware Visual Representations Since

Visual Adversarial Imitation Learning using Variational Models (VMAIL)

Visual Adversarial Imitation Learning using Variational Models (VMAIL) This is the official implementation of the NeurIPS 2021 paper. Project website

A concise grammar of interactive graphics, built on Vega.

Vega-Lite Vega-Lite provides a higher-level grammar for visual analysis that generates complete Vega specifications. You can find more details, docume

Tensorflow 2 implementations of the C-SimCLR and C-BYOL self-supervised visual representation methods from "Compressive Visual Representations" (NeurIPS 2021)

Compressive Visual Representations This repository contains the source code for our paper, Compressive Visual Representations. We developed informatio

Class-Balanced Loss Based on Effective Number of Samples. CVPR 2019

Class-Balanced Loss Based on Effective Number of Samples Tensorflow code for the paper: Class-Balanced Loss Based on Effective Number of Samples Yin C

[CVPR 2020] Rethinking Class-Balanced Methods for Long-Tailed Visual Recognition from a Domain Adaptation Perspective

Rethinking Class-Balanced Methods for Long-Tailed Visual Recognition from a Domain Adaptation Perspective [Arxiv] This is PyTorch implementation of th

![[NeurIPS 2020] Code for the paper](https://github.com/jiawei-ren/BalancedMetaSoftmax/raw/main/cifar-10.png)

[NeurIPS 2020] Code for the paper "Balanced Meta-Softmax for Long-Tailed Visual Recognition"

Balanced Meta-Softmax Code for the paper Balanced Meta-Softmax for Long-Tailed Visual Recognition Jiawei Ren, Cunjun Yu, Shunan Sheng, Xiao Ma, Haiyu

[CVPR 2021] MetaSAug: Meta Semantic Augmentation for Long-Tailed Visual Recognition

MetaSAug: Meta Semantic Augmentation for Long-Tailed Visual Recognition (CVPR 2021) arXiv Prerequisite PyTorch = 1.2.0 Python3 torchvision PIL argpar

This repository contains code for the paper "Disentangling Label Distribution for Long-tailed Visual Recognition", published at CVPR' 2021

Disentangling Label Distribution for Long-tailed Visual Recognition (CVPR 2021) Arxiv link Blog post This codebase is built on Causal Norm. Install co

Official PyTorch Implementation of GAN-Supervised Dense Visual Alignment

GAN-Supervised Dense Visual Alignment — Official PyTorch Implementation Paper | Project Page | Video This repo contains training, evaluation and visua

Contact Extraction with Question Answering.

contactsQA Extraction of contact entities from address blocks and imprints with Extractive Question Answering. Goal Input: Dr. Max Mustermann Hauptstr

![[AAAI2022] Source code for our paper《Suppressing Static Visual Cues via Normalizing Flows for Self-Supervised Video Representation Learning》](https://github.com/mettyz/SSVC/raw/main/asset/sample.gif)

[AAAI2022] Source code for our paper《Suppressing Static Visual Cues via Normalizing Flows for Self-Supervised Video Representation Learning》

SSVC The source code for paper [Suppressing Static Visual Cues via Normalizing Flows for Self-Supervised Video Representation Learning] samples of the

HIVE: Evaluating the Human Interpretability of Visual Explanations

HIVE: Evaluating the Human Interpretability of Visual Explanations Project Page | Paper This repo provides the code for HIVE, a human evaluation frame

Data pipelines for both TensorFlow and PyTorch!

rapidnlp-datasets Data pipelines for both TensorFlow and PyTorch ! If you want to load public datasets, try: tensorflow/datasets huggingface/datasets

Lip Reading - Cross Audio-Visual Recognition using 3D Convolutional Neural Networks

Lip Reading - Cross Audio-Visual Recognition using 3D Convolutional Neural Networks - Official Project Page This repository contains the code develope

A visual dataflow programming language for sklearn

Persimmon What is it? Persimmon is a visual dataflow language for creating sklearn pipelines. It represents functions as blocks, inputs and outputs ar

OpenCodeBlocks an open-source tool for modular visual programing in python

OpenCodeBlocks OpenCodeBlocks is an open-source tool for modular visual programing in python ! Although for now the tool is in Beta and features are c

Answering Open-Domain Questions of Varying Reasoning Steps from Text

This repository contains the authors' implementation of the Iterative Retriever, Reader, and Reranker (IRRR) model in the EMNLP 2021 paper "Answering Open-Domain Questions of Varying Reasoning Steps from Text".

chaii - hindi & tamil question answering

chaii - hindi & tamil question answering This is the solution for rank 5th in Kaggle competition: chaii - Hindi and Tamil Question Answering. The comp

Code for ICLR 2020 paper "VL-BERT: Pre-training of Generic Visual-Linguistic Representations".

VL-BERT By Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, Jifeng Dai. This repository is an official implementation of the paper VL-BERT:

Vision-Language Pre-training for Image Captioning and Question Answering

VLP This repo hosts the source code for our AAAI2020 work Vision-Language Pre-training (VLP). We have released the pre-trained model on Conceptual Cap

Research Code for NeurIPS 2020 Spotlight paper "Large-Scale Adversarial Training for Vision-and-Language Representation Learning": UNITER adversarial training part

VILLA: Vision-and-Language Adversarial Training This is the official repository of VILLA (NeurIPS 2020 Spotlight). This repository currently supports

![[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations](https://github.com/kdexd/virtex/raw/master/docs/_static/system_figure.jpg)

[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations

VirTex: Learning Visual Representations from Textual Annotations Karan Desai and Justin Johnson University of Michigan CVPR 2021 arxiv.org/abs/2006.06

Fusion-in-Decoder Distilling Knowledge from Reader to Retriever for Question Answering

This repository contains code for: Fusion-in-Decoder models Distilling Knowledge from Reader to Retriever Dependencies Python 3 PyTorch (currently tes

Train Dense Passage Retriever (DPR) with a single GPU

Gradient Cached Dense Passage Retrieval Gradient Cached Dense Passage Retrieval (GC-DPR) - is an extension of the original DPR library. We introduce G

A Fast Knowledge Distillation Framework for Visual Recognition

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

PyTorch implementation of paper A Fast Knowledge Distillation Framework for Visual Recognition.

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion. NÜWA is a unified multimodal p

Predicting Event Memorability from Contextual Visual Semantics

Predicting Event Memorability from Contextual Visual Semantics

PyTorch implementation of "Debiased Visual Question Answering from Feature and Sample Perspectives" (NeurIPS 2021)

D-VQA We provide the PyTorch implementation for Debiased Visual Question Answering from Feature and Sample Perspectives (NeurIPS 2021). Dependencies P

This is a short program that takes the input from your microphone and uses OpenGL to draw a live colourful pattern

Visual-Music This is a short program that takes the input from your microphone and uses OpenGL to draw a live colourful pattern Installation and Setup

Toward a Visual Concept Vocabulary for GAN Latent Space, ICCV 2021

Toward a Visual Concept Vocabulary for GAN Latent Space Code and data from the ICCV 2021 paper Sarah Schwettmann, Evan Hernandez, David Bau, Samuel Kl

Pytorch Implementation of Zero-Shot Image-to-Text Generation for Visual-Semantic Arithmetic

Pytorch Implementation of Zero-Shot Image-to-Text Generation for Visual-Semantic Arithmetic [Paper] [Colab is coming soon] Approach Example Usage To r

Pytorch Implementation of Zero-Shot Image-to-Text Generation for Visual-Semantic Arithmetic

Pytorch Implementation of Zero-Shot Image-to-Text Generation for Visual-Semantic Arithmetic [Paper] [Colab is coming soon] Approach Example Usage To r

Source Code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chinese Question Matching

Description The source code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chin

PyTorch code of paper "LiVLR: A Lightweight Visual-Linguistic Reasoning Framework for Video Question Answering"

LiVLR-VideoQA We propose a Lightweight Visual-Linguistic Reasoning framework (LiVLR) for VideoQA. The overview of LiVLR: Evaluation on MSRVTT-QA Datas

A fast, dataset-agnostic, deep visual search engine for digital art history

imgs.ai imgs.ai is a fast, dataset-agnostic, deep visual search engine for digital art history based on neural network embeddings. It utilizes modern

An question and answer shell environment based on xonsh using ansible for setup

An question and answer shell environment based on xonsh using ansible for setup

A dual benchmarking study of visual forgery and visual forensics techniques

A dual benchmarking study of facial forgery and facial forensics In recent years, visual forgery has reached a level of sophistication that humans can

This repository contains all code and data for the Inside Out Visual Place Recognition task

Inside Out Visual Place Recognition This repository contains code and instructions to reproduce the results for the Inside Out Visual Place Recognitio

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

HybVIO visual-inertial odometry and SLAM system

HybVIO A visual-inertial odometry system with an optional SLAM module. This is a research-oriented codebase, which has been published for the purposes

Learning Convolutional Neural Networks with Interactive Visualization.

CNN Explainer An interactive visualization system designed to help non-experts learn about Convolutional Neural Networks (CNNs) For more information,

Code for database and frontend of webpage for Neural Fields in Visual Computing and Beyond.

Neural Fields in Visual Computing—Complementary Webpage This is based on the amazing MiniConf project from Hendrik Strobelt and Sasha Rush—thank you!

Revisiting Video Saliency: A Large-scale Benchmark and a New Model (CVPR18, PAMI19)

DHF1K =========================================================================== Wenguan Wang, J. Shen, M.-M Cheng and A. Borji, Revisiting Video Sal

Automated question generation and question answering from Turkish texts using text-to-text transformers

Turkish Question Generation Offical source code for "Automated question generation & question answering from Turkish texts using text-to-text transfor

AWS documentation corpus for zero-shot open-book question answering.

aws-documentation We present the AWS documentation corpus, an open-book QA dataset, which contains 25,175 documents along with 100 matched questions a

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: "NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion". NÜWA is a unified multimodal

![PyTorch implementation for the visual prior component (i.e. perception module) of the Visually Grounded Physics Learner [Li et al., 2020].](https://github.com/ToruOwO/VGPL-Visual-Prior/raw/public/imgs/MassRope.gif)

PyTorch implementation for the visual prior component (i.e. perception module) of the Visually Grounded Physics Learner [Li et al., 2020].

VGPL-Visual-Prior PyTorch implementation for the visual prior component (i.e. perception module) of the Visually Grounded Physics Learner (VGPL). Give

Question and answer retrieval in Turkish with BERT

trfaq Google supported this work by providing Google Cloud credit. Thank you Google for supporting the open source! 🎉 What is this? At this repo, I'm

Repo for our ICML21 paper Unsupervised Learning of Visual 3D Keypoints for Control

Unsupervised Learning of Visual 3D Keypoints for Control [Project Website] [Paper] Boyuan Chen1, Pieter Abbeel1, Deepak Pathak2 1UC Berkeley 2Carnegie

Creating multimodal multitask models

Fusion Brain Challenge The English version of the document can be found here. Обновления 01.11 Мы выкладываем пример данных, аналогичных private test

Falcon: Interactive Visual Analysis for Big Data

Falcon: Interactive Visual Analysis for Big Data Crossfilter millions of records without latencies. This project is work in progress and not documente

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models.

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models. Solve a variety of tasks with pre-trained models or finetune them in

![[NeurIPS 2021 Spotlight] Code for Learning to Compose Visual Relations](https://github.com/nanlliu/compose-visual-relations/raw/master/samples/generation_samples.gif)

[NeurIPS 2021 Spotlight] Code for Learning to Compose Visual Relations

Learning to Compose Visual Relations This is the pytorch codebase for the NeurIPS 2021 Spotlight paper Learning to Compose Visual Relations. Demo Imag

Visual Memorability for Robotic Interestingness via Unsupervised Online Learning (ECCV 2020 Oral and TRO)

Visual Interestingness Refer to the project description for more details. This code based on the following paper. Chen Wang, Yuheng Qiu, Wenshan Wang,

Blind visual quality assessment on 360° Video based on progressive learning

Blind visual quality assessment on omnidirectional or 360 video (ProVQA) Blind VQA for 360° Video via Progressively Learning from Pixels, Frames and V

Implementation of DocFormer: End-to-End Transformer for Document Understanding, a multi-modal transformer based architecture for the task of Visual Document Understanding (VDU)

DocFormer - PyTorch Implementation of DocFormer: End-to-End Transformer for Document Understanding, a multi-modal transformer based architecture for t

Question answering on russian with XLMRobertaLarge as a service

QA Roberta Ru SaaS Question answering on russian with XLMRobertaLarge as a service. Thanks for the model to Alexander Kaigorodov. Stack Flask Gunicorn

Towards Calibrated Model for Long-Tailed Visual Recognition from Prior Perspective

Towards Calibrated Model for Long-Tailed Visual Recognition from Prior Perspective Zhengzhuo Xu, Zenghao Chai, Chun Yuan This is the PyTorch implement

SalGAN: Visual Saliency Prediction with Generative Adversarial Networks

SalGAN: Visual Saliency Prediction with Adversarial Networks Junting Pan Cristian Canton Ferrer Kevin McGuinness Noel O'Connor Jordi Torres Elisa Sayr

CCQA A New Web-Scale Question Answering Dataset for Model Pre-Training

CCQA: A New Web-Scale Question Answering Dataset for Model Pre-Training This is the official repository for the code and models of the paper CCQA: A N

PyTorch implementation of MulMON

MulMON This repository contains a PyTorch implementation of the paper: Learning Object-Centric Representations of Multi-object Scenes from Multiple Vi

LIMEcraft: Handcrafted superpixel selectionand inspection for Visual eXplanations

LIMEcraft LIMEcraft: Handcrafted superpixel selectionand inspection for Visual eXplanations The LIMEcraft algorithm is an explanatory method based on

Summary of related papers on visual attention

This repo is built for paper: Attention Mechanisms in Computer Vision: A Survey paper Vision-Attention-Papers Channel attention Spatial attention Temp

RRxIO - Robust Radar Visual/Thermal Inertial Odometry: Robust and accurate state estimation even in challenging visual conditions.

RRxIO - Robust Radar Visual/Thermal Inertial Odometry RRxIO offers robust and accurate state estimation even in challenging visual conditions. RRxIO c

A "finish the lyrics" game using Spotify, YouTube Transcript, and YouTube Search APIs, coupled with visual machine learning

Singify Introducing Singify, the party game! Challenge your friend to who knows songs better. Play random songs from your very own Spotify playlist an

Visual Interaction with Code - A portable visual debugger for python

VIC Visual Interaction with Code A simple tool for debugging and interacting with running python code. This tool is designed to make it easy to inspec

PyTorch implementation for paper "Full-Body Visual Self-Modeling of Robot Morphologies".

Full-Body Visual Self-Modeling of Robot Morphologies Boyuan Chen, Robert Kwiatkowskig, Carl Vondrick, Hod Lipson Columbia University Project Website |