916 Repositories

Python Non-Local-Sparse-Attention Libraries

Focal Sparse Convolutional Networks for 3D Object Detection (CVPR 2022, Oral)

Focal Sparse Convolutional Networks for 3D Object Detection (CVPR 2022, Oral) This is the official implementation of Focals Conv (CVPR 2022), a new sp

Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memories using approximate nearest neighbors, in Pytorch

Memorizing Transformers - Pytorch Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memori

[CVPR 2022 Oral] MixFormer: End-to-End Tracking with Iterative Mixed Attention

MixFormer The official implementation of the CVPR 2022 paper MixFormer: End-to-End Tracking with Iterative Mixed Attention [Models and Raw results] (G

The official repo for OC-SORT: Observation-Centric SORT on video Multi-Object Tracking. OC-SORT is simple, online and robust to occlusion/non-linear motion.

OC-SORT Observation-Centric SORT (OC-SORT) is a pure motion-model-based multi-object tracker. It aims to improve tracking robustness in crowded scenes

Implementation of CVPR'2022:Reconstructing Surfaces for Sparse Point Clouds with On-Surface Priors

Reconstructing Surfaces for Sparse Point Clouds with On-Surface Priors (CVPR 2022) Personal Web Pages | Paper | Project Page This repository contains

Official implementation for "Style Transformer for Image Inversion and Editing" (CVPR 2022)

Style Transformer for Image Inversion and Editing (CVPR2022) https://arxiv.org/abs/2203.07932 Existing GAN inversion methods fail to provide latent co

Implementation of Deformable Attention in Pytorch from the paper "Vision Transformer with Deformable Attention"

Deformable Attention Implementation of Deformable Attention from this paper in Pytorch, which appears to be an improvement to what was proposed in DET

PyTorch implementation of U-TAE and PaPs for satellite image time series panoptic segmentation.

Panoptic Segmentation of Satellite Image Time Series with Convolutional Temporal Attention Networks (ICCV 2021) This repository is the official implem

🐤 Nix-TTS: An Incredibly Lightweight End-to-End Text-to-Speech Model via Non End-to-End Distillation

🐤 Nix-TTS An Incredibly Lightweight End-to-End Text-to-Speech Model via Non End-to-End Distillation Rendi Chevi, Radityo Eko Prasojo, Alham Fikri Aji

This repo presents you the official code of "VISTA: Boosting 3D Object Detection via Dual Cross-VIew SpaTial Attention"

VISTA VISTA: Boosting 3D Object Detection via Dual Cross-VIew SpaTial Attention Shengheng Deng, Zhihao Liang, Lin Sun and Kui Jia* (*) Corresponding a

Implementation of the Transformer variant proposed in "Transformer Quality in Linear Time"

FLASH - Pytorch Implementation of the Transformer variant proposed in the paper Transformer Quality in Linear Time Install $ pip install FLASH-pytorch

A Text Attention Network for Spatial Deformation Robust Scene Text Image Super-resolution (CVPR2022)

A Text Attention Network for Spatial Deformation Robust Scene Text Image Super-resolution (CVPR2022) https://arxiv.org/abs/2203.09388 Jianqi Ma, Zheto

Code release for "BoxeR: Box-Attention for 2D and 3D Transformers"

BoxeR By Duy-Kien Nguyen, Jihong Ju, Olaf Booij, Martin R. Oswald, Cees Snoek. This repository is an official implementation of the paper BoxeR: Box-A

Curso práctico: NLP de cero a cien 🤗

Curso Práctico: NLP de cero a cien Comprende todos los conceptos y arquitecturas clave del estado del arte del NLP y aplícalos a casos prácticos utili

SparseInst: Sparse Instance Activation for Real-Time Instance Segmentation, CVPR 2022

SparseInst 🚀 A simple framework for real-time instance segmentation, CVPR 2022 by Tianheng Cheng, Xinggang Wang†, Shaoyu Chen, Wenqiang Zhang, Qian Z

B-cos Networks: Attention is All we Need for Interpretability

Convolutional Dynamic Alignment Networks for Interpretable Classifications M. Böhle, M. Fritz, B. Schiele. B-cos Networks: Alignment is All we Need fo

Comprehensive-E2E-TTS - PyTorch Implementation

A Non-Autoregressive End-to-End Text-to-Speech (text-to-wav), supporting a family of SOTA unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate E2E-TTS

Multi-Scale Temporal Frequency Convolutional Network With Axial Attention for Speech Enhancement

MTFAA-Net Unofficial PyTorch implementation of Baidu's MTFAA-Net: "Multi-Scale Temporal Frequency Convolutional Network With Axial Attention for Speec

[CVPR 2022] Structured Sparse R-CNN for Direct Scene Graph Generation

Structured Sparse R-CNN for Direct Scene Graph Generation Our paper Structured Sparse R-CNN for Direct Scene Graph Generation has been accepted by CVP

Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch

CoCa - Pytorch Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch. They were able to elegantly fit in contras

![[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).](https://github.com/Graph-COM/GSAT/raw/main/data/arch.png)

[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).

Graph Stochastic Attention (GSAT) The official implementation of GSAT for our paper: Interpretable and Generalizable Graph Learning via Stochastic Att

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

🧬 Non-linear feature reduction using Deep Autoencoders and Breast Cancer classification.

Project summary This repository contains the implementation of my bachelor degree project. The aim of the project is to apply non-linear feature reduc

Codes for "Efficient Long-Range Attention Network for Image Super-resolution"

ELAN Codes for "Efficient Long-Range Attention Network for Image Super-resolution", arxiv link. Dependencies & Installation Please refer to the follow

Official implementation of cosformer-attention in cosFormer: Rethinking Softmax in Attention

cosFormer Official implementation of cosformer-attention in cosFormer: Rethinking Softmax in Attention Update log 2022/2/28 Add core code License This

QuadTree Attention for Vision Transformers (ICLR2022)

This repository contains codes for quadtree attention. This repo contains codes for feature matching, image classficiation, object detection and seman

Machine learning beginner to Kaggle competitor in 30 days. Non-coders welcome. The program starts Monday, August 2, and lasts four weeks. It's designed for people who want to learn machine learning.

30-Days-of-ML-Kaggle 🔥 About the Hands On Program 💻 Machine learning beginner → Kaggle competitor in 30 days. Non-coders welcome The program starts

(CVPR 2022) Pytorch implementation of "Self-supervised transformers for unsupervised object discovery using normalized cut"

(CVPR 2022) TokenCut Pytorch implementation of Tokencut: Self-supervised Transformers for Unsupervised Object Discovery using Normalized Cut Yangtao W

.png)

Contextual Attention Network: Transformer Meets U-Net

Contextual Attention Network: Transformer Meets U-Net Contexual attention network for medical image segmentation with state of the art results on skin

SciFive: a text-text transformer model for biomedical literature

SciFive SciFive provided a Text-Text framework for biomedical language and natural language in NLP. Under the T5's framework and desrbibed in the pape

Official pytorch implementation for Learning to Listen: Modeling Non-Deterministic Dyadic Facial Motion (CVPR 2022)

Learning to Listen: Modeling Non-Deterministic Dyadic Facial Motion This repository contains a pytorch implementation of "Learning to Listen: Modeling

Official implementation for "QS-Attn: Query-Selected Attention for Contrastive Learning in I2I Translation" (CVPR 2022)

QS-Attn: Query-Selected Attention for Contrastive Learning in I2I Translation (CVPR2022) https://arxiv.org/abs/2203.08483 Unpaired image-to-image (I2I

Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capability)

Protein GLM (wip) Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capabil

Implementation of 🦩 Flamingo, state-of-the-art few-shot visual question answering attention net out of Deepmind, in Pytorch

🦩 Flamingo - Pytorch Implementation of Flamingo, state-of-the-art few-shot visual question answering attention net, in Pytorch. It will include the p

Implementation of the GVP-Transformer, which was used in the paper "Learning inverse folding from millions of predicted structures" for de novo protein design alongside Alphafold2

GVP Transformer (wip) Implementation of the GVP-Transformer, which was used in the paper Learning inverse folding from millions of predicted structure

Official Implementation of HRDA: Context-Aware High-Resolution Domain-Adaptive Semantic Segmentation

HRDA: Context-Aware High-Resolution Domain-Adaptive Semantic Segmentation by Lukas Hoyer, Dengxin Dai, and Luc Van Gool [Arxiv] [Paper] Overview Unsup

![[CVPRW 2022] Attentions Help CNNs See Better: Attention-based Hybrid Image Quality Assessment Network](https://github.com/IIGROUP/AHIQ/raw/main/Figures/architecture.png)

[CVPRW 2022] Attentions Help CNNs See Better: Attention-based Hybrid Image Quality Assessment Network

Attention Helps CNN See Better: Hybrid Image Quality Assessment Network [CVPRW 2022] Code for Hybrid Image Quality Assessment Network [paper] [code] T

Example of Telegram local API and aiogram 3.x

Telegram Local Full example of Telegram local application. Contains Telegram Bot API Local Telegram Bot API server based on aiogram Bot API Server ima

code for paper"A High-precision Semantic Segmentation Method Combining Adversarial Learning and Attention Mechanism"

PyTorch implementation of UAGAN(U-net Attention Generative Adversarial Networks) This repository contains the source code for the paper "A High-precis

The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation"

SD-AANet The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation" [arxiv] Overview confi

Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch

MeMOT - Pytorch (wip) Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch. This paper is just one in a line of work, but importan

Attention-based CNN-LSTM and XGBoost hybrid model for stock prediction

Attention-based CNN-LSTM and XGBoost hybrid model for stock prediction Requirements The code has been tested running under Python 3.7.4, with the foll

Implementation of the Hybrid Perception Block and Dual-Pruned Self-Attention block from the ITTR paper for Image to Image Translation using Transformers

ITTR - Pytorch Implementation of the Hybrid Perception Block (HPB) and Dual-Pruned Self-Attention (DPSA) block from the ITTR paper for Image to Image

A variational Bayesian method for similarity learning in non-rigid image registration (CVPR 2022)

A variational Bayesian method for similarity learning in non-rigid image registration We provide the source code and the trained models used in the re

Backend app for visualizing CANedge log files in Grafana (directly from local disk or S3)

CANedge Grafana Backend - Visualize CAN/LIN Data in Dashboards This project enables easy dashboard visualization of log files from the CANedge CAN/LIN

A simple MTProto-based bot that can download various types of media (10MB) on a local storage

TG Media Downloader Bot 🤖 A telegram bot based on Pyrogram that downloads on a local storage the following media files: animation, audio, document, p

Implementation of a memory efficient multi-head attention as proposed in the paper, "Self-attention Does Not Need O(n²) Memory"

Memory Efficient Attention Pytorch Implementation of a memory efficient multi-head attention as proposed in the paper, Self-attention Does Not Need O(

Repository for JIDA SNP Browser Web Application: Local Deployment

JIDA JIDA is a web application that retrieves SNP information for a genomic region of interest in Homo sapiens and calculates specific summary statist

Using this repository you can send mails to multiple recipients.Was created in support of Ukraine, to turn society`s attention to war.

mails-in-support-of-UA Using this repository you can send mails to multiple recipients.Was created in support of Ukraine, to turn society`s attention

OpenSea Bulk Uploader And Trader 100000 NFTs (MAC WINDOWS ANDROID LINUX) Automatically and massively upload and sell your non-fungible tokens on OpenSea using Python Selenium

OpenSea Bulk Uploader And Trader 100000 NFTs (MAC WINDOWS ANDROID LINUX) Automatically and massively upload and sell your non-fungible tokens on OpenS

A tool to prepare websites grabbed with wget for local viewing.

makelocal A tool to prepare websites grabbed with wget for local viewing. exapmples After fetching xkcd.com with: wget -r -no-remove-listing -r -N --p

source code for 'Finding Valid Adjustments under Non-ignorability with Minimal DAG Knowledge' by A. Shah, K. Shanmugam, K. Ahuja

Source code for "Finding Valid Adjustments under Non-ignorability with Minimal DAG Knowledge" Reference: Abhin Shah, Karthikeyan Shanmugam, Kartik Ahu

PyTorch Implementation of DiffGAN-TTS: High-Fidelity and Efficient Text-to-Speech with Denoising Diffusion GANs

DiffGAN-TTS - PyTorch Implementation PyTorch implementation of DiffGAN-TTS: High

Vrcwatch - Supply the local time to VRChat as Avatar Parameters through OSC

English: README-EN.md VRCWatch VRCWatch は、VRChat 内のアバター向けに現在時刻を送信するためのプログラムです。 使

Job-Recommend-Competition - Vectorwise Interpretable Attentions for Multimodal Tabular Data

SiD - Simple Deep Model Vectorwise Interpretable Attentions for Multimodal Tabul

Adversarial-Information-Bottleneck - Distilling Robust and Non-Robust Features in Adversarial Examples by Information Bottleneck (NeurIPS21)

NeurIPS 2021 Title: Distilling Robust and Non-Robust Features in Adversarial Exa

Repo-cloner - Script takes user public liked repos and clone it to a local folder

Liked repos cloner Script takes user public liked repos and clone it to a local

PyTorch implementation of NATSpeech: A Non-Autoregressive Text-to-Speech Framework

A Non-Autoregressive Text-to-Speech (NAR-TTS) framework, including official PyTorch implementation of PortaSpeech (NeurIPS 2021) and DiffSpeech (AAAI 2022)

The Unreasonable Effectiveness of Random Pruning: Return of the Most Naive Baseline for Sparse Training

[ICLR 2022] The Unreasonable Effectiveness of Random Pruning: Return of the Most Naive Baseline for Sparse Training The Unreasonable Effectiveness of

Python implementation of NARS (Non-Axiomatic-Reasoning-System)

Python implementation of NARS (Non-Axiomatic-Reasoning-System)

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

End-to-end image captioning with EfficientNet-b3 + LSTM with Attention

Image captioning End-to-end image captioning with EfficientNet-b3 + LSTM with Attention Model is seq2seq model. In the encoder pretrained EfficientNet

Simple local RPG turn-based to play while learn something using the anki system

Simple local RPG turn-based to play while learn something using the anki system

HAIS_2GNN: 3D Visual Grounding with Graph and Attention

HAIS_2GNN: 3D Visual Grounding with Graph and Attention This repository is for the HAIS_2GNN research project. Tao Gu, Yue Chen Introduction The motiv

Non-Vacuous Generalisation Bounds for Shallow Neural Networks

This package requires jax, tensorflow, and numpy. Either tensorflow or scikit-learn can be used for loading data. To run in a nix-shell with required

DCSAU-Net: A Deeper and More Compact Split-Attention U-Net for Medical Image Segmentation

DCSAU-Net: A Deeper and More Compact Split-Attention U-Net for Medical Image Segmentation By Qing Xu, Wenting Duan and Na He Requirements pytorch==1.1

Framework for Spectral Clustering on the Sparse Coefficients of Learned Dictionaries

Dictionary Learning for Clustering on Hyperspectral Images Overview Framework for Spectral Clustering on the Sparse Coefficients of Learned Dictionari

The mini-AlphaStar (mini-AS, or mAS) - mini-scale version (non-official) of the AlphaStar (AS)

A mini-scale reproduction code of the AlphaStar program. Note: the original AlphaStar is the AI proposed by DeepMind to play StarCraft II.

Source code for the paper "Periodic Traveling Waves in an Integro-Difference Equation With Non-Monotonic Growth and Strong Allee Effect"

Source code for the paper "Periodic Traveling Waves in an Integro-Difference Equation With Non-Monotonic Growth and Strong Allee Effect" by Michael Ne

To create a deep learning model which can explain the content of an image in the form of speech through caption generation with attention mechanism on Flickr8K dataset.

To create a deep learning model which can explain the content of an image in the form of speech through caption generation with attention mechanism on Flickr8K dataset.

A pure PyTorch implementation of the loss described in "Online Segment to Segment Neural Transduction"

ssnt-loss ℹ️ This is a WIP project. the implementation is still being tested. A pure PyTorch implementation of the loss described in "Online Segment t

Cascaded Deep Video Deblurring Using Temporal Sharpness Prior and Non-local Spatial-Temporal Similarity

This repository is the official PyTorch implementation of Cascaded Deep Video Deblurring Using Temporal Sharpness Prior and Non-local Spatial-Temporal Similarity

An implementation of IMLE-Net: An Interpretable Multi-level Multi-channel Model for ECG Classification

IMLE-Net: An Interpretable Multi-level Multi-channel Model for ECG Classification The repostiory consists of the code, results and data set links for

Pytorch implementation of the paper "Enhancing Content Preservation in Text Style Transfer Using Reverse Attention and Conditional Layer Normalization"

Pytorch implementation of the paper "Enhancing Content Preservation in Text Style Transfer Using Reverse Attention and Conditional Layer Normalization"

Ipscanner - A simple threaded IP-Scanner written in python3 that can monitor local IP's in your network

IPScanner 🔬 A simple threaded IP-Scanner written in python3 that can monitor lo

A simple non-official manager interface I'm using for my Raspberry Pis.

My Raspberry Pi Manager Overview I have two Raspberry Pi 4 Model B devices that I hooked up to my two TVs (one in my bedroom and the other in my new g

A simple python script to dump remote files through a local file read or local file inclusion web vulnerability.

A simple python script to dump remote files through a local file read or local file inclusion web vulnerability. Features Dump a single file w

An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax

Simple Transformer An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax. Note: The only ex

SPLADE: Sparse Lexical and Expansion Model for First Stage Ranking

SPLADE 🍴 + 🥄 = 🔎 This repository contains the weights for four models as well as the code for running inference for our two papers: [v1]: SPLADE: S

Near-Optimal Sparse Allreduce for Distributed Deep Learning (published in PPoPP'22)

Near-Optimal Sparse Allreduce for Distributed Deep Learning (published in PPoPP'22) Ok-Topk is a scheme for distributed training with sparse gradients

Global-Local Path Networks for Monocular Depth Estimation with Vertical CutDepth [Paper]

Global-Local Path Networks for Monocular Depth Estimation with Vertical CutDepth [Paper] Downloads [Downloads] Trained ckpt files for NYU Depth V2 and

Very large and sparse networks appear often in the wild and present unique algorithmic opportunities and challenges for the practitioner

Sparse network learning with snlpy Very large and sparse networks appear often in the wild and present unique algorithmic opportunities and challenges

PyTorch implementation for the paper Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime

Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime Created by Prarthana Bhattacharyya. Disclaimer: This is n

PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks

AttentionHTR PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks. Scene Text

Framework for training options with different attention mechanism and using them to solve downstream tasks.

Using Attention in HRL Framework for training options with different attention mechanism and using them to solve downstream tasks. Requirements GPU re

Official code of Team Yao at Multi-Modal-Fact-Verification-2022

Official code of Team Yao at Multi-Modal-Fact-Verification-2022 A Multi-Modal Fact Verification dataset released as part of the De-Factify workshop in

This repository is for Contrastive Embedding Distribution Refinement and Entropy-Aware Attention Network (CEDR)

CEDR This repository is for Contrastive Embedding Distribution Refinement and Entropy-Aware Attention Network (CEDR) introduced in the following paper

A script to find the people whom you follow, but they don't follow you back

insta-non-followers A script to find the people whom you follow, but they don't follow you back Dependencies: python3 libraries - instaloader, getpass

GBSLocalLauncher - A script to compose ENV file for Local Compose

GBSLocalLauncher This is a script to compose ENV file for Local Compose. It crea

OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive models

OptiPLANT OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive mod

Sinkformers: Transformers with Doubly Stochastic Attention

Code for the paper : "Sinkformers: Transformers with Doubly Stochastic Attention" Paper You will find our paper here. Compat This package has been dev

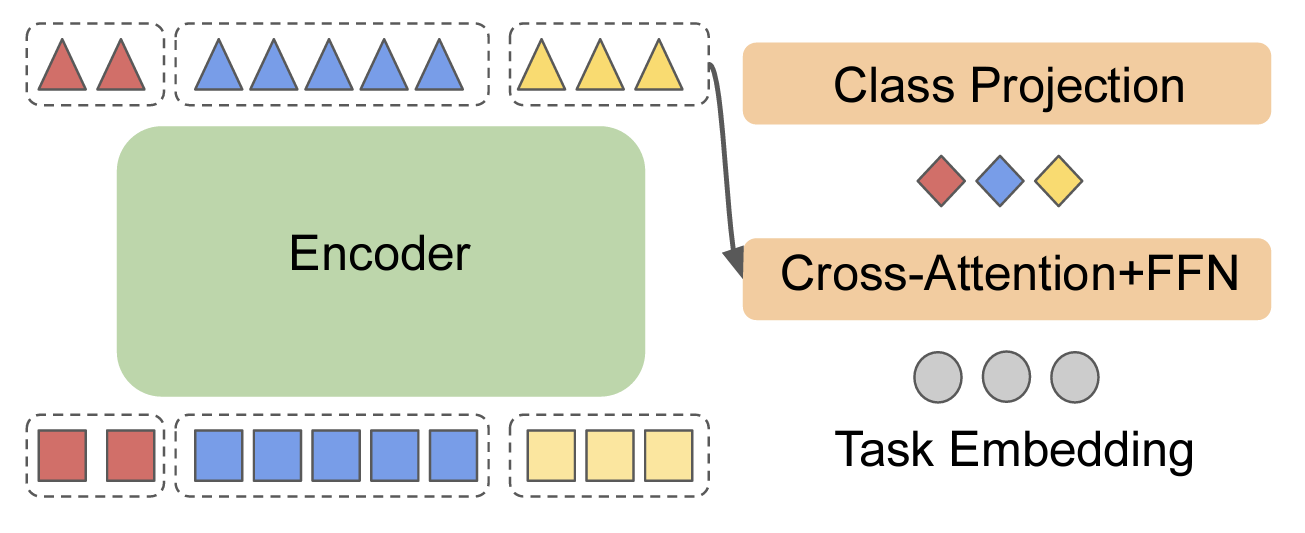

EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks

EncT5 (Unofficial) Pytorch Implementation of EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks About Finetune T5 model for classification & r

This is the repo of the manuscript "Dual-branch Attention-In-Attention Transformer for speech enhancement"

DB-AIAT: A Dual-branch attention-in-attention transformer for single-channel SE

Evidential Softmax for Sparse Multimodal Distributions in Deep Generative Models

Evidential Softmax for Sparse Multimodal Distributions in Deep Generative Models Abstract Many applications of generative models rely on the marginali

Sdf sparse conv - Deep Learning on SDF for Classifying Brain Biomarkers

Deep Learning on SDF for Classifying Brain Biomarkers To reproduce the results f

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images [ICCV 2021] © Mahmood Lab - This code is made avail

Static Features Classifier - A static features classifier for Point-Could clusters using an Attention-RNN model

Static Features Classifier This is a static features classifier for Point-Could

Built a deep neural network (DNN) that functions as an end-to-end machine translation pipeline

Built a deep neural network (DNN) that functions as an end-to-end machine translation pipeline. The pipeline accepts english text as input and returns the French translation.

This project is the implementation template for HW 0 and HW 1 for both the programming and non-programming tracks

This project is the implementation template for HW 0 and HW 1 for both the programming and non-programming tracks

Ingestinator is my personal VFX pipeline tool for ingesting folders containing frame sequences that have been pulled and downloaded to a local folder

Ingestinator Ingestinator is my personal VFX pipeline tool for ingesting folders containing frame sequences that have been pulled and downloaded to a