806 Repositories

Python Anomaly-Transformer Libraries

ICCV2021, Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet

Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet, ICCV 2021 Update: 2021/03/11: update our new results. Now our T2T-ViT-14 w

This is a collection of our NAS and Vision Transformer work.

AutoML - Neural Architecture Search This is a collection of our AutoML-NAS work iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vi

ICCV2021 Papers with Code

ICCV2021 Papers with Code

Efficient Training of Audio Transformers with Patchout

PaSST: Efficient Training of Audio Transformers with Patchout This is the implementation for Efficient Training of Audio Transformers with Patchout Pa

Flaxformer: transformer architectures in JAX/Flax

Flaxformer: transformer architectures in JAX/Flax Flaxformer is a transformer library for primarily NLP and multimodal research at Google. It is used

TransCD: Scene Change Detection via Transformer-based Architecture

TransCD: Scene Change Detection via Transformer-based Architecture

Official Pytorch implementation of 'RoI Tanh-polar Transformer Network for Face Parsing in the Wild.'

Official Pytorch implementation of 'RoI Tanh-polar Transformer Network for Face Parsing in the Wild.'

METER: Multimodal End-to-end TransformER

METER Code and pre-trained models will be publicized soon. Citation @article{dou2021meter, title={An Empirical Study of Training End-to-End Vision-a

Deploy optimized transformer based models on Nvidia Triton server

Deploy optimized transformer based models on Nvidia Triton server

Charsiu: A transformer-based phonetic aligner

Charsiu: A transformer-based phonetic aligner [arXiv] Note. This is a preview version. The aligner is under active development. New functions, new lan

Image Restoration Using Swin Transformer for VapourSynth

SwinIR SwinIR function for VapourSynth, based on https://github.com/JingyunLiang/SwinIR. Dependencies NumPy PyTorch, preferably with CUDA. Note that t

This tool parses log data and allows to define analysis pipelines for anomaly detection.

logdata-anomaly-miner This tool parses log data and allows to define analysis pipelines for anomaly detection. It was designed to run the analysis wit

An Open-Source Toolkit for Prompt-Learning.

An Open-Source Framework for Prompt-learning. Overview • Installation • How To Use • Docs • Paper • Citation • What's New? Nov 2021: Now we have relea

A Convolutional Transformer for Keyword Spotting

☢️ Audiomer ☢️ Audiomer: A Convolutional Transformer for Keyword Spotting [ arXiv ] [ Previous SOTA ] [ Model Architecture ] Results on SpeechCommands

Time Series Forecasting with Temporal Fusion Transformer in Pytorch

Forecasting with the Temporal Fusion Transformer Multi-horizon forecasting often contains a complex mix of inputs – including static (i.e. time-invari

Pytorch library for fast transformer implementations

Transformers are very successful models that achieve state of the art performance in many natural language tasks

A treasure chest for visual recognition powered by PaddlePaddle

简体中文 | English PaddleClas 简介 飞桨图像识别套件PaddleClas是飞桨为工业界和学术界所准备的一个图像识别任务的工具集,助力使用者训练出更好的视觉模型和应用落地。 近期更新 2021.11.1 发布PP-ShiTu技术报告,新增饮料识别demo 2021.10.23 发

toroidal - a lightweight transformer library for PyTorch

toroidal - a lightweight transformer library for PyTorch Toroidal transformers are of smaller size and lower weight than the more common E-I types. Th

Code for "Discovering Non-monotonic Autoregressive Orderings with Variational Inference" (paper and code updated from ICLR 2021)

Discovering Non-monotonic Autoregressive Orderings with Variational Inference Description This package contains the source code implementation of the

![[NeurIPS 2021]](https://github.com/VITA-Group/DePT/raw/main/figs/dept_pipeline.png)

[NeurIPS 2021] "Delayed Propagation Transformer: A Universal Computation Engine towards Practical Control in Cyber-Physical Systems"

Delayed Propagation Transformer: A Universal Computation Engine towards Practical Control in Cyber-Physical Systems Introduction Multi-agent control i

GUI implementation of a Transformer chatbot. Suggests amicable responses to messages from friends.

conversation-helper GUI implementation of a Transformer chatbot. Suggests amicable responses to messages from friends. Screenshots Upcoming Release Im

A transformer model to predict pathogenic mutations

MutFormer MutFormer is an application of the BERT (Bidirectional Encoder Representations from Transformers) NLP (Natural Language Processing) model wi

OoD Minimum Anomaly Score GAN - Code for the Paper 'OMASGAN: Out-of-Distribution Minimum Anomaly Score GAN for Sample Generation on the Boundary'

OMASGAN: Out-of-Distribution Minimum Anomaly Score GAN for Sample Generation on the Boundary Out-of-Distribution Minimum Anomaly Score GAN (OMASGAN) C

Official project repository for 'Normality-Calibrated Autoencoder for Unsupervised Anomaly Detection on Data Contamination'

NCAE_UAD Official project repository of 'Normality-Calibrated Autoencoder for Unsupervised Anomaly Detection on Data Contamination' Abstract In this p

LightLog is an open source deep learning based lightweight log analysis tool for log anomaly detection.

LightLog Introduction LightLog is an open source deep learning based lightweight log analysis tool for log anomaly detection. Function description [BG

A pre-trained model with multi-exit transformer architecture.

ElasticBERT This repository contains finetuning code and checkpoints for ElasticBERT. Towards Efficient NLP: A Standard Evaluation and A Strong Baseli

Data, model training, and evaluation code for "PubTables-1M: Towards a universal dataset and metrics for training and evaluating table extraction models".

PubTables-1M This repository contains training and evaluation code for the paper "PubTables-1M: Towards a universal dataset and metrics for training a

ElasticBERT: A pre-trained model with multi-exit transformer architecture.

This repository contains finetuning code and checkpoints for ElasticBERT. Towards Efficient NLP: A Standard Evaluation and A Strong Baseli

Anomaly Detection Based on Hierarchical Clustering of Mobile Robot Data

We proposed a new approach to detect anomalies of mobile robot data. We investigate each data seperately with two clustering method hierarchical and k-means. There are two sub-method that we used for produce an anomaly score. Then, we merge these two score and produce merged anomaly score as a result.

(Python, R, C/C++) Isolation Forest and variations such as SCiForest and EIF, with some additions (outlier detection + similarity + NA imputation)

IsoTree Fast and multi-threaded implementation of Extended Isolation Forest, Fair-Cut Forest, SCiForest (a.k.a. Split-Criterion iForest), and regular

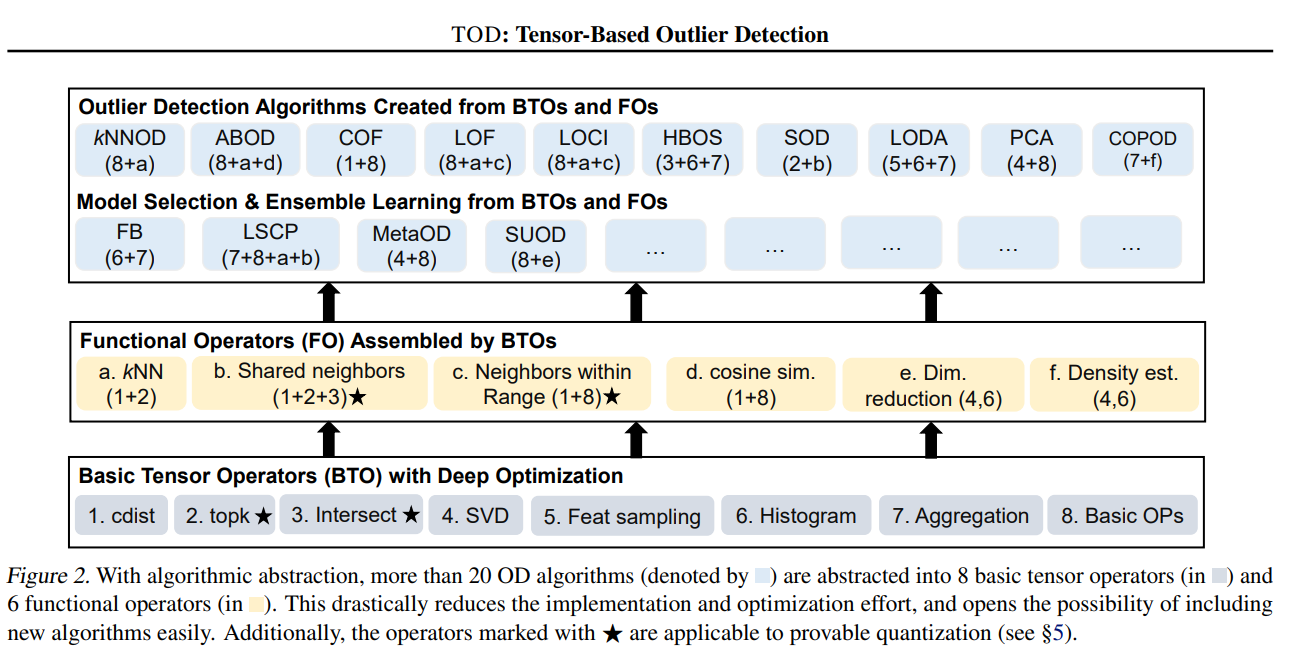

(Py)TOD: Tensor-based Outlier Detection, A General GPU-Accelerated Framework

(Py)TOD: Tensor-based Outlier Detection, A General GPU-Accelerated Framework Background: Outlier detection (OD) is a key data mining task for identify

Machine Translation Implement By Bi-GRU And Transformer

Seq2Seq Translation Implement By Bidirectional GRU And Transformer In Pytorch Before You Run The Code You should download the data through the link be

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrai

Implementation of ConvMixer-Patches Are All You Need? in TensorFlow and Keras

Patches Are All You Need? - ConvMixer ConvMixer, an extremely simple model that is similar in spirit to the ViT and the even-more-basic MLP-Mixer in t

Multivariate Time Series Transformer, public version

Multivariate Time Series Transformer Framework This code corresponds to the paper: George Zerveas et al. A Transformer-based Framework for Multivariat

Test-Time Personalization with a Transformer for Human Pose Estimation, NeurIPS 2021

Transforming Self-Supervision in Test Time for Personalizing Human Pose Estimation This is an official implementation of the NeurIPS 2021 paper: Trans

Implementation of Enformer, Deepmind's attention network for predicting gene expression, in Pytorch

Enformer - Pytorch (wip) Implementation of Enformer, Deepmind's attention network for predicting gene expression, in Pytorch. The original tensorflow

Video Background Music Generation with Controllable Music Transformer (ACM MM 2021 Oral)

CMT Code for paper Video Background Music Generation with Controllable Music Transformer (ACM MM 2021 Best Paper Award) [Paper] [Site] Directory Struc

Spatial-Temporal Transformer for Dynamic Scene Graph Generation, ICCV2021

Spatial-Temporal Transformer for Dynamic Scene Graph Generation Pytorch Implementation of our paper Spatial-Temporal Transformer for Dynamic Scene Gra

SOFT: Softmax-free Transformer with Linear Complexity, NeurIPS 2021 Spotlight

SOFT: Softmax-free Transformer with Linear Complexity SOFT: Softmax-free Transformer with Linear Complexity, Jiachen Lu, Jinghan Yao, Junge Zhang, Xia

The Official Repository for "Generalized OOD Detection: A Survey"

Generalized Out-of-Distribution Detection: A Survey 1. Overview This repository is with our survey paper: Title: Generalized Out-of-Distribution Detec

The official implementation of paper Siamese Transformer Pyramid Networks for Real-Time UAV Tracking, accepted by WACV22

SiamTPN Introduction This is the official implementation of the SiamTPN (WACV2022). The tracker intergrates pyramid feature network and transformer in

Huggingface transformers for discord

disformers Huggingface transformers for discord base source butyr/huggingface-transformer-chatbots install pip install -U disformers example see examp

Source code of our BMVC 2021 paper: AniFormer: Data-driven 3D Animation with Transformer

AniFormer This is the PyTorch implementation of our BMVC 2021 paper AniFormer: Data-driven 3D Animation with Transformer. Haoyu Chen, Hao Tang, Nicu S

Unofficial implementation of MUSIQ (Multi-Scale Image Quality Transformer)

MUSIQ: Multi-Scale Image Quality Transformer Unofficial pytorch implementation of the paper "MUSIQ: Multi-Scale Image Quality Transformer" (paper link

Source code of our BMVC 2021 paper: AniFormer: Data-driven 3D Animation with Transformer

AniFormer This is the PyTorch implementation of our BMVC 2021 paper AniFormer: Data-driven 3D Animation with Transformer. Haoyu Chen, Hao Tang, Nicu S

The Official Repository for "Generalized OOD Detection: A Survey"

Generalized Out-of-Distribution Detection: A Survey 1. Overview This repository is with our survey paper: Title: Generalized Out-of-Distribution Detec

A Word Level Transformer layer based on PyTorch and 🤗 Transformers.

Transformer Embedder A Word Level Transformer layer based on PyTorch and 🤗 Transformers. How to use Install the library from PyPI: pip install transf

Deep Learning to Create StepMania SM FIles

StepCOVNet Running Audio to SM File Generator Currently only produces .txt files. Use SMDataTools to convert .txt to .sm python stepmania_note_generat

Official Pytorch implementation of the paper "Action-Conditioned 3D Human Motion Synthesis with Transformer VAE", ICCV 2021

ACTOR Official Pytorch implementation of the paper "Action-Conditioned 3D Human Motion Synthesis with Transformer VAE", ICCV 2021. Please visit our we

Patch SVDD for Image anomaly detection

Patch SVDD Patch SVDD for Image anomaly detection. Paper: https://arxiv.org/abs/2006.16067 (published in ACCV 2020). Original Code : https://github.co

Pytorch implementation of paper "Learning Co-segmentation by Segment Swapping for Retrieval and Discovery"

SegSwap Pytorch implementation of paper "Learning Co-segmentation by Segment Swapping for Retrieval and Discovery" [PDF] [Project page] If our project

PyTorch image models, scripts, pretrained weights -- ResNet, ResNeXT, EfficientNet, EfficientNetV2, NFNet, Vision Transformer, MixNet, MobileNet-V3/V2, RegNet, DPN, CSPNet, and more

PyTorch Image Models Sponsors What's New Introduction Models Features Results Getting Started (Documentation) Train, Validation, Inference Scripts Awe

This is a collection of simple PyTorch implementations of neural networks and related algorithms. These implementations are documented with explanations,

labml.ai Deep Learning Paper Implementations This is a collection of simple PyTorch implementations of neural networks and related algorithms. These i

An NLP library with Awesome pre-trained Transformer models and easy-to-use interface, supporting wide-range of NLP tasks from research to industrial applications.

简体中文 | English News [2021-10-12] PaddleNLP 2.1版本已发布!新增开箱即用的NLP任务能力、Prompt Tuning应用示例与生成任务的高性能推理! 🎉 更多详细升级信息请查看Release Note。 [2021-08-22]《千言:面向事实一致性的生

Code of ICCV paper: Rethinking Transformer-based Set Prediction for Object Detection

Rethinking Transformer-based Set Prediction for Object Detection Here are the code for the ICCV paper. The code is adapted from Detectron2 and AdelaiD

Production First and Production Ready End-to-End Speech Recognition Toolkit

WeNet 中文版 Discussions | Docs | Papers | Runtime (x86) | Runtime (android) | Pretrained Models We share neural Net together. The main motivation of WeN

The official implementation of paper Siamese Transformer Pyramid Networks for Real-Time UAV Tracking, accepted by WACV22

SiamTPN Introduction This is the official implementation of the SiamTPN (WACV2022). The tracker intergrates pyramid feature network and transformer in

Official implementation of "Synthetic Temporal Anomaly Guided End-to-End Video Anomaly Detection" (ICCV Workshops 2021: RSL-CV).

Official PyTorch implementation of "Synthetic Temporal Anomaly Guided End-to-End Video Anomaly Detection" This is the implementation of the paper "Syn

Implementation of the paper "Generating Symbolic Reasoning Problems with Transformer GANs"

Generating Symbolic Reasoning Problems with Transformer GANs This is the implementation of the paper Generating Symbolic Reasoning Problems with Trans

Video Swin Transformer - PyTorch

Video-Swin-Transformer-Pytorch This repo is a simple usage of the official implementation "Video Swin Transformer". Introduction Video Swin Transforme

Source code for "Taming Visually Guided Sound Generation" (Oral at the BMVC 2021)

Taming Visually Guided Sound Generation • [Project Page] • [ArXiv] • [Poster] • • Listen for the samples on our project page. Overview We propose to t

Offcial implementation of "A Hybrid Video Anomaly Detection Framework via Memory-Augmented Flow Reconstruction and Flow-Guided Frame Prediction, ICCV-2021".

HF2-VAD Offcial implementation of "A Hybrid Video Anomaly Detection Framework via Memory-Augmented Flow Reconstruction and Flow-Guided Frame Predictio

xFormers is a modular and field agnostic library to flexibly generate transformer architectures by interoperable and optimized building blocks.

Description xFormers is a modular and field agnostic library to flexibly generate transformer architectures by interoperable and optimized building bl

Transformer Based Korean Sentence Spacing Corrector

TKOrrector Transformer Based Korean Sentence Spacing Corrector License Summary This solution is made available under Apache 2 license. See the LICENSE

Continuous-Time Sequential Recommendation with Temporal Graph Collaborative Transformer

Introduction This is the repository of our accepted CIKM 2021 paper "Continuous-Time Sequential Recommendation with Temporal Graph Collaborative Trans

High-Fidelity Pluralistic Image Completion with Transformers (ICCV 2021)

Image Completion Transformer (ICT) Project Page | Paper (ArXiv) | Pre-trained Models | Supplemental Material This repository is the official pytorch i

Official implementation for paper: A Latent Transformer for Disentangled Face Editing in Images and Videos.

A Latent Transformer for Disentangled Face Editing in Images and Videos Official implementation for paper: A Latent Transformer for Disentangled Face

Officially unofficial re-implementation of paper: Paint Transformer: Feed Forward Neural Painting with Stroke Prediction, ICCV 2021.

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction [Paper] [Official Paddle Implementation] [Huggingface Gradio Demo] [Unofficial

Styled Handwritten Text Generation with Transformers (ICCV 21)

⚡ Handwriting Transformers [PDF] Ankan Kumar Bhunia, Salman Khan, Hisham Cholakkal, Rao Muhammad Anwer, Fahad Shahbaz Khan & Mubarak Shah Abstract: We

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Anomaly detection in multi-agent trajectories: Code for training, evaluation and the OpenAI highway simulation.

Anomaly Detection in Multi-Agent Trajectories for Automated Driving This is the official project page including the paper, code, simulation, baseline

Source code for GNN-LSPE (Graph Neural Networks with Learnable Structural and Positional Representations)

Graph Neural Networks with Learnable Structural and Positional Representations Source code for the paper "Graph Neural Networks with Learnable Structu

Source code for Transformer-based Multi-task Learning for Disaster Tweet Categorisation (UCD's participation in TREC-IS 2020A, 2020B and 2021A).

Source code for "UCD participation in TREC-IS 2020A, 2020B and 2021A". *** update at: 2021/05/25 This repo so far relates to the following work: Trans

Official implementation for paper: A Latent Transformer for Disentangled Face Editing in Images and Videos.

A Latent Transformer for Disentangled Face Editing in Images and Videos Official implementation for paper: A Latent Transformer for Disentangled Face

Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer

MT5_paddle Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer English | 简体中文 mT5: A Massively

Pytorch reimplementation of the Vision Transformer (An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale)

Vision Transformer Pytorch reimplementation of Google's repository for the ViT model that was released with the paper An Image is Worth 16x16 Words: T

Implementation of ConvMixer in TensorFlow and Keras

ConvMixer ConvMixer, an extremely simple model that is similar in spirit to the ViT and the even-more-basic MLP-Mixer in that it operates directly on

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision Training Efficiency We show the training efficiency of our DSLP model b

A curated list of awesome resources combining Transformers with Neural Architecture Search

A curated list of awesome resources combining Transformers with Neural Architecture Search

Official repo for BMVC2021 paper ASFormer: Transformer for Action Segmentation

ASFormer: Transformer for Action Segmentation This repo provides training & inference code for BMVC 2021 paper: ASFormer: Transformer for Action Segme

Rethinking Transformer-based Set Prediction for Object Detection

Rethinking Transformer-based Set Prediction for Object Detection Here are the code for the ICCV paper. The code is adapted from Detectron2 and AdelaiD

A collection of papers about Transformer in the field of medical image analysis.

A collection of papers about Transformer in the field of medical image analysis.

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision Training Efficiency We show the training efficiency of our DSLP model b

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation We propose a novel approach to translate unpaired contrast computed

FG-transformer-TTS Fine-grained style control in transformer-based text-to-speech synthesis

LST-TTS Official implementation for the paper Fine-grained style control in transformer-based text-to-speech synthesis. Submitted to ICASSP 2022. Audi

Code for the preprint "Well-classified Examples are Underestimated in Classification with Deep Neural Networks"

This is a repository for the paper of "Well-classified Examples are Underestimated in Classification with Deep Neural Networks" The implementation and

Quantum-enhanced transformer neural network

Example of a Quantum-enhanced transformer neural network Get the code: git clone https://github.com/rdisipio/qtransformer.git cd qtransformer Create

This is an official implementation for "Swin Transformer: Hierarchical Vision Transformer using Shifted Windows" on Semantic Segmentation.

Swin Transformer for Semantic Segmentation of satellite images This repo contains the supported code and configuration files to reproduce semantic seg

This package implements THOR: Transformer with Stochastic Experts.

THOR: Transformer with Stochastic Experts This PyTorch package implements Taming Sparsely Activated Transformer with Stochastic Experts. Installation

PyTorch implementation for paper StARformer: Transformer with State-Action-Reward Representations.

StARformer This repository contains the PyTorch implementation for our paper titled StARformer: Transformer with State-Action-Reward Representations.

Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation.

Unified-EPT Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation. Installation Linux, CUDA=10.0,

![[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer](https://github.com/AllenXiangX/SnowflakeNet/raw/main/pics/network.png)

[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer

This repository contains the source code for the paper SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer (ICCV 2021 Oral). The project page is here.

Pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering".

TRAnsformer Routing Networks (TRAR) This is an official implementation for ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visu

Unofficial PyTorch implementation of MobileViT based on paper "MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer".

MobileViT RegNet Unofficial PyTorch implementation of MobileViT based on paper MOBILEVIT: LIGHT-WEIGHT, GENERAL-PURPOSE, AND MOBILE-FRIENDLY VISION TR

Google's Meena transformer chatbot implementation

Here's my attempt at recreating Meena, a state of the art chatbot developed by Google Research and described in the paper Towards a Human-like Open-Domain Chatbot.

Mesh Graphormer is a new transformer-based method for human pose and mesh reconsruction from an input image

MeshGraphormer ✨ ✨ This is our research code of Mesh Graphormer. Mesh Graphormer is a new transformer-based method for human pose and mesh reconsructi

3D-Transformer: Molecular Representation with Transformer in 3D Space

3D-Transformer: Molecular Representation with Transformer in 3D Space

![[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax](https://github.com/scaomath/galerkin-transformer/raw/main/data/simple_ft.png)

[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax

[NeurIPS 2021] Galerkin Transformer: linear attention without softmax Summary A non-numerical analyst oriented explanation on Toward Data Science abou

Improving 3D Object Detection with Channel-wise Transformer

"Improving 3D Object Detection with Channel-wise Transformer" Thanks for the OpenPCDet, this implementation of the CT3D is mainly based on the pcdet v