926 Repositories

Python memory-efficient-attention Libraries

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

Markov Attention Models

Introduction This repo contains code for reproducing the results in the paper Graphical Models with Attention for Context-Specific Independence and an

Parameter Efficient Deep Probabilistic Forecasting

PEDPF Parameter Efficient Deep Probabilistic Forecasting (PEDPF) is a repository containing code to run experiments for several deep learning based pr

Python implementation of ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images, AAAI2022.

ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images Binh M. Le & Simon S. Woo, "ADD:

Bayesian Deep Learning and Deep Reinforcement Learning for Object Shape Error Response and Correction of Manufacturing Systems

Bayesian Deep Learning for Manufacturing 2.0 (dlmfg) Object Shape Error Response (OSER) Digital Lifecycle Management - In Process Quality Improvement

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design"

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design". CoordAttention tensorflow slim

Robust Lane Detection via Expanded Self Attention (WACV 2022)

Robust Lane Detection via Expanded Self Attention (WACV 2022) Minhyeok Lee, Junhyeop Lee, Dogyoon Lee, Woojin Kim, Sangwon Hwang, Sangyoun Lee Overvie

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

Neural Caption Generator with Attention

Neural Caption Generator with Attention Tensorflow implementation of "Show

TensorFlow implementation of the paper "Hierarchical Attention Networks for Document Classification"

Hierarchical Attention Networks for Document Classification This is an implementation of the paper Hierarchical Attention Networks for Document Classi

End-To-End Memory Network using Tensorflow

MemN2N Implementation of End-To-End Memory Networks with sklearn-like interface using Tensorflow. Tasks are from the bAbl dataset. Get Started git clo

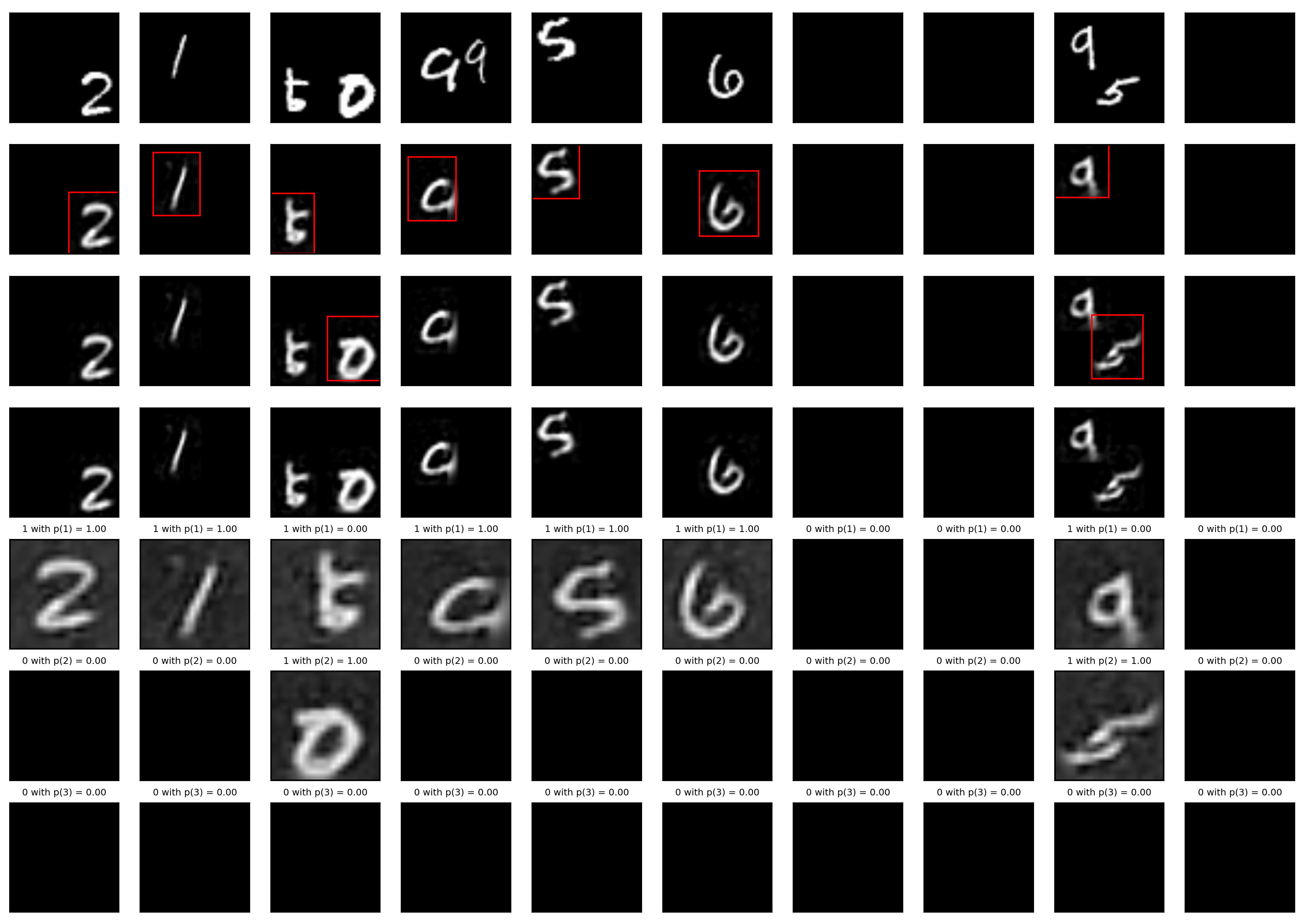

Hierarchical Attentive Recurrent Tracking

Hierarchical Attentive Recurrent Tracking This is an official Tensorflow implementation of single object tracking in videos by using hierarchical atte

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

Official Pytorch Implementation of Relational Self-Attention: What's Missing in Attention for Video Understanding

Relational Self-Attention: What's Missing in Attention for Video Understanding This repository is the official implementation of "Relational Self-Atte

Official implementation of the paper Label-Efficient Semantic Segmentation with Diffusion Models

Label-Efficient Semantic Segmentation with Diffusion Models Official implementation of the paper Label-Efficient Semantic Segmentation with Diffusion

Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch

N-Grammer - Pytorch Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch Install $ pip install n-grammer-pytorch Usage

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds PCAM: Product of Cross-Attention Matrices for Rigid Registration of P

Tiny-NewsRec: Efficient and Effective PLM-based News Recommendation

Tiny-NewsRec The source codes for our paper "Tiny-NewsRec: Efficient and Effective PLM-based News Recommendation". Requirements PyTorch == 1.6.0 Tensor

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Efficient Two-Step Networks for Temporal Action Segmentation (Neurocomputing 2021)

Efficient Two-Step Networks for Temporal Action Segmentation This repository provides a PyTorch implementation of the paper Efficient Two-Step Network

This project is created to visualize the system statistics such as memory usage, CPU usage, memory accessible by process and much more using Kibana Dashboard with Elasticsearch.

System Stats Visualizer This project is created to visualize the system statistics such as memory usage, CPU usage, memory accessible by process and m

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

A simple and efficient computing package for Genshin Impact gacha analysis

GGanalysisLite计算包 这个版本的计算包追求计算速度,而GGanalysis包有着更多计算功能。 GGanalysisLite包通过卷积计算分布列,通过FFT和快速幂加速卷积计算。 测试玩家得到的排名值rank的数学意义是:与抽了同样数量五星的其他玩家相比,测试玩家花费的抽数大于等于比例

MoViNets PyTorch implementation: Mobile Video Networks for Efficient Video Recognition;

MoViNet-pytorch Pytorch unofficial implementation of MoViNets: Mobile Video Networks for Efficient Video Recognition. Authors: Dan Kondratyuk, Liangzh

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

Rainbow DQN implementation accompanying the paper "Fast and Data-Efficient Training of Rainbow" which reaches 205.7 median HNS after 10M frames. 🌈

Rainbow 🌈 An implementation of Rainbow DQN which reaches a median HNS of 205.7 after only 10M frames (the original Rainbow from Hessel et al. 2017 re

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

End-2-end speech synthesis with recurrent neural networks

Introduction New: Interactive demo using Google Colaboratory can be found here TTS-Cube is an end-2-end speech synthesis system that provides a full p

(ACL-IJCNLP 2021) Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models.

BERT Convolutions Code for the paper Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models. Contains expe

Train Dense Passage Retriever (DPR) with a single GPU

Gradient Cached Dense Passage Retrieval Gradient Cached Dense Passage Retrieval (GC-DPR) - is an extension of the original DPR library. We introduce G

A Fast Knowledge Distillation Framework for Visual Recognition

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Paddle Graph Learning (PGL) is an efficient and flexible graph learning framework based on PaddlePaddle

DOC | Quick Start | 中文 Breaking News !! 🔥 🔥 🔥 OGB-LSC KDD CUP 2021 winners announced!! (2021.06.17) Super excited to announce our PGL team won TWO

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

A PyTorch Implementation of PGL-SUM from "Combining Global and Local Attention with Positional Encoding for Video Summarization", Proc. IEEE ISM 2021

PGL-SUM: Combining Global and Local Attention with Positional Encoding for Video Summarization PyTorch Implementation of PGL-SUM From "PGL-SUM: Combin

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving

GSAN Introduction Code for paper GSAN: Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving, wh

PyTorch implementation of paper A Fast Knowledge Distillation Framework for Visual Recognition.

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

BERT Attention Analysis

BERT Attention Analysis This repository contains code for What Does BERT Look At? An Analysis of BERT's Attention. It includes code for getting attent

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Source code for "Efficient Training of BERT by Progressively Stacking"

Introduction This repository is the code to reproduce the result of Efficient Training of BERT by Progressively Stacking. The code is based on Fairseq

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed+Megatron trained the world's most powerful language model: MT-530B DeepSpeed is hiring, come join us! DeepSpeed is a deep learning optimizat

[ACL-IJCNLP 2021] "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets"

EarlyBERT This is the official implementation for the paper in ACL-IJCNLP 2021 "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets" by

Research code for "What to Pre-Train on? Efficient Intermediate Task Selection", EMNLP 2021

efficient-task-transfer This repository contains code for the experiments in our paper "What to Pre-Train on? Efficient Intermediate Task Selection".

PyTorch implementation of the ACL, 2021 paper Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks.

Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks This repo contains the PyTorch implementation of the ACL, 2021 pa

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Efficient training of deep recommenders on cloud.

HybridBackend Introduction HybridBackend is a training framework for deep recommenders which bridges the gap between evolving cloud infrastructure and

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

Official Repository for "Robust On-Policy Data Collection for Data Efficient Policy Evaluation" (NeurIPS 2021 Workshop on OfflineRL).

Robust On-Policy Data Collection for Data-Efficient Policy Evaluation Source code of Robust On-Policy Data Collection for Data-Efficient Policy Evalua

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Official PyTorch Implementation of HELP: Hardware-adaptive Efficient Latency Prediction for NAS via Meta-Learning (NeurIPS 2021 Spotlight)

[NeurIPS 2021 Spotlight] HELP: Hardware-adaptive Efficient Latency Prediction for NAS via Meta-Learning [Paper] This is Official PyTorch implementatio

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

Sample Code for "Pessimism Meets Invariance: Provably Efficient Offline Mean-Field Multi-Agent RL"

Sample Code for "Pessimism Meets Invariance: Provably Efficient Offline Mean-Field Multi-Agent RL" This is the official codebase for Pessimism Meets I

The official implementation of EIGNN: Efficient Infinite-Depth Graph Neural Networks (NeurIPS 2021)

EIGNN: Efficient Infinite-Depth Graph Neural Networks The official implementation of EIGNN: Efficient Infinite-Depth Graph Neural Networks (NeurIPS 20

A library for low-memory inferencing in PyTorch.

Pylomin Pylomin (PYtorch LOw-Memory INference) is a library for low-memory inferencing in PyTorch. Installation ... Usage For example, the following c

A simple, lightweight Discord bot running with only 512 MB memory on Heroku

Haruka This used to be a music bot, but people keep using it for NSFW content. Can't everyone be less horny? Bot commands See the built-in help comman

Memory tests solver with using OpenCV

Human Benchmark project This project is OpenCV based programs which are puzzle solvers for 7 different games for https://humanbenchmark.com/. made as

Long Expressive Memory (LEM)

Long Expressive Memory for Sequence Modeling This repository contains the implementation to reproduce the numerical experiments of the paper Long Expr

Prevent `CUDA error: out of memory` in just 1 line of code.

🐨 Koila Koila solves CUDA error: out of memory error painlessly. Fix it with just one line of code, and forget it. 🚀 Features 🙅 Prevents CUDA error

![[IEEE Transactions on Computational Imaging] Self-Gated Memory Recurrent Network for Efficient Scalable HDR Deghosting](https://github.com/Susmit-A/HDRRNN/raw/master/github_images/overview.png)

[IEEE Transactions on Computational Imaging] Self-Gated Memory Recurrent Network for Efficient Scalable HDR Deghosting

Few-shot Deep HDR Deghosting This repository contains code and pretrained models for our paper: Self-Gated Memory Recurrent Network for Efficient Scal

This repository contains the source code of our work on designing efficient CNNs for computer vision

Efficient networks for Computer Vision This repo contains source code of our work on designing efficient networks for different computer vision tasks:

Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust.

Subspace Adversarial Training Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust. However,

Our implementation used for the MICCAI 2021 FLARE Challenge titled 'Efficient Multi-Organ Segmentation Using SpatialConfiguartion-Net with Low GPU Memory Requirements'.

Efficient Multi-Organ Segmentation Using SpatialConfiguartion-Net with Low GPU Memory Requirements Our implementation used for the MICCAI 2021 FLARE C

Image Captioning using CNN ,LSTM and Attention

Image Captioning using CNN ,LSTM and Attention This is a deeplearning model which tries to summarize an image into a text . Installation Install this

An optimized prompt tuning strategy comparable to fine-tuning across model scales and tasks.

P-tuning v2 P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks An optimized prompt tuning strategy achievi

BinTuner is a cost-efficient auto-tuning framework, which can deliver a near-optimal binary code that reveals much more differences than -Ox settings.

BinTuner is a cost-efficient auto-tuning framework, which can deliver a near-optimal binary code that reveals much more differences than -Ox settings. it also can assist the binary code analysis research in generating more diversified datasets for training and testing. The BinTuner framework is based on OpenTuner, thanks to all contributors for their contributions.

SuMa++: Efficient LiDAR-based Semantic SLAM (Chen et al IROS 2019)

SuMa++: Efficient LiDAR-based Semantic SLAM This repository contains the implementation of SuMa++, which generates semantic maps only using three-dime

A unofficial pytorch implementation of PAN(PSENet2): Efficient and Accurate Arbitrary-Shaped Text Detection with Pixel Aggregation Network

Efficient and Accurate Arbitrary-Shaped Text Detection with Pixel Aggregation Network Requirements pytorch 1.1+ torchvision 0.3+ pyclipper opencv3 gcc

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Paper: Cross-View Kernel Similarity Metric Learning Using Pairwise Constraints for Person Re-identification

Cross-View Kernel Similarity Metric Learning Using Pairwise Constraints for Person Re-identification T M Feroz Ali, Subhasis Chaudhuri, ICVGIP-20-21

Turdshovel is an interactive CLI tool that allows users to dump objects from .NET memory dumps

Turdshovel Description Turdshovel is an interactive CLI tool that allows users to dump objects from .NET memory dumps without having to fully understa

Official repository for Natural Image Matting via Guided Contextual Attention

GCA-Matting: Natural Image Matting via Guided Contextual Attention The source codes and models of Natural Image Matting via Guided Contextual Attentio

GNNAdvisor: An Efficient Runtime System for GNN Acceleration on GPUs

GNNAdvisor: An Efficient Runtime System for GNN Acceleration on GPUs [Paper, Slides, Video Talk] at USENIX OSDI'21 @inproceedings{GNNAdvisor, title=

Prototypical Cross-Attention Networks for Multiple Object Tracking and Segmentation, NeurIPS 2021 Spotlight

PCAN for Multiple Object Tracking and Segmentation This is the offical implementation of paper PCAN for MOTS. We also present a trailer that consists

Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2

CoaDTI Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2 Abstract Environment The test was conducted i

A simple and efficient tool to parallelize Pandas operations on all available CPUs

Pandaral·lel Without parallelization With parallelization Installation $ pip install pandarallel [--upgrade] [--user] Requirements On Windows, Pandara

Revisiting Video Saliency: A Large-scale Benchmark and a New Model (CVPR18, PAMI19)

DHF1K =========================================================================== Wenguan Wang, J. Shen, M.-M Cheng and A. Borji, Revisiting Video Sal

Code for paper " AdderNet: Do We Really Need Multiplications in Deep Learning?"

AdderNet: Do We Really Need Multiplications in Deep Learning? This code is a demo of CVPR 2020 paper AdderNet: Do We Really Need Multiplications in De

Efficient semidefinite bounds for multi-label discrete graphical models.

Low rank solvers #################################### benchmark/ : folder with the random instances used in the paper. ############################

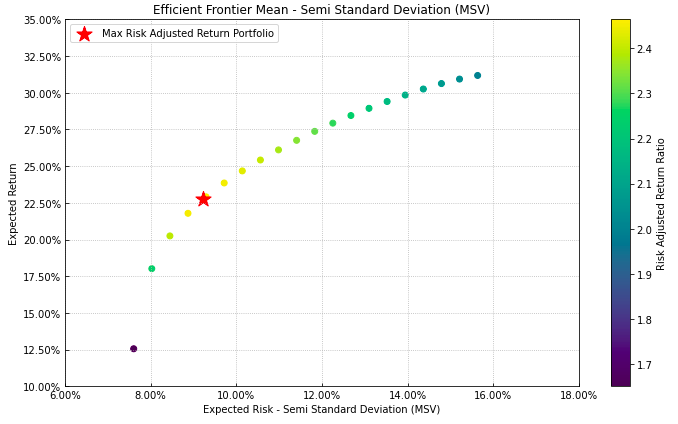

Portfolio Optimization and Quantitative Strategic Asset Allocation in Python

Riskfolio-Lib Quantitative Strategic Asset Allocation, Easy for Everyone. Description Riskfolio-Lib is a library for making quantitative strategic ass

Code to reprudece NeurIPS paper: Accelerated Sparse Neural Training: A Provable and Efficient Method to Find N:M Transposable Masks

Accelerated Sparse Neural Training: A Provable and Efficient Method to FindN:M Transposable Masks Recently, researchers proposed pruning deep neural n

An easy to use, user-friendly and efficient code for extracting OpenAI CLIP (Global/Grid) features from image and text respectively.

Extracting OpenAI CLIP (Global/Grid) Features from Image and Text This repo aims at providing an easy to use and efficient code for extracting image &

Deep Reinforced Attention Regression for Partial Sketch Based Image Retrieval.

DARP-SBIR Intro This repository contains the source code implementation for ICDM submission paper Deep Reinforced Attention Regression for Partial Ske

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for Trans

The Most Efficient Temporal Difference Learning Framework for 2048

moporgic/TDL2048+ TDL2048+ is a highly optimized temporal difference (TD) learning framework for 2048. Features Many common methods related to 2048 ar

Efficient Speech Processing Tookit for Automatic Speaker Recognition

Sugar Efficient Speech Processing Tookit for Automatic Speaker Recognition | HuggingFace | What's New EfficientTDNN: Efficient Architecture Search for

Iterative Normalization: Beyond Standardization towards Efficient Whitening

IterNorm Code for reproducing the results in the following paper: Iterative Normalization: Beyond Standardization towards Efficient Whitening Lei Huan

A simple, fast, and efficient object detector without FPN

You Only Look One-level Feature (YOLOF), CVPR2021 A simple, fast, and efficient object detector without FPN. This repo provides an implementation for

Complex heatmaps are efficient to visualize associations between different sources of data sets and reveal potential patterns.

Make Complex Heatmaps Complex heatmaps are efficient to visualize associations between different sources of data sets and reveal potential patterns. H

A fast, efficient universal vector embedding utility package.

Magnitude: a fast, simple vector embedding utility library A feature-packed Python package and vector storage file format for utilizing vector embeddi

A library for efficient similarity search and clustering of dense vectors.

Faiss Faiss is a library for efficient similarity search and clustering of dense vectors. It contains algorithms that search in sets of vectors of any

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

Mesa: A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for

Quantization library for PyTorch. Support low-precision and mixed-precision quantization, with hardware implementation through TVM.

HAWQ: Hessian AWare Quantization HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform

This repo contains implementation of different architectures for emotion recognition in conversations.

Emotion Recognition in Conversations Updates 🔥 🔥 🔥 Date Announcements 03/08/2021 🎆 🎆 We have released a new dataset M2H2: A Multimodal Multiparty

Codebase for "ProtoAttend: Attention-Based Prototypical Learning."

Codebase for "ProtoAttend: Attention-Based Prototypical Learning." Authors: Sercan O. Arik and Tomas Pfister Paper: Sercan O. Arik and Tomas Pfister,