325 Repositories

Python PSPNet-knowledge-distillation Libraries

PyTorch implementation of "Dataset Knowledge Transfer for Class-Incremental Learning Without Memory" (WACV2022)

Dataset Knowledge Transfer for Class-Incremental Learning Without Memory [Paper] [Slides] Summary Introduction Installation Reproducing results Citati

Temporal Knowledge Graph Reasoning Triggered by Memories

MTDM Temporal Knowledge Graph Reasoning Triggered by Memories To alleviate the time dependence, we propose a memory-triggered decision-making (MTDM) n

Reinforcement Knowledge Graph Reasoning for Explainable Recommendation

Reinforcement Knowledge Graph Reasoning for Explainable Recommendation This repository contains the source code of the SIGIR 2019 paper "Reinforcement

Jointly Learning Explainable Rules for Recommendation with Knowledge Graph

Jointly Learning Explainable Rules for Recommendation with Knowledge Graph

Incorporating User Micro-behaviors and Item Knowledge 59 60 3 into Multi-task Learning for Session-based Recommendation

MKM-SR Incorporating User Micro-behaviors and Item Knowledge into Multi-task Learning for Session-based Recommendation Paper data and code This is the

Knowledge-aware Coupled Graph Neural Network for Social Recommendation

KCGN AAAI-2021 《Knowledge-aware Coupled Graph Neural Network for Social Recommendation》 Environments python 3.8 pytorch-1.6 DGL 0.5.3 (https://github.

This folder contains the implementation of the multi-relational attribute propagation algorithm.

MrAP This folder contains the implementation of the multi-relational attribute propagation algorithm. It requires the package pytorch-scatter. Please

A Python library for adversarial machine learning focusing on benchmarking adversarial robustness.

ARES This repository contains the code for ARES (Adversarial Robustness Evaluation for Safety), a Python library for adversarial machine learning rese

novel deep learning research works with PaddlePaddle

Research 发布基于飞桨的前沿研究工作,包括CV、NLP、KG、STDM等领域的顶会论文和比赛冠军模型。 目录 计算机视觉(Computer Vision) 自然语言处理(Natrual Language Processing) 知识图谱(Knowledge Graph) 时空数据挖掘(Spa

Asymmetric metric learning for knowledge transfer

Asymmetric metric learning This is the official code that enables the reproduction of the results from our paper: Asymmetric metric learning for knowl

Code for the ICME 2021 paper "Exploring Driving-Aware Salient Object Detection via Knowledge Transfer"

TSOD Code for the ICME 2021 paper "Exploring Driving-Aware Salient Object Detection via Knowledge Transfer" Usage For training, open train_test, run p

Source Code for our paper: Understand me, if you refer to Aspect Knowledge: Knowledge-aware Gated Recurrent Memory Network

KaGRMN-DSG_ABSA This repository contains the PyTorch source Code for our paper: Understand me, if you refer to Aspect Knowledge: Knowledge-aware Gated

KUIZ is a web application quiz where you can create/take a quiz for learning and sharing knowledge from various subjects, questions and answers.

KUIZ KUIZ is a web application quiz where you can create/take a quiz for learning and sharing knowledge from various subjects, questions and answers.

The official implementation of CVPR 2021 Paper: Improving Weakly Supervised Visual Grounding by Contrastive Knowledge Distillation.

Improving Weakly Supervised Visual Grounding by Contrastive Knowledge Distillation This repository is the official implementation of CVPR 2021 paper:

novel deep learning research works with PaddlePaddle

Research 发布基于飞桨的前沿研究工作,包括CV、NLP、KG、STDM等领域的顶会论文和比赛冠军模型。 目录 计算机视觉(Computer Vision) 自然语言处理(Natrual Language Processing) 知识图谱(Knowledge Graph) 时空数据挖掘(Spa

A PoC Corporation Relationship Knowledge Graph System on top of Nebula Graph.

Corp-Rel is a PoC of Corpartion Relationship Knowledge Graph System. It's built on top of the Open Source Graph Database: Nebula Graph with a dataset

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

This is the official pytorch implementation of Student Helping Teacher: Teacher Evolution via Self-Knowledge Distillation(TESKD)

Student Helping Teacher: Teacher Evolution via Self-Knowledge Distillation (TESKD) By Zheng Li[1,4], Xiang Li[2], Lingfeng Yang[2,4], Jian Yang[2], Zh

RNG-KBQA: Generation Augmented Iterative Ranking for Knowledge Base Question Answering

RNG-KBQA: Generation Augmented Iterative Ranking for Knowledge Base Question Answering Authors: Xi Ye, Semih Yavuz, Kazuma Hashimoto, Yingbo Zhou and

Large-scale open domain KNOwledge grounded conVERsation system based on PaddlePaddle

Knover Knover is a toolkit for knowledge grounded dialogue generation based on PaddlePaddle. Knover allows researchers and developers to carry out eff

End-to-end image segmentation kit based on PaddlePaddle.

English | 简体中文 PaddleSeg PaddleSeg has released the new version including the following features: Our team won the AutoNUE@CVPR 2021 challenge, where

This is the source code for: Context-aware Entity Typing in Knowledge Graphs.

This is the source code for: Context-aware Entity Typing in Knowledge Graphs.

Distiller is an open-source Python package for neural network compression research.

Wiki and tutorials | Documentation | Getting Started | Algorithms | Design | FAQ Distiller is an open-source Python package for neural network compres

Convolutional 2D Knowledge Graph Embeddings resources

ConvE Convolutional 2D Knowledge Graph Embeddings resources. Paper: Convolutional 2D Knowledge Graph Embeddings Used in the paper, but do not use thes

Improving Convolutional Networks via Attention Transfer (ICLR 2017)

Attention Transfer PyTorch code for "Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Tran

EMNLP'2021: Can Language Models be Biomedical Knowledge Bases?

BioLAMA BioLAMA is biomedical factual knowledge triples for probing biomedical LMs. The triples are collected and pre-processed from three sources: CT

Does Pretraining for Summarization Reuqire Knowledge Transfer?

Pretraining summarization models using a corpus of nonsense

Beta Distribution Guided Aspect-aware Graph for Aspect Category Sentiment Analysis with Affective Knowledge. Proceedings of EMNLP 2021

AAGCN-ACSA EMNLP 2021 Introduction This repository was used in our paper: Beta Distribution Guided Aspect-aware Graph for Aspect Category Sentiment An

Obsidian tools - a Python package for analysing an Obsidian.md vault

obsidiantools is a Python package for getting structured metadata about your Obsidian.md notes and analysing your vault.

Code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

This repository contains the code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

ROSITA: Enhancing Vision-and-Language Semantic Alignments via Cross- and Intra-modal Knowledge Integration

ROSITA News & Updates (24/08/2021) Release the demo to perform fine-grained semantic alignments using the pretrained ROSITA model. (15/08/2021) Releas

This repository contains the PyTorch implementation of the paper STaCK: Sentence Ordering with Temporal Commonsense Knowledge appearing at EMNLP 2021.

STaCK: Sentence Ordering with Temporal Commonsense Knowledge This repository contains the pytorch implementation of the paper STaCK: Sentence Ordering

Variational Attention: Propagating Domain-Specific Knowledge for Multi-Domain Learning in Crowd Counting (ICCV, 2021)

DKPNet ICCV 2021 Variational Attention: Propagating Domain-Specific Knowledge for Multi-Domain Learning in Crowd Counting Baseline of DKPNet is availa

A fluid medium for storing, relating, and surfacing thoughts.

Conceptarium A fluid medium for storing, relating, and surfacing thoughts. Read more... Instructions The conceptarium takes up about 1GB RAM when runn

yolov5目标检测模型的知识蒸馏(基于响应的蒸馏)

代码地址: https://github.com/Sharpiless/yolov5-knowledge-distillation 教师模型: python train.py --weights weights/yolov5m.pt \ --cfg models/yolov5m.ya

✨Rubrix is a production-ready Python framework for exploring, annotating, and managing data in NLP projects.

✨A Python framework to explore, label, and monitor data for NLP projects

Implementation for the EMNLP 2021 paper "Interactive Machine Comprehension with Dynamic Knowledge Graphs".

Interactive Machine Comprehension with Dynamic Knowledge Graphs Implementation for the EMNLP 2021 paper. Dependencies apt-get -y update apt-get instal

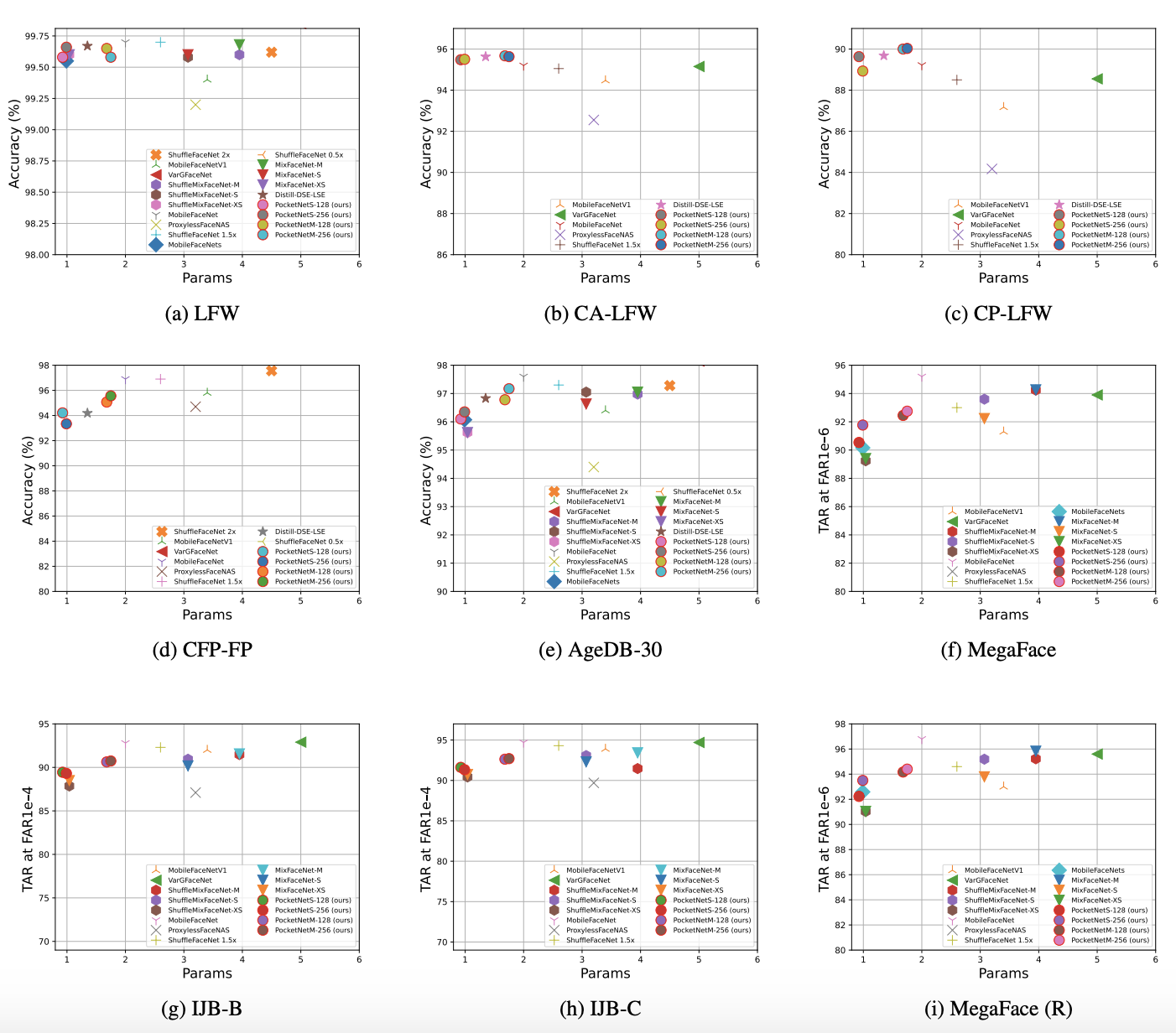

PocketNet: Extreme Lightweight Face Recognition Network using Neural Architecture Search and Multi-Step Knowledge Distillation

PocketNet This is the official repository of the paper: PocketNet: Extreme Lightweight Face Recognition Network using Neural Architecture Search and M

ZSL-KG is a general-purpose zero-shot learning framework with a novel transformer graph convolutional network (TrGCN) to learn class representation from common sense knowledge graphs.

ZSL-KG is a general-purpose zero-shot learning framework with a novel transformer graph convolutional network (TrGCN) to learn class representa

This repo uses a combination of logits and feature distillation method to teach the PSPNet model of ResNet18 backbone with the PSPNet model of ResNet50 backbone. All the models are trained and tested on the PASCAL-VOC2012 dataset.

PSPNet-logits and feature-distillation Introduction This repository is based on PSPNet and modified from semseg and Pixelwise_Knowledge_Distillation_P

🌈 PyTorch Implementation for EMNLP'21 Findings "Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer"

SGLKT-VisDial Pytorch Implementation for the paper: Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer Gi-Cheon Kang, Junseok P

sssegmentation is a general framework for our research on strongly supervised semantic segmentation.

sssegmentation is a general framework for our research on strongly supervised semantic segmentation.

TorchDistiller - a collection of the open source pytorch code for knowledge distillation, especially for the perception tasks, including semantic segmentation, depth estimation, object detection and instance segmentation.

This project is a collection of the open source pytorch code for knowledge distillation, especially for the perception tasks, including semantic segmentation, depth estimation, object detection and instance segmentation.

DomainWordsDict, Chinese words dict that contains more than 68 domains, which can be used as text classification、knowledge enhance task

DomainWordsDict, Chinese words dict that contains more than 68 domains, which can be used as text classification、knowledge enhance task。涵盖68个领域、共计916万词的专业词典知识库,可用于文本分类、知识增强、领域词汇库扩充等自然语言处理应用。

PyTorch implementation of PSPNet segmentation network

pspnet-pytorch PyTorch implementation of PSPNet segmentation network Original paper Pyramid Scene Parsing Network Details This is a slightly different

Online Multi-Granularity Distillation for GAN Compression (ICCV2021)

Online Multi-Granularity Distillation for GAN Compression (ICCV2021) This repository contains the pytorch codes and trained models described in the IC

Extract knowledge from raw text

Extract knowledge from raw text This repository is a nearly copy-paste of "From Text to Knowledge: The Information Extraction Pipeline" with some cosm

Collective Multi-type Entity Alignment Between Knowledge Graphs (WWW'20)

CG-MuAlign A reference implementation for "Collective Multi-type Entity Alignment Between Knowledge Graphs", published in WWW 2020. If you find our pa

open-information-extraction-system, build open-knowledge-graph(SPO, subject-predicate-object) by pyltp(version==3.4.0)

中文开放信息抽取系统, open-information-extraction-system, build open-knowledge-graph(SPO, subject-predicate-object) by pyltp(version==3.4.0)

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

Versatile Generative Language Model

Versatile Generative Language Model This is the implementation of the paper: Exploring Versatile Generative Language Model Via Parameter-Efficient Tra

TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A good teacher is patient and consistent by Beyer et al.

FunMatch-Distillation TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A g

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

Code for ICCV 2021 paper "Distilling Holistic Knowledge with Graph Neural Networks"

HKD Code for ICCV 2021 paper "Distilling Holistic Knowledge with Graph Neural Networks" cifia-100 result The implementation of compared methods are ba

Official Pytorch implementation of ICLR 2018 paper Deep Learning for Physical Processes: Integrating Prior Scientific Knowledge.

Deep Learning for Physical Processes: Integrating Prior Scientific Knowledge: Official Pytorch implementation of ICLR 2018 paper Deep Learning for Phy

Convolutional 2D Knowledge Graph Embeddings resources

ConvE Convolutional 2D Knowledge Graph Embeddings resources. Paper: Convolutional 2D Knowledge Graph Embeddings Used in the paper, but do not use thes

![[IJCAI-2021] A benchmark of data-free knowledge distillation from paper](https://github.com/zju-vipa/DataFree/raw/main/assets/cmi.png)

[IJCAI-2021] A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation"

DataFree A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation" Authors: Gongfa

Rubrix is a free and open-source tool for exploring and iterating on data for artificial intelligence projects.

Open-source tool for exploring, labeling, and monitoring data for AI projects

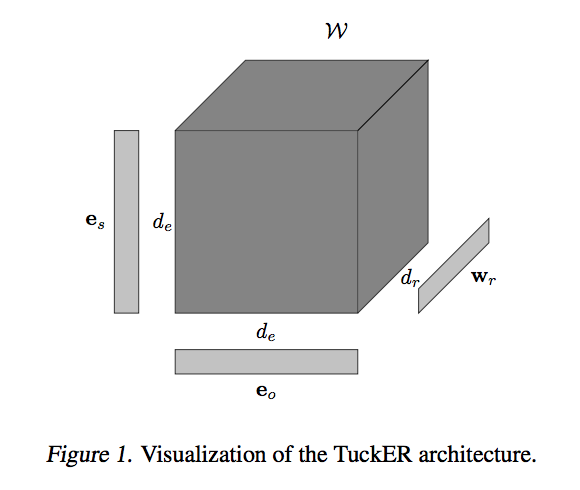

TuckER: Tensor Factorization for Knowledge Graph Completion

TuckER: Tensor Factorization for Knowledge Graph Completion This codebase contains PyTorch implementation of the paper: TuckER: Tensor Factorization f

Align before Fuse: Vision and Language Representation Learning with Momentum Distillation

This is the official PyTorch implementation of the ALBEF paper [Blog]. This repository supports pre-training on custom datasets, as well as finetuning on VQA, SNLI-VE, NLVR2, Image-Text Retrieval on MSCOCO and Flickr30k, and visual grounding on RefCOCO+. Pre-trained and finetuned checkpoints are released.

S2-BNN: Bridging the Gap Between Self-Supervised Real and 1-bit Neural Networks via Guided Distribution Calibration (CVPR 2021)

S2-BNN (Self-supervised Binary Neural Networks Using Distillation Loss) This is the official pytorch implementation of our paper: "S2-BNN: Bridging th

Code and dataset for ACL2018 paper "Exploiting Document Knowledge for Aspect-level Sentiment Classification"

Aspect-level Sentiment Classification Code and dataset for ACL2018 [paper] ‘‘Exploiting Document Knowledge for Aspect-level Sentiment Classification’’

🤖 A Python library for learning and evaluating knowledge graph embeddings

PyKEEN PyKEEN (Python KnowlEdge EmbeddiNgs) is a Python package designed to train and evaluate knowledge graph embedding models (incorporating multi-m

noisy labels; missing labels; semi-supervised learning; entropy; uncertainty; robustness and generalisation.

ProSelfLC: CVPR 2021 ProSelfLC: Progressive Self Label Correction for Training Robust Deep Neural Networks For any specific discussion or potential fu

Deep Learning and Logical Reasoning from Data and Knowledge

Logic Tensor Networks (LTN) Logic Tensor Network (LTN) is a neurosymbolic framework that supports querying, learning and reasoning with both rich data

Pytorch version of VidLanKD: Improving Language Understanding viaVideo-Distilled Knowledge Transfer

VidLanKD Implementation of VidLanKD: Improving Language Understanding via Video-Distilled Knowledge Transfer by Zineng Tang, Jaemin Cho, Hao Tan, Mohi

Source code for the paper "PLOME: Pre-training with Misspelled Knowledge for Chinese Spelling Correction" in ACL2021

PLOME:Pre-training with Misspelled Knowledge for Chinese Spelling Correction (ACL2021) This repository provides the code and data of the work in ACL20

Code implementation of Data Efficient Stagewise Knowledge Distillation paper.

Data Efficient Stagewise Knowledge Distillation Table of Contents Data Efficient Stagewise Knowledge Distillation Table of Contents Requirements Image

ACL'2021: Learning Dense Representations of Phrases at Scale

DensePhrases DensePhrases is an extractive phrase search tool based on your natural language inputs. From 5 million Wikipedia articles, it can search

Source code for paper: Knowledge Inheritance for Pre-trained Language Models

Knowledge-Inheritance Source code paper: Knowledge Inheritance for Pre-trained Language Models (preprint). The trained model parameters (in Fairseq fo

Official PyTorch Implementation of Embedding Transfer with Label Relaxation for Improved Metric Learning, CVPR 2021

Embedding Transfer with Label Relaxation for Improved Metric Learning Official PyTorch implementation of CVPR 2021 paper Embedding Transfer with Label

Daily knowledge pills to get better in Python.

Python daily pills Daily knowledge pills to get better Python code. Why Does your Python code suffers of any of this symptoms? Incorrect Indentation I

Code for the paper "Query Embedding on Hyper-relational Knowledge Graphs"

Query Embedding on Hyper-Relational Knowledge Graphs This repository contains the code used for the experiments in the paper Query Embedding on Hyper-

Compositional and Parameter-Efficient Representations for Large Knowledge Graphs

NodePiece - Compositional and Parameter-Efficient Representations for Large Knowledge Graphs NodePiece is a "tokenizer" for reducing entity vocabulary

Extracting Summary Knowledge Graphs from Long Documents

GraphSum This repo contains the data and code for the G2G model in the paper: Extracting Summary Knowledge Graphs from Long Documents. The other basel

UmlsBERT: Clinical Domain Knowledge Augmentation of Contextual Embeddings Using the Unified Medical Language System Metathesaurus

UmlsBERT: Clinical Domain Knowledge Augmentation of Contextual Embeddings Using the Unified Medical Language System Metathesaurus General info This is

Using pretrained language models for biomedical knowledge graph completion.

LMs for biomedical KG completion This repository contains code to run the experiments described in: Scientific Language Models for Biomedical Knowledg

DSTC10 Track 2 - Knowledge-grounded Task-oriented Dialogue Modeling on Spoken Conversations

DSTC10 Track 2 - Knowledge-grounded Task-oriented Dialogue Modeling on Spoken Conversations This repository contains the data, scripts and baseline co

2021海华AI挑战赛·中文阅读理解·技术组·第三名

文字是人类用以记录和表达的最基本工具,也是信息传播的重要媒介。透过文字与符号,我们可以追寻人类文明的起源,可以传播知识与经验,读懂文字是认识与了解的第一步。对于人工智能而言,它的核心问题之一就是认知,而认知的核心则是语义理解。

MERLOT: Multimodal Neural Script Knowledge Models

merlot MERLOT: Multimodal Neural Script Knowledge Models MERLOT is a model for learning what we are calling "neural script knowledge" -- representatio

The implementation of PEMP in paper "Prior-Enhanced Few-Shot Segmentation with Meta-Prototypes"

Prior-Enhanced network with Meta-Prototypes (PEMP) This is the PyTorch implementation of PEMP. Overview of PEMP Meta-Prototypes & Adaptive Prototypes

Paddle implementation for "Highly Efficient Knowledge Graph Embedding Learning with Closed-Form Orthogonal Procrustes Analysis" (NAACL 2021)

ProcrustEs-KGE Paddle implementation for Highly Efficient Knowledge Graph Embedding Learning with Orthogonal Procrustes Analysis 🙈 A more detailed re

Code for CVPR2021 "Visualizing Adapted Knowledge in Domain Transfer". Visualization for domain adaptation. #explainable-ai

Visualizing Adapted Knowledge in Domain Transfer @inproceedings{hou2021visualizing, title={Visualizing Adapted Knowledge in Domain Transfer}, auth

This is the repo for the paper `SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization'. (published in Bioinformatics'21)

SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization This is the code for our paper ``SumGNN: Multi-typed Drug

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep

Multi-Scale Aligned Distillation for Low-Resolution Detection (CVPR2021)

MSAD Multi-Scale Aligned Distillation for Low-Resolution Detection Lu Qi*, Jason Kuen*, Jiuxiang Gu, Zhe Lin, Yi Wang, Yukang Chen, Yanwei Li, Jiaya J

Official implementation for (Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching, AAAI-2021)

Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching Official pytorch implementation of "Show, Attend and Distill: Kn

Distilling Knowledge via Knowledge Review, CVPR 2021

ReviewKD Distilling Knowledge via Knowledge Review Pengguang Chen, Shu Liu, Hengshuang Zhao, Jiaya Jia This project provides an implementation for the

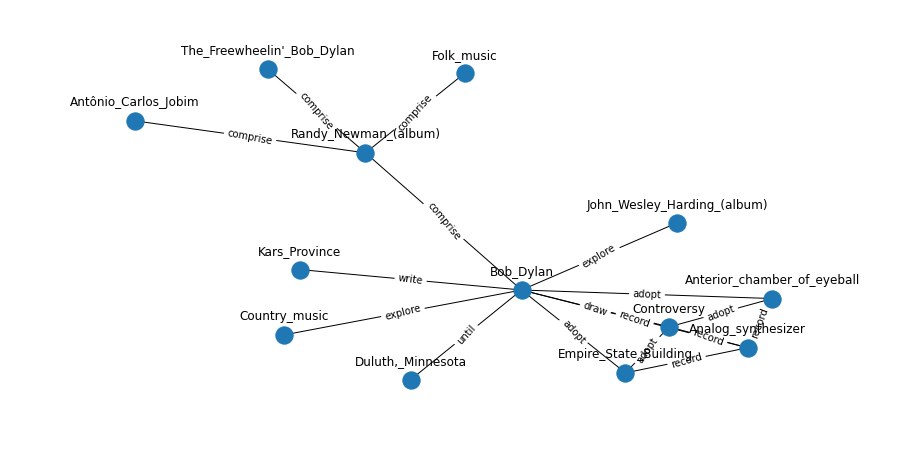

Language models are open knowledge graphs ( non official implementation )

language-models-are-knowledge-graphs-pytorch Language models are open knowledge graphs ( work in progress ) A non official reimplementation of Languag

Code for paper PairRE: Knowledge Graph Embeddings via Paired Relation Vectors.

PairRE Code for paper PairRE: Knowledge Graph Embeddings via Paired Relation Vectors. This implementation of PairRE for Open Graph Benchmak datasets (

Continuous Query Decomposition for Complex Query Answering in Incomplete Knowledge Graphs

Continuous Query Decomposition This repository contains the official implementation for our ICLR 2021 (Oral) paper, Complex Query Answering with Neura

RL and distillation in CARLA using a factorized world model

World on Rails Learning to drive from a world on rails Dian Chen, Vladlen Koltun, Philipp Krähenbühl, arXiv techical report (arXiv 2105.00636) This re

Codes for our paper "SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge" (EMNLP 2020)

SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge Introduction SentiLARE is a sentiment-aware pre-trained language

KE-Dialogue: Injecting knowledge graph into a fully end-to-end dialogue system.

Learning Knowledge Bases with Parameters for Task-Oriented Dialogue Systems This is the implementation of the paper: Learning Knowledge Bases with Par

PyKale is a PyTorch library for multimodal learning and transfer learning as well as deep learning and dimensionality reduction on graphs, images, texts, and videos

PyKale is a PyTorch library for multimodal learning and transfer learning as well as deep learning and dimensionality reduction on graphs, images, texts, and videos. By adopting a unified pipeline-based API design, PyKale enforces standardization and minimalism, via reusing existing resources, reducing repetitions and redundancy, and recycling learning models across areas.

ATOMIC 2020: On Symbolic and Neural Commonsense Knowledge Graphs

(Comet-) ATOMIC 2020: On Symbolic and Neural Commonsense Knowledge Graphs Paper Jena D. Hwang, Chandra Bhagavatula, Ronan Le Bras, Jeff Da, Keisuke Sa

SpikeX - SpaCy Pipes for Knowledge Extraction

SpikeX is a collection of pipes ready to be plugged in a spaCy pipeline. It aims to help in building knowledge extraction tools with almost-zero effort.

Y. Zhang, Q. Yao, W. Dai, L. Chen. AutoSF: Searching Scoring Functions for Knowledge Graph Embedding. IEEE International Conference on Data Engineering (ICDE). 2020

AutoSF The code for our paper "AutoSF: Searching Scoring Functions for Knowledge Graph Embedding" and this paper has been accepted by ICDE2020. News:

Knowledge Graph,Question Answering System,基于知识图谱和向量检索的医疗诊断问答系统

Knowledge Graph,Question Answering System,基于知识图谱和向量检索的医疗诊断问答系统

AMTML-KD: Adaptive Multi-teacher Multi-level Knowledge Distillation

AMTML-KD: Adaptive Multi-teacher Multi-level Knowledge Distillation