1357 Repositories

Python self-critical-sequence-training Libraries

BYOL for Audio: Self-Supervised Learning for General-Purpose Audio Representation

BYOL for Audio: Self-Supervised Learning for General-Purpose Audio Representation This is a demo implementation of BYOL for Audio (BYOL-A), a self-sup

A Practical Debugging Tool for Training Deep Neural Networks

Cockpit is a visual and statistical debugger specifically designed for deep learning!

Just Go with the Flow: Self-Supervised Scene Flow Estimation

Just Go with the Flow: Self-Supervised Scene Flow Estimation Code release for the paper Just Go with the Flow: Self-Supervised Scene Flow Estimation,

PlenOctrees: NeRF-SH Training & Conversion

PlenOctrees Official Repo: NeRF-SH training and conversion This repository contains code to train NeRF-SH and to extract the PlenOctree, constituting

Pytorch implementation of "Training a 85.4% Top-1 Accuracy Vision Transformer with 56M Parameters on ImageNet"

Token Labeling: Training an 85.4% Top-1 Accuracy Vision Transformer with 56M Parameters on ImageNet (arxiv) This is a Pytorch implementation of our te

SiT: Self-supervised vIsion Transformer

This repository contains the official PyTorch self-supervised pretraining, finetuning, and evaluation codes for SiT (Self-supervised image Transformer).

(CVPR 2021) ST3D: Self-training for Unsupervised Domain Adaptation on 3D Object Detection

ST3D Code release for the paper ST3D: Self-training for Unsupervised Domain Adaptation on 3D Object Detection, CVPR 2021 Authors: Jihan Yang*, Shaoshu

Coreference resolution for English, German and Polish, optimised for limited training data and easily extensible for further languages

Coreferee Author: Richard Paul Hudson, msg systems ag 1. Introduction 1.1 The basic idea 1.2 Getting started 1.2.1 English 1.2.2 German 1.2.3 Polish 1

Training code of Spatial Time Memory Network. Semi-supervised video object segmentation.

Training-code-of-STM This repository fully reproduces Space-Time Memory Networks Performance on Davis17 val set&Weights backbone training stage traini

Focus on Algorithm Design, Not on Data Wrangling

The dataTap Python library is the primary interface for using dataTap's rich data management tools. Create datasets, stream annotations, and analyze model performance all with one library.

Self-Supervised Learning for Domain Adaptation on Point-Clouds

Self-Supervised Learning for Domain Adaptation on Point-Clouds Introduction Self-supervised learning (SSL) allows to learn useful representations from

skweak: A software toolkit for weak supervision applied to NLP tasks

Labelled data remains a scarce resource in many practical NLP scenarios. This is especially the case when working with resource-poor languages (or text domains), or when using task-specific labels without pre-existing datasets. The only available option is often to collect and annotate texts by hand, which is expensive and time-consuming.

Source code for AAAI20 "Generating Persona Consistent Dialogues by Exploiting Natural Language Inference".

Generating Persona Consistent Dialogues by Exploiting Natural Language Inference Source code for RCDG model in AAAI20 Generating Persona Consistent Di

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

![[CVPRW 21]](https://github.com/VITA-Group/BNN_NoBN/raw/main/Figs/res.png)

[CVPRW 21] "BNN - BN = ? Training Binary Neural Networks without Batch Normalization", Tianlong Chen, Zhenyu Zhang, Xu Ouyang, Zechun Liu, Zhiqiang Shen, Zhangyang Wang

BNN - BN = ? Training Binary Neural Networks without Batch Normalization Codes for this paper BNN - BN = ? Training Binary Neural Networks without Bat

This repository allows you to anonymize sensitive information in images/videos. The solution is fully compatible with the DL-based training/inference solutions that we already published/will publish for Object Detection and Semantic Segmentation.

BMW-Anonymization-Api Data privacy and individuals’ anonymity are and always have been a major concern for data-driven companies. Therefore, we design

Diffgram - Supervised Learning Data Platform

Data Annotation, Data Labeling, Annotation Tooling, Training Data for Machine Learning

Official code of our work, Unified Pre-training for Program Understanding and Generation [NAACL 2021].

PLBART Code pre-release of our work, Unified Pre-training for Program Understanding and Generation accepted at NAACL 2021. Note. A detailed documentat

Sandbox for training deep learning networks

Deep learning networks This repo is used to research convolutional networks primarily for computer vision tasks. For this purpose, the repo contains (

Provided is code that demonstrates the training and evaluation of the work presented in the paper: "On the Detection of Digital Face Manipulation" published in CVPR 2020.

FFD Source Code Provided is code that demonstrates the training and evaluation of the work presented in the paper: "On the Detection of Digital Face M

Code for the paper "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021)

MASTER-PyTorch PyTorch reimplementation of "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021). This projec

Based on the paper "Geometry-aware Instance-reweighted Adversarial Training" ICLR 2021 oral

Geometry-aware Instance-reweighted Adversarial Training This repository provides codes for Geometry-aware Instance-reweighted Adversarial Training (ht

Trading environnement for RL agents, backtesting and training.

TradzQAI Trading environnement for RL agents, backtesting and training. Live session with coinbasepro-python is finaly arrived ! Available sessions: L

Trading and Backtesting environment for training reinforcement learning agent or simple rule base algo.

TradingGym TradingGym is a toolkit for training and backtesting the reinforcement learning algorithms. This was inspired by OpenAI Gym and imitated th

Solving the Traveling Salesman Problem using Self-Organizing Maps

Solving the Traveling Salesman Problem using Self-Organizing Maps This repository contains an implementation of a Self Organizing Map that can be used

CLASP - Contrastive Language-Aminoacid Sequence Pretraining

CLASP - Contrastive Language-Aminoacid Sequence Pretraining Repository for creating models pretrained on language and aminoacid sequences similar to C

Learning recognition/segmentation models without end-to-end training. 40%-60% less GPU memory footprint. Same training time. Better performance.

InfoPro-Pytorch The Information Propagation algorithm for training deep networks with local supervision. (ICLR 2021) Revisiting Locally Supervised Lea

Code for pre-training CharacterBERT models (as well as BERT models).

Pre-training CharacterBERT (and BERT) This is a repository for pre-training BERT and CharacterBERT. DISCLAIMER: The code was largely adapted from an o

Official code for the CVPR 2021 paper "How Well Do Self-Supervised Models Transfer?"

How Well Do Self-Supervised Models Transfer? This repository hosts the code for the experiments in the CVPR 2021 paper How Well Do Self-Supervised Mod

The implementation of ICASSP 2020 paper "Pixel-level self-paced learning for super-resolution"

Pixel-level Self-Paced Learning for Super-Resolution This is an official implementaion of the paper Pixel-level Self-Paced Learning for Super-Resoluti

SSD: A Unified Framework for Self-Supervised Outlier Detection [ICLR 2021]

SSD: A Unified Framework for Self-Supervised Outlier Detection [ICLR 2021] Pdf: https://openreview.net/forum?id=v5gjXpmR8J Code for our ICLR 2021 pape

![[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers](https://github.com/fudan-zvg/SETR/raw/main/fig/image.png)

[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

Code for CVPR 2021 paper: Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning

Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning This is the PyTorch companion code for the paper: A

YunoHost is an operating system aiming to simplify as much as possible the administration of a server.

YunoHost is an operating system aiming to simplify as much as possible the administration of a server. This repository corresponds to the core code, written mostly in Python and Bash.

a Disqus alternative

Isso – a commenting server similar to Disqus Isso – Ich schrei sonst – is a lightweight commenting server written in Python and JavaScript. It aims to

Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation

SimplePose Code and pre-trained models for our paper, “Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation”, a

Minimal, self-hosted, 0-config alternative to ngrok. Caddy+OpenSSH+50 lines of Python.

If you have a webserver running on one computer (say your development laptop), and you want to expose it securely (ie HTTPS) via a public URL, SirTunnel allows you to easily do that.

List of ngrok alternatives and other ngrok-like tunneling software and services. Focus on self-hosting.

List of ngrok alternatives and other ngrok-like tunneling software and services. Focus on self-hosting.

Code for paper "A Critical Assessment of State-of-the-Art in Entity Alignment" (https://arxiv.org/abs/2010.16314)

A Critical Assessment of State-of-the-Art in Entity Alignment This repository contains the source code for the paper A Critical Assessment of State-of

Official implementation for (Refine Myself by Teaching Myself : Feature Refinement via Self-Knowledge Distillation, CVPR-2021)

FRSKD Official implementation for Refine Myself by Teaching Myself : Feature Refinement via Self-Knowledge Distillation (CVPR-2021) Requirements Pytho

Code for the paper: Adversarial Training Against Location-Optimized Adversarial Patches. ECCV-W 2020.

Adversarial Training Against Location-Optimized Adversarial Patches arXiv | Paper | Code | Video | Slides Code for the paper: Sukrut Rao, David Stutz,

![[ICLR 2021]](https://github.com/RICE-EIC/CPT/raw/master/imgs/cpt.png)

[ICLR 2021] "CPT: Efficient Deep Neural Network Training via Cyclic Precision" by Yonggan Fu, Han Guo, Meng Li, Xin Yang, Yining Ding, Vikas Chandra, Yingyan Lin

CPT: Efficient Deep Neural Network Training via Cyclic Precision Yonggan Fu, Han Guo, Meng Li, Xin Yang, Yining Ding, Vikas Chandra, Yingyan Lin Accep

High-level batteries-included neural network training library for Pytorch

Pywick High-Level Training framework for Pytorch Pywick is a high-level Pytorch training framework that aims to get you up and running quickly with st

higher is a pytorch library allowing users to obtain higher order gradients over losses spanning training loops rather than individual training steps.

higher is a library providing support for higher-order optimization, e.g. through unrolled first-order optimization loops, of "meta" aspects of these

Training RNNs as Fast as CNNs (https://arxiv.org/abs/1709.02755)

News SRU++, a new SRU variant, is released. [tech report] [blog] The experimental code and SRU++ implementation are available on the dev branch which

The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch.

News March 3: v0.9.97 has various bug fixes and improvements: Bug fixes for NTXentLoss Efficiency improvement for AccuracyCalculator, by using torch i

A GPU-accelerated library containing highly optimized building blocks and an execution engine for data processing to accelerate deep learning training and inference applications.

NVIDIA DALI The NVIDIA Data Loading Library (DALI) is a library for data loading and pre-processing to accelerate deep learning applications. It provi

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

Introduction This repository holds NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pytorch. Some of the code her

Massively parallel self-organizing maps: accelerate training on multicore CPUs, GPUs, and clusters

Somoclu Somoclu is a massively parallel implementation of self-organizing maps. It exploits multicore CPUs, it is able to rely on MPI for distributing

Decentralized deep learning in PyTorch. Built to train models on thousands of volunteers across the world.

Hivemind: decentralized deep learning in PyTorch Hivemind is a PyTorch library to train large neural networks across the Internet. Its intended usage

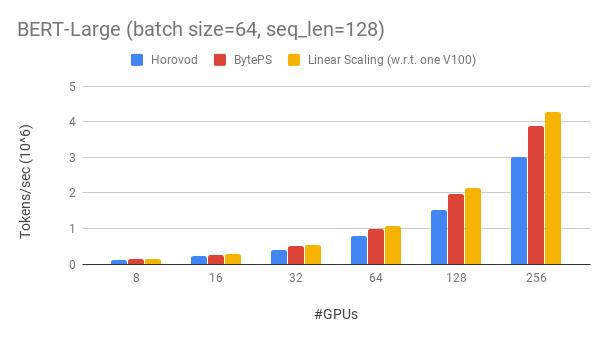

A high performance and generic framework for distributed DNN training

BytePS BytePS is a high performance and general distributed training framework. It supports TensorFlow, Keras, PyTorch, and MXNet, and can run on eith

PyTorch extensions for high performance and large scale training.

Description FairScale is a PyTorch extension library for high performance and large scale training on one or multiple machines/nodes. This library ext

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective. 10x Larger Models 10x Faster Trainin

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

An open source reinforcement learning framework for training, evaluating, and deploying robust trading agents.

TensorTrade: Trade Efficiently with Reinforcement Learning TensorTrade is still in Beta, meaning it should be used very cautiously if used in producti

git《Self-Attention Attribution: Interpreting Information Interactions Inside Transformer》(AAAI 2021) GitHub:

Self-Attention Attribution This repository contains the implementation for AAAI-2021 paper Self-Attention Attribution: Interpreting Information Intera

Code for CoMatch: Semi-supervised Learning with Contrastive Graph Regularization

CoMatch: Semi-supervised Learning with Contrastive Graph Regularization (Salesforce Research) This is a PyTorch implementation of the CoMatch paper [B

PyTorch implementation of "Contrast to Divide: self-supervised pre-training for learning with noisy labels"

Contrast to Divide: self-supervised pre-training for learning with noisy labels This is an official implementation of "Contrast to Divide: self-superv

![[arXiv] What-If Motion Prediction for Autonomous Driving ❓🚗💨](https://github.com/wqi/WIMP/raw/master/docs/img/demo.gif)

[arXiv] What-If Motion Prediction for Autonomous Driving ❓🚗💨

WIMP - What If Motion Predictor Reference PyTorch Implementation for What If Motion Prediction [PDF] [Dynamic Visualizations] Setup Requirements The W

BossNAS: Exploring Hybrid CNN-transformers with Block-wisely Self-supervised Neural Architecture Search

BossNAS This repository contains PyTorch evaluation code, retraining code and pretrained models of our paper: BossNAS: Exploring Hybrid CNN-transforme

An efficient and effective learning to rank algorithm by mining information across ranking candidates. This repository contains the tensorflow implementation of SERank model. The code is developed based on TF-Ranking.

SERank An efficient and effective learning to rank algorithm by mining information across ranking candidates. This repository contains the tensorflow

Optimising chemical reactions using machine learning

Summit Summit is a set of tools for optimising chemical processes. We’ve started by targeting reactions. What is Summit? Currently, reaction optimisat

Code for our paper at ECCV 2020: Post-Training Piecewise Linear Quantization for Deep Neural Networks

PWLQ Updates 2020/07/16 - We are working on getting permission from our institution to release our source code. We will release it once we are granted

Implementation of the 😇 Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones

HaloNet - Pytorch Implementation of the Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones. This re

Understanding and Improving Encoder Layer Fusion in Sequence-to-Sequence Learning (ICLR 2021)

Understanding and Improving Encoder Layer Fusion in Sequence-to-Sequence Learning (ICLR 2021) Citation Please cite as: @inproceedings{liu2020understan

Repository providing a wide range of self-supervised pretrained models for computer vision tasks.

Hierarchical Pretraining: Research Repository This is a research repository for reproducing the results from the project "Self-supervised pretraining

Convolutional Recurrent Neural Networks(CRNN) for Scene Text Recognition

CRNN_Tensorflow This is a TensorFlow implementation of a Deep Neural Network for scene text recognition. It is mainly based on the paper "An End-to-En

Generate text images for training deep learning ocr model

New version release:https://github.com/oh-my-ocr/text_renderer Text Renderer Generate text images for training deep learning OCR model (e.g. CRNN). Su

A synthetic data generator for text recognition

TextRecognitionDataGenerator A synthetic data generator for text recognition What is it for? Generating text image samples to train an OCR software. N

a Deep Learning Framework for Text

DeLFT DeLFT (Deep Learning Framework for Text) is a Keras and TensorFlow framework for text processing, focusing on sequence labelling (e.g. named ent

Awesome multilingual OCR toolkits based on PaddlePaddle (practical ultra lightweight OCR system, provide data annotation and synthesis tools, support training and deployment among server, mobile, embedded and IoT devices)

English | 简体中文 Introduction PaddleOCR aims to create multilingual, awesome, leading, and practical OCR tools that help users train better models and a

Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics

Dataset Cartography Code for the paper Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics at EMNLP 2020. This repository cont

Code for the paper "Training GANs with Stronger Augmentations via Contrastive Discriminator" (ICLR 2021)

Training GANs with Stronger Augmentations via Contrastive Discriminator (ICLR 2021) This repository contains the code for reproducing the paper: Train

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

This repository holds NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pytorch. Some of the code here will be included in upstream Pytorch eventually. The intention of Apex is to make up-to-date utilities available to users as quickly as possible.

Official code of our work, Unified Pre-training for Program Understanding and Generation [NAACL 2021].

PLBART Code pre-release of our work, Unified Pre-training for Program Understanding and Generation accepted at NAACL 2021. Note. A detailed documentat

Official implementation of Self-supervised Graph Attention Networks (SuperGAT), ICLR 2021.

SuperGAT Official implementation of Self-supervised Graph Attention Networks (SuperGAT). This model is presented at How to Find Your Friendly Neighbor

![[CVPR2021] The source code for our paper 《Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Learning》.](https://github.com/FingerRec/TBE/raw/main/figures/be_visualization.png)

[CVPR2021] The source code for our paper 《Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Learning》.

TBE The source code for our paper "Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Le

![[CVPR2021 Oral] UP-DETR: Unsupervised Pre-training for Object Detection with Transformers](https://github.com/dddzg/up-detr/raw/master/.github/UP-DETR.png)

[CVPR2021 Oral] UP-DETR: Unsupervised Pre-training for Object Detection with Transformers

UP-DETR: Unsupervised Pre-training for Object Detection with Transformers This is the official PyTorch implementation and models for UP-DETR paper: @a

Consistency Regularization for Adversarial Robustness

Consistency Regularization for Adversarial Robustness Official PyTorch implementation of Consistency Regularization for Adversarial Robustness by Jiho

Open Sound Strip, Sequence or Record in Audacity

Audacity Tools For Blender Sound editing in Blender Video Sequence Editor with Audacity integrated. Send/receive the full edited sequence or single st

Implementation of Barlow Twins paper

barlowtwins PyTorch Implementation of Barlow Twins paper: Barlow Twins: Self-Supervised Learning via Redundancy Reduction This is currently a work in

A complete end-to-end demonstration in which we collect training data in Unity and use that data to train a deep neural network to predict the pose of a cube. This model is then deployed in a simulated robotic pick-and-place task.

Object Pose Estimation Demo This tutorial will go through the steps necessary to perform pose estimation with a UR3 robotic arm in Unity. You’ll gain

PArallel Distributed Deep LEarning: Machine Learning Framework from Industrial Practice (『飞桨』核心框架,深度学习&机器学习高性能单机、分布式训练和跨平台部署)

English | 简体中文 Welcome to the PaddlePaddle GitHub. PaddlePaddle, as the only independent R&D deep learning platform in China, has been officially open

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

A very simple framework for state-of-the-art Natural Language Processing (NLP)

A very simple framework for state-of-the-art NLP. Developed by Humboldt University of Berlin and friends. Flair is: A powerful NLP library. Flair allo

A Neural Net Training Interface on TensorFlow, with focus on speed + flexibility

Tensorpack is a neural network training interface based on TensorFlow. Features: It's Yet Another TF high-level API, with speed, and flexibility built

Simple tools for logging and visualizing, loading and training

TNT TNT is a library providing powerful dataloading, logging and visualization utilities for Python. It is closely integrated with PyTorch and is desi

Sequence learning toolkit for Python

seqlearn seqlearn is a sequence classification toolkit for Python. It is designed to extend scikit-learn and offer as similar as possible an API. Comp

Implementation of COCO-LM, Correcting and Contrasting Text Sequences for Language Model Pretraining, in Pytorch

COCO LM Pretraining (wip) Implementation of COCO-LM, Correcting and Contrasting Text Sequences for Language Model Pretraining, in Pytorch. They were a

网络协议2天集训

网络协议2天集训 抓包工具安装 Wireshark wireshark下载地址 Tcpdump CentOS yum install tcpdump -y Ubuntu apt-get install tcpdump -y k8s抓包测试环境 查看虚拟网卡veth pair 查看

Dense Contrastive Learning (DenseCL) for self-supervised representation learning, CVPR 2021.

Dense Contrastive Learning for Self-Supervised Visual Pre-Training This project hosts the code for implementing the DenseCL algorithm for se

Ultra-Data-Efficient GAN Training: Drawing A Lottery Ticket First, Then Training It Toughly

Ultra-Data-Efficient GAN Training: Drawing A Lottery Ticket First, Then Training It Toughly Code for this paper Ultra-Data-Efficient GAN Tra

The open-source core of Pinry, a tiling image board system for people who want to save, tag, and share images, videos and webpages in an easy to skim through format.

The open-source core of Pinry, a tiling image board system for people who want to save, tag, and share images, videos and webpages in an easy to skim

A :baby: buddy to help caregivers track sleep, feedings, diaper changes, and tummy time to learn about and predict baby's needs without (as much) guess work.

Baby Buddy A buddy for babies! Helps caregivers track sleep, feedings, diaper changes, tummy time and more to learn about and predict baby's needs wit

🗃 Open source self-hosted web archiving. Takes URLs/browser history/bookmarks/Pocket/Pinboard/etc., saves HTML, JS, PDFs, media, and more...

ArchiveBox Open-source self-hosted web archiving. ▶️ Quickstart | Demo | Github | Documentation | Info & Motivation | Community | Roadmap "Your own pe

:mag: Ambar: Document Search Engine

🔍 Ambar: Document Search Engine Ambar is an open-source document search engine with automated crawling, OCR, tagging and instant full-text search. Am

Supysonic is a Python implementation of the Subsonic server API.

Supysonic Supysonic is a Python implementation of the Subsonic server API. Current supported features are: browsing (by folders or tags) streaming of