317 Repositories

Python patient-knowledge-distillation Libraries

The Codebase for Causal Distillation for Language Models.

Causal Distillation for Language Models Zhengxuan Wu*,Atticus Geiger*, Josh Rozner, Elisa Kreiss, Hanson Lu, Thomas Icard, Christopher Potts, Noah D.

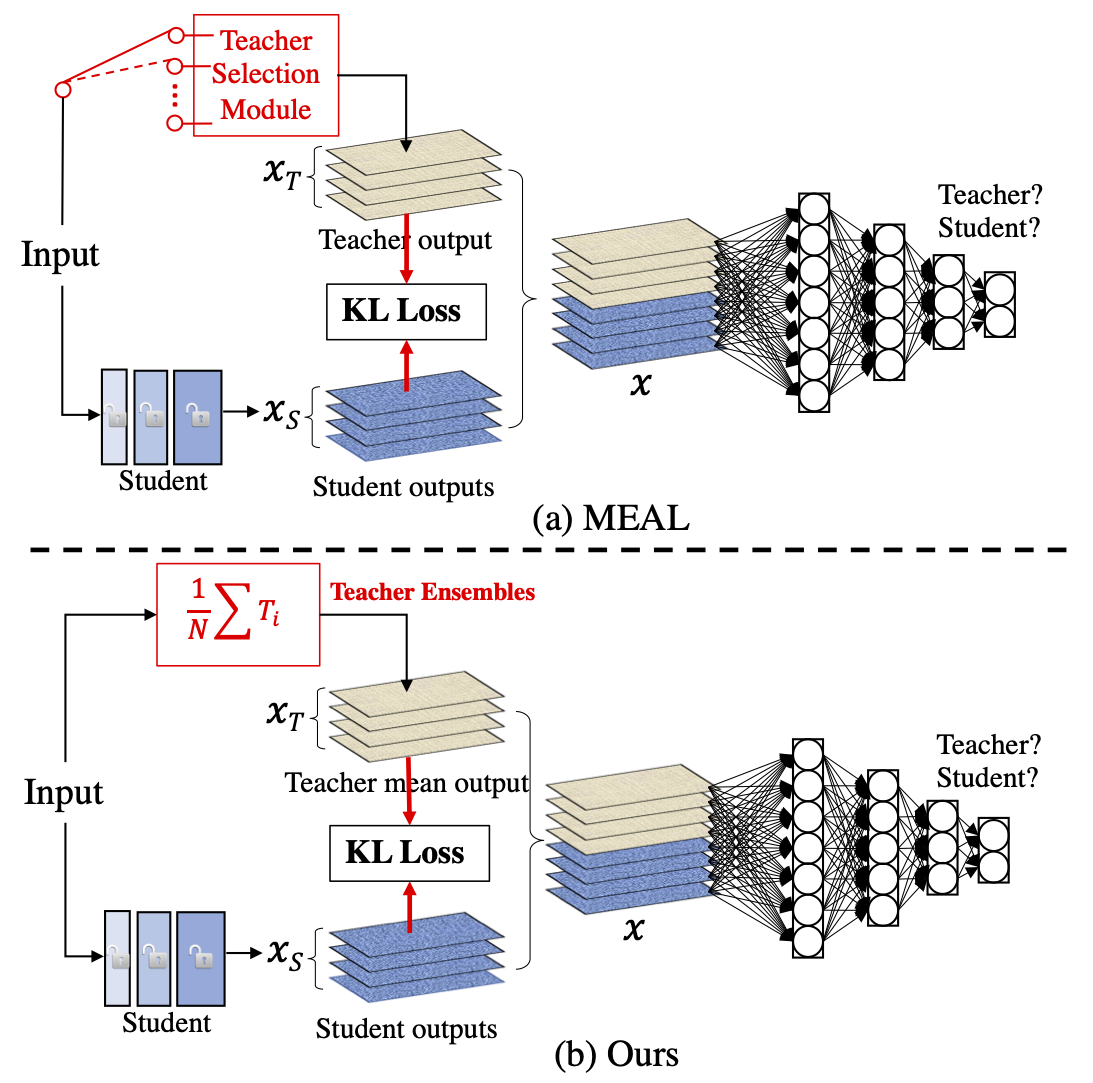

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

Open-CyKG: An Open Cyber Threat Intelligence Knowledge Graph

Open-CyKG: An Open Cyber Threat Intelligence Knowledge Graph Model Description Open-CyKG is a framework that is constructed using an attenti

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Code for ACL 2019 Paper: "COMET: Commonsense Transformers for Automatic Knowledge Graph Construction"

To run a generation experiment (either conceptnet or atomic), follow these instructions: First Steps First clone, the repo: git clone https://github.c

Sorce code and datasets for "K-BERT: Enabling Language Representation with Knowledge Graph",

K-BERT Sorce code and datasets for "K-BERT: Enabling Language Representation with Knowledge Graph", which is implemented based on the UER framework. R

LUKE -- Language Understanding with Knowledge-based Embeddings

LUKE (Language Understanding with Knowledge-based Embeddings) is a new pre-trained contextualized representation of words and entities based on transf

Source code for TACL paper "KEPLER: A Unified Model for Knowledge Embedding and Pre-trained Language Representation".

KEPLER: A Unified Model for Knowledge Embedding and Pre-trained Language Representation Source code for TACL 2021 paper KEPLER: A Unified Model for Kn

Fusion-in-Decoder Distilling Knowledge from Reader to Retriever for Question Answering

This repository contains code for: Fusion-in-Decoder models Distilling Knowledge from Reader to Retriever Dependencies Python 3 PyTorch (currently tes

A Fast Knowledge Distillation Framework for Visual Recognition

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

![Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]](https://github.com/Fangyin1994/KCL/raw/main/fig/overview.png)

Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]

Knowledge-enhanced Contrastive Learning (KCL) Molecular Contrastive Learning with Chemical Element Knowledge Graph [ AAAI 2022 ]. We construct a Chemi

PyTorch implementation of paper A Fast Knowledge Distillation Framework for Visual Recognition.

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Code for the paper Open Sesame: Getting Inside BERT's Linguistic Knowledge.

Open Sesame This repository contains the code for the paper Open Sesame: Getting Inside BERT's Linguistic Knowledge. Credits We built the project on t

Code for Editing Factual Knowledge in Language Models

KnowledgeEditor Code for Editing Factual Knowledge in Language Models (https://arxiv.org/abs/2104.08164). @inproceedings{decao2021editing, title={Ed

Code for ACL2021 long paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases

LANKA This is the source code for paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases (ACL 2021, long paper) Referen

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

EasyTransfer is designed to make the development of transfer learning in NLP applications easier.

EasyTransfer is designed to make the development of transfer learning in NLP applications easier. The literature has witnessed the success of applying

[KBS] Aspect-based sentiment analysis via affective knowledge enhanced graph convolutional networks

#Sentic GCN Introduction This repository was used in our paper: Aspect-Based Sentiment Analysis via Affective Knowledge Enhanced Graph Convolutional N

Dataset for the Research2Clinics @ NeurIPS 2021 Paper: What Do You See in this Patient? Behavioral Testing of Clinical NLP Models

Behavioral Testing of Clinical NLP Models This repository contains code for testing the behavior of clinical prediction models based on patient letter

The implementation for "Comprehensive Knowledge Distillation with Causal Intervention".

Comprehensive Knowledge Distillation with Causal Intervention This repository is a PyTorch implementation of "Comprehensive Knowledge Distillation wit

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Official PyTorch implementation of Data-free Knowledge Distillation for Object Detection, WACV 2021.

Introduction This repository is the official PyTorch implementation of Data-free Knowledge Distillation for Object Detection, WACV 2021. Data-free Kno

This repository contains the implementation of the paper: Federated Distillation of Natural Language Understanding with Confident Sinkhorns

Federated Distillation of Natural Language Understanding with Confident Sinkhorns This repository provides an alternative method for ensembled distill

Official Pytorch Implementation of Unsupervised Image Denoising With Frequency Domain Knowledge (BMVC2021 Oral Accepted Paper)

Unsupervised Image Denoising with Frequency Domain Knowledge (BMVC 2021 Oral) : Official Project Page This repository provides the official PyTorch im

Source Code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chinese Question Matching

Description The source code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chin

Official Pytorch Implementation of Unsupervised Image Denoising with Frequency Domain Knowledge

Unsupervised Image Denoising with Frequency Domain Knowledge (BMVC 2021 Oral) : Official Project Page This repository provides the official PyTorch im

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER (EMNLP 2021).

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER. @inproceedings{tedes

WSDM‘2022: Knowledge Enhanced Sports Game Summarization

Knowledge Enhanced Sports Game Summarization Cooming Soon! :) Data will be released after approval process. Code will be published once the author of

Pytorch implementation for Patient Knowledge Distillation for BERT Model Compression

Patient Knowledge Distillation for BERT Model Compression Knowledge distillation for BERT model Installation Run command below to install the environm

PatientDB is a flask app to store patient information.

PatientDB PatientDB on Heroku "PatientDB is a simple web app that stores patient information, able to edit the information, and able to query the data

EvDistill: Asynchronous Events to End-task Learning via Bidirectional Reconstruction-guided Cross-modal Knowledge Distillation (CVPR'21)

EvDistill: Asynchronous Events to End-task Learning via Bidirectional Reconstruction-guided Cross-modal Knowledge Distillation (CVPR'21) Citation If y

An experimental project I'm undertaking for the sole purpose of increasing my Python knowledge

5ePy is an experimental project I'm undertaking for the sole purpose of increasing my Python knowledge. #Goals Goal: Create a working, albeit lightwei

Code for DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning

DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning Pytorch Implementation for DisCo: Remedy Self-supervi

Switch spaces for knowledge graph embeddings

SwisE Switch spaces for knowledge graph embeddings. Requirements: python3 pytorch numpy tqdm Reproduce the results To reproduce the reported results,

FasterAI: A library to make smaller and faster models with FastAI.

Fasterai fasterai is a library created to make neural network smaller and faster. It essentially relies on common compression techniques for networks

Focal and Global Knowledge Distillation for Detectors

FGD Paper: Focal and Global Knowledge Distillation for Detectors Install MMDetection and MS COCO2017 Our codes are based on MMDetection. Please follow

The purpose of this project is to share knowledge on how awesome Streamlit is and can be

Awesome Streamlit The fastest way to build Awesome Tools and Apps! Powered by Python! The purpose of this project is to share knowledge on how Awesome

Quantization library for PyTorch. Support low-precision and mixed-precision quantization, with hardware implementation through TVM.

HAWQ: Hessian AWare Quantization HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform

DA2Lite is an automated model compression toolkit for PyTorch.

DA2Lite (Deep Architecture to Lite) is a toolkit to compress and accelerate deep network models. ⭐ Star us on GitHub — it helps!! Frameworks & Librari

Knowledge Distillation Toolbox for Semantic Segmentation

SegDistill: Toolbox for Knowledge Distillation on Semantic Segmentation Networks This repo contains the supported code and configuration files for Seg

NLP and Text Generation Experiments in TensorFlow 2.x / 1.x

Code has been run on Google Colab, thanks Google for providing computational resources Contents Natural Language Processing(自然语言处理) Text Classificati

A quick GUI script to pseudo-anonymize patient videos for use in the GRK

grk_patient_sorter A quick GUI script to pseudo-anonymize patient videos for use in the GRK. Source directory — the highest level folder that will be

Source code release of the paper: Knowledge-Guided Deep Fractal Neural Networks for Human Pose Estimation.

GNet-pose Project Page: http://guanghan.info/projects/guided-fractal/ UPDATE 9/27/2018: Prototxts and model that achieved 93.9Pck on LSP dataset. http

The software associated with a paper accepted at EMNLP 2021 titled "Open Knowledge Graphs Canonicalization using Variational Autoencoders".

Open-KG-canonicalization The software associated with a paper accepted at EMNLP 2021 titled "Open Knowledge Graphs Canonicalization using Variational

🛠️ Tools for Transformers compression using Lightning ⚡

Bert-squeeze is a repository aiming to provide code to reduce the size of Transformer-based models or decrease their latency at inference time.

Implementation of [Time in a Box: Advancing Knowledge Graph Completion with Temporal Scopes].

Time2box Implementation of [Time in a Box: Advancing Knowledge Graph Completion with Temporal Scopes].

Robust and Accurate Object Detection via Self-Knowledge Distillation

Robust and Accurate Object Detection via Self-Knowledge Distillation paper:https://arxiv.org/abs/2111.07239 Environments Python 3.7 Cuda 10.1 Prepare

Solving Zero-Shot Learning in Named Entity Recognition with Common Sense Knowledge

Zero-Shot Learning in Named Entity Recognition with Common Sense Knowledge Associated code for the paper Zero-Shot Learning in Named Entity Recognitio

Extracting knowledge graphs from language models as a diagnostic benchmark of model performance.

Interpreting Language Models Through Knowledge Graph Extraction Idea: How do we interpret what a language model learns at various stages of training?

Code accompanying the paper "Knowledge Base Completion Meets Transfer Learning"

Knowledge Base Completion Meets Transfer Learning This code accompanies the paper Knowledge Base Completion Meets Transfer Learning published at EMNLP

CoReD: Generalizing Fake Media Detection with Continual Representation using Distillation (ACMMM'21 Oral Paper)

CoReD: Generalizing Fake Media Detection with Continual Representation using Distillation (ACMMM'21 Oral Paper) (Accepted for oral presentation at ACM

This is a sport analytics project that combines the knowledge of OOP and Webscraping

This is a sport analytics project that combines the knowledge of Object Oriented Programming (OOP) and Webscraping, the weekly scraping of the English Premier league table is carried out to assess the performance of each club from the beginning of the season to the end.

![[AAAI-2021] Visual Boundary Knowledge Translation for Foreground Segmentation](https://user-images.githubusercontent.com/6896182/141064192-3dad4369-7307-459a-8bb3-5e9d639239af.png)

[AAAI-2021] Visual Boundary Knowledge Translation for Foreground Segmentation

Trans-Net Code for (Visual Boundary Knowledge Translation for Foreground Segmentation, AAAI2021). [https://ojs.aaai.org/index.php/AAAI/article/view/16

Head and Neck Tumour Segmentation and Prediction of Patient Survival Project

Head-and-Neck-Tumour-Segmentation-and-Prediction-of-Patient-Survival Welcome to the Head and Neck Tumour Segmentation and Prediction of Patient Surviv

Entity-Based Knowledge Conflicts in Question Answering.

Entity-Based Knowledge Conflicts in Question Answering Run Instructions | Paper | Citation | License This repository provides the Substitution Framewo

Readings for "A Unified View of Relational Deep Learning for Polypharmacy Side Effect, Combination Therapy, and Drug-Drug Interaction Prediction."

Polypharmacy - DDI - Synergy Survey The Survey Paper This repository accompanies our survey paper A Unified View of Relational Deep Learning for Polyp

Instance-conditional Knowledge Distillation for Object Detection

Instance-conditional Knowledge Distillation for Object Detection This is a MegEngine implementation of the paper "Instance-conditional Knowledge Disti

Knowledgeable Prompt-tuning: Incorporating Knowledge into Prompt Verbalizer for Text Classification

Knowledgeable Prompt-tuning: Incorporating Knowledge into Prompt Verbalizer for Text Classification

The official github repository for Towards Continual Knowledge Learning of Language Models

Towards Continual Knowledge Learning of Language Models This is the official github repository for Towards Continual Knowledge Learning of Language Mo

Code repository for EMNLP 2021 paper 'Adversarial Attacks on Knowledge Graph Embeddings via Instance Attribution Methods'

Adversarial Attacks on Knowledge Graph Embeddings via Instance Attribution Methods This is the code repository to accompany the EMNLP 2021 paper on ad

Generate knowledge graphs with interesting geometries, like lattices

Geometric Graphs Generate knowledge graphs with interesting geometries, like lattices. Works on Python 3.9+ because it uses cool new features. Get out

Arch-Net: Model Distillation for Architecture Agnostic Model Deployment

Arch-Net: Model Distillation for Architecture Agnostic Model Deployment The official implementation of Arch-Net: Model Distillation for Architecture A

Readings for "A Unified View of Relational Deep Learning for Polypharmacy Side Effect, Combination Therapy, and Drug-Drug Interaction Prediction."

Polypharmacy - DDI - Synergy Survey The Survey Paper This repository accompanies our survey paper A Unified View of Relational Deep Learning for Polyp

[NeurIPS 2021] Introspective Distillation for Robust Question Answering

Introspective Distillation (IntroD) This repository is the Pytorch implementation of our paper "Introspective Distillation for Robust Question Answeri

The implementation our EMNLP 2021 paper "Enhanced Language Representation with Label Knowledge for Span Extraction".

LEAR The implementation our EMNLP 2021 paper "Enhanced Language Representation with Label Knowledge for Span Extraction". **The code is in the "master

RMNA: A Neighbor Aggregation-Based Knowledge Graph Representation Learning Model Using Rule Mining

RMNA: A Neighbor Aggregation-Based Knowledge Graph Representation Learning Model Using Rule Mining Our code is based on Learning Attention-based Embed

A treasure chest for visual recognition powered by PaddlePaddle

简体中文 | English PaddleClas 简介 飞桨图像识别套件PaddleClas是飞桨为工业界和学术界所准备的一个图像识别任务的工具集,助力使用者训练出更好的视觉模型和应用落地。 近期更新 2021.11.1 发布PP-ShiTu技术报告,新增饮料识别demo 2021.10.23 发

Data and Code for paper Outlining and Filling: Hierarchical Query Graph Generation for Answering Complex Questions over Knowledge Graph is available for research purposes.

Data and Code for paper Outlining and Filling: Hierarchical Query Graph Generation for Answering Complex Questions over Knowledge Graph is available f

![DiscoNet: Learning Distilled Collaboration Graph for Multi-Agent Perception [NeurIPS 2021]](https://github.com/ai4ce/DiscoNet/raw/main/img.png)

DiscoNet: Learning Distilled Collaboration Graph for Multi-Agent Perception [NeurIPS 2021]

DiscoNet: Learning Distilled Collaboration Graph for Multi-Agent Perception [NeurIPS 2021] Yiming Li, Shunli Ren, Pengxiang Wu, Siheng Chen, Chen Feng

Enhancing Knowledge Tracing via Adversarial Training

Enhancing Knowledge Tracing via Adversarial Training This repository contains source code for the paper "Enhancing Knowledge Tracing via Adversarial T

![[ICCV'21] Learning Conditional Knowledge Distillation for Degraded-Reference Image Quality Assessment](https://user-images.githubusercontent.com/35843017/128686858-534ac4c1-8556-4999-a2cb-695119251e78.jpg)

[ICCV'21] Learning Conditional Knowledge Distillation for Degraded-Reference Image Quality Assessment

CKDN The official implementation of the ICCV2021 paper "Learning Conditional Knowledge Distillation for Degraded-Reference Image Quality Assessment" O

The code of paper ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs. Zhanqiu Zhang, Jie Wang, Jiajun Chen, Shuiwang Ji, Feng Wu. NeurIPS 2021.

ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs This is the code of paper ConE: Cone Embeddings for Multi-Hop Reasoning over Knowl

![[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data](https://github.com/zju-vipa/MosaicKD/raw/main/assets/intro.jpg)

[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data

MosaicKD Code for NeurIPS-21 paper "Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data" 1. Motivation Natural images share common l

Rot-Pro: Modeling Transitivity by Projection in Knowledge Graph Embedding

Rot-Pro : Modeling Transitivity by Projection in Knowledge Graph Embedding This repository contains the source code for the Rot-Pro model, presented a

SMORE: Knowledge Graph Completion and Multi-hop Reasoning in Massive Knowledge Graphs

SMORE: Knowledge Graph Completion and Multi-hop Reasoning in Massive Knowledge Graphs SMORE is a a versatile framework that scales multi-hop query emb

![[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data](https://github.com/zju-vipa/MosaicKD/raw/main/assets/intro.jpg)

[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data

MosaicKD Code for NeurIPS-21 paper "Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data" 1. Motivation Natural images share common l

ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs

ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs This is the code of paper ConE: Cone Embeddings for Multi-Hop Reasoning over Knowl

Probabilistic Entity Representation Model for Reasoning over Knowledge Graphs

Implementation for the paper: Probabilistic Entity Representation Model for Reasoning over Knowledge Graphs, Nurendra Choudhary, Nikhil Rao, Sumeet Ka

Unified Instance and Knowledge Alignment Pretraining for Aspect-based Sentiment Analysis

Unified Instance and Knowledge Alignment Pretraining for Aspect-based Sentiment Analysis Requirements python 3.7 pytorch-gpu 1.7 numpy 1.19.4 pytorch_

Light-weight network, depth estimation, knowledge distillation, real-time depth estimation, auxiliary data.

light-weight-depth-estimation Boosting Light-Weight Depth Estimation Via Knowledge Distillation, https://arxiv.org/abs/2105.06143 Junjie Hu, Chenyou F

IEEE Winter Conference on Applications of Computer Vision 2022 Accepted

SSKT(Accepted WACV2022) Concept map Dataset Image dataset CIFAR10 (torchvision) CIFAR100 (torchvision) STL10 (torchvision) Pascal VOC (torchvision) Im

A novel pipeline framework for multi-hop complex KGQA task. About the paper title: Improving Multi-hop Embedded Knowledge Graph Question Answering by Introducing Relational Chain Reasoning

Rce-KGQA A novel pipeline framework for multi-hop complex KGQA task. This framework mainly contains two modules, answering_filtering_module and relati

Created as part of CS50 AI's coursework. This AI makes use of knowledge entailment to calculate the best probabilities to win Minesweeper.

Minesweeper-AI Created as part of CS50 AI's coursework. This AI makes use of knowledge entailment to calculate the best probabilities to win Minesweep

Adaptive, interpretable wavelets across domains (NeurIPS 2021)

Adaptive wavelets Wavelets which adapt given data (and optionally a pre-trained model). This yields models which are faster, more compressible, and mo

Blog focused on skills enhancement and knowledge sharing. Tech Stack's: Vue.js, Django and Django-Ninja

Blog focused on skills enhancement and knowledge sharing. Tech Stack's: Vue.js, Django and Django-Ninja

This is the code of NeurIPS'21 paper "Towards Enabling Meta-Learning from Target Models".

ST This is the code of NeurIPS 2021 paper "Towards Enabling Meta-Learning from Target Models". If you use any content of this repo for your work, plea

Neural Lexicon Reader: Reduce Pronunciation Errors in End-to-end TTS by Leveraging External Textual Knowledge

Neural Lexicon Reader: Reduce Pronunciation Errors in End-to-end TTS by Leveraging External Textual Knowledge This is an implementation of the paper,

Knowledge Oriented Programming Language

KoPL: 面向知识的推理问答编程语言 安装 | 快速开始 | 文档 KoPL全称 Knowledge oriented Programing Language, 是一个为复杂推理问答而设计的编程语言。我们可以将自然语言问题表示为由基本函数组合而成的KoPL程序,程序运行的结果就是问题的答案。目前,

Code and datasets for the paper "KnowPrompt: Knowledge-aware Prompt-tuning with Synergistic Optimization for Relation Extraction"

KnowPrompt Code and datasets for our paper "KnowPrompt: Knowledge-aware Prompt-tuning with Synergistic Optimization for Relation Extraction" Requireme

Code for Findings at EMNLP 2021 paper: "Learn Continually, Generalize Rapidly: Lifelong Knowledge Accumulation for Few-shot Learning"

Learn Continually, Generalize Rapidly: Lifelong Knowledge Accumulation for Few-shot Learning This repo is for Findings at EMNLP 2021 paper: Learn Cont

Watson Natural Language Understanding and Knowledge Studio

Material de demonstração dos serviços: Watson Natural Language Understanding e Knowledge Studio Visão Geral: https://www.ibm.com/br-pt/cloud/watson-na

Source code for the paper "PLOME: Pre-training with Misspelled Knowledge for Chinese Spelling Correction" in ACL2021

PLOME:Pre-training with Misspelled Knowledge for Chinese Spelling Correction (ACL2021) This repository provides the code and data of the work in ACL20

MutualGuide is a compact object detector specially designed for embedded devices

Introduction MutualGuide is a compact object detector specially designed for embedded devices. Comparing to existing detectors, this repo contains two

Neural Lexicon Reader: Reduce Pronunciation Errors in End-to-end TTS by Leveraging External Textual Knowledge

Neural Lexicon Reader: Reduce Pronunciation Errors in End-to-end TTS by Leveraging External Textual Knowledge This is an implementation of the paper,

This repository contains the code for the paper in EMNLP 2021: "HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression".

HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression This repository contains the code for the paper in EM

PyTorch implementation of "Dataset Knowledge Transfer for Class-Incremental Learning Without Memory" (WACV2022)

Dataset Knowledge Transfer for Class-Incremental Learning Without Memory [Paper] [Slides] Summary Introduction Installation Reproducing results Citati

Temporal Knowledge Graph Reasoning Triggered by Memories

MTDM Temporal Knowledge Graph Reasoning Triggered by Memories To alleviate the time dependence, we propose a memory-triggered decision-making (MTDM) n

Reinforcement Knowledge Graph Reasoning for Explainable Recommendation

Reinforcement Knowledge Graph Reasoning for Explainable Recommendation This repository contains the source code of the SIGIR 2019 paper "Reinforcement

Jointly Learning Explainable Rules for Recommendation with Knowledge Graph

Jointly Learning Explainable Rules for Recommendation with Knowledge Graph