1357 Repositories

Python self-critical-sequence-training Libraries

A Python training and inference implementation of Yolov5 helmet detection in Jetson Xavier nx and Jetson nano

yolov5-helmet-detection-python A Python implementation of Yolov5 to detect head or helmet in the wild in Jetson Xavier nx and Jetson nano. In Jetson X

QAT(quantize aware training) for classification with MQBench

MQBench Quantization Aware Training with PyTorch I am using MQBench(Model Quantization Benchmark)(http://mqbench.tech/) to quantize the model for depl

MQBench Quantization Aware Training with PyTorch

MQBench Quantization Aware Training with PyTorch I am using MQBench(Model Quantization Benchmark)(http://mqbench.tech/) to quantize the model for depl

A Discord Self bot written in python

WitheredBot A Discord Self bot written in python Requirement Python = 3.9 How to Configure git clone https://github.com/a-a-a-aa/WitheredBot.git cd W

Official Code Release for "TIP-Adapter: Training-free clIP-Adapter for Better Vision-Language Modeling"

Official Code Release for "TIP-Adapter: Training-free clIP-Adapter for Better Vision-Language Modeling" Pipeline of Tip-Adapter Tip-Adapter can provid

TaCL: Improving BERT Pre-training with Token-aware Contrastive Learning

TaCL: Improving BERT Pre-training with Token-aware Contrastive Learning Authors: Yixuan Su, Fangyu Liu, Zaiqiao Meng, Lei Shu, Ehsan Shareghi, and Nig

An implementation of Equivariant e2 convolutional kernals into a convolutional self attention network, applied to radio astronomy data.

EquivariantSelfAttention An implementation of Equivariant e2 convolutional kernals into a convolutional self attention network, applied to radio astro

We present a regularized self-labeling approach to improve the generalization and robustness properties of fine-tuning.

Overview This repository provides the implementation for the paper "Improved Regularization and Robustness for Fine-tuning in Neural Networks", which

Official implementation of "Membership Inference Attacks Against Self-supervised Speech Models"

Introduction Official implementation of "Membership Inference Attacks Against Self-supervised Speech Models". In this work, we demonstrate that existi

Self-Supervised Collision Handling via Generative 3D Garment Models for Virtual Try-On

Self-Supervised Collision Handling via Generative 3D Garment Models for Virtual Try-On [Project website] [Dataset] [Video] Abstract We propose a new g

TaCL: Improve BERT Pre-training with Token-aware Contrastive Learning

TaCL: Improve BERT Pre-training with Token-aware Contrastive Learning

Visualize the training curve from the *.csv file (tensorboard format).

Training-Curve-Vis Visualize the training curve from the *.csv file (tensorboard format). Feature Custom labels Curve smoothing Support for multiple c

[ICCV 2021 Oral] Just Ask: Learning to Answer Questions from Millions of Narrated Videos

Just Ask: Learning to Answer Questions from Millions of Narrated Videos Webpage • Demo • Paper This repository provides the code for our paper, includ

[ICCV 2021] Self-supervised Monocular Depth Estimation for All Day Images using Domain Separation

ADDS-DepthNet This is the official implementation of the paper Self-supervised Monocular Depth Estimation for All Day Images using Domain Separation I

This repo holds codes of the ICCV21 paper: Visual Alignment Constraint for Continuous Sign Language Recognition.

VAC_CSLR This repo holds codes of the paper: Visual Alignment Constraint for Continuous Sign Language Recognition.(ICCV 2021) [paper] Prerequisites Th

![[ICCV' 21]](https://github.com/hansen7/OcCo/raw/master/assets/teaser.png)

[ICCV' 21] "Unsupervised Point Cloud Pre-training via Occlusion Completion"

OcCo: Unsupervised Point Cloud Pre-training via Occlusion Completion This repository is the official implementation of paper: "Unsupervised Point Clou

Dataset and Code for the paper "DepthTrack: Unveiling the Power of RGBD Tracking" (ICCV2021), and "Depth-only Object Tracking" (BMVC2021)

DeT and DOT Code and datasets for "DepthTrack: Unveiling the Power of RGBD Tracking" (ICCV2021) "Depth-only Object Tracking" (BMVC2021) @InProceedings

MultiSiam: Self-supervised Multi-instance Siamese Representation Learning for Autonomous Driving

MultiSiam: Self-supervised Multi-instance Siamese Representation Learning for Autonomous Driving Code will be available soon. Motivation Architecture

ICCV2021, Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet

Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet, ICCV 2021 Update: 2021/03/11: update our new results. Now our T2T-ViT-14 w

A customisable game where you have to quickly click on black tiles in order of appearance while avoiding clicking on white squares.

W.I.P-Aim-Memory-Game A customisable game where you have to quickly click on black tiles in order of appearance while avoiding clicking on white squar

Efficient Training of Audio Transformers with Patchout

PaSST: Efficient Training of Audio Transformers with Patchout This is the implementation for Efficient Training of Audio Transformers with Patchout Pa

This is the implementation of the paper LiST: Lite Self-training Makes Efficient Few-shot Learners.

LiST (Lite Self-Training) This is the implementation of the paper LiST: Lite Self-training Makes Efficient Few-shot Learners. LiST is short for Lite S

An open-source Discord Nuker can be used as a self-bot or a regular bot.

How to use Double click avery.exe, and follow the prompts Features Important! Make sure to use [9] (Scrape Info) before using these, or some things ma

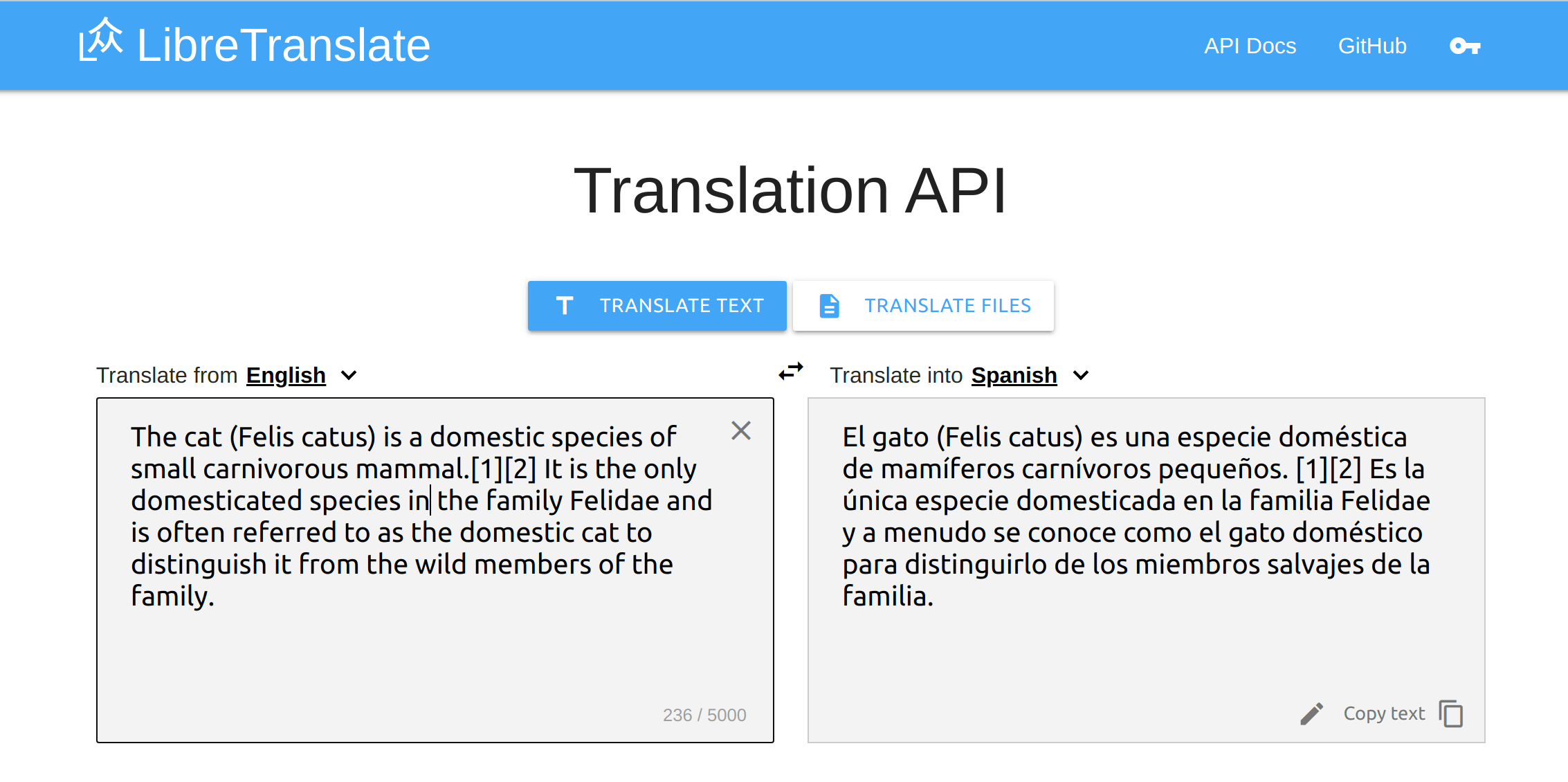

Free and Open Source Machine Translation API. 100% self-hosted, offline capable and easy to setup.

LibreTranslate Try it online! | API Docs | Community Forum Free and Open Source Machine Translation API, entirely self-hosted. Unlike other APIs, it d

Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr\"om Method (NeurIPS 2021)

Skyformer This repository is the official implementation of Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr"om Method (NeurIPS 2021).

Self-supervised Product Quantization for Deep Unsupervised Image Retrieval - ICCV2021

Self-supervised Product Quantization for Deep Unsupervised Image Retrieval Pytorch implementation of SPQ Accepted to ICCV 2021 - paper Young Kyun Jang

[ICML'21] Estimate the accuracy of the classifier in various environments through self-supervision

What Does Rotation Prediction Tell Us about Classifier Accuracy under Varying Testing Environments? [Paper] [ICML'21 Project] PyTorch Implementation T

A Simple Framwork for CV Pre-training Model (SOCO, VirTex, BEiT)

A Simple Framwork for CV Pre-training Model (SOCO, VirTex, BEiT)

Efficient Sharpness-aware Minimization for Improved Training of Neural Networks

Efficient Sharpness-aware Minimization for Improved Training of Neural Networks Code for “Efficient Sharpness-aware Minimization for Improved Training

Code for EMNLP2021 paper "Allocating Large Vocabulary Capacity for Cross-lingual Language Model Pre-training"

VoCapXLM Code for EMNLP2021 paper Allocating Large Vocabulary Capacity for Cross-lingual Language Model Pre-training Environment DockerFile: dancingso

Sequence Modeling with Structured State Spaces

Structured State Spaces for Sequence Modeling This repository provides implementations and experiments for the following papers. S4 Efficiently Modeli

A CROSS-MODAL FUSION NETWORK BASED ON SELF-ATTENTION AND RESIDUAL STRUCTURE FOR MULTIMODAL EMOTION RECOGNITION

CFN-SR A CROSS-MODAL FUSION NETWORK BASED ON SELF-ATTENTION AND RESIDUAL STRUCTURE FOR MULTIMODAL EMOTION RECOGNITION The audio-video based multimodal

The spiritual successor to knockknock for PyTorch Lightning, get notified when your training ends

Who's there? The spiritual successor to knockknock for PyTorch Lightning, to get a notification when your training is complete or when it crashes duri

Sequence Modeling with Structured State Spaces

Structured State Spaces for Sequence Modeling This repository provides implementations and experiments for the following papers. S4 Efficiently Modeli

A modular, research-friendly framework for high-performance and inference of sequence models at many scales

T5X T5X is a modular, composable, research-friendly framework for high-performance, configurable, self-service training, evaluation, and inference of

Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr\"om Method (NeurIPS 2021)

Skyformer This repository is the official implementation of Skyformer: Remodel Self-Attention with Gaussian Kernel and Nystr"om Method (NeurIPS 2021).

Revealing and Protecting Labels in Distributed Training

Revealing and Protecting Labels in Distributed Training

FastCover: A Self-Supervised Learning Framework for Multi-Hop Influence Maximization in Social Networks by Anonymous.

FastCover: A Self-Supervised Learning Framework for Multi-Hop Influence Maximization in Social Networks by Anonymous.

Structure Information is the Key: Self-Attention RoI Feature Extractor in 3D Object Detection

Structure Information is the Key: Self-Attention RoI Feature Extractor in 3D Object Detection abstract:Unlike 2D object detection where all RoI featur

training script for space time memory network

Trainig Script for Space Time Memory Network This codebase implemented training code for Space Time Memory Network with some cyclic features. Requirem

Training Certifiably Robust Neural Networks with Efficient Local Lipschitz Bounds (Local-Lip)

Training Certifiably Robust Neural Networks with Efficient Local Lipschitz Bounds (Local-Lip) Introduction TL;DR: We propose an efficient and trainabl

This codebase facilitates fast experimentation of differentially private training of Hugging Face transformers.

private-transformers This codebase facilitates fast experimentation of differentially private training of Hugging Face transformers. What is this? Why

Implementation for paper "Towards the Generalization of Contrastive Self-Supervised Learning"

Contrastive Self-Supervised Learning on CIFAR-10 Paper "Towards the Generalization of Contrastive Self-Supervised Learning", Weiran Huang, Mingyang Yi

Your self-hosted bookmark archive. Free and open source.

Your self-hosted bookmark archive. Free and open source. Contents About LinkAce Support Setup Contribution About LinkAce LinkAce is a self-hosted arch

Locally Constrained Self-Attentive Sequential Recommendation

LOCKER This is the pytorch implementation of this paper: Locally Constrained Self-Attentive Sequential Recommendation. Zhankui He, Handong Zhao, Zhe L

Enhancing Knowledge Tracing via Adversarial Training

Enhancing Knowledge Tracing via Adversarial Training This repository contains source code for the paper "Enhancing Knowledge Tracing via Adversarial T

SEC'21: Sparse Bitmap Compression for Memory-Efficient Training onthe Edge

Training Deep Learning Models on The Edge Training on the Edge enables continuous learning from new data for deployed neural networks on memory-constr

Generic image compressor for machine learning. Pytorch code for our paper "Lossy compression for lossless prediction".

Lossy Compression for Lossless Prediction Using: Training: This repostiory contains our implementation of the paper: Lossy Compression for Lossless Pr

Implementation of average- and worst-case robust flatness measures for adversarial training.

Relating Adversarially Robust Generalization to Flat Minima This repository contains code corresponding to the MLSys'21 paper: D. Stutz, M. Hein, B. S

A complete end-to-end machine learning portal that covers processes starting from model training to the model predicting results using FastAPI.

Machine Learning Portal Goal Application Workflow Process Design Live Project Goal A complete end-to-end machine learning portal that covers processes

Chinese NER(Named Entity Recognition) using BERT(Softmax, CRF, Span)

Chinese NER(Named Entity Recognition) using BERT(Softmax, CRF, Span)

[NeurIPS 2021] Official implementation of paper "Learning to Simulate Self-driven Particles System with Coordinated Policy Optimization".

Code for Coordinated Policy Optimization Webpage | Code | Paper | Talk (English) | Talk (Chinese) Hi there! This is the source code of the paper “Lear

Open source single image super-resolution toolbox containing various functionality for training a diverse number of state-of-the-art super-resolution models. Also acts as the companion code for the IEEE signal processing letters paper titled 'Improving Super-Resolution Performance using Meta-Attention Layers’.

Deep-FIR Codebase - Super Resolution Meta Attention Networks About This repository contains the main coding framework accompanying our work on meta-at

The official repository for "Intermediate Layers Matter in Momentum Contrastive Self Supervised Learning" paper.

Intermdiate layer matters - SSL The official repository for "Intermediate Layers Matter in Momentum Contrastive Self Supervised Learning" paper. Downl

Paper Code:A Self-adaptive Weighted Differential Evolution Approach for Large-scale Feature Selection

1. SaWDE.m is the main function 2. DataPartition.m is used to randomly partition the original data into training sets and test sets with a ratio of 7

Implementation for On Provable Benefits of Depth in Training Graph Convolutional Networks

Implementation for On Provable Benefits of Depth in Training Graph Convolutional Networks Setup This implementation is based on PyTorch = 1.0.0. Smal

[NeurIPS 2021] The PyTorch implementation of paper "Self-Supervised Learning Disentangled Group Representation as Feature"

IP-IRM [NeurIPS 2021] The PyTorch implementation of paper "Self-Supervised Learning Disentangled Group Representation as Feature". Codes will be relea

Colossal-AI: A Unified Deep Learning System for Large-Scale Parallel Training

ColossalAI An integrated large-scale model training system with efficient parallelization techniques. arXiv: Colossal-AI: A Unified Deep Learning Syst

Self Driving Car Prototype

Package Delivery Rover 🚀 This project is a prototype of Self Driving Car. It's based on embedded systems, to meet the current requirement of delivery

Data, model training, and evaluation code for "PubTables-1M: Towards a universal dataset and metrics for training and evaluating table extraction models".

PubTables-1M This repository contains training and evaluation code for the paper "PubTables-1M: Towards a universal dataset and metrics for training a

Predicting lncRNA–protein interactions based on graph autoencoders and collaborative training

Predicting lncRNA–protein interactions based on graph autoencoders and collaborative training Code for our paper "Predicting lncRNA–protein interactio

Colossal-AI: A Unified Deep Learning System for Large-Scale Parallel Training

ColossalAI An integrated large-scale model training system with efficient parallelization techniques Installation PyPI pip install colossalai Install

AugMax: Adversarial Composition of Random Augmentations for Robust Training

[NeurIPS'21] "AugMax: Adversarial Composition of Random Augmentations for Robust Training" by Haotao Wang, Chaowei Xiao, Jean Kossaifi, Zhiding Yu, Animashree Anandkumar, and Zhangyang Wang.

Exponential Graph is Provably Efficient for Decentralized Deep Training

Exponential Graph is Provably Efficient for Decentralized Deep Training This code repository is for the paper Exponential Graph is Provably Efficient

![[NeurIPS'21]](https://github.com/VITA-Group/AugMax/raw/main/images/AugMax.PNG)

[NeurIPS'21] "AugMax: Adversarial Composition of Random Augmentations for Robust Training" by Haotao Wang, Chaowei Xiao, Jean Kossaifi, Zhiding Yu, Animashree Anandkumar, and Zhangyang Wang.

AugMax: Adversarial Composition of Random Augmentations for Robust Training Haotao Wang, Chaowei Xiao, Jean Kossaifi, Zhiding Yu, Anima Anandkumar, an

Official implementation of NeurIPS 2021 paper "Contextual Similarity Aggregation with Self-attention for Visual Re-ranking"

CSA: Contextual Similarity Aggregation with Self-attention for Visual Re-ranking PyTorch training code for CSA (Contextual Similarity Aggregation). We

Improving Transferability of Representations via Augmentation-Aware Self-Supervision

Improving Transferability of Representations via Augmentation-Aware Self-Supervision Accepted to NeurIPS 2021 TL;DR: Learning augmentation-aware infor

In this project I played with mlflow, streamlit and fastapi to create a training and prediction app on digits

Fastapi + MLflow + streamlit Setup env. I hope I covered all. pip install -r requirements.txt Start app Go in the root dir and run these Streamlit str

PyTorch implementation of "A Simple Baseline for Low-Budget Active Learning".

A Simple Baseline for Low-Budget Active Learning This repository is the implementation of A Simple Baseline for Low-Budget Active Learning. In this pa

Asterisk is a framework to generate high-quality training datasets at scale

Asterisk is a framework to generate high-quality training datasets at scale

A PyTorch Implementation of Gated Graph Sequence Neural Networks (GGNN)

A PyTorch Implementation of GGNN This is a PyTorch implementation of the Gated Graph Sequence Neural Networks (GGNN) as described in the paper Gated G

![[NeurIPS 2021] Better Safe Than Sorry: Preventing Delusive Adversaries with Adversarial Training](https://github.com/TLMichael/Delusive-Adversary/raw/main/fig/Thumbnail.png)

[NeurIPS 2021] Better Safe Than Sorry: Preventing Delusive Adversaries with Adversarial Training

Better Safe Than Sorry: Preventing Delusive Adversaries with Adversarial Training Code for NeurIPS 2021 paper "Better Safe Than Sorry: Preventing Delu

code for generating data set ES-ImageNet with corresponding training code

es-imagenet-master code for generating data set ES-ImageNet with corresponding training code dataset generator some codes of ODG algorithm The variabl

A Context-aware Visual Attention-based training pipeline for Object Detection from a Webpage screenshot!

CoVA: Context-aware Visual Attention for Webpage Information Extraction Abstract Webpage information extraction (WIE) is an important step to create k

Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

CoProtector Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

Project repo for the paper SILT: Self-supervised Lighting Transfer Using Implicit Image Decomposition

SILT: Self-supervised Lighting Transfer Using Implicit Image Decomposition (BMVC 2021) Project repo for the paper SILT: Self-supervised Lighting Trans

IEEE Winter Conference on Applications of Computer Vision 2022 Accepted

SSKT(Accepted WACV2022) Concept map Dataset Image dataset CIFAR10 (torchvision) CIFAR100 (torchvision) STL10 (torchvision) Pascal VOC (torchvision) Im

Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

CoProtector Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

This is the official source code for SLATE. We provide the code for the model, the training code, and a dataset loader for the 3D Shapes dataset. This code is implemented in Pytorch.

SLATE This is the official source code for SLATE. We provide the code for the model, the training code and a dataset loader for the 3D Shapes dataset.

Code for Generating Disentangled Arguments with Prompts: A Simple Event Extraction Framework that Works

GDAP Code for Generating Disentangled Arguments with Prompts: A Simple Event Extraction Framework that Works Environment Python (verified: v3.8) CUDA

PyTorch Implementation of ByteDance's Cross-speaker Emotion Transfer Based on Speaker Condition Layer Normalization and Semi-Supervised Training in Text-To-Speech

Cross-Speaker-Emotion-Transfer - PyTorch Implementation PyTorch Implementation of ByteDance's Cross-speaker Emotion Transfer Based on Speaker Conditio

PyTorch Code for NeurIPS 2021 paper Anti-Backdoor Learning: Training Clean Models on Poisoned Data.

Anti-Backdoor Learning PyTorch Code for NeurIPS 2021 paper Anti-Backdoor Learning: Training Clean Models on Poisoned Data. Check the unlearning effect

wger Workout Manager is a free, open source web application that helps you manage your personal workouts, weight and diet plans and can also be used as a simple gym management utility.

wger (ˈvɛɡɐ) Workout Manager is a free, open source web application that helps you manage your personal workouts, weight and diet plans and can also be used as a simple gym management utility.

Zero-dependency Cryptography Python Module with a self made method

TesohhCrypt TesohhCrypt is a zero-dependency Cryptography Python Module, with a method that i made. (likely someone already made a similar one, but i

Efficient Training of Visual Transformers with Small Datasets

Official codes for "Efficient Training of Visual Transformers with Small Datasets", NerIPS 2021.

Convolutional Recurrent Neural Network (CRNN) for image-based sequence recognition.

Convolutional Recurrent Neural Network This software implements the Convolutional Recurrent Neural Network (CRNN), a combination of CNN, RNN and CTC l

Training Very Deep Neural Networks Without Skip-Connections

DiracNets v2 update (January 2018): The code was updated for DiracNets-v2 in which we removed NCReLU by adding per-channel a and b multipliers without

Training RNNs as Fast as CNNs

News SRU++, a new SRU variant, is released. [tech report] [blog] The experimental code and SRU++ implementation are available on the dev branch which

PyTorch Implementation of Unsupervised Depth Completion with Calibrated Backprojection Layers (ORAL, ICCV 2021)

Unsupervised Depth Completion with Calibrated Backprojection Layers PyTorch implementation of Unsupervised Depth Completion with Calibrated Backprojec

Self-supervised learning on Graph Representation Learning (node-level task)

graph_SSL Self-supervised learning on Graph Representation Learning (node-level task) How to run the code To run GRACE, sh run_GRACE.sh To run GCA, sh

Official implementation of NeurIPS 2021 paper "Contextual Similarity Aggregation with Self-attention for Visual Re-ranking"

CSA: Contextual Similarity Aggregation with Self-attention for Visual Re-ranking PyTorch training code for CSA (Contextual Similarity Aggregation). We

2021 CCF BDCI 全国信息检索挑战杯(CCIR-Cup)智能人机交互自然语言理解赛道第二名参赛解决方案

2021 CCF BDCI 全国信息检索挑战杯(CCIR-Cup) 智能人机交互自然语言理解赛道第二名解决方案 比赛网址: CCIR-Cup-智能人机交互自然语言理解 1.依赖环境: python==3.8 torch==1.7.1+cu110 numpy==1.19.2 transformers=

This repository is the official implementation of Unleashing the Power of Contrastive Self-Supervised Visual Models via Contrast-Regularized Fine-Tuning (NeurIPS21).

Core-tuning This repository is the official implementation of ``Unleashing the Power of Contrastive Self-Supervised Visual Models via Contrast-Regular

PyTorch Implementation of Unsupervised Depth Completion with Calibrated Backprojection Layers (ORAL, ICCV 2021)

PyTorch Implementation of Unsupervised Depth Completion with Calibrated Backprojection Layers (ORAL, ICCV 2021)

CVNets: A library for training computer vision networks

CVNets: A library for training computer vision networks This repository contains the source code for training computer vision models. Specifically, it

This repo is for Self-Supervised Monocular Depth Estimation with Internal Feature Fusion(arXiv), BMVC2021

DIFFNet This repo is for Self-Supervised Monocular Depth Estimation with Internal Feature Fusion(arXiv), BMVC2021 A new backbone for self-supervised d

Codebase for the self-supervised goal reaching benchmark introduced in the LEXA paper

LEXA Benchmark Codebase for the self-supervised goal reaching benchmark introduced in the LEXA paper (Discovering and Achieving Goals via World Models

![[NeurIPS 2021 Spotlight] Aligning Pretraining for Detection via Object-Level Contrastive Learning](https://github.com/hologerry/SoCo/raw/main/figures/overview.png)

[NeurIPS 2021 Spotlight] Aligning Pretraining for Detection via Object-Level Contrastive Learning

SoCo [NeurIPS 2021 Spotlight] Aligning Pretraining for Detection via Object-Level Contrastive Learning By Fangyun Wei*, Yue Gao*, Zhirong Wu, Han Hu,

This repository includes the code of the sequence-to-sequence model for discontinuous constituent parsing described in paper Discontinuous Grammar as a Foreign Language.

Discontinuous Grammar as a Foreign Language This repository includes the code of the sequence-to-sequence model for discontinuous constituent parsing

Simple Discord bot for snekbox (sandboxed Python code execution), self-host or use a global instance

snakeboxed Simple Discord bot for snekbox (sandboxed Python code execution), self-host or use a global instance