1357 Repositories

Python self-critical-sequence-training Libraries

Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking."

Expert-Linking Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking." This is

Code for ICDM2020 full paper: "Sub-graph Contrast for Scalable Self-Supervised Graph Representation Learning"

Subg-Con Sub-graph Contrast for Scalable Self-Supervised Graph Representation Learning (Jiao et al., ICDM 2020): https://arxiv.org/abs/2009.10273 Over

Graph InfoClust: Leveraging cluster-level node information for unsupervised graph representation learning

Graph-InfoClust-GIC [PAKDD 2021] PAKDD'21 version Graph InfoClust: Maximizing Coarse-Grain Mutual Information in Graphs Preprint version Graph InfoClu

Pytorch implementation of SELF-ATTENTIVE VAD, ICASSP 2021

SELF-ATTENTIVE VAD: CONTEXT-AWARE DETECTION OF VOICE FROM NOISE (ICASSP 2021) Pytorch implementation of SELF-ATTENTIVE VAD | Paper | Dataset Yong Rae

Unofficial implementation of Google "CutPaste: Self-Supervised Learning for Anomaly Detection and Localization" in PyTorch

CutPaste CutPaste: image from paper Unofficial implementation of Google's "CutPaste: Self-Supervised Learning for Anomaly Detection and Localization"

Reproduce results and replicate training fo T0 (Multitask Prompted Training Enables Zero-Shot Task Generalization)

T-Zero This repository serves primarily as codebase and instructions for training, evaluation and inference of T0. T0 is the model developed in Multit

Align and Prompt: Video-and-Language Pre-training with Entity Prompts

ALPRO Align and Prompt: Video-and-Language Pre-training with Entity Prompts [Paper] Dongxu Li, Junnan Li, Hongdong Li, Juan Carlos Niebles, Steven C.H

CVE-2021-43936 is a critical vulnerability (CVSS3 10.0) leading to Remote Code Execution (RCE) in WebHMI Firmware.

CVE-2021-43936 CVE-2021-43936 is a critical vulnerability (CVSS3 10.0) leading to Remote Code Execution (RCE) in WebHMI Firmware. This vulnerability w

In this project, we develop a face recognize platform based on MTCNN object-detection netcwork and FaceNet self-supervised network.

模式识别大作业——人脸检测与识别平台 本项目是一个简易的人脸检测识别平台,提供了人脸信息录入和人脸识别的功能。前端采用 html+css+js,后端采用 pytorch,

Bidirectionally transformed strings

bistring The bistring library provides non-destructive versions of common string processing operations like normalization, case folding, and find/repl

Code for "SUGAR: Subgraph Neural Network with Reinforcement Pooling and Self-Supervised Mutual Information Mechanism"

SUGAR Code for "SUGAR: Subgraph Neural Network with Reinforcement Pooling and Self-Supervised Mutual Information Mechanism" Overview train.py: the cor

Implementation of paper "Self-supervised Learning on Graphs:Deep Insights and New Directions"

SelfTask-GNN A PyTorch implementation of "Self-supervised Learning on Graphs: Deep Insights and New Directions". [paper] In this paper, we first deepe

Pre-Training Graph Neural Networks for Cold-Start Users and Items Representation.

Pretrain-Recsys This is our Tensorflow implementation for our WSDM 2021 paper: Bowen Hao, Jing Zhang, Hongzhi Yin, Cuiping Li, Hong Chen. Pre-Training

PyTorch code of "SLAPS: Self-Supervision Improves Structure Learning for Graph Neural Networks"

SLAPS-GNN This repo contains the implementation of the model proposed in SLAPS: Self-Supervision Improves Structure Learning for Graph Neural Networks

Pre-training of Graph Augmented Transformers for Medication Recommendation

G-Bert Pre-training of Graph Augmented Transformers for Medication Recommendation Intro G-Bert combined the power of Graph Neural Networks and BERT (B

Code for KDD'20 "Generative Pre-Training of Graph Neural Networks"

GPT-GNN: Generative Pre-Training of Graph Neural Networks GPT-GNN is a pre-training framework to initialize GNNs by generative pre-training. It can be

Official PyTorch Implementation of "Self-supervised Auxiliary Learning with Meta-paths for Heterogeneous Graphs". NeurIPS 2020.

Self-supervised Auxiliary Learning with Meta-paths for Heterogeneous Graphs This repository is the implementation of SELAR. Dasol Hwang* , Jinyoung Pa

Reference Code for AAAI-20 paper "Multi-Stage Self-Supervised Learning for Graph Convolutional Networks on Graphs with Few Labels"

Reference Code for AAAI-20 paper "Multi-Stage Self-Supervised Learning for Graph Convolutional Networks on Graphs with Few Labels" Please refer to htt

code for "Self-supervised edge features for improved Graph Neural Network training", arxivlink

Self-supervised edge features for improved Graph Neural Network training Data availability: Here is a link to the raw data for the organoids dataset.

![[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages](https://github.com/michiyasunaga/DrRepair/raw/master/DrRepair-overview.png)

[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages

DrRepair: Learning to Repair Programs from Error Messages This repo provides the source code & data of our paper: Graph-based, Self-Supervised Program

A Self-Supervised Contrastive Learning Framework for Aspect Detection

AspDecSSCL A Self-Supervised Contrastive Learning Framework for Aspect Detection This repository is a pytorch implementation for the following AAAI'21

Self-Guided Contrastive Learning for BERT Sentence Representations

Self-Guided Contrastive Learning for BERT Sentence Representations This repository is dedicated for releasing the implementation of the models utilize

Autoregressive Predictive Coding: An unsupervised autoregressive model for speech representation learning

Autoregressive Predictive Coding This repository contains the official implementation (in PyTorch) of Autoregressive Predictive Coding (APC) proposed

Self-labelling via simultaneous clustering and representation learning. (ICLR 2020)

Self-labelling via simultaneous clustering and representation learning 🆗 🆗 🎉 NEW models (20th August 2020): Added standard SeLa pretrained torchvis

Self-supervised Label Augmentation via Input Transformations (ICML 2020)

Self-supervised Label Augmentation via Input Transformations Authors: Hankook Lee, Sung Ju Hwang, Jinwoo Shin (KAIST) Accepted to ICML 2020 Install de

Code to generate datasets used in "How Useful is Self-Supervised Pretraining for Visual Tasks?"

Synthetic dataset rendering Framework for producing the synthetic datasets used in: How Useful is Self-Supervised Pretraining for Visual Tasks? Alejan

Official code for the paper "Self-Supervised Prototypical Transfer Learning for Few-Shot Classification"

Self-Supervised Prototypical Transfer Learning for Few-Shot Classification This repository contains the reference source code and pre-trained models (

![SCAN: Learning to Classify Images without Labels, incl. SimCLR. [ECCV 2020]](https://github.com/wvangansbeke/Unsupervised-Classification/raw/master/images/teaser.jpg)

SCAN: Learning to Classify Images without Labels, incl. SimCLR. [ECCV 2020]

Learning to Classify Images without Labels This repo contains the Pytorch implementation of our paper: SCAN: Learning to Classify Images without Label

Official PyTorch implementation of the paper "Self-Supervised Relational Reasoning for Representation Learning", NeurIPS 2020 Spotlight.

Official PyTorch implementation of the paper: "Self-Supervised Relational Reasoning for Representation Learning" (2020), Patacchiola, M., and Storkey,

Code and training data for our ECCV 2016 paper on Unsupervised Learning

Shuffle and Learn (Shuffle Tuple) Created by Ishan Misra Based on the ECCV 2016 Paper - "Shuffle and Learn: Unsupervised Learning using Temporal Order

Joint-task Self-supervised Learning for Temporal Correspondence (NeurIPS 2019)

Joint-task Self-supervised Learning for Temporal Correspondence Project | Paper Overview Joint-task Self-supervised Learning for Temporal Corresponden

Official implementation of ACMMM'20 paper 'Self-supervised Video Representation Learning Using Inter-intra Contrastive Framework'

Self-supervised Video Representation Learning Using Inter-intra Contrastive Framework Official code for paper, Self-supervised Video Representation Le

Implementation of our paper "Video Playback Rate Perception for Self-supervised Spatio-Temporal Representation Learning".

PRP Introduction This is the implementation of our paper "Video Playback Rate Perception for Self-supervised Spatio-Temporal Representation Learning".

code for our ECCV-2020 paper: Self-supervised Video Representation Learning by Pace Prediction

Video_Pace This repository contains the code for the following paper: Jiangliu Wang, Jianbo Jiao and Yunhui Liu, "Self-Supervised Video Representation

Video Representation Learning by Recognizing Temporal Transformations. In ECCV, 2020.

Video Representation Learning by Recognizing Temporal Transformations [Project Page] Simon Jenni, Givi Meishvili, and Paolo Favaro. In ECCV, 2020. Thi

![[NeurIPS'20] Self-supervised Co-Training for Video Representation Learning. Tengda Han, Weidi Xie, Andrew Zisserman.](https://github.com/TengdaHan/CoCLR/raw/main/asset/teaser.png)

[NeurIPS'20] Self-supervised Co-Training for Video Representation Learning. Tengda Han, Weidi Xie, Andrew Zisserman.

CoCLR: Self-supervised Co-Training for Video Representation Learning This repository contains the implementation of: InfoNCE (MoCo on videos) UberNCE

Code for Paper: Self-supervised Learning of Motion Capture

Self-supervised Learning of Motion Capture This is code for the paper: Hsiao-Yu Fish Tung, Hsiao-Wei Tung, Ersin Yumer, Katerina Fragkiadaki, Self-sup

An unsupervised learning framework for depth and ego-motion estimation from monocular videos

SfMLearner This codebase implements the system described in the paper: Unsupervised Learning of Depth and Ego-Motion from Video Tinghui Zhou, Matthew

Code for the paper: Audio-Visual Scene Analysis with Self-Supervised Multisensory Features

[Paper] [Project page] This repository contains code for the paper: Andrew Owens, Alexei A. Efros. Audio-Visual Scene Analysis with Self-Supervised Mu

Codebase for ECCV18 "The Sound of Pixels"

Sound-of-Pixels Codebase for ECCV18 "The Sound of Pixels". *This repository is under construction, but the core parts are already there. Environment T

PyTorch code for training MM-DistillNet for multimodal knowledge distillation

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge MM-DistillNet is a

Code for the paper: Fighting Fake News: Image Splice Detection via Learned Self-Consistency

Fighting Fake News: Image Splice Detection via Learned Self-Consistency [paper] [website] Minyoung Huh *12, Andrew Liu *1, Andrew Owens1, Alexei A. Ef

Multi-task Self-supervised Object Detection via Recycling of Bounding Box Annotations (CVPR, 2019)

Multi-task Self-supervised Object Detection via Recycling of Bounding Box Annotations (CVPR 2019) To make better use of given limited labels, we propo

AdaFocus V2: End-to-End Training of Spatial Dynamic Networks for Video Recognition

AdaFocusV2 This repo contains the official code and pre-trained models for AdaFo

Deep Learning Training Scripts With Python

Deep Learning Training Scripts DNN Frameworks Caffe PyTorch Tensorflow CNN Models VGG ResNet DenseNet Inception Language Modeling GatedCNN-LM Attentio

This program analyzes a DNA sequence and outputs snippets of DNA that are likely to be protein-coding genes.

This program analyzes a DNA sequence and outputs snippets of DNA that are likely to be protein-coding genes.

The code for SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network.

SAG-DTA The code is the implementation for the paper 'SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network'. Requirements py

The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch.

News December 27: v1.1.0 New loss functions: CentroidTripletLoss and VICRegLoss Mean reciprocal rank + per-class accuracies See the release notes Than

Retrieve annotated intron sequences and classify them as minor (U12-type) or major (U2-type)

(intron I nterrogator and C lassifier) intronIC is a program that can be used to classify intron sequences as minor (U12-type) or major (U2-type), usi

Marketplace for self published books

Nile API API for the imaginary Nile marketplace for self published books. This is a project created to try out FastAPI as the post promising ASGI serv

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size.

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size. The hub data layout enables rapid transformations and streaming of data while training models at scale. Hub is used by Google, Waymo, Red Cross, Oxford University, and Omdena.

Modern, privacy-friendly, and detailed web analytics that works without cookies or JS.

Modern, privacy-friendly, and cookie-free web analytics. Getting started » Screenshots • Features • Office Hours Motivation There are a lot of web ana

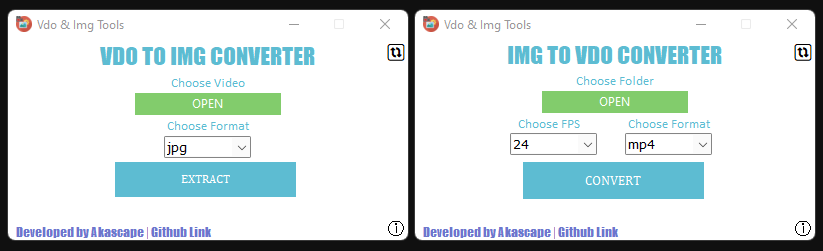

An easy to use GUI based video to image sequence converter (and vice versa).

Vdo & Img Conversion Tools This is a quick conversion tool made with python that can save you a lot of time. With this tool you can extract image sequ

A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory"

memory_efficient_attention.pytorch A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory" (Rabe&Staats'21). def effic

Sequence lineage information extracted from RKI sequence data repo

Pango lineage information for German SARS-CoV-2 sequences This repository contains a join of the metadata and pango lineage tables of all German SARS-

PyTorch Implementation of PIXOR: Real-time 3D Object Detection from Point Clouds

PIXOR: Real-time 3D Object Detection from Point Clouds This is a custom implementation of the paper from Uber ATG using PyTorch 1.0. It represents the

Code to use Augmented Shapiro Wilks Stopping, as well as code for the paper "Statistically Signifigant Stopping of Neural Network Training"

This codebase is being actively maintained, please create and issue if you have issues using it Basics All data files are included under losses and ea

PyTorch implementation of Rethinking Positional Encoding in Language Pre-training

TUPE PyTorch implementation of Rethinking Positional Encoding in Language Pre-training. Quickstart Clone this repository. git clone https://github.com

An self sufficient AI that crawls the web to learn how to generate art from keywords

Roxx-IO - The Smart Artist AI! TO DO / IDEAS Implement Web-Scraping Functionality Figure out a less annoying (and an off button for it) text to speech

Code release for SLIP Self-supervision meets Language-Image Pre-training

SLIP: Self-supervision meets Language-Image Pre-training What you can find in this repo: Pre-trained models (with ViT-Small, Base, Large) and code to

A toolkit to generate MR sequence diagrams

mrsd: a toolkit to generate MR sequence diagrams mrsd is a Python toolkit to generate MR sequence diagrams, as shown below for the basic FLASH sequenc

Self-describing JSON-RPC services made easy

ReflectRPC Self-describing JSON-RPC services made easy Contents What is ReflectRPC? Installation Features Datatypes Custom Datatypes Returning Errors

These are the scripts used for the project of ‘Assembly of a pan-genome for global cattle reveals missing sequence and novel structural variation, providing new insights into their diversity and evolution history’

script-SV-genotyping These are the scripts used for the project of ‘Assembly of a pan-genome for global cattle reveals missing sequence and novel stru

Turn any live video stream or locally stored video into a dataset of interesting samples for ML training, or any other type of analysis.

Sieve Video Data Collection Example Find samples that are interesting within hours of raw video, for free and completely automatically using Sieve API

Self-Adaptable Point Processes with Nonparametric Time Decays

NPPDecay This is our implementation for the paper Self-Adaptable Point Processes with Nonparametric Time Decays, by Zhimeng Pan, Zheng Wang, Jeff M. P

Implementation of our paper "DMT: Dynamic Mutual Training for Semi-Supervised Learning"

DMT: Dynamic Mutual Training for Semi-Supervised Learning This repository contains the code for our paper DMT: Dynamic Mutual Training for Semi-Superv

![[NeurIPS-2020] Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID.](https://github.com/yxgeee/SpCL/raw/master/figs/framework.png)

[NeurIPS-2020] Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID.

Self-paced Contrastive Learning (SpCL) The official repository for Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

Introduction This is a Python package available on PyPI for NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pyto

ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

ONNX Runtime is a cross-platform inference and training machine-learning accelerator. ONNX Runtime inference can enable faster customer experiences an

Python SDK for building, training, and deploying ML models

Overview of Kubeflow Fairing Kubeflow Fairing is a Python package that streamlines the process of building, training, and deploying machine learning (

A Japanese tokenizer based on recurrent neural networks

Nagisa is a python module for Japanese word segmentation/POS-tagging. It is designed to be a simple and easy-to-use tool. This tool has the following

The code for paper "Contrastive Spatio-Temporal Pretext Learning for Self-supervised Video Representation" which is accepted by AAAI 2022

Contrastive Spatio Temporal Pretext Learning for Self-supervised Video Representation (AAAI 2022) The code for paper "Contrastive Spatio-Temporal Pret

Pytorch implementation of SenFormer: Efficient Self-Ensemble Framework for Semantic Segmentation

SenFormer: Efficient Self-Ensemble Framework for Semantic Segmentation Efficient Self-Ensemble Framework for Semantic Segmentation by Walid Bousselham

BARTpho: Pre-trained Sequence-to-Sequence Models for Vietnamese

Table of contents Introduction Using BARTpho with fairseq Using BARTpho with transformers Notes BARTpho: Pre-trained Sequence-to-Sequence Models for V

A simple, personal chat program that runs on a single computer. No Internet, just you.

MultiChat A simple, personal chat program that runs on a single computer. No Internet, just you. Simple and Local MultiChat was created with ease of u

Graph Self-Supervised Learning for Optoelectronic Properties of Organic Semiconductors

SSL_OSC Graph Self-Supervised Learning for Optoelectronic Properties of Organic Semiconductors

Training deep models using anime, illustration images.

animeface deep models for anime images. Datasets anime-face-dataset Anime faces collected from Getchu.com. Based on Mckinsey666's dataset. 63.6K image

learned_optimization: Training and evaluating learned optimizers in JAX

learned_optimization: Training and evaluating learned optimizers in JAX learned_optimization is a research codebase for training learned optimizers. I

A full pipeline AutoML tool for tabular data

HyperGBM Doc | 中文 We Are Hiring! Dear folks,we are offering challenging opportunities located in Beijing for both professionals and students who are k

3D Human Pose Machines with Self-supervised Learning

3D Human Pose Machines with Self-supervised Learning Keze Wang, Liang Lin, Chenhan Jiang, Chen Qian, and Pengxu Wei, “3D Human Pose Machines with Self

Training PyTorch models with differential privacy

Opacus is a library that enables training PyTorch models with differential privacy. It supports training with minimal code changes required on the cli

Infrastructure as Code (IaC) for a self-hosted version of Gnosis Safe on AWS

Welcome to Yearn Gnosis Safe! Setting up your local environment Infrastructure Deploying Gnosis Safe Prerequisites 1. Create infrastructure for secret

Tensorflow Implementation of ECCV'18 paper: Multimodal Human Motion Synthesis

MT-VAE for Multimodal Human Motion Synthesis This is the code for ECCV 2018 paper MT-VAE: Learning Motion Transformations to Generate Multimodal Human

Codes for building and training the neural network model described in Domain-informed neural networks for interaction localization within astroparticle experiments.

Domain-informed Neural Networks Codes for building and training the neural network model described in Domain-informed neural networks for interaction

"Learning and Analyzing Generation Order for Undirected Sequence Models" in Findings of EMNLP, 2021

undirected-generation-dev This repo contains the source code of the models described in the following paper "Learning and Analyzing Generation Order f

On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021))

PTvsBT On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021) Citation Please cite a

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t

Fine-grained Post-training for Improving Retrieval-based Dialogue Systems - NAACL 2021

Fine-grained Post-training for Multi-turn Response Selection Implements the model described in the following paper Fine-grained Post-training for Impr

Training and Evaluation Code for Neural Volumes

Neural Volumes This repository contains training and evaluation code for the paper Neural Volumes. The method learns a 3D volumetric representation of

A simple, 2-person chat program that runs on a single computer. No Internet, just you

localChat A simple, 2-person chat program that runs on a single computer. No Internet, just you. Simple and Local This was created with ease of use in

Snakemake workflow for converting FASTQ files to self-contained CRAM files with maximum lossless compression.

Snakemake workflow: name A Snakemake workflow for description Usage The usage of this workflow is described in the Snakemake Workflow Catalog. If

Ensembling Off-the-shelf Models for GAN Training

Data-Efficient GANs with DiffAugment project | paper | datasets | video | slides Generated using only 100 images of Obama, grumpy cats, pandas, the Br

SeqLike - flexible biological sequence objects in Python

SeqLike - flexible biological sequence objects in Python Introduction A single object API that makes working with biological sequences in Python more

Automate the case review on legal case documents and find the most critical cases using network analysis

Automation on Legal Court Cases Review This project is to automate the case review on legal case documents and find the most critical cases using netw

The official implementation of the Hybrid Self-Attention NEAT algorithm

PUREPLES - Pure Python Library for ES-HyperNEAT About This is a library of evolutionary algorithms with a focus on neuroevolution, implemented in pure

UniSpeech - Large Scale Self-Supervised Learning for Speech

UniSpeech The family of UniSpeech: WavLM (arXiv): WavLM: Large-Scale Self-Supervised Pre-training for Full Stack Speech Processing UniSpeech (ICML 202

Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition

SEW (Squeezed and Efficient Wav2vec) The repo contains the code of the paper "Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speec

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

Nystromformer: A Nystrom-based Algorithm for Approximating Self-Attention

Nystromformer: A Nystrom-based Algorithm for Approximating Self-Attention April 6, 2021 We extended segment-means to compute landmarks without requiri

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite